Abstract

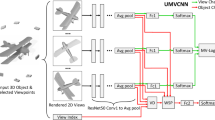

Multi-view observations provide complementary clues for 3D object recognition, but also include redundant information that appears different across views due to view-dependent projection, light reflection and self-occlusions. This paper presents a view-relation constrained global representation network (VCGR-Net) for 3D object recognition that can mitigate the view interference problem at all phases, from view-level source feature generation to multi-view feature aggregation. Specifically, we determine inter-view relations via LSTM implicitly. Based on the relations, we construct a two-stage feature selection module to filter features at each view according to their importance to the global representation and their reliability as observations at specific views. The selected features are then aggregated by referring to intra- and inter-view spatial context to generate global representation for 3D object recognition. Experiments on the ModelNet40 and ModelNet10 datasets demonstrate that the proposed method can suppress view interference and therefore outperform state-of-the-art methods in 3D object recognition.

Similar content being viewed by others

References

Ma C, Guo Y, Yang J, An W (2019) Learning multi-view representation with LSTM for 3D shape recognition and retrieval. IEEE Trans Multimedia 21(5):1169–1182

Chen K, Oldja R, Smolyanskiy N, Birchfield S, Popov A, Wehr D, Eden I, Pehserl J (2020) MVLIdarnet: real-time multi-class scene understanding for autonomous driving using multiple views. In: IEEE international conference on intelligent robots and systems

Su H, Maji S, Kalogerakis E, Learned-Miller E (2015) Multi-view convolutional neural networks for 3D shape recognition. In: International conference on computer vision

Sedaghat N, Zolfaghari M, Amiri E, Brox T (2017) Orientation-boosted voxel nets for 3d object recognition. In: British machine vision conference

Wang C, Cheng M, Sohel F, Bennamoun M, Li J (2018) Normalnet: a voxel-based CNN for 3D object classification and retrieval. Neurocomputing 323:139–147

Qi CR, Su H, Mo K, Guibas LJ (2017) Pointnet: deep learning on point sets for 3D classification and segmentation. In: IEEE conference on computer vision and pattern recognition

Fujiwara K, Hashimoto T (2020) Neural implicit embedding for point cloud analysis. In: IEEE conference on computer vision and pattern recognition

Chen X, Liu L, Zhang L, Zhang H, Meng L, Liu D (2021) Group-pair deep feature learning for multi-view 3D model retrieval. Appl Intell. https://doi.org/10.1007/s10489-021-02471-7

Yu T, Meng J, Yuan J (2018) Multi-view harmonized bilinear network for 3d object recognition. In: IEEE conference on computer vision and pattern recognition

Liang Q, Li Q, Zhang L, Mi H, Nie W, Li X (2021) MHFP: multi-view based hierarchical fusion pooling method for 3D shape recognition. Pattern Recogn Lett 150:214–220

Lee DH, Chen KL, Liou KH, Liu CL, Liu JL (2021) Deep learning and control algorithms of direct perception for autonomous driving. Appl Intell 51(1):237–247

Han Z, Shang M, Liu Z, Vong CM, Liu YS, Zwicker M, Han J, Chen C (2019) Seqviews2seqlabels: learning 3D global features via aggregating sequential views by RNN with attention. IEEE Trans Image Process 28(2):658–672

Ullah A, Muhammad K, Ser JD, Baik SW, Albuquerque V (2019) Activity recognition using temporal optical flow convolutional features and multilayer LSTM. IEEE Trans Ind Electron 66(12):9692–9702

Kazhdan M, Funkhouser T, Rusinkiewicz S (2003) Arotation invariant spherical harmonic representation of 3D shape descriptors. Eurographics Symp Geom Process 6:156–164

Chen DY, Tian XP, Shen YT, Ouhyoung M (2010) On visual similarity based 3d model retrieval. Comput Graph Forum 22(3):223–232

Wu Z, Song S, Khosla A, Yu F, Zhang L, Tang X, Xiao J (2015) 3D ShapeNets: a deep representation for volumetric shapes. In: IEEE conference on computer vision and pattern recognition

Maturana D, Scherer S (2015) Voxnet: a 3D convolutional neural network for real-time object recognition. In: IEEE international conference on intelligent robots and systems

Wang PS, Liu Y, Guo YX, Sun CY, Tong X (2017) O-CNN: octree-based convolutional neural networks for 3D shape analysis. ACM Trans Graph 36(4):72

Le T, Duan Y (2018) Pointgrid: a deep network for 3D shape understanding. In: IEEE conference on computer vision and pattern recognition

Qi CR, Yi L, Su H.Y, Guibas LJ (2017) Pointnet+ +: deep hierarchical feature learning on point sets in a metric space conference and workshop on neural information processing systems

Yan X, Zheng C, Li Z, Wang S, Cui S (2020) Pointasnl: robust point clouds processing using nonlocal neural networks with adaptive sampling. In: IEEE conference on computer vision and pattern recognition

Yu T, Meng J, Yang M, Yuan J (2021) 3D object representation learning: a set-to-set matching perspective. IEEE Trans Image Process 30:2168–217

Feng Y, Zhang Z, Zhao X, Ji R, Gao Y (2018) GVCNN: group-view convolutional neural networks for 3D shape recognition. In: IEEE conference on computer vision and pattern recognition

Yang Z, Wang L (2019) Learning relationships for multi-view 3D object recognition. In: IEEE international conference on computer vision

Xu J, Zhang X, Li W, Liu X, Han J (2021) Joint multi-view 2D convolutional neural networks for 3D object classification. In: International joint conference on artificial intelligence

Liu A-A, Zhou H, Nie W, Liu Z, Liu W, Xie H, Mao Z, Li X, Song D (2021) Hierarchical multi-view context modelling for 3D object classification and retrieval. Inf Sci 547:984–995

Han Z, Lu H, Liu Z, Vong CM, Liua YS, Zwicker M, Han J, Chen CLP (2019) 3D2SeqViews: aggregating sequential views for 3D global feature learning by CNN with hierarchical attention aggregation. IEEE Trans Image Process 28(8):3986–3999

Jiang J, Bao D, Chen Z, Zhao X, Gao Y (2019) MLVCNN: multi-loop-view convolutional neural network for 3D shape retrieval. In: Proceedings of the AAAI conference on artificial intelligence

Huang J, Yan W, Li TH, Liu S, Li G (2020) Learning the global descriptor for 3D object recognition based on multiple views decomposition. IEEE Trans Multimedia 24:188–201

Shao Z, Li Y, Zhang H (2020) Learning representations from skeletal self-similarities for cross-view action recognition. IEEE Trans Circuits Syst Video Technol 31(1):160–174

Liu M, Li Y, Liu H (2021) Robust 3D gaze estimation via data optimization and saliency aggregation for mobile eye-tracking systems. IEEE Trans Instrum Meas 70:1–10

Liu H, Liu T, Zhang Z, Sangaiah AK, Yang B, Li Y (2022) ARHPE: Asymmetric relation-aware representation learning for head pose estimation in industrial human-machine interaction. IEEE Trans Ind Inf. https://doi.org/10.1109/TII.2022.3143605

Ma W, Xu S, Ma W, Zha H (2020) Multiview feature aggregation for facade parsing. IEEE Geosci Remote Sens Lett 19:1–5

Ren Z, Sun Q (2021) Simultaneous global and local graph structure preserving for multiple kernel clustering. IEEE Trans Neural Netw Learn Syst 32(5):1839–1851

Ren Z, Yang S, Sun Q, Wang T (2018) Consensus affinity graph learning for multiple kernel clustering. IEEE Trans Cybern 51(6):3273–3284

Woo S, Park J, Lee J, Kweon I (2018) Cbam: convolutional block attention module. In: European conference on computer vision

Acknowledgements

This research is partially supported by National Natural Science Foundation of China (Nos. 62176010, 61771026). It is also supported by the Key Project of Beijing Municipal Education Commission (No. KZ201910005008).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Xu, R., Mi, Q., Ma, W. et al. View-relation constrained global representation learning for multi-view-based 3D object recognition. Appl Intell 53, 7741–7750 (2023). https://doi.org/10.1007/s10489-022-03949-8

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-022-03949-8