Abstract

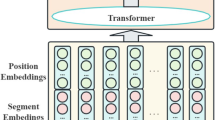

The advent of transformers with attention mechanisms has brought manifold advances in Natural Language Processing (NLP). However, these models are highly complex and carry an enormous computational overhead. Moreover, their performance depends on the feature representation strategy used to encode the input text. To address these issues, we propose a novel transformer encoder architecture with a Selective Learn-Forget Network (SLFN) and a contextualized word representation enhanced through Parts-of-Speech Characteristics Embedding (PSCE). The SLFN selectively retains significant information in the text through a gating mechanism. It enables parallel processing, captures long-range dependencies, and increases the transformer’s efficiency on long sequences. The PSCE, in turn, handles polysemy, distinguishes word inflections based on context, and captures both the syntactic and the semantic information in the text. The single-block architecture is highly efficient, with 96.1% fewer parameters than BERT. The proposed architecture yields 6.8% higher accuracy than the vanilla transformer architecture and an appreciable improvement over various state-of-the-art models for sentiment analysis on three datasets from diverse domains.
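The abstract does not give the SLFN equations, so the following is only a generic illustration of what "selectively retaining information through a gate" can mean, not the authors' formulation. It assumes a per-feature sigmoid gate that blends an input vector with a candidate update; the names `gated_update`, `gate_weights`, and `gate_bias` are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def gated_update(x, candidate, gate_weights, gate_bias):
    """Blend `x` with `candidate` feature by feature.

    A gate value near 1 adopts the candidate feature ("learn"),
    a gate value near 0 keeps the original feature ("retain").
    This is an assumed, generic gating scheme, not the paper's SLFN.
    """
    out = []
    for i, (x_i, c_i) in enumerate(zip(x, candidate)):
        # Gate pre-activation: a linear function of the whole input vector.
        z = sum(w * v for w, v in zip(gate_weights[i], x)) + gate_bias[i]
        g = sigmoid(z)
        out.append(g * c_i + (1.0 - g) * x_i)
    return out


x = [1.0, -2.0]
candidate = [3.0, 4.0]
zero_weights = [[0.0, 0.0], [0.0, 0.0]]

# With zero weights and zero bias every gate is 0.5, so the result is the average.
print(gated_update(x, candidate, zero_weights, [0.0, 0.0]))  # [2.0, 1.0]
```

Because each output feature depends only on the current input vector, such a gate can be evaluated for all positions of a sequence independently, which is one way a gated block can remain compatible with parallel processing.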

Code Availability

The code is available in the GitHub repository https://github.com/Wazib/Transformer_SLFN_PSCE

Notes

For the tokens present in the sequence, the corresponding POS has been annotated using spaCy: https://spacy.io/
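As a rough sketch of the annotation step described in this note, the snippet below tags tokens with spaCy and maps each coarse-grained tag to an integer id that an embedding layer could consume. The pipeline name `en_core_web_sm`, the helper names `annotate` and `pos_ids`, and the id mapping are assumptions for illustration; the note does not say which spaCy model or encoding was used.

```python
# The 17 Universal POS tags, the coarse-grained set spaCy exposes as `token.pos_`.
UPOS_TAGS = [
    "ADJ", "ADP", "ADV", "AUX", "CCONJ", "DET", "INTJ", "NOUN", "NUM",
    "PART", "PRON", "PROPN", "PUNCT", "SCONJ", "SYM", "VERB", "X",
]
TAG_TO_ID = {tag: i for i, tag in enumerate(UPOS_TAGS)}


def pos_ids(tags):
    """Map POS tag strings to integer ids; tags outside the list fall back to "X"."""
    return [TAG_TO_ID.get(tag, TAG_TO_ID["X"]) for tag in tags]


def annotate(text, model="en_core_web_sm"):
    """Return (token, POS tag) pairs for `text` using spaCy.

    spaCy is imported lazily so that `pos_ids` stays usable without it.
    The default model name is an assumption and must be installed separately.
    """
    import spacy

    nlp = spacy.load(model)
    return [(token.text, token.pos_) for token in nlp(text)]


if __name__ == "__main__":
    pairs = annotate("The bank raised its rates.")
    print(pairs)
    print(pos_ids([tag for _, tag in pairs]))
```

Running the `__main__` part requires spaCy and the chosen pipeline to be installed, for example via `python -m spacy download en_core_web_sm`.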

Funding

The authors declare that no funds, grants, or other support were received for the submitted work.

Author information

Contributions

Conceptualization: Wazib Ansar, Saptarsi Goswami, Amlan Chakrabarti; Methodology: Wazib Ansar; Formal analysis and investigation: Wazib Ansar; Data curation: Wazib Ansar; Software: Wazib Ansar; Visualization: Wazib Ansar; Validation: Wazib Ansar, Saptarsi Goswami, Amlan Chakrabarti, Basabi Chakraborty; Writing - original draft preparation: Wazib Ansar; Writing - review and editing: Saptarsi Goswami, Amlan Chakrabarti, Basabi Chakraborty; Resources: Saptarsi Goswami, Amlan Chakrabarti; Project administration: Saptarsi Goswami, Amlan Chakrabarti; Supervision: Basabi Chakraborty. All authors have read and approved the final manuscript and have agreed to its publication.

Ethics declarations

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that are relevant to the content of this article or could have appeared to influence the work reported in this paper.

Additional information

Availability of data and materials

All data and materials used in this work are contained within the manuscript.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ansar, W., Goswami, S., Chakrabarti, A. et al. A novel selective learning based transformer encoder architecture with enhanced word representation. Appl Intell 53, 9424–9443 (2023). https://doi.org/10.1007/s10489-022-03865-x

DOI: https://doi.org/10.1007/s10489-022-03865-x