Abstract

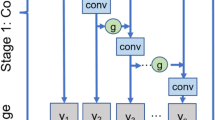

In time series classification, existing deep learning methods do not consider the relationships between different feature layers of a neural network, which remains a key open problem. In addition, their feature-learning ability on single-channel time series data is limited. To address these problems, we propose the fractional-order multiscale attention feature pyramid network (FO-MAFPN), which extracts feature sequences of different scales from multichannel time series and fuses features of different levels. First, FO-MAFPN forms multichannel time series using a fractional-order convolutional layer to increase sample diversity. Subsequently, it uses a pyramid network based on a multiscale attention mechanism to combine low-level detail with high-level abstract semantic information. This pyramid network adaptively learns channel-attention scores, promoting effective feature sequences while suppressing invalid ones. Finally, FO-MAFPN classifies time series by fusing the feature sequences of a long short-term memory layer with the output of the feature pyramid network. Experiments on 85 datasets from the UCR archive indicate the superiority of the FO-MAFPN model for time series classification problems.
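The abstract does not give the exact form of the fractional-order convolutional layer or of the channel-attention scoring, so the following is only a minimal sketch under stated assumptions. It assumes the fractional-order kernels are truncated Grünwald-Letnikov difference masks (one common discretisation of a fractional derivative) and that channel attention follows a squeeze-and-excitation style gate. The function names `gl_coefficients`, `fractional_channels`, and `channel_attention`, the kernel size, and the chosen orders are all hypothetical and are not taken from the paper.

```python
import numpy as np


def gl_coefficients(order: float, length: int) -> np.ndarray:
    """Truncated Grünwald-Letnikov weights w_k = (-1)^k * C(order, k), k = 0..length-1."""
    w = np.empty(length)
    w[0] = 1.0
    for k in range(1, length):
        # Standard recurrence for the signed generalised binomial coefficients.
        w[k] = w[k - 1] * (k - 1 - order) / k
    return w


def fractional_channels(x, orders, kernel_size=5):
    """Turn a single-channel series of length T into a (len(orders), T) array.

    Each output channel is x filtered with a causal fractional-difference
    mask of a different order (assumption: this stands in for the paper's
    fractional-order convolutional layer).
    """
    x = np.asarray(x, dtype=float)
    # Causal padding: repeat the first sample so every output has full history.
    padded = np.concatenate([np.full(kernel_size - 1, x[0]), x])
    channels = []
    for v in orders:
        w = gl_coefficients(v, kernel_size)
        # y[t] = sum_k w[k] * x[t - k]
        channels.append(np.convolve(padded, w, mode="valid"))
    return np.stack(channels)


def channel_attention(features, w1, w2):
    """Squeeze-and-excitation style gate (assumed form of the attention scores).

    features: (C, T); w1: (H, C); w2: (C, H).
    Returns the rescaled features and the per-channel scores in (0, 1).
    """
    squeezed = features.mean(axis=1)                 # (C,) average over time
    hidden = np.maximum(0.0, w1 @ squeezed)          # (H,) ReLU bottleneck
    scores = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # (C,) sigmoid gate
    return scores[:, None] * features, scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    series = np.sin(np.linspace(0.0, 6.0, 64))
    multi = fractional_channels(series, orders=[0.0, 0.3, 0.6, 1.0])
    # Random weights stand in for parameters that would be learned in training.
    w1 = rng.normal(size=(2, multi.shape[0]))
    w2 = rng.normal(size=(multi.shape[0], 2))
    gated, scores = channel_attention(multi, w1, w2)
    print(multi.shape, gated.shape, scores.round(3))
```

With order 0 the mask reduces to the identity and with order 1 to the ordinary first difference, so intermediate orders interpolate between the raw series and its derivative. In a trained network the gate weights would be learned, so that channels with high scores are promoted and channels with low scores are suppressed.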

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

WeiHua Zhang and Yifei Pu contributed equally to this work.

Cite this article

Pan, W., Zhang, W. & Pu, Y. Fractional-order multiscale attention feature pyramid network for time series classification. Appl Intell 53, 8160–8179 (2023). https://doi.org/10.1007/s10489-022-03859-9

DOI: https://doi.org/10.1007/s10489-022-03859-9