Abstract

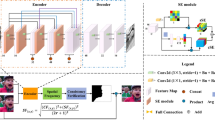

Over the last several years, Deep Learning (DL) has achieved great success in image fusion. For the Multi-Focus Image Fusion (MFIF) task, methods based on focus classification learning are the most popular. These methods seek to generate an all-in-focus synthetic image by combining the partially focused source images according to their focus properties. The basic premise they rely on is that the source images are focus-complementary, so that each pixel can be labeled as focused in one source and defocused in the other. However, this binary (two-class) focus model is not always valid in practice and consequently leads to quality degradation in the focus/defocus junction regions. In addition, the widely used single-scale stitching rule makes these methods lack robustness to source misregistration, which is hard to avoid in practical use. To address these drawbacks, in this paper we propose a new MFIF method based on a multi-classification focus model and multi-scale decomposition, termed MCMSCNN. Concretely, we first design and train a CNN classifier to obtain an initial relative focus probability map of the sources, and then fuse the sources within a multi-classification and multi-scale decomposition based fusion framework. In this framework, we propose a multi-classification focus model to characterize the focus property of each pixel, and fuse each category of pixels with a more specific rule in a multi-scale manner. Together, these designs give our method better focus fusion performance and stronger robustness to misregistration. In the experiments, we compare our method with several recently proposed MFIF methods through subjective and objective evaluations. Extensive experimental results validate the competitive performance of the proposed method.
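
The abstract contrasts a binary focus decision map with a multi-class focus model, and single-scale stitching with multi-scale fusion. The sketch below illustrates both ideas generically; it is not the MCMSCNN algorithm, whose exact classes and rules are not given here. Everything in it is an assumption for illustration: the function names are hypothetical, the map `p` stands in for a CNN focus probability map (probability that source A is in focus), the three classes use hypothetical thresholds `t_lo` and `t_hi` with a soft weight for the uncertain class, and the multi-scale decomposition is a simplified Laplacian pyramid (2x2 average pooling, nearest-neighbour upsampling) rather than any particular transform from the paper.

```python
import numpy as np


def binary_weights(p):
    # Conventional two-class decision map: take source A wherever p >= 0.5
    return (np.asarray(p, dtype=float) >= 0.5).astype(float)


def three_class_weights(p, t_lo=0.2, t_hi=0.8):
    """Hypothetical three-class focus model.

    Classes: 0 = A focused (p >= t_hi), 1 = B focused (p <= t_lo),
    2 = uncertain, e.g. a focus/defocus junction. Returns the labels and
    a weight map for source A (source B implicitly gets 1 - weight).
    """
    p = np.asarray(p, dtype=float)
    labels = np.full(p.shape, 2, dtype=int)
    labels[p >= t_hi] = 0
    labels[p <= t_lo] = 1
    # Hard weights for confident pixels, soft weight p for uncertain ones
    w = np.where(labels == 0, 1.0, np.where(labels == 1, 0.0, p))
    return labels, w


def down(img):
    # 2x2 average pooling; assumes even height and width
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2]
                   + img[0::2, 1::2] + img[1::2, 1::2])


def up(img):
    # Nearest-neighbour 2x upsampling
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def laplacian_pyramid(img, levels):
    pyr, cur = [], img
    for _ in range(levels - 1):
        nxt = down(cur)
        pyr.append(cur - up(nxt))  # band-pass detail at this scale
        cur = nxt
    pyr.append(cur)  # coarsest low-pass residual
    return pyr


def gaussian_pyramid(img, levels):
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr


def multiscale_fuse(a, b, w, levels=3):
    """Blend each Laplacian band of A and B with the weight map at the same scale."""
    la, lb = laplacian_pyramid(a, levels), laplacian_pyramid(b, levels)
    gw = gaussian_pyramid(w, levels)
    fused = [g * x + (1.0 - g) * y for x, y, g in zip(la, lb, gw)]
    out = fused[-1]
    for band in reversed(fused[:-1]):
        out = up(out) + band  # collapse the pyramid from coarse to fine
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a, b = rng.random((8, 8)), rng.random((8, 8))
    p = rng.random((8, 8))  # stand-in for a CNN focus probability map
    _, w = three_class_weights(p)
    print(multiscale_fuse(a, b, w).shape)  # (8, 8)
```

Because the weight map is progressively smoothed in its Gaussian pyramid before it is applied to the coarser bands, transitions between sources tend to be softer than with hard pixel-wise stitching, which is one common intuition for why multi-scale rules can tolerate small misalignments. The specific rules used in the paper may differ.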

Data availability

The authors declare that the data supporting the findings of this study are available within its supplementary information files.

Acknowledgements

The authors would like to thank the anonymous reviewers for their careful and valuable comments.

The authors would like to thank Dr. Panpan Wu for her valuable help in revising the manuscript.

This work was supported by the National Natural Science Foundation of China under Project Numbers 61274021 and 61902282, and by the Project of the Tianjin Higher Educational Science and Technology Program (2017KJ119).

About this article

Cite this article

Ma, L., Hu, Y., Zhang, B. et al. A new multi-focus image fusion method based on multi-classification focus learning and multi-scale decomposition. Appl Intell 53, 1452–1468 (2023). https://doi.org/10.1007/s10489-022-03658-2

DOI: https://doi.org/10.1007/s10489-022-03658-2