Abstract

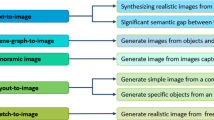

Image synthesis techniques have seen limited use in the medical field because the authenticity and precision of synthesized images are often unsatisfactory. Synthesizing diverse outputs is also difficult when training data are scarce, as is the case for many medical datasets. In this work, we propose an image-to-image network, the Minimal Generative Adversarial Network (MinimalGAN), to synthesize annotated, accurate, and diverse medical images from minimal training data. The key idea is to make full use of the internal information of each image and to decouple style from content by separating them during the self-coding process. The generator is then compelled to attend to content detail and style separately, which allows it to synthesize diverse, high-precision images. MinimalGAN supports two image synthesis techniques. The first is style transfer: we synthesized a stylized retinal fundus dataset, and the style transfer deception rate is much higher than that of traditional style transfer methods. Blood vessel segmentation performance also increased when only synthetic data were used. The second is target variation: unlike traditional translation, rotation, and scaling applied to the whole image, this approach applies those operations only to the annotated segmentation target. Experiments demonstrate that segmentation performance improved when synthetic data were used.
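The geometric idea behind target variation can be illustrated with a minimal sketch. It is not the MinimalGAN implementation: it only shows, under stated assumptions, how translation, rotation, and scaling might be restricted to the annotated target rather than the whole image. The function name `vary_target`, its parameters, the binary-mask representation, the centroid as pivot, and the nearest-neighbor resampling are all assumptions introduced here for illustration; how the network then renders an image from the varied annotation is not covered.

```python
import numpy as np


def vary_target(mask, angle_deg=0.0, scale=1.0, shift=(0.0, 0.0)):
    """Rotate, scale, and translate the foreground of a binary mask.

    Hypothetical helper: the transform is applied about the target's
    centroid, so only the annotated target changes, not the image frame.
    `scale` must be non-zero; `shift` is (rows, cols) in pixels.
    """
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        return mask.copy()  # nothing annotated, nothing to vary

    rows, cols = np.nonzero(mask)
    centroid = np.array([rows.mean(), cols.mean()])

    theta = np.deg2rad(angle_deg)
    rotation = np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta), np.cos(theta)]])
    inverse = np.linalg.inv(scale * rotation)

    # Inverse mapping: for every output pixel, look up its source pixel.
    out_r, out_c = np.indices(mask.shape)
    out_coords = np.stack([out_r.ravel(), out_c.ravel()]).astype(float)
    offset = centroid + np.asarray(shift, dtype=float)
    src = inverse @ (out_coords - offset[:, None]) + centroid[:, None]
    src = np.rint(src).astype(int)  # nearest-neighbor resampling

    inside = ((src[0] >= 0) & (src[0] < mask.shape[0]) &
              (src[1] >= 0) & (src[1] < mask.shape[1]))
    varied = np.zeros(mask.size, dtype=bool)
    varied[inside] = mask[src[0][inside], src[1][inside]]
    return varied.reshape(mask.shape)


# Usage: a 3x3 target in a 9x9 annotation, moved 2 rows down and 1 column right.
mask = np.zeros((9, 9), dtype=bool)
mask[3:6, 3:6] = True
varied = vary_target(mask, shift=(2, 1))
```

Working on the annotation alone keeps the background untouched, which is what distinguishes this from whole-image augmentation, where the target and its surroundings move together.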

Acknowledgments

The research was supported by the Key Laboratory of Spectral Imaging Technology, Xi’an Institute of Optics and Precision Mechanics of the Chinese Academy of Sciences; the Key Laboratory of Biomedical Spectroscopy of Xi’an; the Outstanding Award for Talent Project of the Chinese Academy of Sciences; the “From 0 to 1” Original Innovation Project of the Basic Frontier Scientific Research Program of the Chinese Academy of Sciences; and the Autonomous Deployment Project of the Xi’an Institute of Optics and Precision Mechanics of the Chinese Academy of Sciences.

Statements and declarations

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhang, Y., Wang, Q. & Hu, B. MinimalGAN: diverse medical image synthesis for data augmentation using minimal training data. Appl Intell 53, 3899–3916 (2023). https://doi.org/10.1007/s10489-022-03609-x

DOI: https://doi.org/10.1007/s10489-022-03609-x