Abstract

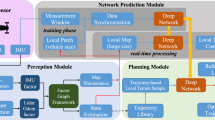

Autonomous navigation of an unmanned ground vehicle (UGV) in off-road environments faces various problems, such as semantic perception and motion planning. This paper proposes an intelligent approach to perception and planning for UGVs in field environments. First, a semantic image of the environment is generated in real time by an improved convolutional neural network (CNN). Second, we provide two practical extensions to an open-source 3D mapping framework: semantic point cloud fusion based on a 3D LiDAR and a camera, and the generation of a traversability cost map from both semantic and geometric information. Third, we propose a new kinodynamic semantic-aware planner that incorporates the dynamic window approach into a receding horizon planner, so that the planner satisfies kinodynamic constraints while taking semantic labels into account. Finally, these methods, together with a localization module, are integrated into a complete autonomous navigation system with real-time semantic perception and motion planning (RSPMP). In experiments, the proposed system was successfully applied to safe autonomous perception and navigation in off-road environments.
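As a rough illustration of how a dynamic window approach can be driven by a semantic traversability cost, the sketch below performs one DWA step. It samples linear and angular velocities reachable within one control period, forward-simulates each pair over a short horizon, rejects trajectories that cross lethal terrain, and scores the rest by goal progress, mean terrain cost, and speed. It is not the RSPMP planner described in the paper. The unicycle motion model, the label-to-cost table `SEMANTIC_COST`, the slope limit `MAX_SLOPE`, the rule of fusing semantic and slope costs by taking the maximum, the `Limits` values, and the scoring weights are all hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical per-label traversability costs in [0, 1]; 1.0 means lethal.
SEMANTIC_COST = {"road": 0.0, "grass": 0.2, "bush": 0.6, "tree": 1.0}
MAX_SLOPE = math.radians(25.0)  # hypothetical slope at which terrain becomes lethal


def traversability(label: str, slope: float) -> float:
    """Fuse a semantic cost and a geometric (slope) cost by keeping the worse one."""
    geometric = min(1.0, abs(slope) / MAX_SLOPE)
    return max(SEMANTIC_COST.get(label, 1.0), geometric)  # unknown labels are lethal


@dataclass
class Limits:
    v_max: float = 1.0      # m/s
    w_max: float = 1.0      # rad/s
    a_max: float = 0.5      # m/s^2
    alpha_max: float = 1.0  # rad/s^2


def linspace(a: float, b: float, n: int) -> List[float]:
    return [a] if n == 1 else [a + (b - a) * i / (n - 1) for i in range(n)]


def rollout(x, y, th, v, w, dt, steps):
    """Forward-simulate a unicycle at constant (v, w) with explicit Euler steps."""
    traj = []
    for _ in range(steps):
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th += w * dt
        traj.append((x, y, th))
    return traj


def choose_command(state, goal, cost_fn: Callable[[float, float], float],
                   lim: Limits, control_dt=0.5, horizon=2.0, sim_dt=0.1,
                   samples=7, w_goal=1.0, w_terrain=2.0, w_speed=0.2
                   ) -> Optional[Tuple[float, float]]:
    """One DWA step: return the best admissible (v, w), or None if all are lethal."""
    x, y, th, v, w = state
    # Dynamic window: velocities reachable within one control period.
    v_lo = max(0.0, v - lim.a_max * control_dt)
    v_hi = min(lim.v_max, v + lim.a_max * control_dt)
    w_lo = max(-lim.w_max, w - lim.alpha_max * control_dt)
    w_hi = min(lim.w_max, w + lim.alpha_max * control_dt)
    steps = max(1, round(horizon / sim_dt))
    best, best_score = None, math.inf
    for vc in linspace(v_lo, v_hi, samples):
        for wc in linspace(w_lo, w_hi, samples):
            traj = rollout(x, y, th, vc, wc, sim_dt, steps)
            costs = [cost_fn(px, py) for px, py, _ in traj]
            if max(costs) >= 1.0:
                continue  # reject trajectories that cross lethal terrain
            ex, ey, _ = traj[-1]
            score = (w_goal * math.hypot(goal[0] - ex, goal[1] - ey)  # progress
                     + w_terrain * sum(costs) / len(costs)            # terrain
                     - w_speed * vc)                                  # prefer speed
            if score < best_score:
                best, best_score = (vc, wc), score
    return best
```

In a receding-horizon loop, only the returned `(v, w)` would be executed for `control_dt` seconds; the state and cost map are then updated and `choose_command` is called again. Rejecting lethal trajectories outright, rather than merely penalizing them, keeps hard obstacles such as trees from being traded off against goal progress.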

Data availability

Available.

Acknowledgments

We thank Professor Bing Li of Harbin Engineering University for his help. We also thank all the reviewers for their useful and detailed feedback on our research. This work was supported by the Equipment Advance Research Funds (No. 61405180205) and the Foundation Strengthening Programme Technical Area Funds (No. 2021-JCJQ-JJ-0026).

Code availability

Available.

Funding

This work was supported by the Equipment Advance Research Funds.

Author information

Contributions

Denglong Chen: Methodology, Software, Writing - Original Draft.

Mingxi Zhuang: Methodology, Software, Writing - Original Draft (semantic segmentation).

Xunyu Zhong: Conceptualization, Investigation, Review & Editing.

Wenhong Wu: Software, Experiments, Visualization.

Qiang Liu: Validation, Review & Editing.

Ethics declarations

Conflicts of interest/competing interests

Not applicable.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(MP4 265,215 kb)

About this article

Cite this article

Chen, D., Zhuang, M., Zhong, X. et al. RSPMP: real-time semantic perception and motion planning for autonomous navigation of unmanned ground vehicle in off-road environments. Appl Intell 53, 4979–4995 (2023). https://doi.org/10.1007/s10489-022-03283-z

DOI: https://doi.org/10.1007/s10489-022-03283-z