Abstract

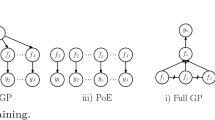

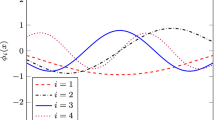

This paper presents techniques to improve the prediction accuracy of approximation methods used in Gaussian process regression models. Conventional methods such as the Nyström and subset-of-data methods rely on low-rank approximations to the kernel matrix derived from a set of representative data points. Prediction accuracy suffers when the number of representative points is small or when the length scale is small. The techniques proposed here augment the set of representative points with neighbors of each test input to improve accuracy. Our approach captures the general structure of the problem through the low-rank approximation and improves accuracy further by exploiting locality at each test input. Computations involving neighbor points are cast as updates to the base approximation, which yields significant computational savings. To ensure numerical stability, prediction is done via orthogonal projection onto the subspace of the kernel approximation derived from the augmented set. Experiments on synthetic and real datasets show that our approach is robust with respect to changes in length scale and matches the prediction accuracy of the full kernel matrix while using fewer points for kernel approximation. This results in faster and more accurate predictions than conventional methods.
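To make the idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes a squared-exponential kernel and a subset-of-regressors form of the low-rank predictor; the names `rbf_kernel`, `low_rank_predict`, and `augmented_predict`, and all of their parameters, are hypothetical. Prediction is posed as a regularized least-squares problem, so the fitted values are an orthogonal projection onto the column space of the stacked matrix. Unlike the approach described in the abstract, the sketch refits from scratch for every test input instead of updating the base approximation.

```python
import numpy as np

def rbf_kernel(A, B, length_scale):
    """Squared-exponential kernel between rows of A and rows of B (assumed kernel)."""
    d2 = (np.sum(A**2, axis=1)[:, None]
          + np.sum(B**2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-0.5 * np.maximum(d2, 0.0) / length_scale**2)

def low_rank_predict(X, y, Z, x_star, length_scale, noise_var):
    """Subset-of-regressors predictive mean at x_star using representative points Z.

    Solves min_a ||K_nm a - y||^2 + noise_var * a^T K_mm a as one stacked
    least-squares problem, avoiding explicit normal equations.
    """
    K_nm = rbf_kernel(X, Z, length_scale)
    K_mm = rbf_kernel(Z, Z, length_scale)
    # Symmetric square root factor Rf with Rf.T @ Rf = K_mm (eigenvalues clipped at 0).
    w, V = np.linalg.eigh(K_mm)
    Rf = np.sqrt(np.maximum(w, 0.0))[:, None] * V.T
    A = np.vstack([K_nm, np.sqrt(noise_var) * Rf])
    b = np.concatenate([y, np.zeros(Z.shape[0])])
    # np.linalg.lstsq uses an SVD-based solver; a QR-based solver would also work.
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    k_star = rbf_kernel(x_star[None, :], Z, length_scale)
    return float((k_star @ coef)[0])

def augmented_predict(X, y, landmark_idx, x_star, k, length_scale, noise_var):
    """Augment the representative points with the k training points nearest to x_star."""
    landmark_idx = np.asarray(landmark_idx, dtype=int)
    dist = np.linalg.norm(X - x_star, axis=1)
    # Only consider training points that are not already representative points.
    candidates = np.setdiff1d(np.arange(X.shape[0]), landmark_idx)
    nearest = candidates[np.argsort(dist[candidates])[:k]]
    idx = np.concatenate([landmark_idx, nearest])
    return low_rank_predict(X, y, X[idx], x_star, length_scale, noise_var)
```

Calling `augmented_predict` with `k=0` reduces to the base low-rank predictor, and letting the augmented set grow to the whole training set recovers the full Gaussian process mean, which gives a simple sanity check for the sketch.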

Acknowledgements

We would like to acknowledge the support of the High Performance Research Center at Texas A&M University.

Appendix

We provide algorithms for updating the QR and Cholesky factorization
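As an illustration of what such updates can look like, here is a minimal sketch, assuming the update consists of appending columns to a thin QR factorization and appending a block of rows and columns to a Cholesky factorization. These are standard textbook updates and are not claimed to be the algorithms given in this appendix; the function names `qr_append_columns` and `cholesky_append` are hypothetical.

```python
import numpy as np

def qr_append_columns(Q, R, U):
    """Given thin A = Q @ R (Q: n x m, R: m x m), return the thin QR of [A, U].

    Uses block Gram-Schmidt with one reorthogonalization pass for stability.
    """
    W = Q.T @ U                 # components of the new columns in span(Q)
    V = U - Q @ W               # remainder orthogonal to span(Q)
    W2 = Q.T @ V                # second pass removes leftover rounding error
    V = V - Q @ W2
    W = W + W2
    Q2, R2 = np.linalg.qr(V)    # orthonormalize the remainder
    m, p = R.shape[0], U.shape[1]
    R_new = np.block([[R, W],
                      [np.zeros((p, m)), R2]])
    return np.hstack([Q, Q2]), R_new

def cholesky_append(L, B, C):
    """Given K = L @ L.T, return the Cholesky factor of [[K, B], [B.T, C]].

    Only the new block row is computed; L is reused unchanged.
    """
    # A general solver is used here to keep the sketch NumPy-only;
    # a triangular solver would exploit the structure of L.
    L21 = np.linalg.solve(L, B).T
    L22 = np.linalg.cholesky(C - L21 @ L21.T)   # Schur complement
    m, p = L.shape[0], C.shape[0]
    return np.block([[L, np.zeros((m, p))],
                     [L21, L22]])
```

In both cases the existing factor is reused, so the cost depends mainly on the number of appended columns rather than on refactoring the whole matrix.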

About this article

Cite this article

Thomas, E., Sarin, V. Augmented low-rank methods for gaussian process regression. Appl Intell 52, 1254–1267 (2022). https://doi.org/10.1007/s10489-021-02481-5

DOI: https://doi.org/10.1007/s10489-021-02481-5