Abstract

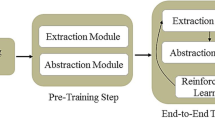

In recent years, deep neural extractive-based summarization approaches have achieved vast popularity over conventional approaches. However, previously proposed neural extractive-based models have issues that limit their performance. One of these issues is related to the architecture of the used neural network that skips some details about the document hierarchical structure. Moreover, these models are optimized to maximize the probabilities of the training data ground truth labels rather than the evaluation metric that actually measures the quality of the summarization; this way of optimization might neglect important information related to sentence ranking. To address these issues, we combined reinforcement and supervised learning to train a hierarchical self-attentive reinforced neural network-based summarization model to rank sentences according to their significance by directly optimizing the ROUGE evaluation metric. The proposed model employs a hierarchical self-attention mechanism to generate document and sentence embeddings that reflect the hierarchical structure of the document and give better feature representation. While reinforcement learning enables direct optimization with respect to evaluation metrics, the attention mechanism adds an extra source of information to direct the summary extraction. The model was evaluated on the basis of three well-known datasets, namely, CNN, Daily Mail, and their combined version CNN/Daily Mail. Experimental results showed that the model achieved higher ROUGE scores than state-of-the-art models for extractive summarization on the three datasets.

Similar content being viewed by others

References

Isonuma M, Fujino T, Mori J, Matsuo Y, Sakata I (2017) Extractive summarization using multi-task learning with document classification. In: Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, ACL, Copenhagen, Denmark, pp 2101–2110, https://doi.org/10.18653/v1/D17-1223

Jg Y, Wan X, Xiao J (2017) Recent advances in document summarization. Knowl Inf Syst 53(2):297–336. https://doi.org/10.1007/s10115-017-1042-4

Erkan G, Radev DR (2004) Lexrank: graph-based lexical centrality as salience in text summarization. J Artif Intell Res 22(1):457–479. https://doi.org/10.1613/jair.1523

Carbinell J, Goldstein J (2017) The use of mmr, diversity-based reranking for reordering documents and producing summaries. ACM SIGIR Forum 51(2):209–210. https://doi.org/10.1145/3130348.3130369

McDonald R (2007) A study of global inference algorithms in multi-document summarization. In: Amati G, Carpineto C, Romano G (eds) Advances in information retrieval. Springer Berlin Heidelberg, Berlin, pp 557–564

Conroy JM, O’leary DP (2001) Text summarization via hidden markov models. In: Proceedings of the 24th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, ACM, New York, NY, USA, SIGIR ‘01, pp 406–407, https://doi.org/10.1145/383952.384042

Cheng J, Lapata M (2016) Neural summarization by extracting sentences and words. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL, Berlin, Germany, pp 484–494, https://doi.org/10.18653/v1/P16-1046

Nallapati R, Zhai F, Zhou B (2017) Summarunner: A recurrent neural network based sequence model for extractive summarization of documents. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, AAAI Press, AAAI’17, pp 3075–3081

Al-Sabahi K, Zuping Z, Nadher M (2018) A hierarchical structured self-attentive model for extractive document summarization (hssas). IEEE Access 6:24205–24212. https://doi.org/10.1109/ACCESS.2018.2829199

Lin Z, Feng M, dos Santos CN, Yu M, Xiang B, Zhou B, Bengio Y (2017) A structured self-attentive sentence embedding. ArXiv abs/1703.03130. https://arxiv.org/abs/1703.03130. Accessed 30 July 2019

Lin CY, Hovy E (2003) Automatic evaluation of summaries using n-gram co-occurrence statistics. In: HLT-NAACL. ACL, Stroudsburg, pp 71–78. https://doi.org/10.3115/1073445.1073465

Narayan S, Cohen SB, Lapata M (2018) Ranking sentences for extractive summarization with reinforcement learning. In: NAACL-HLT

Wu Y, Hu B (2018) Learning to extract coherent summary via deep reinforcement learning. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, pp 5602–5609

Ryang S, Abekawa T (2012) Framework of automatic text summarization using reinforcement learning. In: Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Association for Computational Linguistics, Stroudsburg, PA, USA, EMNLP-CoNLL ‘12, pp 256–265

Rioux C, Hasan SA, Chali Y (2014) Fear the REAPER: A system for automatic multi-document summarization with reinforcement learning. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), ACL, Doha, pp 681–690, https://doi.org/10.3115/v1/D14-1075

Hermann KM, Kočiský T, Grefenstette E, Espeholt L, Kay W, Suleyman M, Blunsom P (2015) Teaching machines to read and comprehend. In: Proceedings of the 28th International Conference on Neural Information Processing Systems - Volume 1, MIT Press, Cambridge, MA, USA, NIPS’15, pp 1693–1701

Kupiec J, Pedersen J, Chen F (1995) A trainable document summarizer. In: Proceedings of the 18th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, ACM, New York, NY, USA, SIGIR ‘95, pp 68–73, https://doi.org/10.1145/215206.215333

Filatova E, Hatzivassiloglou V (2004) Event-based extractive summarization. In: Text summarization branches out. Association for Computational Linguistics, Barcelona, pp 104–111

Shen D, Sun JT, Li H, Yang Q, Chen Z (2007) Document summarization using conditional random fields. In: Proceedings of the 20th International Joint Conference on Artifical Intelligence, Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, IJCAI’07, pp 2862–2867

Wan X (2010) Towards a unified approach to simultaneous single-document and multi-document summarizations. In: Proceedings of the 23rd International Conference on Computational Linguistics, ACL, Stroudsburg, PA, USA, COLING ‘10, pp 1137–1145

Kim Y (2014) Convolutional neural networks for sentence classification. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Association for Computational Linguistics, Doha, pp 1746–1751, https://doi.org/10.3115/v1/D14-1181

Yin W, Pei Y (2015) Optimizing sentence modeling and selection for document summarization. In: Proceedings of the 24th International Conference on Artificial Intelligence, AAAI Press, IJCAI’15, pp 1383–1389

Cao Z, Wei F, Li S, Li W, Zhou M, Wang H (2015) Learning summary prior representation for extractive summarization. ACL, Association for Computational Linguistics, Beijing, pp 829–833. https://doi.org/10.3115/v1/P15-2136

Yao K, Zhang L, Luo T, Wu Y (2018) Deep reinforcement learning for extractive document summarization. Neurocomputing 284:52–62. https://doi.org/10.1016/j.neucom.2018.01.020

Dong Y, Shen Y, Crawford E, van Hoof H, Cheung JCK (2018) BanditSum: Extractive summarization as a contextual bandit. In: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Association for Computational Linguistics, Brussels, Belgium, pp 3739–3748, https://doi.org/10.18653/v1/D18-1409

Graves A (2012) Supervised sequence labelling. In: Supervised sequence Labelling with recurrent neural networks. Springer Berlin Heidelberg, Berlin, pp 5–13. https://doi.org/10.1007/978-3-642-24797-2_2

Salehinejad H, Baarbe J, Sankar S, Barfett J, Colak E, Valaee S (2018) Recent advances in recurrent neural networks. ArXiv abs/1801.01078. https://arxiv.org/abs/1801.01078. Accessed 02 Aug 2019

Gao L, Li X, Song J, Shen H (2019) Hierarchical lstms with adaptive attention for visual captioning. IEEE Transactions on Pattern Analysis and Machine Intelligence PP:1–1, https://doi.org/10.1109/TPAMI.2019.2894139

Song J, Guo Y, Gao L, Li X, Hanjalic A, Shen HT (2019) From deterministic to generative: multimodal stochastic rnns for video captioning. IEEE Trans on Neur Netw Learn Syst 30(10):3047–3058. https://doi.org/10.1109/TNNLS.2018.2851077

Mikolov T, Chen K, Corrado GS, Dean J (2013) Efficient estimation of word representations in vector space. CoRR abs/1301.3781

Pennington J, Socher R, Manning CD (2014) Glove: Global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pp 1532–1543

Bahdanau D, Cho K, Bengio Y (2014) Neural machine translation by jointly learning to align and translate. CoRR abs/1409.0473

Bengio S, Vinyals O, Jaitly N, Shazeer N (2015) Scheduled sampling for sequence prediction with recurrent neural networks. In: Proceedings of the 28th International Conference on Neural Information Processing Systems - Volume 1, MIT Press, Cambridge, MA, USA, NIPS’15, pp 1171–1179

Svore KM, Vanderwende L, Burges CJC (2007) Enhancing single-document summarization by combining ranknet and third-party sources. In: EMNLP-CoNLL, p 448″“457

Cao Z, Chen C, Li W, Li S, Wei F, Zhou M (2016) Tgsum: Build tweet guided multi-document summarization dataset. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, Arizona, USA, p 2906″“2912

Paulus R, Xiong C, Socher R (2017) A deep reinforced model for abstractive summarization. ArXiv abs/1705.04304. https://arxiv.org/abs/1705.04304

Williams RJ (1992) Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach Learn 8(3):229–256. https://doi.org/10.1007/BF00992696

Ranzato M, Chopra S, Auli M, Zaremba W (2015) Sequence level training with recurrent neural networks. CoRR abs/1511.06732

Gao W, Jiang Y, Jiang ZP, Chai T (2016) Output-feedback adaptive optimal control of interconnected systems based on robust adaptive dynamic programming. Automatica 72(C):37–45. https://doi.org/10.1016/j.automatica.2016.05.008

Schulman J, Moritz P, Levine S, Jordan MI, Abbeel P (2015) High-dimensional continuous control using generalized advantage estimation. CoRR abs/1506.02438

Zhao X, Ding S, An Y, Jia W (2019) Applications of asynchronous deep reinforcement learning based on dynamic updating weights. Appl Intell 49(2):581–591

Zhao X, Ding S, An Y, Jia W (2018) Asynchronous reinforcement learning algorithms for solving discrete space path planning problems. Appl Intell 48(12):4889–4904. https://doi.org/10.1007/s10489-018-1241-z

Ding S, Du W, Zhao X, Wang L, Jia W (2019) A new asynchronous reinforcement learning algorithm based on improved parallel pso. Appl Intell 49(12):4211–4222. https://doi.org/10.1007/s10489-019-01487-4

Sutton RS, Barto AG (1998) Reinforcement learning: an introduction mit press. Cambridge

Nallapati R, Zhou B, dos Santos C, Çağlar Gu̇lçehre , Xiang B (2016) Abstractive text summarization using sequence-to-sequence RNNs and beyond. In: Proceedings of The 20th SIGNLL Conference on Computational Natural Language Learning, Association for Computational Linguistics, Berlin, Germany, pp 280–290, https://doi.org/10.18653/v1/K16-1028

See A, Liu PJ, Manning CD (2017) Get to the point: Summarization with pointer-generator networks. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL, Vancouver, Canada, pp 1073–1083, https://doi.org/10.18653/v1/P17-1099

Tan J, Wan X, Xiao J (2017) Abstractive document summarization with a graph-based attentional neural model. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL, Vancouver, Canada, pp 1171–1181, https://doi.org/10.18653/v1/P17-1108

Google (2013) One billion word benchmark for measuring progress in statistical language modeling. Tech Rep

Mikolov T, Sutskever I, Chen K, Corrado G, Dean J (2013) Distributed representations of words and phrases and their compositionality. In: Proceedings of the 26th International Conference on Neural Information Processing Systems - Volume 2, Curran Associates Inc., USA, NIPS’13, pp 3111–3119

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. CoRR abs/1412.6980

Pascanu R, Mikolov T, Bengio Y (2013) On the difficulty of training recurrent neural networks. In: Proceedings of the 30th International Conference on International Conference on Machine Learning - Volume 28. JMLR.org, ICML’13, pp III–1310–III–1318

Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M, Ghemawat S, Goodfellow I, Harp A, Irving G, Isard M, Jia Y, Jozefowicz R, Kaiser L, Kudlur M, Levenberg J, Mané D, Monga R, Moore S, Murray D, Olah C, Schuster M, Shlens J, Steiner B, Sutskever I, Talwar K, Tucker P, Vanhoucke V, Vasudevan V, Viégas F, Vinyals O, Warden P, Wattenberg M, Wicke M, Yu Y, Zheng X (2015) TensorFlow: Large-scale machine learning on heterogeneous systems. URL https://www.tensorflow.org/, software available from tensorflow.org. Accessed 10 Nov 2019

Lin CY (2004) ROUGE: a package for automatic evaluation of summaries. In: Text summarization branches out. Association for Computational Linguistics, Barcelona, pp 74–81

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61772031) and the Hunan Natural Science Foundation of China in 2020.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Mohsen, F., Wang, J. & Al-Sabahi, K. A hierarchical self-attentive neural extractive summarizer via reinforcement learning (HSASRL). Appl Intell 50, 2633–2646 (2020). https://doi.org/10.1007/s10489-020-01669-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-020-01669-5