Abstract

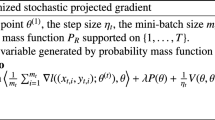

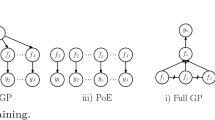

The Gaussian process (GP) model is an elegant tool for probabilistic prediction. However, its high computational cost prohibits practical application to large datasets. To address this issue, this paper develops a new sparse GP model, referred to as GPHalf. The key idea is to sparsify the GP model via the recently introduced ℓ1/2 regularization method. To achieve this, we represent the GP as a generalized linear regression model and then use a modified ℓ1/2 half thresholding algorithm to optimize the corresponding objective function, yielding a sparse GP model. We prove that the proposed model converges to a sparse solution. Numerical experiments on both artificial and real-world datasets validate the effectiveness of the proposed model.
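To make the idea of ℓ1/2 sparsification concrete, the following is a minimal sketch and not the authors' GPHalf algorithm. It uses the standard iterative half thresholding scheme of Xu, Chang, Xu and Zhang (2012), not the modified variant the abstract refers to, whose details are not given here. It also assumes that the generalized linear model is a kernel expansion f(x) = Σ α_i k(x, x_i) with an RBF kernel. The names `half_threshold`, `rbf_kernel`, `sparse_kernel_fit`, and the parameters `lam` and `length_scale` are hypothetical and chosen for illustration only.

```python
import numpy as np

def half_threshold(z, lam):
    """Elementwise half thresholding operator (Xu et al., 2012) with parameter lam."""
    z = np.asarray(z, dtype=float)
    out = np.zeros_like(z)
    # Entries at or below this magnitude are set exactly to zero.
    thresh = (54.0 ** (1.0 / 3.0) / 4.0) * lam ** (2.0 / 3.0)
    keep = np.abs(z) > thresh
    zk = z[keep]
    phi = np.arccos((lam / 8.0) * (np.abs(zk) / 3.0) ** (-1.5))
    out[keep] = (2.0 / 3.0) * zk * (1.0 + np.cos(2.0 * np.pi / 3.0 - 2.0 * phi / 3.0))
    return out

def rbf_kernel(X1, X2, length_scale=1.0):
    """Squared-exponential kernel matrix (an assumed choice of kernel)."""
    d2 = np.sum(X1 ** 2, axis=1)[:, None] + np.sum(X2 ** 2, axis=1)[None, :] - 2.0 * X1 @ X2.T
    return np.exp(-0.5 * np.maximum(d2, 0.0) / length_scale ** 2)

def sparse_kernel_fit(K, y, lam, n_iter=500):
    """Approximately minimize ||y - K a||^2 + lam * sum(|a_i|^(1/2)) by iterative half thresholding."""
    mu = 0.99 / np.linalg.norm(K, 2) ** 2  # step size kept below 1 / ||K||^2
    alpha = np.zeros(K.shape[1])
    for _ in range(n_iter):
        z = alpha + mu * K.T @ (y - K @ alpha)  # gradient-type step on the data-fit term
        alpha = half_threshold(z, lam * mu)     # shrink and zero out small coefficients
    return alpha

# Toy usage on synthetic data (illustrative only).
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0.0, 1.0, size=(30, 1)), axis=0)
y = np.sin(6.0 * X[:, 0]) + 0.05 * rng.standard_normal(30)
K = rbf_kernel(X, X, length_scale=0.2)
alpha = sparse_kernel_fit(K, y, lam=0.05, n_iter=200)
print("nonzero coefficients:", np.count_nonzero(alpha), "of", alpha.size)
```

In this sketch, training points whose coefficients are thresholded to zero drop out of the predictor, which is the sense in which the fitted model is sparse. A larger `lam` zeroes more coefficients, at the cost of a looser fit to the data.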

Acknowledgements

The authors gratefully acknowledge the financial support of the National Natural Science Foundation of China (Project Nos. 60974101, 60736027, 61221063).

Cite this article

Kou, P., Gao, F. Sparse Gaussian Process regression model based on ℓ1/2 regularization. Appl Intell 40, 669–681 (2014). https://doi.org/10.1007/s10489-013-0482-0

DOI: https://doi.org/10.1007/s10489-013-0482-0