Abstract

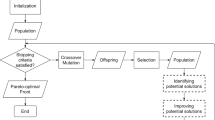

Algorithmic design in neural architecture search (NAS) has received a lot of attention, with the aim of improving performance and reducing computational cost. Despite the great advances made, few authors have proposed tailoring initialization techniques to NAS, even though the literature shows that a good initial set of solutions makes it easier to find the optima. Therefore, in this study, we propose a data-driven technique to initialize a population-based NAS algorithm. First, we perform a calibrated clustering analysis of the search space; second, we extract the centroids and use them to initialize a NAS algorithm. We benchmark the proposed approach against random and Latin hypercube sampling initialization using three population-based algorithms, namely a genetic algorithm, an evolutionary algorithm, and aging evolution, on CIFAR-10. Specifically, we run the experiments on the tabular benchmark NAS-Bench-101. The results show that, compared to random and Latin hypercube sampling, the proposed initialization technique achieves significant long-term improvements for two of the search baselines, and in some cases across several search scenarios (different training budgets). We also investigate how an initial population gathered on the tabular benchmark can be used to improve search on another dataset, So2Sat LCZ-42. Our results show similar improvements on the target dataset, despite a limited training budget. Moreover, we analyse the distributions of the solutions obtained and find that the population provided by the data-driven initialization technique enables retrieving local optima (maxima) of high fitness and similar configurations.
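The two steps described above (cluster the search space, then seed the population from the centroids) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the toy binary encoding of architectures, the use of plain k-means as the clustering algorithm, the snapping of each centroid to the nearest valid architecture, and the names `sq_dist`, `kmeans`, and `centroid_population` are all assumptions made for the example.

```python
import itertools
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means (Lloyd's algorithm) over encoded architectures."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        # Assignment step: attach each point to its closest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            clusters[j].append(p)
        # Update step: move each centroid to the mean of its members.
        for j, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def centroid_population(points, k, seed=0):
    """Build an initial population of k distinct architectures from centroids.

    Centroids are real-valued, so each one is snapped to the nearest valid
    encoding (an assumption of this sketch). If two centroids snap to the
    same architecture, the population is topped up with random samples.
    """
    rng = random.Random(seed)
    population = []
    for c in kmeans(points, k, seed=seed):
        nearest = min(points, key=lambda p: sq_dist(p, c))
        if nearest not in population:
            population.append(nearest)
    rest = [p for p in points if p not in population]
    rng.shuffle(rest)
    population.extend(rest[: k - len(population)])
    return population


# Toy search space: every 6-bit vector stands in for one architecture.
space = [tuple(bits) for bits in itertools.product((0, 1), repeat=6)]
population = centroid_population(space, k=8)
```

The resulting `population` would replace the randomly sampled individuals that a population-based search normally starts from. A real search space would need an encoding suited to its architectures, and the number of clusters would have to be calibrated rather than fixed by hand as it is here.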

Acknowledgements

The authors acknowledge support by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement No. ERC-2016-StG-714087, acronym: So2Sat), by the Helmholtz Association through the Framework of Helmholtz AI (grant number: ZT-I-PF-5-01) - Local Unit “Munich Unit @Aeronautics, Space and Transport (MASTr)” and Helmholtz Excellent Professorship “Data Science in Earth Observation - Big Data Fusion for Urban Research” (W2-W3-100), by the German Federal Ministry of Education and Research (BMBF) in the framework of the international future AI lab “AI4EO – Artificial Intelligence for Earth Observation: Reasoning, Uncertainties, Ethics and Beyond” (grant number: 01DD20001), by the grant DeToL, and by a DAAD Research fellowship.

Funding

Open Access funding enabled and organized by Projekt DEAL. The funding is stated in the Acknowledgements section.

Ethics declarations

Consent for Publication

All authors have checked the manuscript and have agreed to the submission.

Conflict of Interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Traoré, K.R., Camero, A. & Zhu, X.X. A data-driven approach to neural architecture search initialization. Ann Math Artif Intell (2023). https://doi.org/10.1007/s10472-022-09823-0

DOI: https://doi.org/10.1007/s10472-022-09823-0