Abstract

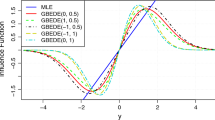

This paper uses a decision-theoretic approach to update a probability measure representing beliefs about an unknown parameter. It considers a cumulative loss function that is the sum of two terms: one depends on the prior belief and the other on further information obtained about the parameter. Such information is thereby converted into a probability measure, and the key to this process is shown to be the Kullback–Leibler divergence. The Bayesian approach can be derived as a natural special case. Some illustrations are presented.
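
As an illustration of the update described above, here is a minimal sketch. It is not the authors' construction. It assumes the cumulative loss takes the form L(ν) = ∫ ℓ(θ) ν(dθ) + D_KL(ν ‖ π), where π is the prior, ν is the candidate updated measure and ℓ(θ) is a loss encoding the new information about θ. The precise losses studied in the paper may differ. Under this assumption the minimizer is ν*(dθ) ∝ exp{−ℓ(θ)} π(dθ), and choosing ℓ(θ) = −log f(x | θ) for a likelihood f recovers Bayes' theorem. The code discretizes θ on a finite grid. The grid, the Bernoulli data and the function names (`kl_divergence`, `cumulative_loss`, `kl_update`) are hypothetical.

```python
import math


def kl_divergence(nu, pi):
    """Kullback-Leibler divergence D_KL(nu || pi) between two discrete
    distributions on the same grid; assumes pi > 0 wherever nu > 0."""
    return sum(n * math.log(n / p) for n, p in zip(nu, pi) if n > 0)


def cumulative_loss(nu, pi, loss):
    """Assumed cumulative loss: expected information loss under nu plus
    the KL penalty for moving away from the prior pi."""
    expected_loss = sum(n * l for n, l in zip(nu, loss))
    return expected_loss + kl_divergence(nu, pi)


def kl_update(pi, loss):
    """Minimizer of cumulative_loss over nu: nu(theta) proportional to
    pi(theta) * exp(-loss(theta)). Subtracting the smallest loss before
    exponentiating avoids underflow and cancels in the normalization."""
    shift = min(loss)
    weights = [p * math.exp(-(l - shift)) for p, l in zip(pi, loss)]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    # Hypothetical example: grid for a Bernoulli success probability,
    # uniform prior, and 7 successes observed in 10 trials.
    theta = [0.1, 0.3, 0.5, 0.7, 0.9]
    prior = [1 / len(theta)] * len(theta)
    k, n = 7, 10
    # Information enters through the negative log-likelihood, which
    # makes kl_update coincide with Bayes' theorem on this grid.
    loss = [-(k * math.log(t) + (n - k) * math.log(1 - t)) for t in theta]
    posterior = kl_update(prior, loss)
    for t, w in zip(theta, posterior):
        print(f"theta={t:.1f}  posterior={w:.4f}")
    print("cumulative loss at minimizer:", cumulative_loss(posterior, prior, loss))
```

At the minimizer the cumulative loss equals −log ∑ π(θ) exp{−ℓ(θ)}, and any other ν exceeds it by exactly D_KL(ν ‖ ν*). This is why the exponential tilting of the prior is the unique solution under the assumed loss.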

About this article

Cite this article

Bissiri, P.G., Walker, S.G. Converting information into probability measures with the Kullback–Leibler divergence. Ann Inst Stat Math 64, 1139–1160 (2012). https://doi.org/10.1007/s10463-012-0350-4

DOI: https://doi.org/10.1007/s10463-012-0350-4