Abstract

Uncovering the mechanisms of physics is driving a new paradigm in artificial intelligence (AI) discovery. Today, physics, through its data, knowledge, priors, and laws, helps us understand AI across a wide range of scales of matter, energy, and space-time. At the same time, AI draws on and incorporates the knowledge and laws of physics to advance its own development. We call this new paradigm, in which physical science inspires AI, the physical science of artificial intelligence (PhysicsScience4AI, PS4AI). Although AI has become a driving force in many fields, deep learning still exhibits a “black box” phenomenon that is difficult to explain. This article briefly reviews the connections between AI and the relevant physics disciplines (classical mechanics, electromagnetism, statistical physics, and quantum mechanics). It focuses on the mechanisms of these disciplines and how they inspire the deep learning paradigm, and briefly introduces related work on how AI solves physics problems. PS4AI is a new research field. At the end of the article, we summarize the challenges facing the new physics-inspired AI paradigm and look ahead to the next generation of AI technology. This article aims to provide a brief review of research on physics-inspired deep learning algorithms and to stimulate future research and exploration by elucidating recent advances in physics.

1 Introduction

Artificial intelligence encompasses a wide range of algorithms (Yang et al. 2023; LeCun et al. 1998; Krizhevsky et al. 2012; He et al. 2016) and modeling tools (Sutskever et al. 2014) for large-scale data processing tasks. The emergence of massive data and deep neural networks has provided elegant solutions in many fields, and the academic community has begun to apply AI to traditional disciplines. The objective is to advance AI while expanding the possibilities of traditional analytical modeling (Hsieh 2009; Ivezić et al. 2019; Karpatne et al. 2017, 2018; Kutz 2017; Reichstein et al. 2019). Realizing general artificial intelligence is a long-standing human goal. Although AI has made considerable progress over the past few decades, achieving general machine intelligence and brain-like intelligence remains difficult Jiao et al. (2016).

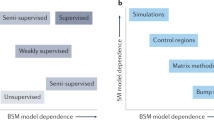

At present, researchers are beginning to explore the field of “AI + Physics” (Muther et al. 2023; Mehta et al. 2019). Current research has three objectives:

- use findings from physical science and AI to investigate the principles governing learning in the brain;
- use AI to facilitate the advancement of physics;
- use physical science to inform the development of novel AI paradigms.

We selectively review research at the intersection of AI and the physical sciences. This covers AI concepts and algorithms driven by physical insights, applications of AI technology in multiple fields of physics, and the interplay between the two fields (Zdeborová 2020; Meng et al. 2022).

Physics Physics is a natural science that plays a heuristic role in our understanding of the objective world. It studies matter, energy, space, and time, especially their properties and the relationships among them. Broadly speaking, physics explores and analyzes natural phenomena to uncover the rules that govern them. Engel (2001) describes the theoretical progress made in analyzing neural networks with the methods of statistical physics. Over a long history, physical knowledge (a priori knowledge) has been collected, verified, and integrated into practical theories. It forms a simplified induction of the laws of nature and human behavior that underpins many important disciplines and engineering applications. If such prior knowledge is properly combined with AI, richer and more effective feature information can be extracted from scarce data sets. This helps improve the generalization ability and interpretability of network models Meng et al. (2022).

Artificial intelligence Artificial intelligence is the discipline that researches and develops theories and application systems for simulating and extending the intelligence of the human brain. Its purpose is to enable machines to simulate intelligent human behavior (such as learning, reasoning, thinking, and planning) Widrow and Lehr (1990), so that machines become intelligent and can complete “complex work”. Today, AI is widely valued in computing and is an interdisciplinary subject. It is used for modeling in machine vision (Krizhevsky et al. 2012; Heisele et al. 2002), natural language processing Devlin et al. (2018), psychology (Rogers and Mcclelland 2004; Saxe et al. 2018), pedagogy Piech et al. (2015), and other disciplines Khammash (2022). The convergence of the physical sciences and deep learning offers exciting prospects for theoretical science, providing valuable insight into the learning and computational capabilities of deep networks.

Relationship Physics, as a simplified induction of nature, promotes brain-like research in artificial intelligence. The brain’s perception of “experience” is close to the so-called “physical sense”, and physics opens new avenues and provides new tools for current AI research McCormick (2022). To some extent, AI models and physical models can both share information and predict the behavior of complex systems Tiberi et al. (2022). That is, they share certain methods and goals but differ in how they are implemented. Physics seeks to understand natural mechanisms, using prior knowledge Niyogi et al. (1998), regularity, and inductive reasoning to inform models. Model-agnostic AI, by contrast, provides “intelligence” Werner (2013) by extracting it from data.

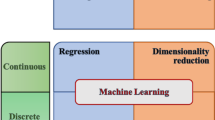

Main contributions Based on these analyses, this study aims to provide a comprehensive review and classification of physics-inspired deep learning (Fig. 1). It also summarizes potential research directions and open questions that urgently need to be addressed. The main contributions of this paper are as follows:

1. Comprehensiveness and readability. This article comprehensively reviews more than 400 works on physical science ideas and physics-inspired deep learning algorithms. It organizes existing physics-inspired learning and modeling research into four areas: classical mechanics, electromagnetism, statistical physics, and quantum mechanics.
2. Inspirational. The article summarizes the latest progress in using AI to solve physical science problems. Finally, it analyzes the outlook for, and implications of, the interplay between AI and physics for the next generation of deep learning algorithms.
3. In-depth analysis. This article reviews open questions that need to be addressed to facilitate future research and exploration.

In this review, we attempt to give a coherent account of the different intersections of deep learning and physics. The rest of the paper is organized as follows:

- Chapter 2 presents AI algorithms inspired by classical mechanics and shows how AI can solve physical problems.
- Chapter 3 briefly reviews electromagnetism-inspired AI algorithms and applications of AI in electromagnetism.
- Chapters 4 and 5 give an overview of AI algorithms and applications inspired by statistical physics and quantum mechanics, respectively.
- Chapter 6 explores potential applications and current challenges at the intersection of AI and physics.
- Chapter 7 concludes the paper.

2 Deep neural network paradigms inspired by classical mechanics

In this section, we briefly introduce manifolds, graphs, and fluid dynamics in geometric deep learning. We also cover the basics of Hamiltonian/Lagrangian networks and differential equation solvers in dynamic neural network systems. We then describe related work inspired by these ideas and, finally, introduce graph neural network methods for solving physical problems. Table 1 summarizes the structure of this section and gives an overview of representative methods.

2.1 Geometric deep learning

Deep learning can exploit the symmetries of the physical world, that is, the invariance of the laws of physics under various transformations. The invariance of a physical law yields a quantity that does not change, called a conserved quantity or invariant. For example, the universe obeys translational symmetry (conservation of momentum) and rotational symmetry (conservation of angular momentum). Momentum conservation expresses the homogeneity of space, which group theory formalizes as translational symmetry: after an object is translated in space, its physical motion and the associated physical laws remain unchanged. In 1918, Emmy Noether proved Noether’s theorem, which states that every continuous symmetry corresponds to a conservation law. For the relevant expressions, see references (Torres 2003, 2004; Frederico and Torres 2007) and the references therein. Related applications are shown in Fig. 2.
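The symmetry-to-conservation link can be illustrated with a small numerical toy chosen for this purpose; it is not taken from the cited works. Two particles interact through a spring potential that depends only on their separation \(x_1 - x_2\), so the system is unchanged by a common translation. The masses, spring constant, initial state, and step size are arbitrary assumptions. Under velocity Verlet integration the pairwise forces cancel in every update, so total momentum stays constant up to floating-point round-off.

```python
import numpy as np

def pair_forces(x1, x2, k=2.0, rest=1.0):
    """Forces from the spring potential V = k/2 * (|x1 - x2| - rest)**2.

    V depends only on the separation x1 - x2, so it is translation invariant.
    """
    d = x1 - x2
    r = np.linalg.norm(d)
    f1 = -k * (r - rest) * d / r
    return f1, -f1  # equal and opposite forces

def simulate(steps=1000, dt=0.01, m1=1.0, m2=3.0):
    """Velocity Verlet integration; returns the total momentum after every step."""
    x1, x2 = np.array([0.0, 0.0]), np.array([1.5, 0.2])
    v1, v2 = np.array([0.3, -0.1]), np.array([-0.2, 0.4])
    f1, f2 = pair_forces(x1, x2)
    momenta = []
    for _ in range(steps):
        v1, v2 = v1 + 0.5 * dt * f1 / m1, v2 + 0.5 * dt * f2 / m2  # half kick
        x1, x2 = x1 + dt * v1, x2 + dt * v2                         # drift
        f1, f2 = pair_forces(x1, x2)
        v1, v2 = v1 + 0.5 * dt * f1 / m1, v2 + 0.5 * dt * f2 / m2  # half kick
        momenta.append(m1 * v1 + m2 * v2)
    return np.array(momenta)

p = simulate()
print(p[0], p[-1])  # total momentum is unchanged up to round-off
```

Replacing the pair potential with one that depends on \(x_1\) alone (for example, a fixed external wall) breaks the translation symmetry, and the momentum is then no longer conserved.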

The translation invariance, locality, and compositionality of convolutional neural networks (CNNs) make them naturally suited to Euclidean-structured data such as images. However, much real-world data is non-Euclidean, and geometric deep learning (GDL) Gerken et al. (2023) emerged to handle it. GDL studies, from the perspective of symmetry and invariance, how to design deep learning frameworks for non-traditional, non-Euclidean data structures Michael (2017). The term was first proposed by Michael Bronstein in 2016. GDL attempts to generalize (structured) deep networks to non-Euclidean domains such as graphs and manifolds. These data structures are shown in Fig. 3.
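The translation property of convolution can be checked directly: shifting the input to a convolution shifts its output by the same amount (strictly, this is equivariance). The sketch below assumes periodic (circular) padding so that the identity holds exactly at the borders. CNNs with zero padding are only approximately equivariant near the edges.

```python
import numpy as np

def circular_conv(x, k):
    """1-D circular cross-correlation, as in a CNN layer with periodic padding."""
    n = len(x)
    return np.array([sum(k[j] * x[(i + j) % n] for j in range(len(k))) for i in range(n)])

rng = np.random.default_rng(0)
x = rng.normal(size=16)   # a 1-D "image"
k = rng.normal(size=3)    # a convolution kernel
shift = 5

lhs = circular_conv(np.roll(x, shift), k)   # shift, then convolve
rhs = np.roll(circular_conv(x, k), shift)   # convolve, then shift
print(np.allclose(lhs, rhs))  # True: convolution commutes with translation
```

On graphs and manifolds there is no global shift operator, which is why GDL must redefine the symmetry that a layer should respect.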

2.1.1 Manifold neural networks

A manifold is a space that locally resembles Euclidean space. In mathematics it is used to describe geometric shapes, such as the surfaces of objects whose spatial coordinates are returned by radar scans. A Riemannian manifold is a differentiable manifold equipped with a Riemannian metric, a concept from differential geometry. Simply put, a Riemannian manifold is a smooth manifold endowed with a smooth, symmetric, positive-definite second-order tensor field. In physics, for example, the phase space of classical mechanics is a manifold. The four-dimensional pseudo-Riemannian manifold that models space-time in general relativity is also a manifold.

Manifold data often carry richer spatial information, as in magnetoencephalography on a sphere Defferrard et al. (2020) and human scan data (Armeni et al. 2017; Bogo et al. 2014), which contain local structure and spatial symmetries Meng et al. (2022). New types of manifold convolution have been introduced for such physics-informed manifold data (Masci et al. 2015; Monti et al. 2017; Boscaini et al. 2016; Cohen et al. 2019; De Haan et al. 2020). They compensate for the inability of conventional CNNs to fully exploit this spatial information.

Manifold learning is a large class of manifold-based frameworks. Recovering low-dimensional structure from high-dimensional data is often called manifold learning or nonlinear dimensionality reduction, and it is a form of unsupervised learning. Examples include:

1. Multidimensional scaling (MDS) Tenenbaum et al. (2000) focuses on preserving “similarity” information (usually Euclidean distance) from the high-dimensional space; classical MDS is itself a linear dimensionality reduction method.
2. Locally linear embedding (LLE) Roweis and Saul (2000) preserves the local linear structure of the samples during dimensionality reduction, giving up a globally optimal reduction over all samples.
3. t-distributed stochastic neighbor embedding (t-SNE) Maaten and Hinton (2008) uses the heavy-tailed Student t distribution to alleviate the crowding problem and ease optimization. It is suitable only for visualization and cannot perform feature extraction.
4. Uniform Manifold Approximation and Projection (UMAP) McInnes et al. (2018) is built on the theoretical framework of Riemannian geometry and algebraic topology. Like t-SNE, UMAP is suitable only for visualization, and the results of both methods depend strongly on the choice of initialization (Kobak and Linderman 2019, 2021).
5. Spectral embedding, such as Laplacian eigenmaps Belkin and Niyogi (2003), is a graph-based dimensionality reduction method that models relationships between data points from a local perspective. It aims to keep points that are connected in the graph as close as possible after dimensionality reduction, so that the original data structure is preserved.
6. Diffusion maps Wang (2012) form another nonlinear dimensionality reduction method; they construct the data kernel from a diffusion process.
7. The deep model of Hadsell et al. (2006) learns a globally coherent nonlinear function (an invariant mapping) that maps the data evenly onto an output manifold for dimensionality reduction.

More recently, Cho et al. (2024) proposed a Gaussian manifold variational autoencoder (GM-VAE) that addresses limitations previously reported for hyperbolic VAEs. Katsman et al. (2024) studied ResNet and showed how to extend its structure to general Riemannian manifolds in a geometrically principled way.
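Item (1) can be made concrete with a minimal sketch of classical (Torgerson) MDS, which recovers coordinates whose pairwise Euclidean distances match a given distance matrix. Isomap-style methods feed geodesic rather than straight-line distances into the same step. This toy uses points lying on a plane embedded in 3-D, so the straight-line distances are already the intrinsic ones; the point count and random embedding are assumptions.

```python
import numpy as np

def classical_mds(D, dim):
    """Classical (Torgerson) MDS: embed points given pairwise Euclidean distances D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n          # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                  # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(B)         # eigenvalues in ascending order
    idx = np.argsort(eigvals)[::-1][:dim]        # keep the largest eigenvalues
    scale = np.sqrt(np.maximum(eigvals[idx], 0.0))
    return eigvecs[:, idx] * scale               # n x dim coordinates

rng = np.random.default_rng(1)
Y = rng.normal(size=(20, 2))                          # latent 2-D points
A = np.linalg.qr(rng.normal(size=(3, 3)))[0][:, :2]   # orthonormal 3x2 embedding map
X = Y @ A.T                                           # points on a plane in 3-D
D = np.linalg.norm(X[:, None] - X[None, :], axis=-1)

Z = classical_mds(D, dim=2)
D_rec = np.linalg.norm(Z[:, None] - Z[None, :], axis=-1)
print(np.abs(D - D_rec).max())  # tiny: pairwise distances are preserved
```

The recovered coordinates match the originals only up to a rotation or reflection, because distances alone cannot fix the orientation.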

2.1.2 Graph neural networks

Graphs are another type of non-Euclidean geometric data. A graph is network-structured data composed of nodes and edges, such as a social network. The concept of the graph neural network (GNN) was first proposed by Gori et al. (2005) to extend existing neural networks to more types of graph data, and was further developed by Scarselli et al. (2008). In 2020, Wu et al. (2020) proposed a new taxonomy that gives a comprehensive overview of GNNs in data mining and machine learning. Zhou et al. (2020) proposed a general design pipeline for GNN models and systematically classified and reviewed their applications. Defferrard et al. (2016) formulated CNNs in the context of spectral graph theory, extending convolution and pooling operations to graph-structured data. The input is a graph together with a signal defined on it, and the output is computed on the nodes of the graph.

The graph convolutional network (GCN) Kipf and Welling (2016) is a landmark GNN model. Designed for semi-supervised learning, it approximates the convolution kernel of the original graph convolution operation, which simplifies and improves the original graph convolution algorithm, as shown in Fig. 4. For applications of GCNs to recommender systems, see Monti et al. (2017). GCNs are the basis of many more complex GNN models, including autoencoder-based models, generative models, and spatiotemporal networks. Inspired by physics, Schuetz et al. (2022) used graph neural networks to solve combinatorial optimization problems in an article published in Nature Machine Intelligence in 2022. To reduce the heavy computational cost of GCNs, Xu et al. (2019) proposed the graph wavelet neural network (GWNN), which uses the graph wavelet transform.

a Schematic depiction of GCN for semi-supervised learning with C input channels and F feature maps in the output layer. The graph structure (edges shown as black lines) is shared over layers, labels are denoted by \({Y_i}\). b Visualization of hidden layer activations of a two-layer GCN. Colors denote document class Kipf and Welling (2016)
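The propagation rule behind this figure fits in a few lines. The sketch below follows the layer-wise rule of Kipf and Welling (2016), \(H' = \mathrm{ReLU}(\tilde D^{-1/2}\tilde A\tilde D^{-1/2} H W)\) with \(\tilde A = A + I\). The 4-node path graph, feature sizes, and random weights are illustrative assumptions, and training is omitted.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One GCN layer: H' = ReLU(D^{-1/2} (A + I) D^{-1/2} H W)."""
    A_tilde = A + np.eye(A.shape[0])              # add self-loops
    d = A_tilde.sum(axis=1)                       # degrees (all >= 1)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d))
    A_hat = D_inv_sqrt @ A_tilde @ D_inv_sqrt     # symmetric normalization
    return np.maximum(A_hat @ H @ W, 0.0)         # aggregate neighbors, transform, ReLU

# A 4-node path graph 0-1-2-3 with 3 input features and 2 output channels
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
H = rng.normal(size=(4, 3))
W = rng.normal(size=(3, 2))
H_next = gcn_layer(A, H, W)
print(H_next.shape)  # (4, 2)
```

Relabeling the nodes (permuting the rows and columns of A and the rows of H) simply permutes the rows of the output. This permutation equivariance is the graph analogue of the translation equivariance of CNNs.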

The graph attention network (GAT) is a spatial-domain graph convolutional network that brings the attention mechanism from natural language processing to learning on graph-structured data. Attention determines the weights of each node’s neighbors, yielding more effective feature representations Veličković et al. (2017). The model suits both inductive and transductive (graph-based) learning problems. A related graph attention model uses a recurrent neural network to solve graph classification. It processes graph information by adaptively visiting a sequence of important nodes.
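The neighbor weighting of GAT can be sketched for a single attention head. Following the formulation of Veličković et al. (2017), the score for an edge is \(e_{ij} = \mathrm{LeakyReLU}(a^\top[W h_i \,\|\, W h_j])\). It is then normalized with a softmax over the neighbors of node \(i\), including \(i\) itself. The graph, dimensions, and random parameters below are assumptions; multi-head attention and training are omitted.

```python
import numpy as np

def gat_attention(A, H, W, a, slope=0.2):
    """Attention coefficients of a single GAT head.

    e_ij = LeakyReLU(a^T [W h_i || W h_j]), normalized by a softmax over the
    neighbors of i (self-loops included); non-neighbors get weight 0.
    """
    Wh = H @ W                                   # (n, F') transformed features
    F = Wh.shape[1]
    src = Wh @ a[:F]                             # part of the score from node i
    dst = Wh @ a[F:]                             # part of the score from node j
    e = src[:, None] + dst[None, :]              # e[i, j] before the nonlinearity
    e = np.where(e > 0, e, slope * e)            # LeakyReLU
    mask = (A + np.eye(A.shape[0])) > 0          # attend only to neighbors and self
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)         # numerically stable softmax
    alpha = np.exp(e)
    return alpha / alpha.sum(axis=1, keepdims=True)

A = np.array([[0, 1, 1], [1, 0, 0], [1, 0, 0]], dtype=float)
rng = np.random.default_rng(0)
H, W, a = rng.normal(size=(3, 4)), rng.normal(size=(4, 2)), rng.normal(size=4)
alpha = gat_attention(A, H, W, a)
H_out = alpha @ (H @ W)                          # attention-weighted aggregation
print(alpha.round(3))
```

Unlike the fixed normalization in a GCN layer, these weights depend on the node features, so different neighbors can contribute unequally.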

Graph autoencoders are a class of graph embedding methods that use a neural network structure to represent the vertices of a graph as low-dimensional vectors. GCN-based autoencoder methods currently include GAE Kipf and Welling (2016) and ARGA Pan et al. (2018). Other variants include NetRA Yu et al. (2018), DNGR Cao et al. (2016), and DRNE Ke et al. (2018).

A graph generative network aims to generate new graphs given a set of observed graphs. MolGAN Lee et al. (2021) integrates relational GCNs, a modified GAN, and a reinforcement learning objective to generate graphs with desired properties. DGMG Li et al. (2018) uses spatial graph convolutional networks to obtain hidden representations of existing graphs. It suits expressive, flexible relational data structures, for example in natural language generation and pharmaceutical applications. GRNN You et al. (2018) is a deep generative model of graphs built from two levels of recurrent neural networks.

Beyond the classic models above, researchers have studied GCNs further. Examples include GLCN Jiang et al. (2018), RGDCN Brockschmidt (2019), GIC Jiang et al. (2019), HA-GCN Zhou and Li (2017), HGCN Liu et al. (2019), BGCN Zhang et al. (2018), SAGNN Zhang et al. (2019), DVNE Zhu et al. (2018), SDNE Wang et al. (2016), GC-MC Berg et al. (2017), Graph2Gauss Bojchevski and Günnemann (2017), and GMNN Qu et al. (2019). The DeepMind team has also begun to pay attention to deep learning on graphs. In 2019, the Megvii Research Institute proposed GeoConv for modeling the geometric structure between points, together with Geo-CNN, a hierarchical feature extraction framework Lan et al. (2019). Hernández et al. (2022) proposed a method for predicting the time evolution of dissipative dynamical systems with graph neural networks. Yao et al. (2024) introduced the Federated Graph Convolutional Network (FedGCN) algorithm for semi-supervised node classification, which converges quickly and has low communication cost.

2.1.3 Fluid dynamics neural networks

Computational fluid dynamics (CFD) is the product of combining modern fluid mechanics with numerical mathematics and computer science. It solves the governing equations of fluid mechanics with computers and numerical methods in order to simulate and analyze fluid mechanics problems.
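Solving a governing equation numerically can be illustrated with a toy example. The sketch below advances the 1-D advection-diffusion equation \(c_t + u\,c_x = D\,c_{xx}\) on a periodic grid. It uses an explicit first-order upwind scheme for advection and central differences for diffusion. The grid size, velocity \(u\), diffusivity \(D\), and time step are assumptions chosen to satisfy the usual explicit stability limits.

```python
import numpy as np

def step(c, u, D, dx, dt):
    """One explicit step of c_t + u c_x = D c_xx on a periodic grid (u > 0, so upwind uses the left neighbor)."""
    c_left, c_right = np.roll(c, 1), np.roll(c, -1)
    advection = -u * (c - c_left) / dx
    diffusion = D * (c_right - 2 * c + c_left) / dx**2
    return c + dt * (advection + diffusion)

n, length = 200, 1.0
dx = length / n
x = np.arange(n) * dx
c = np.exp(-((x - 0.3) / 0.05) ** 2)          # initial Gaussian blob of tracer
u, D = 1.0, 1e-3
dt = 0.4 * min(dx / u, dx**2 / (2 * D))       # respect the CFL and diffusion limits

mass0 = c.sum() * dx
for _ in range(250):
    c = step(c, u, D, dx, dt)
print(mass0, c.sum() * dx)                     # total tracer mass is conserved
```

With these settings each update is a convex combination of neighboring values, so the scheme stays non-negative. The first-order upwind term also adds some numerical diffusion, which is one of the accuracy trade-offs that CFD methods must manage.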

Raissi et al. (2020) proposed Hidden Fluid Mechanics (HFM), a physics-informed neural network framework published in Science, to solve partial differential equations. In Raissi et al. (2020), the fluid motion is governed by the transport equation, the momentum equations, and the continuity equation. These equations (knowledge of fluid mechanics) are encoded into the neural network, and a feasible solution is obtained by combining the network’s fit to the data with the residuals of the governing equations, as shown in Fig. 5. The HFM framework is not restricted by boundary or initial conditions. In predicting fluid physics data, it combines the strong versatility of machine learning with the strong problem specificity of computational fluid dynamics.

A physics-uninformed neural network (left) takes the input variables t, x, and y and outputs c, u, v, and p. By applying automatic differentiation on the output variables, we encode the transport and NS equations in the physics-informed neural networks \({e_i}\), i = 1,..., 4 (right) Raissi et al. (2020)
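The core idea of adding the residual of the governing equation to the training loss can be shown with a deliberately tiny toy; this is not the HFM implementation. A hypothetical one-parameter family \(c(t,x) = e^{-kt}\sin x\) replaces the network, and the 1-D diffusion equation \(c_t = D\,c_{xx}\) replaces the Navier-Stokes and transport equations. Derivatives are written out analytically instead of being computed by automatic differentiation. The value of \(D\), the collocation points, and the grid search over \(k\) are all assumptions. The measurements are taken only at \(t = 0\), so they cannot identify \(k\); the physics term does.

```python
import numpy as np

D_TRUE = 0.5                                   # assumed diffusivity in c_t = D c_xx

def model(k, t, x):
    """Hypothetical one-parameter surrogate c(t, x) = exp(-k t) sin(x) standing in for a network."""
    return np.exp(-k * t) * np.sin(x)

def pde_residual(k, t, x):
    """Residual c_t - D c_xx of the surrogate, with derivatives written out analytically."""
    c_t = -k * model(k, t, x)
    c_xx = -model(k, t, x)
    return c_t - D_TRUE * c_xx

# Sparse "measurements" only at t = 0: they are fitted equally well for every k
x_obs = np.array([0.5, 1.0, 2.0])
c_obs = np.sin(x_obs)

# Collocation points where the governing equation is enforced
rng = np.random.default_rng(0)
t_col, x_col = rng.uniform(0, 1, 100), rng.uniform(0, np.pi, 100)

def loss(k, lam=1.0):
    data = np.mean((model(k, 0.0, x_obs) - c_obs) ** 2)
    physics = np.mean(pde_residual(k, t_col, x_col) ** 2)
    return data + lam * physics

ks = np.linspace(0.0, 1.0, 101)
best_k = ks[np.argmin([loss(k) for k in ks])]
print(best_k)  # close to D_TRUE: the residual term identifies the decay rate
```

In a real physics-informed network, \(k\) would be replaced by all the network weights, the grid search by gradient descent, and the hand-written derivatives by automatic differentiation, as the Fig. 5 caption describes.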

Wessels et al. (2020) proposed the Neural Particle Method (NPM), a computational fluid dynamics method based on an updated Lagrangian physics-informed neural network. NPM remains stable and accurate even when the discrete point locations are highly irregular. Zhang et al. (2020) proposed an end-to-end deep neural network that automatically generates fluid animations from Lagrangian fluid simulation data. Guan et al. (2022) proposed the “NeuroFluid” model, which uses differentiable rendering based on neural implicit fields. It treats fluid physics simulation as the inverse problem of rendering 3D fluid scenes and thereby achieves fluid dynamics inversion.

2.2 Dynamic neural network systems

Nonlinear functions can be expressed by dynamical systems or by neural networks. In a neural network, these nonlinear functions act as information waves propagating between layers. Representing real-world physical systems with neural networks would therefore greatly expand the possibilities for analyzing those systems with AI. Neural networks are usually trained on large amounts of data, adjusting their weights and biases to minimize the difference between the actual and expected outputs and so approximate the ground truth. In this way they imitate how neurons in the human brain make judgments. However, this training approach suffers from “chaos blindness”: the AI system cannot respond to chaos (or abrupt changes) in the system.

2.2.1 Hamiltonian/Lagrangian neural networks

The brachistochrone (curve of fastest descent) problem posed by the Swiss mathematician Johann Bernoulli made the calculus of variations an essential tool for solving extremum problems in mathematical physics. With the calculus of variations, the variational principle of a physical problem (or a problem in another discipline) becomes the problem of finding an extremum (or stationary value) of a functional. The best-known variational principle in mechanics is the principle of least action Feynman (2005), which Carl Jacobi called the mother of analytical mechanics. Applied to the action of a mechanical system, the principle yields the system’s equations of motion. Its study led to the Lagrangian and Hamiltonian formulations of classical mechanics.

Hamiltonian neural networks Hamilton’s principle is a variational principle for holonomic dynamical systems, proposed by Hamilton in 1834. The Hamiltonian, which for many systems equals the total energy (the sum of kinetic and potential energy), embodies complete information about a dynamical physical system. Hamilton’s principle is often used to build dynamic models of systems with continuous mass and stiffness distributions (elastic systems). The Hamiltonian is the “special seasoning” that gives neural networks the ability to learn both order and chaos, so that they capture underlying dynamics in a way that conventional networks cannot. This is a first step toward physics-aware neural networks. The NAIL team incorporated Hamiltonian structure into a neural network and applied it to the well-known Hénon-Heiles model of stellar and molecular dynamics Choudhary et al. (2020). The network accurately predicted system dynamics that move between order and chaos.

An unstructured neural network, such as a multilayer perceptron (MLP), can be used to parameterize the Hamiltonian. In 2019, Greydanus et al. (2019) proposed Hamiltonian neural networks (HNNs). HNNs learn basic physical laws (such as the Hamiltonian of a mass-spring system) and accurately conserve a quantity analogous to the total energy. In the same year, Toth et al. (2019) proposed Hamiltonian generative networks (HGNs). They used Hamilton’s principle (the variational method) to turn the optimization problem into an extremum (or stationary value) problem for a functional. Han et al. (2021) introduce a class of HNNs, constrained by Hamilton’s equations of motion, that can adapt to nonlinear physical systems. A time-series-based neural network trained on a small number of bifurcation parameter values of the target Hamiltonian system can then predict the dynamical states at other parameter values. Dierkes and Flaßkamp (2021) used HNNs to learn the total energy of the system explicitly, training the network to learn the equations of motion and so compensate for missing physical rules.
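These HNN variants share one mechanism: the network outputs a scalar \(H_\theta(q,p)\), and the dynamics follow from its gradients through Hamilton’s equations, \(\dot q = \partial H/\partial p\) and \(\dot p = -\partial H/\partial q\). In the sketch below, two substitutions keep the example dependency-free. A hand-written mass-spring Hamiltonian stands in for the trained MLP, and central finite differences stand in for automatic differentiation. A symplectic leapfrog integrator then keeps the energy error bounded over long rollouts.

```python
import numpy as np

def H(q, p, k=1.0, m=1.0):
    """Stand-in for a learned Hamiltonian H_theta(q, p): a mass-spring system."""
    return p**2 / (2 * m) + k * q**2 / 2

def grad(f, q, p, eps=1e-6):
    """Central-difference partial derivatives (dH/dq, dH/dp)."""
    dq = (f(q + eps, p) - f(q - eps, p)) / (2 * eps)
    dp = (f(q, p + eps) - f(q, p - eps)) / (2 * eps)
    return dq, dp

def leapfrog(q, p, dt, steps):
    """Integrate q' = dH/dp, p' = -dH/dq with a symplectic kick-drift-kick scheme."""
    traj = [(q, p)]
    for _ in range(steps):
        p = p - 0.5 * dt * grad(H, q, p)[0]   # half kick
        q = q + dt * grad(H, q, p)[1]         # drift
        p = p - 0.5 * dt * grad(H, q, p)[0]   # half kick
        traj.append((q, p))
    return np.array(traj)

traj = leapfrog(q=1.0, p=0.0, dt=0.1, steps=1000)
energy = H(traj[:, 0], traj[:, 1])
print(energy.min(), energy.max())  # stays close to the initial value 0.5
```

Because the vector field is derived from a single scalar, the learned dynamics inherit energy conservation by construction. A plain MLP that outputs \((\dot q, \dot p)\) directly has no such guarantee.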

In the field of neural networks applied to chaotic dynamic systems, the work by Haber and Ruthotto (2017) introduces a neural network model called “Stable Neural Networks,” which is inspired by the differential equations of the Hamiltonian dynamical system. This model aims to address the issue of susceptibility to input data disturbance or noise that can affect the performance of neural networks obtained through the discretization of chaotic dynamic systems.

Another relevant paper, by Massaroli et al. (2019), offers a novel perspective on neural network optimization that specifically tackles escaping saddle points. Because the optimization problem in neural network training is non-convex and high-dimensional, converging to a minimum of the loss function is challenging. The proposed framework guarantees convergence to a minimum of the loss function and avoids the saddle point problem. It also applies to neural networks based on physical systems and port-Hamiltonian (pH) control, improving learning efficiency and increasing the likelihood of finding the global minimum of the objective function.

Other methods for identifying Hamiltonian dynamical systems (HDS) with neural networks are discussed by Lin et al. (2017). These methods contribute to the exploration of neural network architectures and techniques for modeling and understanding HDS. Zhao et al. (2024) used conservative Hamiltonian neural flows to construct a GNN that is robust to adversarial attacks, greatly improving its robustness to adversarial perturbations.

Overall, these research works highlight important approaches and perspectives in applying neural networks to chaotic dynamic systems, addressing challenges such as input data disturbance, saddle point problems, and optimization difficulties.

Lagrangian neural networks In analytical mechanics, the Lagrangian is a function that describes the dynamical state of an entire physical system and represents the properties of the system itself. Suppose the system is symmetric, for example under spatial translation. Then the Lagrangian is unchanged after the system is translated, and momentum conservation follows from the variational principle.

Even if the training data satisfy all physical laws, a trained artificial neural network can still make non-physical predictions. In some scenarios rigid-body kinematics does not even apply, or the physics is difficult to compute with physical formulas. Lutter et al. (2019) therefore represented the mass matrix in the Euler-Lagrange equation with a neural network, so that the relationship between the mass distribution and the robot pose can be estimated. These deep Lagrangian networks learn the equations of motion of mechanical systems and train faster than traditional feedforward neural networks. They also produce more physically plausible predictions and are more robust when predicting new trajectories.

To enhance the sparsity and stability of the algorithm, the work Cranmer et al. (2020) proposes a new sparse penalty function based on the SCAD (smoothly clipped absolute deviation) penalty Fan and Li (2001) and adds it to a Lagrangian constrained neural network. This overcomes shortcomings of traditional blind source separation and independent component analysis methods. It effectively avoids ill-conditioned equations and improves the sparsity, stability, and accuracy of blind image restoration. Because ordinary neural networks do not conserve energy, they have difficulty modeling dynamics over long periods of time. Cranmer et al. (2020) used neural networks to learn arbitrary Lagrangians, inducing strong physical priors, as shown in Fig. 6. Xiao et al. (2024) introduce an extension of the Lagrangian neural network (LNN), the generalized Lagrangian neural network, which is tailored to non-conservative systems.

Cranmer et al. (2020) propose a method to address the challenge of modeling the dynamics of physical systems using neural networks. They demonstrate that neural networks struggle to accurately represent these dynamics over long time periods due to their inability to conserve energy. To overcome this limitation, the authors introduce a technique for learning arbitrary Lagrangians with neural networks, which incorporates a strong physical prior on the learned dynamics. By leveraging the principles of Lagrangian mechanics, the neural networks are able to better capture the underlying physics of the system. This approach improves the accuracy of the neural network model (shown in blue) compared to traditional neural networks (shown in red), providing a promising avenue for enhancing the modeling of complex dynamical systems
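Cranmer et al. (2020) obtain the dynamics from a learned Lagrangian \(\mathcal{L}(q,\dot q)\) by solving the Euler-Lagrange equation for the acceleration:
\[
\ddot q = (\nabla_{\dot q}\nabla_{\dot q}^{\top}\mathcal{L})^{-1}\big[\nabla_q \mathcal{L} - (\nabla_{\dot q}\nabla_{q}^{\top}\mathcal{L})\,\dot q\big].
\]
The sketch below evaluates this expression for a single degree of freedom under two assumptions: a known pendulum Lagrangian stands in for the network, and finite differences stand in for automatic differentiation. The result should reproduce \(\ddot\theta = -(g/l)\sin\theta\).

```python
import numpy as np

G, LENGTH, MASS = 9.81, 1.0, 1.0   # assumed pendulum parameters

def lagrangian(q, qd):
    """Stand-in for a learned Lagrangian: pendulum, L = T - V."""
    T = 0.5 * MASS * LENGTH**2 * qd**2
    V = -MASS * G * LENGTH * np.cos(q)
    return T - V

def acceleration(L, q, qd, h=1e-4):
    """Solve the Euler-Lagrange equation for q'' (one degree of freedom) via finite differences."""
    dL_dq = (L(q + h, qd) - L(q - h, qd)) / (2 * h)
    d2L_dqd2 = (L(q, qd + h) - 2 * L(q, qd) + L(q, qd - h)) / h**2
    d2L_dq_dqd = (L(q + h, qd + h) - L(q + h, qd - h)
                  - L(q - h, qd + h) + L(q - h, qd - h)) / (4 * h**2)
    return (dL_dq - d2L_dq_dqd * qd) / d2L_dqd2

q, qd = 0.7, 1.3
print(acceleration(lagrangian, q, qd), -G / LENGTH * np.sin(q))  # the two values agree closely
```

With several degrees of freedom the scalar division becomes a linear solve with the Hessian \(\nabla_{\dot q}\nabla_{\dot q}^{\top}\mathcal{L}\), which must be invertible for the learned Lagrangian to define valid dynamics.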

2.2.2 Neural network differential equation solvers

In physics, because of locality and causality, the fundamental equations are differential equations. Treating neural networks as dynamical differential equations, and designing network architectures with numerical solution algorithms, has therefore become a cutting-edge trend.

Ordinary differential equation neural networks The general neural ODE is as follows:

\[ \frac{\mathrm{d}y(t)}{\mathrm{d}t} = f_\theta\big(t, y(t)\big), \qquad y(0) = y_0, \]

where \({y_0}\) can be a tensor of any dimension, \(\theta\) denotes a vector of learned parameters, and \({f_\theta }\) denotes a neural network.
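The forward pass of a neural ODE is a numerical integration of \(f_\theta\) from \(t = 0\) to a final time. The sketch below uses a fixed-step classical Runge-Kutta (RK4) integrator, and a tiny, randomly initialized two-layer MLP serves as a hypothetical \(f_\theta\). Practical implementations such as Chen et al. (2018) use adaptive solvers and compute gradients with the adjoint method; both are omitted here.

```python
import numpy as np

def rk4_solve(f, y0, t1, steps):
    """Integrate dy/dt = f(t, y) from t = 0 to t = t1 with fixed-step RK4."""
    y, t, h = np.asarray(y0, dtype=float), 0.0, t1 / steps
    for _ in range(steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y

# Hypothetical f_theta: a two-layer MLP with random parameters theta = (W1, b1, W2, b2)
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(16, 2)) * 0.5, np.zeros(16)
W2, b2 = rng.normal(size=(2, 16)) * 0.5, np.zeros(2)

def f_theta(t, y):
    return W2 @ np.tanh(W1 @ y + b1) + b2

y_T = rk4_solve(f_theta, y0=[1.0, 0.0], t1=1.0, steps=50)
print(y_T)  # the neural ODE's output, playing the role of a network's final layer
```

As a sanity check, passing `lambda t, y: -y` as `f` reproduces \(e^{-1}\) to high accuracy. The "depth" of the model is thus set by the solver's step count rather than by a fixed number of layers.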

Neural networks offer powerful function approximation capabilities, while penalty terms help bridge the gap between theory and practice. One application is turbulence modeling. Ling et al. (2016) designed a neural network that approximates the closure relations (the Reynolds stresses) while respecting specific physical invariances. This approach enables the modeling of residuals between theoretical and observed data.

Latent ODEs emerge from this framework when time-varying latent components are incorporated. Rubanova et al. (2019) use latent ODEs to model the dynamics of a simulated hopping robot thrown into the air. Additionally, Du et al. (2020) explore applications of latent ODEs in reinforcement learning.

Another study by Shi and Morris (2021) combines latent ODEs with change-point detection algorithms to model switching dynamical systems. This approach provides a powerful tool for segmenting and understanding complex dynamics with abrupt changes.

In summary, neural networks coupled with penalty terms and latent ODEs offer valuable methods for modeling and simulating various dynamic systems, including turbulence, reinforcement learning, and switching dynamical systems. These approaches bridge the gap between theoretical principles and practical applications, opening up new possibilities in understanding and predicting complex phenomena.

Euler’s method: The main idea of Euler’s method is to use the first derivative at a point to linearly approximate the value at the next step. Depending on where the derivative is evaluated, there is the forward (explicit) Euler method, which uses the derivative at the current point, and the backward (implicit) Euler method, which uses the derivative at the next point. The deep residual network (ResNet) He et al. (2016) can be regarded as a discrete dynamical system, because each residual block adds to its input the output of a simple nonlinear map, namely a linear transformation followed by a nonlinear activation function. In other words, a residual network is an explicit Euler discretization of a neural ODE. RevNet Behrmann et al. (2019), a further generalization of ResNet, performs residual learning in a symmetric form. The backward Euler method corresponds to PolyNet Zhang et al. (2017); PolyNet reduces depth by increasing the width of each residual block and achieved state-of-the-art classification accuracy. Moreover, from the perspective of ordinary differential equations, the backward Euler method is more stable than the forward Euler method. For more on using ordinary differential equations themselves as neural networks, see Chen et al. (2018).
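The stability comparison can be checked on the standard stiff test equation \(\mathrm{d}y/\mathrm{d}t = -\lambda y\). In the minimal sketch below (plain NumPy; the step size and \(\lambda\) are illustrative choices), forward Euler multiplies the state by \(1 - h\lambda\) per step and diverges once \(|1 - h\lambda| > 1\), whereas backward Euler multiplies it by \(1/(1 + h\lambda)\) and decays for any \(h > 0\). The hypothetical `residual_block` helper writes the ResNet update as one explicit Euler step.

```python
import numpy as np


def forward_euler(lam, y0, h, n):
    """Explicit Euler for dy/dt = -lam * y: y_{k+1} = y_k + h * f(y_k)."""
    y = y0
    for _ in range(n):
        y = y + h * (-lam * y)
    return y


def backward_euler(lam, y0, h, n):
    """Implicit Euler: y_{k+1} = y_k + h * f(y_{k+1}); for this linear f it has a closed form."""
    y = y0
    for _ in range(n):
        y = y / (1.0 + h * lam)
    return y


def residual_block(x, W, b, h=1.0):
    """ResNet-style update x + h * relu(W x + b): one explicit Euler step of dx/dt = relu(W x + b)."""
    return x + h * np.maximum(0.0, W @ x + b)


# Stiff test problem dy/dt = -50 y with a large step h = 0.1 (so h * lam = 5)
y_fwd = forward_euler(50.0, 1.0, 0.1, 20)   # amplification factor 1 - 5 = -4 per step
y_bwd = backward_euler(50.0, 1.0, 0.1, 20)  # amplification factor 1 / 6 per step
```

The exact solution decays to essentially zero, so only the implicit scheme reproduces the qualitative behavior at this step size.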

Partial differential equation neural networks The general form of a second-order PDE:

The design of FractalNet Larsson et al. (2016) is based on self-similarity: a simple expansion rule is applied repeatedly to generate deep networks whose structure is laid out as a truncated fractal, and this structure can be interpreted as a Runge–Kutta scheme. In Ramacher (1993), the activation and weight dynamics of neural networks are derived from partial differential equations, with the weights treated as parameters or as variables. Results obtained by combining time-varying parameter patterns with these dynamics show that learning rules can be replaced by learning laws without loss of performance.

Physics-informed neural networks (PINNs) Raissi et al. (2019) apply scientific machine learning to traditional numerical problems, especially those involving PDEs. A PINN approximates the solution of a PDE by training a neural network to minimize a loss function. The key idea is to embed the equation (physical knowledge) into training: the residual of the governing equation is used to construct the loss, acting as a penalty term that restricts the space of feasible solutions.
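A minimal sketch of the PINN loss, under simplifying assumptions: the governing equation is the toy problem \(u'(x) + u(x) = 0\) with \(u(0) = 1\) rather than a real PDE, the network has a single hidden layer whose derivative is written out by hand (Raissi et al. use automatic differentiation), and the names `net` and `pinn_loss` are hypothetical. The loss adds the mean squared equation residual at collocation points to a boundary-condition penalty.

```python
import numpy as np

rng = np.random.default_rng(0)
H = 10
# Hypothetical parameters (w, c, v, d) of u(x) = sum_k v_k tanh(w_k x + c_k) + d
params = (rng.normal(size=H), rng.normal(size=H), rng.normal(size=H) * 0.1, 0.0)


def net(params, x):
    """Return u(x) and its exact derivative du/dx for a batch of scalar inputs x."""
    w, c, v, d = params
    z = np.tanh(np.outer(x, w) + c)      # hidden activations, shape (N, H)
    u = z @ v + d
    du = ((1.0 - z**2) * w) @ v          # chain rule: d/dx tanh(w x + c) = w * (1 - tanh^2)
    return u, du


def pinn_loss(params, x_colloc, lam_bc=1.0):
    """PINN-style loss for the illustrative problem u'(x) + u(x) = 0, u(0) = 1."""
    u, du = net(params, x_colloc)
    residual = du + u                                        # governing-equation residual
    u0, _ = net(params, np.array([0.0]))
    return np.mean(residual**2) + lam_bc * (u0[0] - 1.0) ** 2  # physics term + boundary term


x_colloc = np.linspace(0.0, 1.0, 50)   # collocation points in the domain
loss = pinn_loss(params, x_colloc)
```

Minimizing this loss with any gradient-based optimizer drives the network toward \(u(x) = e^{-x}\), the exact solution of the toy problem.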

The physics-informed PINN-HFM algorithm Raissi et al. (2020) reconstructs the full, high-resolution velocity field from sparse velocity information. By minimizing the residual of the Navier–Stokes (NS) equations, it obtains the velocity and pressure fields simultaneously, so that the result conforms to the “laws of physics”. Compared with traditional CFD solvers, PINNs are better at integrating data (observations of the flow) with physical knowledge (the governing equations that describe the phenomenon).

Because PINNs are not robust to extreme gradients, and the network depth grows with the PDE order, causing vanishing gradients and slow learning, Dwivedi et al. (2019) proposed DPINN. Meng et al. (2020) used the traditional parareal time-domain decomposition to parallelize training, reducing the complexity and learning difficulty of the model. Unlike PINN and its variants, Fang (2021) proposed a hybrid physics-informed network that solves PDEs by approximating differential operators instead of using automatic differentiation. Moseley et al. (2021) present a parallel approach that partitions the spatial domain. Because PINN is a meshless method, an algorithm that uses a fused difference scheme to accelerate information propagation has also emerged Chen et al. (2021). Schiassi et al. (2022) use PINNs to solve the equations of an optimal control problem, “learning” the optimal control for planar orbit transfer. Since the global outbreak of COVID-19, Treibert and Ehrhardt (2021) used PINNs to estimate the parameters of an SVIHDR differential dynamical system model, and an extended Susceptible-Infected-Recovered (SIR) model was studied by Trejo and Hengartner (2022).

Although using AI to solve PDEs that model physical problems has become widespread, limitations remain for high-dimensional PDEs. Karniadakis et al. (2021) discuss diverse applications of physics-informed learning that integrates noisy data and mathematical models while satisfying physical invariances, improving accuracy, and addressing hidden-physics inverse problems and high-dimensional problems. Xiao et al. (2024) proposed SHoP, a deep learning framework for solving high-order partial differential equations; the network can further be expanded into a Taylor series, providing explicit solutions to the PDEs.

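Controlled differential equations neural networks Neural controlled differential equations (CDEs) rely on two concepts, paths of bounded variation and Riemann–Stieltjes integrals, and are formulated as follows:

\[ y(0) = y_0, \qquad y(t) = y(0) + \int_0^t f_\theta(y(s))\,\mathrm{d}x(s), \]

where the control \(x\) is a path of bounded variation (for time series, typically an interpolation of the observations), \(f_\theta(y(s))\) is a matrix produced by a neural network, and the integral is a Riemann–Stieltjes integral.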

Modeling the dynamics of time series with neural differential equations is a promising option, but the performance of neural ODEs is limited because their solution is determined by the initial condition, leaving no mechanism to adjust the trajectory as later observations arrive. The neural CDE model proposed by Kidger et al. (2020) handles irregularly sampled and partially observed time series and outperforms ODE- and RNN-based models. Morrill et al. (2021) introduce additional terms into the numerical solver that incorporate information from within each step, yielding neural rough differential equations. For data with missing values, the standard practice is to add observation masks Che et al. (2018), and neural CDEs admit the appropriate continuous-time analogue of this practice.
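The Riemann–Stieltjes formulation can be discretized directly. The sketch below is an illustrative assumption rather than the method of Kidger et al. (2020): the control path is the piecewise-linear interpolation of irregularly sampled observations augmented with time, \(f_\theta\) is a single random tanh layer, and a plain Euler scheme replaces the adaptive solvers and spline interpolation used in practice. Each step multiplies the matrix \(f_\theta(y)\) by the path increment, so new observations keep steering the hidden state.

```python
import numpy as np

rng = np.random.default_rng(0)
D_X, D_Y = 2, 3                                      # path channels (time, value); hidden size
A = rng.normal(scale=0.3, size=(D_Y * D_X, D_Y))    # hypothetical parameters of f_theta


def f_theta(y):
    """Map the hidden state y (D_Y,) to a (D_Y, D_X) matrix, as in f_theta(y) dx."""
    return np.tanh(A @ y).reshape(D_Y, D_X)


def neural_cde(times, values, y0, n_sub=10):
    """Euler scheme for y(t) = y(0) + int_0^t f_theta(y(s)) dx(s).

    The control path x(s) = (s, value(s)) is the piecewise-linear interpolation of
    irregularly sampled observations, so each increment dx is a D_X-vector.
    """
    grid = np.linspace(times[0], times[-1], (len(times) - 1) * n_sub + 1)
    xs = np.stack([grid, np.interp(grid, times, values)], axis=1)   # shape (T, D_X)
    y = np.asarray(y0, dtype=float)
    for k in range(len(grid) - 1):
        y = y + f_theta(y) @ (xs[k + 1] - xs[k])    # one Riemann-Stieltjes sum increment
    return y


t_obs = np.array([0.0, 0.3, 0.35, 1.2, 2.0])   # irregular observation times
v_obs = np.array([0.1, 0.5, 0.4, -0.2, 0.0])
y_T = neural_cde(t_obs, v_obs, y0=np.ones(D_Y))
```

In a full model, an output layer applied to `y_T` would produce the prediction, and the initial state would itself be computed from the first observation.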

Stochastic differential equation neural networks Stochastic differential equations (SDEs) have been widely used to model real-world stochastic phenomena such as particle systems (Coffey and Kalmykov 2012; Pavliotis 2014), financial markets Black and Scholes (2019), population dynamics Arató (2003), and genetics Huillet (2007). Neural SDEs are a natural extension of neural ODEs for modeling systems that evolve in continuous time under uncertainty Kidger (2022).

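The dynamics of a stochastic differential equation (SDE) comprise a deterministic (drift) term and a stochastic (diffusion) term:

\[ y(0) = y_0, \qquad \mathrm{d}y(t) = \mu(t, y(t))\,\mathrm{d}t + \sigma(t, y(t))\,\mathrm{d}w(t), \]

where \(\mu\) and \(\sigma\) are regular functions (\(\sigma\) taking values in \(d \times d\) matrices), w is a d-dimensional Brownian motion, and y is the resulting d-dimensional continuous random process.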

The inherent randomness of SDEs means they can be viewed as generative models in the sense of modern machine learning. By analogy with recurrent neural networks (RNNs), an SDE can be seen as an RNN that takes random noise, specifically Brownian motion, as input and produces a generated sample as output. Time series modeling is a classic field of interest, with predictive models such as Holt-Winters Holt (2004), ARCH Engle (1982), ARMA Hannan and Rissanen (1982), and GARCH Bollerslev (1986).
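The RNN analogy becomes concrete under the Euler–Maruyama discretization of the SDE: each step takes a Gaussian Brownian increment as input and updates the state. In the sketch below, an Ornstein–Uhlenbeck drift \(\mu(t, y) = -y\) and a constant diagonal diffusion \(\sigma = 0.5\) stand in for neural networks; both choices and all step sizes are assumptions for illustration.

```python
import numpy as np


def euler_maruyama(mu, sigma, y0, t1, n_steps, rng):
    """Simulate dy = mu(t, y) dt + sigma(t, y) dw on [0, t1] with the Euler-Maruyama scheme.

    RNN view: the Brownian increments dw are the random inputs at each step and the
    returned path y is the generated sample.
    """
    h = t1 / n_steps
    y = np.array(y0, dtype=float)
    path = [y.copy()]
    for k in range(n_steps):
        dw = rng.normal(scale=np.sqrt(h), size=y.shape)   # increment of d-dim Brownian motion
        y = y + mu(k * h, y) * h + sigma(k * h, y) * dw   # diagonal noise (simplifying assumption)
        path.append(y.copy())
    return np.stack(path)


def mu(t, y):
    return -y                          # illustrative Ornstein-Uhlenbeck drift


def sigma(t, y):
    return 0.5 * np.ones_like(y)       # illustrative constant diffusion


rng = np.random.default_rng(0)
samples = np.stack([euler_maruyama(mu, sigma, [1.0, -1.0], 5.0, 500, rng) for _ in range(200)])
```

Each call produces one sample path; for this choice the endpoint distribution settles near a Gaussian with variance about \(0.5^2/2 = 0.125\).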

For deep learning libraries that solve differential equations by combining physical knowledge with machine learning, see, for example, Lu et al. (2021).

2.3 Graph neural networks to solve physical problems

Molecular Design: A central problem in materials science and pharmaceuticals is predicting the chemical, physical, and biological properties of new molecules from their structures. Work from Harvard University Duvenaud et al. (2015) models molecules as graphs and uses graph convolutional neural networks to learn the desired molecular properties. Their method outperforms the handcrafted fingerprints of Morgan (1965) and Rogers and Hahn (2010), opening up new opportunities for molecular design.
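To make the graph view concrete, the sketch below runs a few rounds of message passing on a molecular graph and sums the atom states into a fixed-length fingerprint. It is a deliberately simplified assumption: one shared transform per round, tanh activations, random weights, and one-hot atom types for the heavy atoms of ethanol; the architecture of Duvenaud et al. (2015) differs in its details. The sum readout makes the fingerprint independent of atom ordering.

```python
import numpy as np


def graph_conv_fingerprint(adj, feats, weights):
    """Simplified graph-convolution fingerprint (illustrative, not the published model).

    adj:     (N, N) 0/1 adjacency matrix of the molecular graph (atoms = nodes, bonds = edges)
    feats:   (N, F) initial atom features
    weights: list of (F, F) matrices, one per message-passing round
    """
    h = feats
    fingerprint = np.zeros(feats.shape[1])
    for W in weights:
        h = np.tanh((h + adj @ h) @ W)   # each atom combines itself with its bonded neighbours
        fingerprint += h.sum(axis=0)     # permutation-invariant sum readout
    return fingerprint


# Heavy atoms of ethanol, C-C-O; features are one-hot atom types [C, O]
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
feats = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
rng = np.random.default_rng(0)
weights = [rng.normal(size=(2, 2)) for _ in range(3)]
fp = graph_conv_fingerprint(adj, feats, weights)
```

A regression or classification head applied to `fp` would then be trained end to end to predict the target property.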

Medical Physics: Medical physics Manco et al. (2021) is one of the most important application areas of artificial intelligence and can be roughly divided into radiotherapy and medical imaging. Following the success of AI in imaging tasks, AI research in radiotherapy (Hrinivich and Lee 2020; Maffei et al. 2021) and medical imaging (such as X-ray, MRI, and nuclear medicine) Barragán-Montero et al. (2021) has grown rapidly. Among these, magnetic resonance imaging (MRI) in medical image analysis Castiglioni et al. (2021) plays a vital role in the diagnosis, management, and monitoring of many diseases Li et al. (2022). A study from Imperial College Ktena et al. (2017) applies graph CNNs to non-Euclidean brain imaging data to detect disruptions in autism-related brain functional networks. Zegers et al. (2021) outlined state-of-the-art applications of deep learning in neuro-oncology MRI, which have broad potential. Rizk et al. (2021) presented deep learning models for meniscal tear detection and evaluated them with external validation. Montalt-Tordera et al. (2021) discuss and summarize work on MRI image reconstruction, which shows great potential for acquiring paired clinical data in the future.

High-energy physics experiments: Graph neural networks have been introduced to predict the dynamics of N-body systems (Battaglia et al. 2016; Chang et al. 2016), with remarkable results.
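Interaction-network models compute a message for every ordered pair of bodies, sum the messages arriving at each body, and update each body's state. The sketch below keeps that structure but, as an assumption for illustration, replaces both learned networks with known physics: a softened Newtonian force as the relation function and a semi-implicit Euler update as the object function. A trained model would learn these functions from trajectories.

```python
import numpy as np


def interaction_step(pos, vel, mass, dt=0.01, G=1.0, eps=1e-2):
    """One message-passing step in the style of an interaction network.

    Relation (edge) function: the analytic Newtonian pairwise force stands in for the
    learned MLP. Object (node) function: a semi-implicit Euler update stands in for the
    learned update network. Messages to each receiver are aggregated by summation.
    """
    n = len(mass)
    force = np.zeros_like(pos)
    for i in range(n):              # receiver node
        for j in range(n):          # sender node
            if i != j:
                r = pos[j] - pos[i]
                force[i] += G * mass[i] * mass[j] * r / (r @ r + eps**2) ** 1.5
    vel = vel + dt * force / mass[:, None]
    pos = pos + dt * vel
    return pos, vel


mass = np.array([1.0, 0.5, 0.1])
pos = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.5]])
vel = np.array([[0.0, 0.0], [0.0, 1.0], [-0.8, 0.0]])
p_initial = (mass[:, None] * vel).sum(axis=0)   # total momentum before the rollout
for _ in range(100):
    pos, vel = interaction_step(pos, vel, mass)
```

Because the pairwise messages are antisymmetric, the total momentum stays equal to `p_initial`, a useful sanity check when the relation function is learned instead.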

Power System Solver: Donon et al. (2019) use graph neural networks to build an architecture that solves the power flow equations, computing the power flow (the so-called “load flow”) in a grid. Park and Park (2019) propose a physics-inspired data-driven model for wind farm power estimation.

Structure prediction of glass systems (glass phase transitions): DeepMind published a paper in Nature Physics Bapst et al. (2020) that models glass dynamics with a graph neural network and links the network's predictions to physics. The long-term evolution of glassy systems can be predicted from the structure around each particle alone. The model works well across different temperature, pressure, and density ranges, demonstrating the power of graph networks.

3 Deep neural network paradigms inspired by electromagnetics

3.1 Optical design neural networks

Optical neural networks (ONNs) are neural networks built with optical technologies such as optical interconnects and optical devices. The idea is to emulate neural networks by encoding information in optical features through modulation, and to realize the networks and their operators through propagation phenomena of light such as interference, diffraction, transmission, and reflection. The first ONN implementation was the optical Hopfield network proposed by Psaltis and Farhat (1985). Traditional neural networks involve three main operators: linear operations, nonlinear activations, and convolutions; this subsection presents their optical implementations in that order. The structure of this section and an overview of representative methods are summarized in Table 2.

3.1.1 Optical implementation of linear operations

The main linear operators of neural networks are matrix multiplication and weighted summation. Weighted summation is easy to implement optically by superposing coherent or incoherent light, so the challenge of optical linear operations lies in matrix multiplication. As early as 1978, Goodman et al. (1978) implemented the first optical vector–matrix multiplier with a set of lenses based on the principle of optical transmission, and Chen (1993) first implemented an optical matrix–matrix multiplier with a 4f-type system built from a lens set.

Optical implementation of vector–matrix multiplications The vector \({\textbf {p}}\) is obtained by multiplying the matrix \({\textbf {A}}\) by the vector \({\textbf {b}}\): each entry of \({\textbf {p}}\) is the inner product of the corresponding row of \({\textbf {A}}\) with \({\textbf {b}}\). Mathematically,

\[ p_i = \sum_{j=1}^{N} A_{ij}\, b_j, \qquad i = 1, \dots, M. \]

The optical vector–matrix multiplier consists of two main parts: a light source, such as a light-emitting diode (LED) array, and an optical path system composed of a spherical lens, cylindrical lenses, a spatial light modulator, and an optical detector. Mathematically, it turns the vector–matrix multiplication into an element-wise multiplication of two matrices followed by a summation.

As shown in Fig. 7, the vector \({\textbf {b}}\) is encoded in an optical feature of the incoherent light source (LS) array, such as amplitude, intensity, phase, or polarization, and the light then passes through the first spherical lens L1. Since the LS array lies in the front focal plane of L1, the light leaving L1 is collimated. It then passes through the cylindrical lens CL1, located in the back focal plane of L1. Because CL1 is placed vertically, it focuses the light onto its back focal plane only in the horizontal direction, while the light stays collimated in the vertical direction. Every row of the light field now carries a copy of \({\textbf {b}}\), forming a matrix \({\textbf {B}}\):

\[ B_{ij} = b_j, \qquad i = 1, \dots, M,\; j = 1, \dots, N. \]

A spatial light modulator (SLM) encoding the matrix \({\textbf {A}}\) is placed in the back focal plane of CL1. Passing through the SLM amounts to element-wise (dot) multiplication of the matrices \({\textbf {A}}\) and \({\textbf {B}}\), so the light field carries:

\[ A_{ij} B_{ij} = A_{ij}\, b_j. \]

The light leaving the SLM then passes through the cylindrical lens CL2, placed one focal length f of CL2 from the SLM. Because CL2 is placed horizontally, it focuses the light onto its back focal plane only in the vertical direction, while the light stays collimated in the horizontal direction. The light field then carries the result vector \({\textbf {p}}\):

\[ p_i = \sum_{j=1}^{N} A_{ij}\, b_j. \]

Finally, the light leaving CL2 is detected by a charge-coupled device (CCD), and demodulating the detected signal yields the vector \({\textbf {p}}\).
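Numerically, the three optical stages amount to broadcasting \({\textbf {b}}\), an element-wise product with \({\textbf {A}}\), and a summation along rows. The idealized sketch below ignores diffraction, noise, and detector response, and the function name is hypothetical. Because incoherent intensities are non-negative, it rejects negative entries; signed weights require an extra encoding, for example splitting \({\textbf {A}}\) into positive and negative parts.

```python
import numpy as np


def optical_vmm(A, b):
    """Idealised model of the lens/SLM vector-matrix multiplier (incoherent light).

    Intensities cannot be negative, so this sketch only accepts non-negative A and b.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    if (A < 0).any() or (b < 0).any():
        raise ValueError("incoherent intensity encoding requires non-negative entries")
    B = np.tile(b, (A.shape[0], 1))   # L1 + CL1: every row of the field carries b, B[i, j] = b[j]
    C = A * B                         # SLM transmittance: element-wise product A[i, j] * b[j]
    return C.sum(axis=1)              # CL2 + detector: collect light along j, p[i] = sum_j C[i, j]
```

For non-negative inputs the result equals the ordinary product `A @ b`; the optical advantage lies in performing all products and sums in parallel as the light propagates.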

Optical implementation of vector–matrix multiplication from Goodman et al. (1978)

Optical implementation of matrix–matrix multiplications Matrix–matrix multiplication is more complicated than vector–matrix multiplication. The product of matrices \({\textbf {A}}\) and \({\textbf {B}}\) consists of the inner products of each row of \({\textbf {A}}\) with each column of \({\textbf {B}}\). Denoting the result matrix by \({\textbf {P}}\),

\[ P_{ik} = \sum_{j} A_{ij} B_{jk}. \tag{9} \]

Matrix–matrix multiplication is implemented with an optical 4f-type system consisting of Fourier lenses, a holographic mask (HM), and a charge-coupled device. Because a lens performs a Fourier transform, which after discretization becomes multiplication by a discrete Fourier transform (DFT) matrix, the multiplication by \({\textbf {A}}\) can be realized by combining the DFT matrices of the lenses with the holographic mask.
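A simplified numerical check of the lens–mask–lens idea, under assumptions stated here: the field is one-dimensional, the first and second lenses are idealized as an exact DFT matrix and an exact inverse DFT matrix, and the mask multiplies the field point by point (a diagonal matrix). Under these assumptions the realized matrix is circulant, that is, the system performs a circular convolution; implementing an arbitrary \({\textbf {A}}\) requires the more general mask design and sampling conditions described in the derivation that follows.

```python
import numpy as np

N = 8
n = np.arange(N)
G1 = np.exp(-2j * np.pi * np.outer(n, n) / N)        # first lens: DFT matrix (idealised)
G2 = np.exp(2j * np.pi * np.outer(n, n) / N) / N     # second lens: inverse DFT (idealised)

rng = np.random.default_rng(0)
kernel = rng.normal(size=N)               # desired first column of A
H = np.diag(np.fft.fft(kernel))           # thin mask: point-wise transmittance in the Fourier plane
A = G2 @ H @ G1                           # effective matrix of lens-mask-lens

# Circulant matrix with entries kernel[(i - j) mod N]
A_circ = np.array([[kernel[(i - j) % N] for j in range(N)] for i in range(N)])
```

This is also why a 4f system with a Fourier-plane mask is the classic optical convolver.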

As shown in Fig. 8, the matrix \({\textbf {B}}\) is encoded in the complex amplitude of the input light, and the result matrix \({\textbf {P}}\) is obtained in the output plane; the multiplication of \({\textbf {A}}\) and \({\textbf {B}}\) is completed as the light propagates from the input plane to the output plane. Let \({\textbf {B}}\) denote the input light field in the front focal plane of the Fourier lens and \(F\) the Fourier transform. According to Fresnel diffraction theory, the complex amplitude of the light field in the back focal plane of the lens is the Fourier transform of that in the front focal plane:

\[ U(u, v) = \frac{1}{\mathrm{j}\lambda f} \iint B(x, y) \exp\!\left[-\mathrm{j}\frac{2\pi}{\lambda f}(xu + yv)\right] \mathrm{d}x\,\mathrm{d}y = \frac{1}{\mathrm{j}\lambda f}\, F\{B\}\!\left(\frac{u}{\lambda f}, \frac{v}{\lambda f}\right), \tag{10} \]

where \(\lambda\) is the wavelength and \(f\) is the focal length.

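Since a DFT can be written as multiplication by a DFT matrix, discretizing Equation (10) expresses the light field as

\[ {\textbf {U}} = {\textbf {G}}{\textbf {B}}, \tag{11} \]

where \({\textbf {G}}\) is the DFT matrix of the lens, with constant factors absorbed into it.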

Here the DFT matrix \({\textbf {G}}\) of the lens depends only on the focal length and the wavelength, so the matrix \({\textbf {A}}\) must be encoded with a holographic mask, which adjusts the complex amplitude distribution of the light field. The whole optical system consists of two Fourier lenses and a holographic mask, so the output light field is

\[ {\textbf {P}} = {\textbf {G}}_2\, {\textbf {H}}(m)\, {\textbf {G}}_1 {\textbf {B}}, \tag{12} \]

where the matrices \({\textbf {G}}_1\) and \({\textbf {G}}_2\) denote the DFT matrices of the two lenses, and \({\textbf {H}}(m)\) is the complex amplitude distribution function of the holographic mask. Comparing Equation (9) with Equation (12) gives

\[ {\textbf {A}} = {\textbf {G}}_2\, {\textbf {H}}(m)\, {\textbf {G}}_1. \tag{13} \]

The relationship between the sampling periods and the sampling numbers in the input plane, the output plane, and the holographic mask satisfies:

where \(\triangle {x_1}, \triangle {x}, \triangle {x_2}\) are the sampling periods and \(L, M, X\) the sampling numbers in the input plane, the holographic mask, and the output plane, respectively. From Equations (13) and (14), \({\textbf {H}}(m)\) can be obtained:

Optical matrix multipliers The vector–matrix multiplier was first proposed by Goodman et al. (1978), and with it the DFT was implemented optically. Several works (Liu et al. 1986; Francis et al. 1990; Yang et al. 1990) used a miniature liquid crystal television (LCTV) as a spatial light modulator in place of the matrix mask and lens to implement matrix multiplication. Francis et al. (1991) used a mirror array instead of the common lens array to build an optical neural network with mirror-array interconnections, and Nitta et al. (1993) removed the two cylindrical lenses from the matrix multiplier, improved the light-emitting diode arrays and variable-sensitivity photodetector arrays, and produced the first optical neural chip. Chen (1993) built an optical 4f-type system that uses the optical Fourier transform and inverse transform of Fourier lenses to implement matrix–matrix multiplication. Wang et al. (1997) proposed an optical neural network architecture that uses two perpendicular 1-D prism arrays as optical interconnects to implement matrix multiplication.

Optical implementation of matrix–matrix multiplication from Chen (1993)

Psaltis et al. (1988) implemented matrix multiplication through dynamic holographic modification of photorefractive crystals, which allows most neural networks to be constructed. Slinger (1991) proposed a weighted N-to-N volume-holographic neural interconnect and derived the coupled-wave solutions describing the behavior of an idealized version of the interconnect. Several works (Yang et al. 1994; Di Leonardo et al. 2007; Nogrette et al. 2014) used the Gerchberg–Saxton algorithm to compute the hologram for each region. Lin et al. (2018) used transmissive and reflective layers as phase-only masks and built all-optical neurons based on optical diffraction. Yan et al. (2019) proposed a diffractive neural network implemented by placing diffractive modulation layers in the Fourier plane of the optical system. Qian et al. (2020) scattered or focused plane waves at microwave frequencies in a diffractive manner with a compound Huygens metasurface to mimic the functionality of artificial neural networks.
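The Gerchberg–Saxton algorithm alternates between two planes related by a Fourier transform, enforcing the known amplitude in each plane while keeping the current phase. The minimal NumPy sketch below computes a phase-only hologram for a target far-field amplitude; the uniform illumination, grid size, and three-spot target are illustrative assumptions.

```python
import numpy as np


def gerchberg_saxton(target_amp, n_iter=100, seed=0):
    """Phase-only hologram whose Fourier-plane amplitude approximates target_amp."""
    rng = np.random.default_rng(seed)
    phase = 2 * np.pi * rng.random(target_amp.shape)        # random initial hologram phase
    for _ in range(n_iter):
        far = np.fft.fft2(np.exp(1j * phase))                # hologram plane -> Fourier plane
        far = target_amp * np.exp(1j * np.angle(far))        # keep phase, impose target amplitude
        near = np.fft.ifft2(far)                             # Fourier plane -> hologram plane
        phase = np.angle(near)                               # keep phase, impose uniform amplitude
    return phase


def far_field_error(phase, target_amp):
    """Distance between the reconstructed Fourier-plane amplitude and the target."""
    return np.linalg.norm(np.abs(np.fft.fft2(np.exp(1j * phase))) - target_amp)


target = np.zeros((32, 32))
target[4, 8] = target[20, 12] = target[10, 25] = 1.0       # three bright spots
# Scale so the target carries the same energy as the unit-amplitude hologram (Parseval)
target *= np.sqrt(target.size**2 / np.sum(target**2))
hologram = gerchberg_saxton(target)
```

Because each half-step is a projection onto a constraint set, the error measured by `far_field_error` does not increase from one iteration to the next.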

Mengu et al. (2019) used five phase-only diffractive layers, as well as complex-valued phase and amplitude modulation, to implement optical diffractive neural networks. Shen et al. (2017) and Bagherian et al. (2018) used arrays of Mach–Zehnder interferometers to implement matrix multiplication based on singular value decomposition. Hamerly et al. (2019) proposed an interference-based homodyne detection scheme to implement matrix multiplication and built a new type of photonic accelerator for optical neural networks. Zang et al. (2019) implemented vector–matrix multiplication by stretching time-domain pulses; with the help of fiber loops, multilayer neural networks can be implemented optically.
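The singular-value-decomposition route can be sketched numerically. Any matrix factors as \({\textbf {M}} = {\textbf {U}}\boldsymbol{\Sigma}{\textbf {V}}^\dagger\) with unitary \({\textbf {U}}\) and \({\textbf {V}}\); in the photonic scheme the unitaries are programmed into lossless Mach–Zehnder meshes and \(\boldsymbol{\Sigma}\) into per-channel attenuation. The sketch below only verifies the algebra with NumPy; normalizing the singular values to at most one (for passive attenuators) and rescaling outside the optics are assumptions, and decomposing each unitary into individual interferometer phases is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))   # target complex weight matrix

U, s, Vh = np.linalg.svd(M)      # M = U @ diag(s) @ Vh, with U and Vh unitary

# U and Vh would be programmed into lossless MZI meshes; diag(s) into per-channel attenuators.
# Passive attenuators have transmission <= 1, so the singular values are normalised and the
# overall scale is assumed to be restored outside the optics (e.g. by detection gain).
scale = s.max()
Sigma = np.diag(s / scale)

x = rng.normal(size=4) + 1j * rng.normal(size=4)    # input optical field amplitudes
y = scale * (U @ (Sigma @ (Vh @ x)))                # Vh mesh -> attenuators -> U mesh
```

The factorization is exact, so the optical path reproduces `M @ x` up to the physical imperfections of the devices.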

3.1.2 Optical implementation of nonlinear activation

Nonlinear activation functions play an important role in neural networks, enabling them to approximate complex nonlinear mappings. However, optical nonlinear responses are weak, and owing to the limits of device fabrication, the optical response of a device is usually fixed, so optical nonlinearities cannot easily be reprogrammed to realize different activation functions. Early ONNs therefore generally realized nonlinearity with optoelectronic hybrid methods Dunning et al. (1991). All-optical implementations of nonlinearity Skinner et al. (1994) emerged only as material fabrication advanced; one is presented below as an example (Fig. 9).

Optical neural network based on Kerr-type nonlinear materials from Skinner et al. (1994)

The all-optical neural network consists of linear and nonlinear layers: the linear layers are thick linear media, such as free space, and the nonlinear layers are thin nonlinear media, such as Kerr-type nonlinear materials, whose refractive index satisfies

\[ n(x, y, z) = n_0 + n_2\, I_r(x, y, z), \]

where \(n_0\) is the linear refractive index, \(n_2\) is the nonlinear refractive index coefficient, and \(I_r(x,y,z)\) is the light field intensity. The material is self-focusing if \(n_2>0\) and self-defocusing if \(n_2<0\). Since the refractive index depends on the light intensity, the nonlinear layer can perform both weighted summation and nonlinear mapping.

When the input light reaches the nonlinear layer, the refractive index differs from point to point across the layer, which changes the intensity and direction of the transmitted light and produces interference; the nonlinear layer thus acts as a spatial light modulator. The final output depends on the input to the first layer and on the successive weighting and nonlinear mapping performed by the nonlinear layers.
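The alternation of thick linear layers and thin Kerr layers can be sketched as a one-dimensional split-step simulation. All values (wavelength, sampling, layer spacing, \(n_2\) in normalized intensity units, layer thickness) are illustrative assumptions rather than parameters from Skinner et al. (1994). Free-space propagation uses the angular-spectrum method, and each Kerr layer adds the intensity-dependent phase \(k_0 n_2 I d\) implied by the refractive index relation above, where \(d\) is the layer thickness.

```python
import numpy as np

WAVELENGTH = 1.0e-6     # illustrative values throughout (assumptions)
DX = 1.0e-6             # sampling period; > WAVELENGTH / 2 so no evanescent components occur


def free_space(field, dz):
    """Linear layer: angular-spectrum propagation of a 1-D scalar field over distance dz."""
    k = 2 * np.pi / WAVELENGTH
    kx = 2 * np.pi * np.fft.fftfreq(field.size, d=DX)
    kz = np.sqrt((k**2 - kx**2).astype(complex))    # would be imaginary for evanescent waves
    return np.fft.ifft(np.fft.fft(field) * np.exp(1j * kz * dz))


def kerr_layer(field, n2, thickness):
    """Thin nonlinear layer: phase k0 * n2 * I * thickness (the constant n0 phase is dropped)."""
    k0 = 2 * np.pi / WAVELENGTH
    return field * np.exp(1j * k0 * n2 * np.abs(field) ** 2 * thickness)


def kerr_onn(field, n_layers=3, dz=50e-6, n2=1e-2, thickness=10e-6):
    """Alternate thick linear (free-space) layers with thin Kerr layers."""
    for _ in range(n_layers):
        field = free_space(field, dz)
        field = kerr_layer(field, n2, thickness)
    return field


x = (np.arange(256) - 128) * DX
inp = np.exp(-(x / 20e-6) ** 2)            # Gaussian input beam (normalised intensity units)
out = kerr_onn(inp)
```

Both layer types conserve optical power in this idealization, yet doubling the input does not double the output, which is the nonlinearity the network exploits.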

Photoelectric hybrid methods Dunning et al. (1991) processed video signals point by point with a frame grabber and image processor to implement programmable nonlinear activation functions. Larger et al. (2012) used an integrated telecom Mach–Zehnder modulator to provide an electro-optical nonlinear modulation transfer function for constructing optical neural networks. Antonik et al. (2019) modulated the phase of spatially extended plane waves with a spatial light modulator to improve the parallelism of the optical system, which can significantly increase the scalability and processing speed of the network. Katumba et al. (2019) built the nonlinear operators of their network from the nonlinearity of electro-optical detectors, achieving very high data modulation speeds and large-scale network parameter updates. Williamson et al. (2019) and Fard et al. (2020) converted a small portion of the incident light into an electrical signal and used it to modulate the remaining optical signal with an electro-optical modulator, realizing the network's nonlinearity while increasing the operating bandwidth and computational speed of the system.
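The scheme of tapping part of the light to drive a modulator can be modeled in a few lines. In this simplified sketch (all parameter values are assumptions), a fraction \(\alpha\) of the power is detected, the photocurrent sets a phase shift \(\phi\) in one arm of a balanced Mach–Zehnder interferometer, and the remaining light passes through it with field transmission \((1 + e^{-\mathrm{i}\phi})/2\). It is in the spirit of Williamson et al. (2019) but not their exact device model.

```python
import numpy as np


def electro_optic_activation(z, alpha=0.1, gain=np.pi, phi_bias=np.pi):
    """Simplified optoelectronic activation on complex field amplitudes z (hypothetical parameters).

    A fraction alpha of the optical power is detected; the photocurrent sets the phase
    phi = gain * alpha * |z|^2 + phi_bias in one arm of a balanced Mach-Zehnder
    interferometer, through which the remaining sqrt(1 - alpha) of the field passes.
    """
    z = np.asarray(z, dtype=complex)
    phi = gain * alpha * np.abs(z) ** 2 + phi_bias
    return np.sqrt(1 - alpha) * z * (1 + np.exp(-1j * phi)) / 2
```

With the bias set to \(\pi\), weak inputs are almost fully suppressed while stronger inputs are transmitted, giving a ReLU-like response.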

All-optical methods Skinner et al. (1994) implemented weighted connections and nonlinear mapping using thin layers of Kerr-type nonlinear optical material separating regions of free space, improving the response speed of optical neural networks. Saxena and Fiesler (1995) used a liquid crystal light valve (LCLV) to achieve the threshold effect of a nonlinear function and built an optical neural network that avoids the energy loss of photoelectric conversion. Vandoorne et al. (2008, 2014) used coupled semiconductor optical amplifiers (SOAs) as basic building blocks for nonlinearity in all-optical neural networks, yielding networks with low power consumption, high speed, and high parallelism. Rosenbluth et al. (2009) used novel nonlinear optical fibers as thresholders to achieve nonlinear responses, overcoming the scalability problem of digital optical computing and the noise accumulation problem of analog optical computing. Mesaritakis et al. (2013), Denis-Le Coarer et al. (2018), and Feldmann et al. (2019) used the nonlinear refractive index change of ring resonators to provide the network's nonlinear response, enabling highly integrated, low-power optical neural networks. Lin et al. (2018) built optical neural networks using only optical diffraction and passive optical components working together, with no powered layers, providing an efficient and fast way to perform machine learning tasks. Bao et al. (2011), Shen et al. (2017), and Schirmer and Gaeta (1997) exploited the saturable absorption of nanophotonic materials to achieve nonlinearity. Miscuglio et al. (2018) discussed two approaches to nonlinearity in all-optical neural networks, based on the reverse saturable absorption and the electromagnetically induced transparency of nanophotonics; Zuo et al. (2019) used a spatial light modulator and a Fourier lens to program the linear operations, and the electromagnetically induced transparency of laser-cooled atoms for the nonlinear activation functions.
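Saturable absorption gives a simple all-optical threshold: the absorption coefficient falls as intensity grows, so weak light is absorbed while strong light bleaches the medium. The sketch below uses the common two-level model \(\alpha(I) = \alpha_0/(1 + I/I_{\mathrm{sat}})\) and, as a simplifying assumption, ignores how the intensity changes inside the sample; the optical depth and saturation intensity are illustrative values.

```python
import numpy as np


def saturable_absorber(intensity, alpha0_L=3.0, i_sat=1.0):
    """Output intensity of a thin saturable absorber (illustrative parameters).

    Uses alpha(I) = alpha0 / (1 + I / I_sat) and I_out = I * exp(-alpha(I) * L), ignoring
    the change of intensity along the sample; alpha0_L is the small-signal optical depth.
    """
    intensity = np.asarray(intensity, dtype=float)
    return intensity * np.exp(-alpha0_L / (1.0 + intensity / i_sat))


I_in = np.logspace(-2, 2, 50)
transmission = saturable_absorber(I_in) / I_in   # rises from about exp(-3) toward 1
```

A reverse saturable absorber, as discussed by Miscuglio et al. (2018), shows the opposite trend, with transmission decreasing as intensity grows.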

3.1.3 Optical implementation of convolutional neural networks

By imitating the hierarchical information processing of biological vision, convolutional neural networks (CNNs) have the properties of local perception and weight sharing, which significantly reduce computational complexity while allowing the network to fit more complex nonlinear functions.

Shan et al. (2018) proposed a deep convolutional neural network that accelerates electromagnetic simulation by predicting solutions of the 3D Poisson equation for the electrostatic potential distribution, exploiting the network's ability to approximate nonlinear functions. Li et al. (2018) proposed DeepNIS, a DNN architecture for nonlinear inverse scattering problems (ISPs). DeepNIS consists of a cascade of multilayer complex-valued residual CNNs that imitate the multiple-scattering mechanism. It takes the electromagnetic (EM) scattering data collected by the receivers as input and outputs a super-resolution image of the EM inverse scattering, mapping coarse images to precise solutions of the ISPs. Wei and Chen (2019) proposed a physics-inspired induced current learning method (ICLM) for full-wave nonlinear ISPs. It uses a novel convolutional network, CEE-CNN, which feeds most of the induced current directly to the output layer through skip connections and focuses learning on the remaining part of the induced current. A multi-label combined loss function reduces the nonlinearity of the objective function and accelerates convergence. Guo et al. (2021) proposed a complex-valued Pix2pix generative adversarial network consisting of a generator and a discriminator. The generator consists of multilayer complex-valued CNNs, and the discriminator estimates the likelihood that an image is an original rather than a reconstruction. Through adversarial training between the discriminator and the generator, the generator captures more nonlinear features than a conventional CNN. Tsakyridis et al. (2024) provide an overview and discussion of the fundamentals of photonic neural networks and optical deep learning, and Matuszewski et al. (2024) discussed the role of all-optical neural networks.

4 Deep neural network paradigms inspired by statistical physics

The field of artificial intelligence encompasses a wide range of algorithms and modeling tools for tasks in many domains and has become one of the most active subjects in recent years. In the previous sections, we reviewed recent research at the intersection of artificial intelligence with classical mechanics and electromagnetism, including the development of AI concepts driven by physical insight, the application of AI techniques across domains of physics, and the interplay between the two. Below we describe how statistical physics can be used to understand AI algorithms and how AI can be applied to statistical physics. An overview of the representative methods is shown in Table 3.

4.1 Nonequilibrium neural networks

The most general problem in nonequilibrium statistical physics is the detailed description of the time evolution of physical (chemical or astronomical) systems. Examples include relaxation toward equilibrium, the response of a system to external influences, metastability and fluctuation-driven instability, pattern formation and self-organization, the emergence of probabilistic behavior in contrast to deterministic descriptions, and open systems. Nonequilibrium statistical physics has produced concepts and models that are relevant not only to physics but also to information science, technology, biology, medicine, and the social sciences, and that even bear on fundamental philosophical questions.

4.1.1 Neural networks understood from entropy

Entropy Entropy was proposed by the German physicist Clausius in 1865 and was first a basic concept of thermodynamics. It measures the intrinsic degree of disorder of a system, which can also be read as the amount of information needed to describe the system: the more disordered the system, the harder it is to predict and the greater its entropy, denoted S. Entropy expresses a basic law of nature: an isolated system tends spontaneously toward greater disorder, so its entropy never decreases. This is the principle of entropy increase.

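Boltzmann distribution In 1877, Boltzmann gave entropy a statistical interpretation: entropy is a macroscopic property of the system determined by the number \(W\) of equally probable microstates compatible with its macrostate, \(S = k_B \ln W\). In thermal equilibrium at temperature \(T\), a microstate with energy \(E_i\) occurs with probability proportional to \(\exp(-E_i / k_B T)\), which is the Boltzmann distribution.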

Information entropy (learning cost) Building on statistical physics, Shannon (1948) extended the concept of entropy to communication channels and introduced information entropy, \(H = -\sum_i p_i \log p_i\), after which the universal significance of entropy became more apparent.
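The link to deep learning is direct: a softmax layer is a Boltzmann distribution in which the logits play the role of negative energies divided by \(k_B T\). The sketch below (natural units with \(k_B = 1\), an assumption; energies chosen arbitrarily) computes the Boltzmann distribution and its Shannon entropy, which rises from near zero at low temperature toward \(\log_2 4 = 2\) bits at high temperature.

```python
import numpy as np

K_B = 1.0  # Boltzmann constant in natural units (illustrative assumption)


def boltzmann(energies, T):
    """p_i = exp(-E_i / (k_B T)) / Z, i.e. a softmax of -E / (k_B T), computed stably."""
    logits = -np.asarray(energies, dtype=float) / (K_B * T)
    logits -= logits.max()            # shifting all logits cancels in the normalisation Z
    w = np.exp(logits)
    return w / w.sum()


def shannon_entropy(p, base=2.0):
    """H(p) = -sum_i p_i log p_i in units of log(base), with 0 log 0 taken as 0."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-(nz * np.log(nz)).sum() / np.log(base))


E = np.array([0.0, 1.0, 2.0, 3.0])
entropies = [shannon_entropy(boltzmann(E, T)) for T in (0.1, 1.0, 100.0)]
```

At low temperature almost all probability sits in the ground state; at high temperature the distribution approaches uniform and the entropy approaches its maximum.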

In deep learning, the rate at which a model receives information is fixed, so the main way to speed up learning is to reduce the redundant information in the learning target. This principle of “discarding the dross and keeping the essence” is the principle of minimum entropy in deep learning models, which can be understood as “removing unnecessary learning costs” (Fig. 10).

Algorithms inspired by the principle of minimum entropy include InfoMap (Rosvall et al. 2009; Rosvall and Bergstrom 2008), which uses information entropy to measure the shortest code length, cost minimization (Kuhn 1955; Riesen and Bunke 2009), Word2Vec (Mikolov et al. 2013a, b), and t-SNE dimensionality reduction Maaten and Hinton (2008).
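Entropy as the shortest code length is the content of Shannon's source coding theorem: no uniquely decodable code has an expected length below \(H\) bits per symbol, and Huffman coding comes within one bit of it. InfoMap applies this idea to describing random walks on a network. The sketch below, using only the Python standard library, builds Huffman code lengths for an illustrative distribution and compares the average length with the entropy.

```python
import heapq
import math


def huffman_lengths(probs):
    """Return Huffman codeword lengths for a list of symbol probabilities."""
    # Heap items: (probability, tie-breaker, symbol indices in this subtree)
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for s in s1 + s2:
            lengths[s] += 1          # merging pushes every symbol in both subtrees one level deeper
        heapq.heappush(heap, (p1 + p2, counter, s1 + s2))
        counter += 1
    return lengths


def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)


probs = [0.5, 0.25, 0.125, 0.125]
avg_len = sum(p * l for p, l in zip(probs, huffman_lengths(probs)))
```

For this dyadic distribution the average code length equals the entropy exactly; for other distributions it exceeds the entropy by less than one bit.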

4.1.2 Chaotic neural networks

Chaos refers to the unpredictable, random-like motion of a deterministic dynamical system that arises from its sensitivity to initial conditions. Poole et al. (2016), published at NIPS 2016, combine Riemannian geometry with the dynamic mean-field theory of Sompolinsky et al. (1988) to analyze how signals propagate through random deep networks, obtaining a phase diagram in the plane of weight and bias variances. This work reveals a dynamical phase transition in signal propagation between ordered and chaotic states. Lin and Chen (2009) proposed a chaotic dynamic neural network based on a sinusoidal activation function, which, unlike other models, has strong memory storage and retrieval capabilities. Keup et al. (2021) develop a statistical mean-field theory for random networks to study transient chaos.
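To make the order-to-chaos transition concrete, here is a minimal sketch of the mean-field quantities used in analyses of this kind. It assumes a random fully connected tanh network with weights drawn from \(N(0, \sigma_w^2/N)\) and biases from \(N(0, \sigma_b^2)\). It iterates the length map \(q \leftarrow \sigma_w^2 E[\tanh(\sqrt{q} z)^2] + \sigma_b^2\) to its fixed point \(q^*\) and then computes \(\chi_1 = \sigma_w^2 E[\tanh'(\sqrt{q^*} z)^2]\), with \(\chi_1 < 1\) indicating the ordered phase and \(\chi_1 > 1\) the chaotic phase. The function names and the Gauss-Hermite quadrature are our own choices.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Probabilists' Gauss-Hermite rule integrates against exp(-z^2/2); dividing the
# weights by sqrt(2*pi) turns the weighted sum into an expectation over z ~ N(0, 1).
_Z, _W = hermegauss(80)
_W = _W / np.sqrt(2.0 * np.pi)


def gauss_mean(values):
    return float(np.sum(_W * values))


def fixed_point_q(sigma_w, sigma_b, iters=1000):
    """Iterate the length map q <- sigma_w^2 E[tanh(sqrt(q) z)^2] + sigma_b^2."""
    q = 1.0
    for _ in range(iters):
        q = sigma_w ** 2 * gauss_mean(np.tanh(np.sqrt(q) * _Z) ** 2) + sigma_b ** 2
    return q


def chi1(sigma_w, sigma_b):
    """chi_1 = sigma_w^2 E[tanh'(sqrt(q*) z)^2]; < 1 ordered, > 1 chaotic."""
    q_star = fixed_point_q(sigma_w, sigma_b)
    dphi = 1.0 - np.tanh(np.sqrt(q_star) * _Z) ** 2  # derivative of tanh
    return sigma_w ** 2 * gauss_mean(dphi ** 2)


for sw in (0.5, 1.5, 3.0):
    print(f"sigma_w = {sw}: chi_1 = {chi1(sw, 0.0):.3f}")
```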

4.1.3 From Ising models to Hopfield networks

Phase transitions, in which matter changes from one phase to another, are everywhere in everyday life. For example, liquid water freezes into ice when cooled and evaporates into water vapor when heated (liquid to solid, liquid to gas). According to Landau’s theory, a phase transition must be accompanied by some kind of change in “order”. For example, the molecules of liquid water are arranged haphazardly, whereas in ice they occupy regular, orderly lattice positions (the molecules vibrate near, but do not stray far from, their lattice positions). Crystalline order is thus created during the liquid–solid phase transition, as shown in Fig. 11.

Another important example of a phase transition is the ferromagnetic phase transition: when heated, a magnet (ferromagnetic phase) loses its magnetism and becomes paramagnetic. Conversely, during the ferromagnetic phase transition on cooling (Fig. 12), the spin orientations of the atoms change from random in the paramagnetic phase to aligned along a common direction. The ferromagnetic transition is therefore accompanied by the emergence of spin-orientation order, which produces the macroscopic magnetism (spontaneous magnetization) of the material. According to Landau’s theory, the order parameter changes continuously in a continuous phase transition and discontinuously in a discontinuous one.
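The continuous case can be illustrated with the textbook mean-field (Weiss) approximation of the Ising model. Here the magnetization m plays the role of the order parameter and satisfies \(m = \tanh(T_c m / T)\) with \(T_c = zJ\) (units with \(k_B = 1\), z nearest neighbors). This is an assumed approximation chosen for illustration; for the exact two-dimensional Ising model the critical temperature is lower.

```python
import math


def mean_field_magnetization(t_over_tc, iters=2000):
    """Solve m = tanh(m / (T/Tc)) by fixed-point iteration from the fully ordered state m = 1."""
    m = 1.0
    for _ in range(iters):
        m = math.tanh(m / t_over_tc)
    return m


# m shrinks continuously to zero as T approaches Tc from below and stays zero above Tc
for t in (0.5, 0.9, 0.99, 1.01, 1.5):
    print(f"T/Tc = {t:4.2f}  m = {mean_field_magnetization(t):.4f}")
```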

About a century ago, the mathematical key to the phase-transition problem appeared in the form of the Ising model, the basic model of phase transitions and a “primary version” of the spin glass model. The Ising model (also called the Lenz-Ising model) is one of the most important models in statistical physics. Between 1920 and 1924, Wilhelm Lenz and Ernst Ising proposed it to describe the stochastic process underlying phase transitions of matter. Taking the two-dimensional Ising lattice model as an example, each lattice site i carries a spin \(s_i\) that takes one of two values, \(\pm 1\) (spin up or down), and interacts only with its nearest neighbors with interaction strength J. The energy of the system (the Hamiltonian) is then

\[ H(\mathbf{s}) = -J\sum_{\langle i,j\rangle} s_i s_j, \]

where \(\langle i,j\rangle\) runs over nearest-neighbor pairs. For \(J > 0\), the Hamiltonian is at its minimum when all spins point in the same direction, and the system is in the ferromagnetic phase. At a fixed temperature, the second law of thermodynamics implies that the system seeks the configuration that minimizes its free energy \(F = E - TS\); the Gibbs-Bogoliubov-Feynman inequality can be used to perform variational inference on the Ising model and obtain the best approximate solution within a chosen family of trial distributions.

In 1982, Hopfield, inspired by the Ising model, proposed the Hopfield neural network Hopfield (1982), which can solve a large class of pattern recognition problems and give approximate solutions to a class of combinatorial optimization problems. Its weights play the role of the couplings between neighboring spins in the Ising model, and its neuron updates mimic the spin (cell) updates of the Ising model. The Hopfield network is fully connected, and its units are binary, taking the value -1 or 1 (or 0 or 1). It also provides a model of human memory (an analogy diagram of the Ising model and the Hopfield network is shown in Fig. 13).
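A minimal sketch of the nearest-neighbor Ising energy \(H(\mathbf{s}) = -J\sum_{\langle i,j\rangle} s_i s_j\) on a two-dimensional lattice follows. It assumes NumPy, periodic boundary conditions, and no external field (choices not specified in the original). A brute-force search over a tiny lattice confirms that the fully aligned configuration has the lowest energy.

```python
import itertools

import numpy as np


def ising_energy(spins, J=1.0):
    """H(s) = -J * sum over nearest-neighbor pairs of s_i * s_j on a periodic 2D lattice.

    Each bond is counted once by pairing every site with its right and lower neighbor.
    """
    right = np.roll(spins, -1, axis=1)
    down = np.roll(spins, -1, axis=0)
    return float(-J * np.sum(spins * right + spins * down))


L = 4
aligned = np.ones((L, L))
checkerboard = np.where(np.add.outer(np.arange(L), np.arange(L)) % 2 == 0, 1.0, -1.0)
print(ising_energy(aligned), ising_energy(checkerboard))

# Brute force over all 2^9 configurations of a 3x3 lattice: the minimum is the aligned state
energies = [ising_energy(np.array(c, dtype=float).reshape(3, 3))
            for c in itertools.product([-1, 1], repeat=9)]
print(min(energies))
```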

Hopfield built a new computational method on the idea of an energy function and clarified the relationship between neural networks and dynamical systems. He used nonlinear dynamics to study the properties of this neural network and established a stability criterion for it. He also pointed out that information is stored in the connections between the network's neurons, giving what is now called the Hopfield network. In comparing this feedback network with the Ising model of statistical physics, the up and down directions of a magnetic spin are regarded as the activated and inhibited states of a neuron, and the interaction between spins is regarded as the synaptic weight between neurons. This analogy paved the way for many physical theories, and many physicists, to enter the field of neural networks. In 1984, Hopfield designed a circuit for the Hopfield network model, showing that neurons can be implemented with operational amplifiers and that the connections among all neurons can be simulated by electronic circuits; this is known as the continuous Hopfield network. Using this circuit, Hopfield successfully tackled the traveling salesman problem (TSP), a hard combinatorial optimization problem.
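The energy-function view can be sketched in a few lines. The sketch assumes the standard Hebbian storage rule \(W = \frac{1}{N}\sum_\mu \xi^\mu (\xi^\mu)^T\) with zero diagonal and asynchronous sign updates; these choices and the helper names are ours, not taken from the original works. Because W is symmetric with zero diagonal, each asynchronous update can only lower (or keep) the energy \(E(\mathbf{s}) = -\tfrac{1}{2}\mathbf{s}^T W \mathbf{s}\), so the state settles into a local energy minimum, ideally a stored pattern.

```python
import numpy as np


def hebbian_weights(patterns):
    """W = (1/N) sum_mu xi^mu (xi^mu)^T with zero self-coupling."""
    n = patterns.shape[1]
    W = patterns.T @ patterns / n
    np.fill_diagonal(W, 0.0)
    return W


def energy(W, s):
    return float(-0.5 * s @ W @ s)


def recall(W, s, rng, sweeps=5):
    """Asynchronous updates s_i <- sign(sum_j W_ij s_j), visiting neurons in random order."""
    s = s.copy()
    trace = [energy(W, s)]
    for _ in range(sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1.0 if W[i] @ s >= 0 else -1.0
            trace.append(energy(W, s))
    return s, trace


p1 = np.array([1.0] * 8 + [-1.0] * 8)
p2 = np.array([1.0, -1.0] * 8)
W = hebbian_weights(np.stack([p1, p2]))  # memories are stored in the connections

noisy = p1.copy()
noisy[[0, 1]] *= -1  # corrupt two bits of the first memory
recovered, trace = recall(W, noisy, np.random.default_rng(0))
print(np.array_equal(recovered, p1), trace[0], trace[-1])
```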

Liu et al. (2019) discuss an image encryption algorithm based on the Hopfield chaotic neural network. This algorithm simultaneously scrambles and diffuses color images by utilizing the iterative process of a neural network to modify the pixel values. The encryption process results in highly randomized and complex encrypted images. During decryption, the original image is restored by reversing the iterative process of the Hopfield neural network.

In 2023, Lin et al. (2023) reviewed research on chaotic systems based on memristive Hopfield neural networks. The review explores how chaotic systems are constructed from these networks, which incorporate memristors (resistive elements whose resistance depends on the history of the current through them) and thereby retain resistance changes. It also discusses the properties and applications of the chaotic systems obtained by adjusting network parameters and connection weights. These studies offer new ideas and methods for understanding and applying chaotic systems and image encryption. Ma et al. (2024) proposed a variational autoregressive architecture with a message-passing mechanism, which can effectively exploit the interactions between spin variables. Laydevant et al. (2024) train Ising machines in a supervised manner via the equilibrium propagation algorithm, which has the potential to enhance machine learning applications.

4.1.4 Classic simulated annealing algorithms

Physical annealing process: a solid, initially in an amorphous state, is heated to a temperature high enough for its particles to become disordered, and is then cooled slowly so that it anneals into a crystal (an equilibrium, low-energy state).

The Metropolis criterion on which simulated annealing is built was first proposed by Metropolis et al. In 1983, Kirkpatrick et al. applied it to combinatorial optimization, yielding the classical simulated annealing algorithm Kirkpatrick et al. (1983). The algorithm exploits the similarity between the annealing of solids in physics and general optimization problems. Starting from an initial temperature that is gradually lowered, it searches the solution space, using the probabilistic jumps of the Metropolis criterion (a worse state is accepted with a probability that shrinks as the temperature falls) to escape local optima. In theory, with a sufficiently slow cooling schedule, it converges to the global optimum with probability 1 (Fig. 14).
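A minimal sketch of simulated annealing on a small traveling salesman instance follows. It assumes 2-opt segment reversals as moves, a geometric cooling schedule \(T \leftarrow \alpha T\), and the Metropolis acceptance probability \(e^{-\Delta/T}\). The parameter values and the helper names are illustrative rather than tuned or taken from Kirkpatrick et al.

```python
import math
import random


def tour_length(tour, pts):
    return sum(math.dist(pts[tour[k]], pts[tour[(k + 1) % len(tour)]]) for k in range(len(tour)))


def anneal_tsp(pts, t0=1.0, alpha=0.999, steps=20000, seed=0):
    """Simulated annealing with 2-opt moves, geometric cooling, and the Metropolis criterion."""
    rng = random.Random(seed)
    tour = list(range(len(pts)))
    rng.shuffle(tour)
    cur_len = start_len = tour_length(tour, pts)
    best, best_len = tour[:], cur_len
    t = t0
    for _ in range(steps):
        i, j = sorted(rng.sample(range(len(pts)), 2))
        cand = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]  # reverse one segment (2-opt)
        cand_len = tour_length(cand, pts)
        delta = cand_len - cur_len
        # Metropolis: always accept improvements, accept worse tours with prob exp(-delta / T)
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            tour, cur_len = cand, cand_len
            if cur_len < best_len:
                best, best_len = tour[:], cur_len
        t = max(t * alpha, 1e-9)  # cooling schedule
    return best, best_len, start_len


# Cities on a circle: the optimal tour is the polygon perimeter, which makes results easy to check
n = 10
pts = [(math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n)) for k in range(n)]
best, best_len, start_len = anneal_tsp(pts)
optimum = n * 2 * math.sin(math.pi / n)
print(f"start {start_len:.3f} -> best {best_len:.3f} (optimum {optimum:.3f})")
```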

Importance Sampling (IS) is an effective variance reduction technique for rare events, as described in the seminal work of Marshall (1954). Its core idea is to rewrite the desired mathematical expectation as a weighted average over random samples drawn from a simpler proposal distribution, each sample being weighted by the ratio of the target density to the proposal density.
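As a small worked example (ours, not from Marshall 1954), consider the rare-event probability \(P(X > 4)\) for \(X \sim N(0, 1)\), which is about \(3.2 \times 10^{-5}\). Plain Monte Carlo needs millions of samples to see even a handful of hits, whereas shifting the proposal to \(N(4, 1)\) and reweighting makes most samples informative. The sketch assumes NumPy; the function names are illustrative.

```python
import math

import numpy as np


def tail_prob_naive(a, n, rng):
    """Plain Monte Carlo estimate of P(X > a), X ~ N(0, 1)."""
    return float(np.mean(rng.standard_normal(n) > a))


def tail_prob_is(a, n, rng):
    """Importance sampling with proposal q = N(a, 1).

    Weight p(x)/q(x) = exp(-x^2/2) / exp(-(x - a)^2/2) = exp(-a*x + a^2/2).
    """
    x = rng.normal(loc=a, scale=1.0, size=n)
    w = np.exp(-a * x + 0.5 * a * a)
    return float(np.mean((x > a) * w))


a, n = 4.0, 100_000
exact = 0.5 * math.erfc(a / math.sqrt(2.0))
rng = np.random.default_rng(0)
naive = tail_prob_naive(a, n, rng)
is_est = tail_prob_is(a, n, rng)
print(f"exact={exact:.3e}  naive={naive:.3e}  IS={is_est:.3e}")
```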

Inspired by the idea of annealing, Radford Neal proposed Annealed Importance Sampling (AIS) Salakhutdinov and Murray (2008) to address the high variance of IS when the proposal distribution is far from the target. AIS, together with its extension Hamiltonian Annealed Importance Sampling (HAIS) Sohl-Dickstein and Culpepper (2012), generalizes IS: both compute unbiased estimates of expectations by reweighting samples drawn from tractable distributions.

In AIS, a sequence of intermediate distributions forms a bridge between a tractable initial distribution and the distribution of interest, and the method can be viewed as importance sampling over forward and reverse Markov chains that traverse this bridge. This typically yields estimates with much lower variance than IS alone, improving accuracy and efficiency when estimating expectations and normalizing constants in challenging problems, including those involving rare events.
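A minimal one-dimensional AIS sketch follows, assuming NumPy, a geometric bridge \(f_\beta = f_0^{1-\beta} f_1^{\beta}\) between an unnormalized standard normal \(f_0\) and an unnormalized \(N(3, 0.5^2)\) target \(f_1\), and random-walk Metropolis transitions at each intermediate temperature. The mean importance weight estimates the ratio of normalizing constants \(Z_1/Z_0\), which is exactly 0.5 here, so the result can be checked. All settings are illustrative.

```python
import numpy as np


def log_f0(x):
    """Unnormalized N(0, 1); Z0 = sqrt(2*pi)."""
    return -0.5 * x ** 2


def log_f1(x):
    """Unnormalized N(3, 0.5^2) target; Z1 = 0.5 * sqrt(2*pi)."""
    return -0.5 * ((x - 3.0) / 0.5) ** 2


def log_f(x, beta):
    """Geometric bridge between f0 (beta = 0) and f1 (beta = 1)."""
    return (1.0 - beta) * log_f0(x) + beta * log_f1(x)


def ais_log_ratio(n_chains=2000, n_temps=500, mh_steps=3, step=0.5, seed=0):
    """Annealed importance sampling estimate of log(Z1 / Z0)."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(0.0, 1.0, n_temps + 1)
    x = rng.standard_normal(n_chains)  # exact samples from the initial distribution p0
    log_w = np.zeros(n_chains)
    for b_prev, b in zip(betas[:-1], betas[1:]):
        # Importance-weight increment for moving from temperature b_prev to b
        log_w += log_f(x, b) - log_f(x, b_prev)
        # Random-walk Metropolis moves that leave the intermediate distribution p_b invariant
        for _ in range(mh_steps):
            prop = x + step * rng.standard_normal(n_chains)
            accept = np.log(rng.random(n_chains)) < log_f(prop, b) - log_f(x, b)
            x = np.where(accept, prop, x)
    # log(mean(exp(log_w))) computed stably
    m = log_w.max()
    return float(m + np.log(np.mean(np.exp(log_w - m))))


ratio = float(np.exp(ais_log_ratio()))
print(f"AIS estimate of Z1/Z0: {ratio:.4f} (exact value: 0.5)")
```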

Later came Ranzato’s mcRBM model (2010) Bengio et al. (2013), Sohl-Dickstein’s non-equilibrium diffusion model (2015) Sohl-Dickstein et al. (2015), and Menick’s self-scaling pixel network autoregressive model (2016) Oord et al. (2016). To adapt network null models to weighted network inference, Milisav et al. (2024) proposed a simulated annealing procedure that generates random networks preserving the strength sequence. Simulated annealing is widely used as a heuristic for finding good approximate solutions to NP-hard combinatorial optimization problems, such as the Traveling Salesman Problem, the Max-Cut Problem, the 0-1 Knapsack Problem, and the Graph Coloring Problem.

4.1.5 Boltzmann machine neural networks

Hinton proposed the Boltzmann Machine (BM) in 1985. In physics, the BM is often referred to as the inverse Ising model, since learning its weights from data amounts to inferring the couplings of an Ising model. A BM is a special form of log-linear Markov random field (MRF), that is, its energy function is linear in its free parameters. It introduces statistical probability into the state changes of neurons: the equilibrium state of the network obeys the Boltzmann distribution, and the network operates on the basis of simulated annealing (Fig. 15), which makes it a good global search method that is widely used within a certain range of problems. See Nguyen et al. (2017) for recent research on Boltzmann machines.
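In the usual notation (a standard form, not written out in the original), a BM with binary units \(s_i\), symmetric weights \(w_{ij}\), biases \(b_i\), and temperature T has the energy and equilibrium distribution

\[ E(\mathbf{s}) = -\sum_{i<j} w_{ij} s_i s_j - \sum_i b_i s_i, \qquad P(\mathbf{s}) = \frac{e^{-E(\mathbf{s})/T}}{Z}, \qquad Z = \sum_{\mathbf{s}'} e^{-E(\mathbf{s}')/T}. \]

Since \(\log P(\mathbf{s}) = -E(\mathbf{s})/T - \log Z\) and E is linear in the parameters \(w_{ij}\) and \(b_i\), this is the log-linear MRF form. With \(s_i = \pm 1\), \(b_i = 0\), and \(w_{ij} = J\) for nearest neighbors (zero otherwise), E reduces to the Ising Hamiltonian \(-J\sum_{\langle i,j\rangle} s_i s_j\).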

A Restricted Boltzmann Machine (RBM) is a type of Boltzmann Machine (BM) with a specific structure and interaction pattern between its neurons. An RBM has two layers of units, a visible layer and a hidden layer, which interact through effective couplings (the weights). Unlike a general BM, in which all neurons can interact with each other, an RBM restricts interactions to those between visible and hidden units, with no visible-visible or hidden-hidden connections. As a result, the hidden units are conditionally independent given the visible units (and vice versa), which makes sampling and learning much more tractable.

The goal of an RBM is to adjust its parameters so as to maximize the likelihood of the observed data. By learning the weights of the visible-hidden connections and the biases of the units, the RBM captures the underlying patterns and dependencies in the data, which in turn lets it model and generate new data instances similar to those it was trained on.
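In practice the exact likelihood gradient is intractable, and it is commonly approximated with Hinton's contrastive divergence (CD). The following is a minimal CD-1 sketch for a binary RBM with energy \(E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^T\mathbf{v} - \mathbf{c}^T\mathbf{h} - \mathbf{v}^T W \mathbf{h}\), assuming NumPy. The class name, toy data, learning rate, and epoch count are illustrative choices. The mean-field reconstruction error serves only as a rough progress signal; it is not the likelihood itself.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Binary RBM with energy E(v, h) = -b.v - c.h - v.W.h."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))
        self.b = np.zeros(n_visible)  # visible biases
        self.c = np.zeros(n_hidden)   # hidden biases
        self.rng = rng

    def p_h(self, v):
        # Hidden units are conditionally independent given v
        return sigmoid(v @ self.W + self.c)

    def p_v(self, h):
        # Visible units are conditionally independent given h
        return sigmoid(h @ self.W.T + self.b)

    def cd1_step(self, v0, lr):
        """One contrastive-divergence (CD-1) update on a batch v0."""
        ph0 = self.p_h(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        v1 = (self.rng.random(v0.shape) < self.p_v(h0)).astype(float)
        ph1 = self.p_h(v1)
        n = v0.shape[0]
        # Data-driven statistics minus one-step reconstruction statistics
        self.W += lr * (v0.T @ ph0 - v1.T @ ph1) / n
        self.b += lr * (v0 - v1).mean(axis=0)
        self.c += lr * (ph0 - ph1).mean(axis=0)

    def reconstruction_error(self, v):
        """Mean squared error of a deterministic (mean-field) reconstruction."""
        return float(np.mean((v - self.p_v(self.p_h(v))) ** 2))


rng = np.random.default_rng(0)
data = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 10, dtype=float)
rbm = RBM(n_visible=6, n_hidden=3, rng=rng)
before = rbm.reconstruction_error(data)
for _ in range(1000):
    rbm.cd1_step(data, lr=0.1)
after = rbm.reconstruction_error(data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
```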

Many studies in physics shed light on how RBMs work and what structures they can learn. Since Hinton proposed contrastive divergence (CD), a fast learning algorithm for RBMs, many RBM variants have been proposed to enhance their expressive power and to take the specific structure of the data into account (Bengio 2009; Ranzato et al. 2010; Ranzato and Hinton 2010). The Convolutional Restricted Boltzmann Machine (CRBM) Lee et al. (2009) was an important breakthrough: it uses filters and image convolution to share weights, reducing the number of model parameters. Because the hidden representations learned by a standard RBM are generally not sparse, researchers borrowed ideas from sparse coding and added a sparsity penalty term to the log-likelihood of the original RBM, yielding the sparse RBM model Lee et al. (2007), the sparse group restricted Boltzmann machine (SGRBM) model Salakhutdinov et al. (2007), the LogSumRBM model Ji et al. (2014), and others. In Cocco et al. (2018) and Tubiana and Monasson (2017), the authors investigate a stochastic RBM with random, sparse, and unlearned weights. Surprisingly, they find that even an RBM with a single hidden layer can capture compositional structure, which highlights the expressive power of RBMs in representing complex data.

Additionally, the relationship between RBMs with random weights and the Hopfield model is explored in Barra et al. (2018) and Mézard (2017). These studies demonstrate the connections and similarities between RBMs and the Hopfield model, shedding light on the underlying mechanisms and properties of both models.
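One standard way to see this connection is to integrate out the hidden layer. The derivation below is sketched under stated assumptions: binary visible units \(\sigma_i = \pm 1\) (i = 1, ..., N), real-valued Gaussian hidden units \(z_\mu\), weights \(\sqrt{\beta/N}\,\xi_i^\mu\), and no visible biases. Using \(\int dz\, e^{-z^2/2 + a z} = \sqrt{2\pi}\, e^{a^2/2}\) for each hidden unit,

\[ P(\boldsymbol{\sigma}) \propto \int \prod_\mu dz_\mu \exp\Big(-\sum_\mu \frac{z_\mu^2}{2} + \sqrt{\tfrac{\beta}{N}} \sum_{\mu,i} \xi_i^\mu \sigma_i z_\mu\Big) \propto \exp\Big(\frac{\beta}{2N} \sum_\mu \Big(\sum_i \xi_i^\mu \sigma_i\Big)^2\Big) = e^{-\beta H(\boldsymbol{\sigma})}, \]

\[ H(\boldsymbol{\sigma}) = -\frac{1}{2}\sum_{i,j} J_{ij}\sigma_i\sigma_j, \qquad J_{ij} = \frac{1}{N}\sum_\mu \xi_i^\mu \xi_j^\mu. \]

The i = j terms only add a constant because \(\sigma_i^2 = 1\). The marginal distribution of the visible units is therefore a Hopfield model with Hebbian couplings built from the RBM weights, with each hidden unit effectively storing one pattern \(\xi^\mu\).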

Overall, these works provide insights into the capabilities of RBMs with random weights in capturing compositional structures and their connections to the Hopfield model. Such research enhances our understanding of RBMs and their potential applications in various domains.

4.2 Designing neural networks with energy models

In physics, the steady (equilibrium) state of a system corresponds to a state of minimum potential energy. Transplanting this idea into neural networks, one defines an energy function whose minima correspond to the stable states of the network.

In 2006, LeCun et al. reviewed energy-based neural network models and their applications LeCun et al. (2006). When the model reaches the optimal solution, the observed data lie in the lowest-energy states; that is, learning seeks to lower the energy of positive (observed) data and to raise the energy of negative (unobserved) data. Given the observed variables, inference consists of finding the configuration of the remaining variables (for example, hidden or output variables) that minimizes the energy; learning consists of finding an energy function that assigns lower energy to correct configurations of these variables than to incorrect ones.
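A minimal sketch of these two tasks uses a hypothetical toy model with a linear energy \(E(x, y) = -\mathbf{w}_y \cdot x\) over three discrete labels y. Inference picks the label with the lowest energy. Learning uses the perceptron loss \(E(x, y) - \min_{y'} E(x, y')\), one of the losses discussed by LeCun et al. (2006), which lowers the energy of the correct answer and raises the energy of the offending lowest-energy answer. The data and names are invented for illustration.

```python
import numpy as np


def energies(W, x):
    """Energy of every label y for input x: E(x, y) = -W[y] . x."""
    return -(W @ x)


def infer(W, x):
    """Inference: the answer is the label with the lowest energy."""
    return int(np.argmin(energies(W, x)))


def train_perceptron_loss(X, Y, n_labels, epochs=50, lr=1.0):
    W = np.zeros((n_labels, X.shape[1]))
    for _ in range(epochs):
        for x, y in zip(X, Y):
            y_hat = infer(W, x)
            if y_hat != y:
                # Gradient step on E(x, y) - E(x, y_hat): lower the energy of the correct label...
                W[y] += lr * x
                # ...and raise the energy of the offending lowest-energy label
                W[y_hat] -= lr * x
    return W


X = np.array([[3.0, 0.0], [4.0, 1.0], [3.5, -1.0],
              [-3.0, 0.0], [-4.0, 1.0], [-3.5, -1.0],
              [0.0, 3.0], [1.0, 4.0], [-1.0, 3.5]])
Y = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])
W = train_perceptron_loss(X, Y, n_labels=3)
accuracy = float(np.mean([infer(W, x) == y for x, y in zip(X, Y)]))
print(accuracy)
```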

Normalizing probability distributions is difficult in high-dimensional spaces, which has led to interesting approaches to generative modeling of data Pernkopf et al. (2014). In some models, such as normalizing flows (Dinh et al. 2014, 2016; Rezende et al. 2016), the normalization can still be computed analytically; these interesting methods are discussed in Wang (2018).
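As a minimal illustration of why normalization stays tractable in flow models, here is a two-dimensional affine coupling layer in the spirit of Dinh et al. The scale and shift functions s and t are fixed, hypothetical choices (in a real model they are neural networks), and the base distribution is assumed to be a standard normal. Because the Jacobian is triangular with determinant \(e^{s(x_1)}\), the change-of-variables formula gives the exact log density \(\log p(y) = \log N(x; 0, I) - s(x_1)\). A numerical integral over a grid confirms that the density integrates to about 1 without computing any partition function.

```python
import numpy as np


def s(x1):
    """Hypothetical log-scale function; a neural network in a real flow."""
    return 0.5 * np.tanh(x1)


def t(x1):
    """Hypothetical shift function."""
    return x1 ** 2


def forward(x1, x2):
    """Affine coupling: y1 = x1, y2 = x2 * exp(s(x1)) + t(x1)."""
    return x1, x2 * np.exp(s(x1)) + t(x1)


def inverse(y1, y2):
    """Analytic inverse of the coupling layer."""
    return y1, (y2 - t(y1)) * np.exp(-s(y1))


def log_density(y1, y2):
    """Exact log p(y) = log N(x; 0, I) - log|det dy/dx|, where log|det dy/dx| = s(x1)."""
    x1, x2 = inverse(y1, y2)
    log_base = -0.5 * (x1 ** 2 + x2 ** 2) - np.log(2.0 * np.pi)
    return log_base - s(x1)


# Riemann sum of p(y) on a grid wide enough to hold essentially all of the probability mass
h = 0.05
Y1, Y2 = np.meshgrid(np.arange(-8.0, 8.0, h), np.arange(-10.0, 70.0, h), indexing="ij")
total = float(np.exp(log_density(Y1, Y2)).sum() * h * h)
print(total)
```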

4.2.1 Generative adversarial networks (GANs)