Abstract

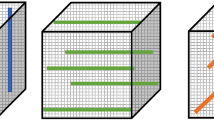

A popular way to compute the CANDECOMP/PARAFAC (CP) decomposition of a tensor is to transform the problem into a sequence of overdetermined least squares subproblems whose coefficient matrices have Khatri-Rao product (KRP) structure built from the factor matrices. In this work, we propose a mini-batch stochastic gradient descent method with importance sampling for these structured least squares subproblems, in which the factor matrix to be updated is chosen at random. Two sampling strategies are provided; both avoid forming the full KRP explicitly and avoid computing the corresponding sampling probabilities directly. An adaptive step-size version of the method is also given. We establish theoretical properties of the proposed method and report comprehensive numerical experiments. Results on synthetic and real data show that the method is effective and efficient and that, for unevenly distributed data, it outperforms its counterpart in the literature.
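To make the idea concrete, the following is a minimal NumPy sketch of one importance-weighted mini-batch gradient for the mode-1 subproblem of a third-order tensor. It is an illustration under stated assumptions, not the method of the paper: the function names `sample_krp_rows` and `sampled_gradient` are hypothetical, and the sampling distribution (product of squared row norms of the two factors) is chosen only because it can be drawn factor by factor without forming the KRP; the two strategies proposed in the paper may differ.

```python
import numpy as np

def sample_krp_rows(B, C, batch, rng):
    """Draw `batch` row indices of the KRP of C and B without forming it.

    Assumption: rows are sampled with probability proportional to the
    product of the squared row norms of B and C (all rows nonzero).
    """
    pB = np.sum(B**2, axis=1)
    pB = pB / pB.sum()
    pC = np.sum(C**2, axis=1)
    pC = pC / pC.sum()
    js = rng.choice(B.shape[0], size=batch, p=pB)
    ks = rng.choice(C.shape[0], size=batch, p=pC)
    return js, ks, pB[js] * pC[ks]  # joint probability of each pair (j, k)

def sampled_gradient(X, A, B, C, js, ks, probs):
    """Importance-weighted estimate of the gradient of
    0.5 * ||X_(1) - A H^T||_F^2 with respect to A, where H is the KRP
    of C and B and X_(1) is the mode-1 unfolding of X."""
    H_S = C[ks] * B[js]                  # sampled KRP rows, shape (batch, R)
    X_S = X[:, js, ks]                   # matching columns of X_(1), shape (I, batch)
    resid = A @ H_S.T - X_S              # residual on the sampled columns
    weights = 1.0 / (len(js) * probs)    # reweighting keeps the estimate unbiased
    return (resid * weights) @ H_S       # shape (I, R)

# One stochastic gradient step on random data (fixed step size, for illustration).
rng = np.random.default_rng(0)
I, J, K, R = 6, 5, 4, 3
X = rng.standard_normal((I, J, K))
A, B, C = (rng.standard_normal((n, R)) for n in (I, J, K))
js, ks, probs = sample_krp_rows(B, C, batch=8, rng=rng)
A_new = A - 1e-3 * sampled_gradient(X, A, B, C, js, ks, probs)
```

Only the sampled rows of the KRP are ever materialized, so the per-step cost depends on the batch size rather than on the J·K rows of the full product. In a block-randomized scheme, the mode to update would itself be drawn at random before this step.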

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

The authors would like to thank the editor and the anonymous reviewers for their detailed comments and helpful suggestions, which considerably improved the quality of the paper.

Funding

This work was supported by the National Natural Science Foundation of China (No. 11671060) and the Natural Science Foundation Project of CQ CSTC (No. cstc2019jcyj-msxmX0267).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Communicated by: Ivan Oseledets

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, Y., Li, H. A block-randomized stochastic method with importance sampling for CP tensor decomposition. Adv Comput Math 50, 22 (2024). https://doi.org/10.1007/s10444-024-10119-6

DOI: https://doi.org/10.1007/s10444-024-10119-6

Keywords

- CP decomposition

- Importance sampling

- Stochastic gradient descent

- Khatri-Rao product

- Randomized algorithm

- Adaptive algorithm