Abstract

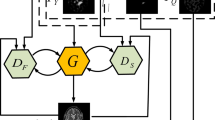

Although medical imaging is frequently used to diagnose diseases, in complex diagnostic situations specialists typically need to examine image information from several modalities. A composite multimodal medical image can help clinicians diagnose diseases quickly and accurately. However, the fused images produced by many medical image fusion algorithms often fail to precisely retain the functional and structural information of the source images. To improve fusion quality, this work develops an end-to-end GAN-based model (U-Patch GAN) for the self-supervised fusion of multimodal brain images. The model uses the classical U-net as the generator and a dual adversarial mechanism based on the Markovian discriminator (PatchGAN) to strengthen the generator's attention to high-frequency information. To ensure that the network satisfies Lipschitz continuity, we apply spectral normalization to each layer of the network. We also propose improved adversarial and feature losses (feature matching loss and VGG-16 perceptual loss) based on the Frobenius norm (F-norm), which significantly enhance the quality of the fused images. We conducted extensive experiments on public data sets. First, we studied the clinical usefulness of the fused images. We then confirmed the model's performance on single-slice and continuous-slice images by comparison with six other mainstream fusion approaches. Finally, we verified the effectiveness of the adversarial loss and feature loss.
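As an illustration of two ingredients named in the abstract, the following is a minimal pure-Python sketch of spectral normalization (dividing a weight matrix by an estimate of its largest singular value, which bounds the layer's Lipschitz constant by roughly 1) and of the Frobenius norm. It is not the authors' implementation: the function names, the power-iteration estimator, the iteration count, and the use of plain nested lists are all assumptions made for illustration.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def transpose(W):
    """Return the transpose of W as a list of rows."""
    return [list(col) for col in zip(*W)]


def l2_normalize(v, eps=1e-12):
    """Scale v to (approximately) unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]


def spectral_norm(W, n_iters=100, seed=0):
    """Estimate the largest singular value of W by power iteration.

    Hypothetical helper: n_iters and seed are illustrative choices.
    """
    rng = random.Random(seed)
    Wt = transpose(W)
    u = l2_normalize([rng.gauss(0.0, 1.0) for _ in range(len(W))])
    for _ in range(n_iters):
        v = l2_normalize(matvec(Wt, u))  # right singular vector estimate
        u = l2_normalize(matvec(W, v))   # left singular vector estimate
    # sigma is approximately u^T W v
    return sum(a * b for a, b in zip(u, matvec(W, v)))


def spectral_normalize(W, n_iters=100):
    """Return W / sigma(W); assumes W is not the zero matrix."""
    sigma = spectral_norm(W, n_iters)
    return [[w / sigma for w in row] for row in W]


def frobenius_norm(A):
    """Square root of the sum of squared entries of A."""
    return math.sqrt(sum(x * x for row in A for x in row))
```

For example, `spectral_norm([[3.0, 0.0], [0.0, 1.0]])` is close to 3, and the matrix returned by `spectral_normalize` has a largest singular value close to 1. Deep learning frameworks usually run only one or a few power-iteration steps per training update and reuse the vector `u` between updates; the larger fixed iteration count here simply keeps the sketch self-contained.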

Acknowledgements

This work was supported by the Henan Science and Technology Research Project (No. 222102210309); the National Natural Science Foundation of China (Nos. 62106067 and 62106068); the Natural Science Project of Henan Education Department, China (No. 21A520010); the Natural Science Project of Zhengzhou Science and Technology Bureau, China (No. 21ZZXTCX21); and the Innovative Funds Plan of Henan University of Technology.

Funding

This work was supported by the Henan Science and Technology Research Project (No. 222102210309); the National Natural Science Foundation of China (Nos. 62106067 and 62106068); the Natural Science Project of Henan Education Department, China (No. 21A520010); the Natural Science Project of Zhengzhou Science and Technology Bureau, China (No. 21ZZXTCX21); and the Innovative Funds Plan of Henan University of Technology (2021ZKCJ14).

Author information

Contributions

Chao FAN was responsible for revising the paper and completing the experiments; Hao LIN was responsible for writing the paper and improving the experiments; FAN and LIN conceived the idea of the paper; Yingying QIU was responsible for organizing the materials and revising the format of the paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fan, C., Lin, H. & Qiu, Y. U-Patch GAN: A Medical Image Fusion Method Based on GAN. J Digit Imaging 36, 339–355 (2023). https://doi.org/10.1007/s10278-022-00696-7

DOI: https://doi.org/10.1007/s10278-022-00696-7