Abstract

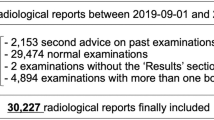

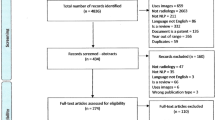

The ideal radiology report reduces diagnostic uncertainty while avoiding ambiguity whenever possible. The purpose of this study was to characterize the use of uncertainty terms in radiology reports at a single institution and to compare the use of these terms across imaging modalities, anatomic sections, patient characteristics, and radiologist characteristics. We hypothesized that the use of uncertainty terms would vary among radiologists and between subspecialties within radiology, and that the length of a report's impression would predict the use of uncertainty terms. Finally, we hypothesized that human readers would often interpret the use of uncertainty terms as “hedging.” To test these hypotheses, we applied a natural language processing (NLP) algorithm, created to detect a published set of uncertainty terms, to count the number of uncertainty terms in each radiology report. All 642,569 radiology report impressions from 171 reporting radiologists from 2011 through 2015 were collected. For validation, two radiologists without knowledge of the software algorithm reviewed report impressions and were asked to determine whether each report was “uncertain” or “hedging.” The presence of 1 or more uncertainty terms was then compared with the human readers’ assessments. There were significant differences in the proportion of reports containing uncertainty terms across patient admission status and across anatomic imaging subsections. Reports with uncertainty were significantly longer than those without, although report length did not differ significantly between subspecialties or modalities. There were no significant differences in rates of uncertainty by experience of the attending radiologist. With reader 1 as the gold standard, accuracy was 0.91, sensitivity was 0.92, specificity was 0.90, and precision was 0.88, with an F1-score of 0.90. With reader 2 as the gold standard, accuracy was 0.84, sensitivity was 0.88, specificity was 0.82, and precision was 0.68, with an F1-score of 0.77. Substantial variability exists among radiologists and subspecialties regarding the use of uncertainty terms, and this variability cannot be explained by years of radiologist experience or by differences in the proportions of specific modalities. Furthermore, detection of uncertainty terms demonstrates good test characteristics for predicting human readers’ assessments of uncertainty.
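The abstract describes the detection and validation steps only at a high level. The sketch below is a minimal Python illustration of that pipeline under stated assumptions: an impression is flagged as uncertain when it contains 1 or more terms from a term list (plain case-insensitive phrase matching, with no negation handling), and the flags are then scored against one reader's labels treated as the gold standard. The term list, the example impressions, the reader labels, and the names `count_uncertainty_terms`, `is_uncertain`, `score`, and `f1_score` are all hypothetical; this is not the authors' software or their published term set.

```python
import re
from dataclasses import dataclass
from typing import Sequence

# HYPOTHETICAL, illustrative subset of hedging phrases. The study used a
# published set of uncertainty terms that is not reproduced here.
UNCERTAINTY_TERMS = [
    "possibly",
    "probably",
    "likely",
    "may represent",
    "suggestive of",
    "suspicious for",
    "cannot be excluded",
    "questionable",
]

# One case-insensitive pattern with word boundaries, longest phrases first so
# multi-word terms win over shorter overlapping alternatives. The word
# boundaries keep "likely" from matching inside "unlikely".
_PATTERN = re.compile(
    r"\b(?:"
    + "|".join(re.escape(t) for t in sorted(UNCERTAINTY_TERMS, key=len, reverse=True))
    + r")\b",
    re.IGNORECASE,
)


def count_uncertainty_terms(impression: str) -> int:
    """Count non-overlapping term matches in one report impression."""
    return len(_PATTERN.findall(impression))


def is_uncertain(impression: str) -> bool:
    """Flag an impression that contains 1 or more uncertainty terms."""
    return count_uncertainty_terms(impression) >= 1


def _div(num: int, den: int) -> float:
    # Return 0.0 instead of raising when a denominator is zero.
    return num / den if den else 0.0


def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall)."""
    total = precision + sensitivity
    return 2 * precision * sensitivity / total if total else 0.0


@dataclass
class Metrics:
    accuracy: float
    sensitivity: float
    specificity: float
    precision: float
    f1: float


def score(predicted: Sequence[bool], reference: Sequence[bool]) -> Metrics:
    """Compare algorithm flags against one reader treated as the gold standard."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)
    tn = sum(1 for p, r in pairs if not p and not r)
    fp = sum(1 for p, r in pairs if p and not r)
    fn = sum(1 for p, r in pairs if not p and r)
    precision = _div(tp, tp + fp)
    sensitivity = _div(tp, tp + fn)
    return Metrics(
        accuracy=_div(tp + tn, len(pairs)),
        sensitivity=sensitivity,
        specificity=_div(tn, tn + fp),
        precision=precision,
        f1=f1_score(precision, sensitivity),
    )


if __name__ == "__main__":
    # Made-up impressions and reader labels, for illustration only.
    impressions = [
        "No acute intracranial abnormality.",
        "Findings may represent early pneumonia.",
        "Small nodule, likely benign.",
        "Fracture of the distal radius.",
    ]
    flags = [is_uncertain(text) for text in impressions]  # [False, True, True, False]
    reader = [False, True, False, False]
    print(score(flags, reader))
    # Applying the F1 formula to the abstract's reported precision and
    # sensitivity reproduces its F1-scores (0.9 and 0.77) after rounding.
    print(round(f1_score(0.88, 0.92), 2), round(f1_score(0.68, 0.88), 2))
```

The word-boundary pattern is a deliberate simplification: it avoids substring hits such as "likely" inside "unlikely", but it does not distinguish a genuine hedge from a negated or quoted phrase, which a fuller NLP system may need to handle.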



Funding

Dr. Callen was supported by the National Institutes of Health (NIBIB) T32 Training Grant, T32EB001631.


Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

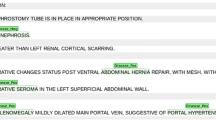

Appendix



About this article

Cite this article

Callen, A.L., Dupont, S.M., Price, A. et al. Between Always and Never: Evaluating Uncertainty in Radiology Reports Using Natural Language Processing. J Digit Imaging 33, 1194–1201 (2020). https://doi.org/10.1007/s10278-020-00379-1


DOI: https://doi.org/10.1007/s10278-020-00379-1