Abstract

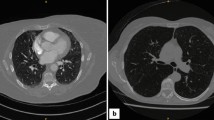

The objective of this study was to create a mutual conversion system between contrast-enhanced computed tomography (CECT) and non-CECT images of the internal jugular region using a cycle generative adversarial network (cycleGAN). Image patches were cropped from CT images of 25 patients who underwent both CECT and non-CECT imaging. Using the cycleGAN, synthetic CECT and non-CECT images were generated from the original non-CECT and CECT images, respectively. The peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM) were calculated. Visual Turing tests were used to determine whether oral and maxillofacial radiologists could distinguish synthetic from original images, and receiver operating characteristic (ROC) analyses were used to assess the radiologists' performance in discriminating lymph nodes from blood vessels. The PSNR was higher for non-CECT images than for CECT images, whereas the SSIM was higher for CECT images. The Visual Turing test indicated higher perceptual quality for CECT images. The area under the ROC curve (AUC) indicated almost perfect performance for synthetic as well as original CECT images. In conclusion, synthetic CECT images created by cycleGAN appear to have the potential to provide useful information for patients who cannot receive contrast enhancement.
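The abstract reports three quantitative measures: PSNR, SSIM, and the AUC of the observers' ROC analysis. The pure-Python sketch below shows how each measure is conventionally computed. It is not the authors' code, and several details are assumptions: patches are 2-D lists already scaled to a known intensity range (`data_range`), SSIM uses a uniform 7×7 window with K1 = 0.01 and K2 = 0.03 instead of the 11×11 Gaussian window of the original SSIM formulation, and the observer ratings are hypothetical ordinal confidence scores (higher = more likely a lymph node). The AUC is computed as the Mann–Whitney statistic, which equals the area under the empirical ROC curve.

```python
import math
from typing import List, Sequence

Image = List[List[float]]  # a 2-D image patch stored row by row


def psnr(reference: Image, test: Image, data_range: float) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    diffs = [(r - t) ** 2
             for row_r, row_t in zip(reference, test)
             for r, t in zip(row_r, row_t)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical patches: PSNR is unbounded
    return 10.0 * math.log10(data_range ** 2 / mse)


def _window_ssim(x: Sequence[float], y: Sequence[float], c1: float, c2: float) -> float:
    """SSIM of one window from its means, variances, and covariance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def ssim(reference: Image, test: Image, data_range: float, win: int = 7) -> float:
    """Mean SSIM over every win x win window (assumed uniform window)."""
    c1 = (0.01 * data_range) ** 2  # stabilizer for the luminance term (K1 = 0.01)
    c2 = (0.03 * data_range) ** 2  # stabilizer for the contrast/structure term (K2 = 0.03)
    h, w = len(reference), len(reference[0])
    if h < win or w < win:
        raise ValueError("patch must be at least win x win pixels")
    scores = []
    for i in range(h - win + 1):
        for j in range(w - win + 1):
            x = [reference[i + di][j + dj] for di in range(win) for dj in range(win)]
            y = [test[i + di][j + dj] for di in range(win) for dj in range(win)]
            scores.append(_window_ssim(x, y, c1, c2))
    return sum(scores) / len(scores)


def roc_auc(positive_scores: Sequence[float], negative_scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count 1/2)."""
    if not positive_scores or not negative_scores:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positive_scores:
        for q in negative_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positive_scores) * len(negative_scores))


if __name__ == "__main__":
    # Hypothetical 8x8 patch scaled to [0, 1] and a slightly perturbed "synthetic" copy.
    ref = [[(i * 8 + j) / 63 for j in range(8)] for i in range(8)]
    syn = [[min(1.0, v + 0.05 * ((i + j) % 2)) for j, v in enumerate(row)]
           for i, row in enumerate(ref)]
    print(f"PSNR = {psnr(ref, syn, 1.0):.2f} dB, SSIM = {ssim(ref, syn, 1.0):.3f}")
    # Hypothetical 5-point ratings: lymph nodes (positives) vs blood vessels (negatives).
    print(f"AUC = {roc_auc([5, 4, 4, 3], [1, 2, 3, 2]):.3f}")
```

Pure Python keeps the sketch dependency-free. For full CT datasets, library routines such as scikit-image's `structural_similarity` and scikit-learn's `roc_auc_score` are faster, although their default window and covariance settings may differ from this sketch, so absolute SSIM values are not directly comparable across implementations.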


Data availability

The dataset used in the current study is available from the corresponding author on reasonable request.


Acknowledgements

We thank Helen Jeays, BDSc AE, from Edanz (https://jp.edanz.com/ac) for editing a draft of this manuscript.

Author information


Contributions

Motoki Fukuda conducted all experiments involving the deep learning method, compiled the results, and wrote the manuscript. Yoshiko Ariji, Shinya Kotaki, and Michihito Nozawa evaluated the CT images as observers. Chiaki Kuwada, Yoshitaka Kise, and Eiichiro Ariji supervised the entire experiment and provided important guidance and advice. All authors reviewed the manuscript.


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest, including grants, patent licensing arrangements, consultancies, stock or other equity ownership, advisory board memberships, or payments for conducting or publicizing the study.

Human rights

This study was approved by the ethics committee of Aichi Gakuin University (approval no. 586). All procedures were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the 1975 Declaration of Helsinki, as revised in 2008. Because this was a retrospective study, informed consent could not be obtained from all patients; instead, the purposes and methods of the study were made public, and patients were given the opportunity to opt out.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fukuda, M., Kotaki, S., Nozawa, M. et al. A cycle generative adversarial network for generating synthetic contrast-enhanced computed tomographic images from non-contrast images in the internal jugular lymph node-bearing area. Odontology (2024). https://doi.org/10.1007/s10266-024-00933-1


DOI: https://doi.org/10.1007/s10266-024-00933-1