Abstract

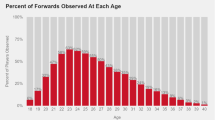

In professional tennis, it is often acknowledged that the server has an initial advantage. Indeed, the majority of points are won by the server, making the serve one of the most important elements in this sport. In this paper, we focus on the role of the serve advantage in winning a point as a function of the rally length. We propose a Bayesian isotonic logistic regression model for the probability of winning a point on serve. In particular, we decompose the logit of the probability of winning via a linear combination of B-spline basis functions, with athlete-specific basis function coefficients. Further, we ensure the serve advantage decreases with rally length by imposing constraints on the spline coefficients. We also consider the rally ability of each player, and study how the different types of court may affect a player’s rally ability. We apply our methodology to a Grand Slam singles matches dataset.

References

Abraham C, Khadraoui K (2015) Bayesian regression with B-splines under combinations of shape constraints and smoothness properties. Stat Neerlandica 69(2):150–170

Baio G, Blangiardo M (2010) Bayesian hierarchical model for the prediction of football results. J Appl Stat 37(2):253–264

Barnett T, Clarke SR (2005) Combining player statistics to predict outcomes of tennis matches. IMA J Manag Math 16:113–120

Bradley RA, Terry ME (1952) Rank analysis of incomplete block designs: the method of paired comparisons. Biometrika 39:324–345

Carpenter B, Gelman A, Hoffman M, Lee D, Goodrich B, Betancourt M, Brubaker M, Guo J, Li P, Riddell A (2017) Stan: a probabilistic programming language. J Stat Soft 76(1):1–32

Christensen R, Johnson W, Branscum A, Hanson TE (2010) Bayesian ideas and data analysis. CRC Press, Boca Raton

de Boor C (2001) A practical guide to splines. Springer, Berlin

Elphinstone C (1983) A target distribution model for nonparametric density estimation. Commun Stat Theory Methods 12(2):161–198

Geisser S, Eddy WF (1979) A predictive approach to model selection. J Am Stat Assoc 3:153–160

Gelfand AE, Dey DK (1994) Bayesian model choice: asymptotics and exact calculations. J R Stat Soc Ser B (Methodological) 56(3):501–514

Gelfand AE, Sahu SK (1999) Identifiability, improper priors, and Gibbs sampling for generalized linear models. J Am Stat Assoc 94(445):247–253

Gelman A, Goegebeur Y, Tuerlinckx F, Mechelen I (2000) Diagnostic checks for discrete data regression models using posterior predictive simulations. J R Stat Soc Ser C 49:247–268

Gelman A, Hwang J, Vehtari A (2014) Understanding predictive information criteria for Bayesian models. Stat Comput 24:997–1016

Gilsdorf KF, Sukhatme VA (2008) Testing Rosen’s sequential elimination tournament model: incentives and player performance in professional tennis. J Sport Econ 9:287–303

Glickman ME (1999) Parameter estimation in large dynamic paired comparison experiments. J R Stat Soc Ser C (Appl Stat) 48:377–394

Gomes RV, Coutts AJ, Viveiros L, Aoki MS (2011) Physiological demands of match-play in elite tennis: a case study. Eur J Sport Sci 11(2):105–109

Gorgi P, Koopman SJS, Lit R (2018) The analysis and forecasting of ATP tennis matches using a high-dimensional dynamic model. Tinbergen Institute Discussion Papers 18-009/III, Tinbergen Institute

Heinzmann D (2008) A filtered polynomial approach to density estimation. Comput Stat 23(3):343–360

Irons DJ, Buckley S, Paulden T (2014) Developing an improved tennis ranking system. J Quant Anal Sports 10:2

Klaassen FJ, Magnus JR (2003) Forecasting the winner of a tennis match. Eur J Oper Res 148:257–267

Kotze J, Mitchell SJ, Rothberg S (2000) The role of the racket in high-speed tennis serves. Sports Eng 3:67–84

Kovalchik S (2016) Searching for the GOAT of tennis win prediction. J Quant Anal Sports 12:127–138

Kovalchik S (2018a) deuce: resources for analysis of professional tennis data. R Pack Vers 1:2

Kovalchik S (2018b) The serve advantage in professional tennis (under Review)

Lees A (2003) Science and the major racket sports: a review. J Sports Sci 2:707–732

Lunn D, Jackson C, Best N, Thomas A, Spiegelhalter D (2012) The BUGS book: a practical introduction to Bayesian analysis. Texts in statistical science. Chapman & Hall/CRC, Boca Raton

Manderson AA, Cripps E, Murray K, Turlach BA (2017) Monotone polynomials using BUGS and Stan. Aust N Zeal J Stat 59(4):353–370

Murray K, Müller S, Turlach BA (2013) Revisiting fitting monotone polynomials to data. Comput Stat 28:1989–2005

Murray K, Müller S, Turlach BA (2016) Fast and flexible methods for monotone polynomial fitting. J Stat Comput Simul 86(15):2946–2966

Newton PK, Keller JB (2005) Probability of winning at tennis I. Theory and data. Stud Appl Math 114(3):241–269

O’Donoghue GP, Brown E (2008) The importance of service in grand slam singles tennis. Int J Perf Anal Sport 8(3):70–78

Orani V (2019) Bayesian isotonic logistic regression via constrained splines: an application to estimating the serve advantage in professional tennis. Master’s thesis, University of Torino

Plummer M, Best N, Cowles K, Vines K (2006) CODA: convergence diagnosis and output analysis for MCMC. R News 6(1):7–11

Plummer M, Stukalov A, Denwood MJ (2016) rjags: Bayesian Graphical Models Using MCMC

R Core Team (2013) R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria

Robert CP (1996) Intrinsic losses. Theory Decis 40:191–214

Smith S (2013) Effects of playing surface and gender on rally durations in singles grand slam tennis. PhD thesis, Cardiff Metropolitan University

Spiegelhalter DJ, Best NG, Carlin BP, van der Linde A (2002) Bayesian measures of model complexity and fit. J R Stat Soc 64:583–639

Starbuck C, Damm L, Clarke J, Carré M, Capel-Davis J, Miller S, Stiles V, Dixon S (2016) The influence of tennis court surfaces on player perceptions and biomechanical response. J Sports Sci 34(17):1627–1636

Watanabe S (2010) Asymptotic equivalence of Bayes cross validation and widely applicable information criterion in singular learning theory. J Mach Learn Res 11:3571–3594

Appendices

Appendix 1: Proof of Proposition 1

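Consider the restricted spline function

\[ \sum _{m=m_{L_0}-k}^{M} \beta _m\, b_{m,k}(s), \qquad s\in [L_0,U], \]

where k is the order of the B-splines. Following Formula (12) on page 116 and Formula (13) of de Boor (2001), we can compute its derivative as

\[ \frac{d}{ds}\sum _{m=m_{L_0}-k}^{M} \beta _m\, b_{m,k}(s) = (k-1)\sum _{m=m_{L_0}-k+1}^{M} \frac{\beta _m-\beta _{m-1}}{t_{m+k-1}-t_m}\, b_{m,k-1}(s) \le (k-1)\sup _{m\in \{m_{L_0}-k+1,\dots ,M\}}\left\{ \frac{\beta _m-\beta _{m-1}}{t_{m+k-1}-t_m} \right\} , \]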

where we used the fact that \(\sum _{m=m_{L_0}-k+1}^{M} b_{m,k-1}(s)=1\). The latter follows from Formula (37) on page 96 of de Boor (2001), and from the fact that \(b_{m,k-1}(s)=0\) for \(s\in [L_0,U]\) and \(m\notin \{m_{L_0}-k+1,\dots ,M\}\). Since the knot differences \(t_{m+k-1}-t_m\) are positive, it is straightforward to observe that the constant \(\sup _{m\in \{m_{L_0}-k+1,\dots ,M\}}\left\{ \frac{\beta _m-\beta _{m-1}}{t_{m+k-1}-t_m} \right\} \) is less than or equal to zero if and only if

\[ \beta _m \le \beta _{m-1} \qquad \text {for all } m\in \{m_{L_0}-k+1,\dots ,M\}. \]

The latter property is equivalent to requiring that the restricted control polygon \({\mathcal {C}}_{[L_0,U]}(s)\) is non-increasing on its support, i.e. for \(s\in [{\bar{t}}_{m_{L_0}-k},{\bar{t}}_M]\).
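The statement of the proof can be checked numerically. The sketch below is a minimal illustration, not the authors' implementation: it evaluates B-splines with the Cox-de Boor recursion and verifies that non-increasing coefficients yield a non-increasing spline, and that coefficients which are non-increasing only from a given index onwards yield a spline that is non-increasing on the corresponding restricted interval. The knot vector, the order `K = 4`, the value `L0 = 3.0` and both coefficient vectors are hypothetical, and indices are 0-based, unlike the 1-based notation of the text.

```python
def bspline_basis(m, k, t, s):
    """Value at s of the m-th B-spline of order k on knots t (Cox-de Boor recursion)."""
    if k == 1:
        return 1.0 if t[m] <= s < t[m + 1] else 0.0
    value = 0.0
    if t[m + k - 1] > t[m]:  # skip terms whose knot span has zero width
        value += (s - t[m]) / (t[m + k - 1] - t[m]) * bspline_basis(m, k - 1, t, s)
    if t[m + k] > t[m + 1]:
        value += (t[m + k] - s) / (t[m + k] - t[m + 1]) * bspline_basis(m + 1, k - 1, t, s)
    return value


def spline(beta, k, t, s):
    """Linear combination sum_m beta[m] * b_{m,k}(s)."""
    return sum(b * bspline_basis(m, k, t, s) for m, b in enumerate(beta))


def is_non_increasing(values, tol=1e-12):
    """True if no value exceeds its predecessor by more than tol."""
    return all(cur <= prev + tol for prev, cur in zip(values, values[1:]))


# Hypothetical setup: cubic B-splines (order 4) on [0, 15] with clamped knots.
K = 4
KNOTS = [0.0] * K + [3.0, 6.0, 9.0, 12.0] + [15.0] * K
GRID = [i / 10 for i in range(150)]  # s = 0.0, 0.1, ..., 14.9
L0 = 3.0

# Coefficients that are non-increasing everywhere.
beta_all = [2.0, 1.5, 1.5, 0.8, 0.1, -0.3, -0.3, -1.0]
# Coefficients that are non-increasing only from index 1 onwards.
beta_tail = [0.5, 2.0, 1.5, 0.8, 0.1, -0.3, -0.3, -1.0]

curve_all = [spline(beta_all, K, KNOTS, s) for s in GRID]
curve_tail = [spline(beta_tail, K, KNOTS, s) for s in GRID]
tail_on_restriction = [v for s, v in zip(GRID, curve_tail) if s >= L0]

print(is_non_increasing(curve_all))            # whole curve
print(is_non_increasing(curve_tail))           # fails near s = 0
print(is_non_increasing(tail_on_restriction))  # holds on [L0, 15)
```

With `beta_tail`, the only increasing pair of coefficients affects the spline on [0, 3) alone, so the constraint needs to be imposed only on the coefficients whose basis functions are active on the restricted interval.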

Appendix 2: Rally abilities on different types of courts

See Table 5

Appendix 3: Predictive information criteria

In this “Appendix” we provide additional details and equations for the predictive information criteria used in Sect. 4. The interested reader can refer to Gelman et al. (2014) for a more extensive review.

Let us begin with the Log Pseudo Marginal Likelihood (LPML) criterion (Geisser and Eddy 1979). Let \({M_m}\) be a particular model, and suppose data \({\textit{\textbf{y}}} = (y_1,\ldots , y_n)^\top \) arise independently given a vector of model parameters \(\varvec{\theta }_m\) so that \(p({\textit{\textbf{y}}}\vert \varvec{\theta }_m,{M_m}) = \prod _{i=1}^{n}{p(y_i|\varvec{\theta }_m,M_m)}\). To compare the performance of different models \(M_1, \ldots , M_M\) via Bayes factors, we need to compute \(p({\textit{\textbf{y}}}|{M_m})\) for the various models under consideration, but this density may be difficult to compute. Geisser and Eddy (1979) propose to replace \(p({\textit{\textbf{y}}}|{M_m})\) with the pseudomarginal likelihood:

\[ {\widehat{p}}({\textit{\textbf{y}}}|{M_m}) = \prod _{i=1}^{n} p(y_i|{\textit{\textbf{y}}}_{-i},{M_m}), \]

where \(p(y_i|{\textit{\textbf{y}}}_{-i},{M_m})\) is the i-th conditional predictive ordinate (\(\text {CPO}_i\)), that is, the predictive density of the i-th observation given all the other data, \({\textit{\textbf{y}}}_{-i}\). Let us focus on a particular model \(M_m\) and thus, for ease of notation, let us omit the conditioning on model \(M_m\) and denote \({\varvec{\theta }}_m = {\varvec{\theta }}\) hereafter. Christensen et al. (2010) show that the i-th CPO can be expressed as \(\text {CPO}_i^{-1}= \mathbb {E}\left[ \frac{1}{p(y_i \mid \varvec{\theta })} \Big \vert y_1,\ldots , y_n \right] \). By resorting to a Monte Carlo approximation, we can compute the i-th CPO directly from the MCMC output based on fitting the model to all the data (Gelfand and Dey 1994). Specifically, for each i:

\[ \widehat{\text {CPO}}_i = \left( \frac{1}{T}\sum _{t=1}^{T} \frac{1}{p\left( y_i|{\varvec{\theta }}^{(t)}\right) } \right) ^{-1}, \]

where T denotes the total number of iterations, and \(\left\{ {\varvec{\theta }}^{(t)}\right\} _{t=1}^T\) are samples drawn from the posterior distribution of the model parameters \((\varvec{\theta } \vert {y_1},\ldots , y_n)\). Geisser and Eddy (1979) summarise a model’s predictive performance via the LPML, given by

\[ \text {LPML} = \sum _{i=1}^{n} \log \left( \widehat{\text {CPO}}_i \right) . \]

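The best model is the one that maximises the LPML. Similarly, we can use the MCMC posterior samples to obtain an estimate of the expected log-pointwise posterior predictive density (\(\widehat{\text {elppd}}_{\text {waic}}\)):

\[ \widehat{\text {elppd}}_{\text {waic}} = \sum _{i=1}^{n} \log \left( \frac{1}{T}\sum _{t=1}^{T} p\left( y_i|{\varvec{\theta }}^{(t)}\right) \right) - \sum _{i=1}^{n} \text {Var}_{t=1}^T \left[ \log p\left( y_i|{\varvec{\theta }}^{(t)}\right) \right] , \tag{16} \]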

where \(p\left( y_i|{\varvec{\theta }}^{(t)}\right) \) is the posterior predictive distribution for individual i given model parameters \({\varvec{\theta }}^{(t)}\) and \(\text {Var}_{t=1}^T \left[ \log p\left( y_i|{\varvec{\theta }}^{(t)}\right) \right] \) represents the sample variance of the log posterior predictive distribution for each data point \(y_i\). The expected log predictive density is a common measure of a model’s predictive accuracy due to its relation to the Kullback-Leibler information measure (Robert 1996). Indeed, asymptotically, the model with the lowest Kullback-Leibler information (equivalently, the highest expected log predictive density) will have the highest posterior probability. Therefore, it can be used as a criterion to compare models in terms of their predictive performance. The first term in Eq. (16) is the computed log pointwise posterior predictive density on the observed data \(y_i\)’s, which is typically an over-estimate of the expected log pointwise predictive density for future data (Gelman et al. 2014). The second term in Eq. (16) can be thought of as a correction for the effective number of parameters to adjust for overfitting. The Watanabe–Akaike information criterion (WAIC) is then defined as:

\[ \text {WAIC} = -2\, \widehat{\text {elppd}}_{\text {waic}}. \tag{17} \]

The best model is the one that minimises the WAIC. We remark that Eq. (17) does not match the original definition for the WAIC in Watanabe (2010). Eq. (17) corresponds to the definition for the WAIC given in Gelman et al. (2014), who suggest scaling the criterion to put it on the deviance scale, thus making it comparable to the Deviance Information Criterion (DIC) (Spiegelhalter et al. 2002). The DIC is itself the sum of a measure of model fit and a penalty, \(p_{DIC}\), representing the effective number of parameters in the model:

\[ \text {DIC} = -2\log p\left( {\textit{\textbf{y}}}\mid {\widehat{{\varvec{\theta }}}}\right) + 2\, p_{DIC}, \]

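where \({\widehat{{\varvec{\theta }}}}\) is the posterior mean of \({\varvec{\theta }}\), \({\widehat{{\varvec{\theta }}}} = \mathbb {E}[{\varvec{\theta }}\mid {\textit{\textbf{y}}}]\), and

\[ p_{DIC} = 2\left( \log p\left( {\textit{\textbf{y}}}\mid {\widehat{{\varvec{\theta }}}}\right) - \mathbb {E}\left[ \log p\left( {\textit{\textbf{y}}}\mid {\varvec{\theta }}\right) \mid {\textit{\textbf{y}}}\right] \right) . \]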

The smallest DIC identifies the model with the best predictive performance, in the same spirit as Akaike’s criterion.
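The LPML and WAIC described above depend on the MCMC output only through the pointwise log-likelihoods \(\log p(y_i|{\varvec{\theta }}^{(t)})\). The following is a minimal sketch of how both could be computed from such values, assuming they are stored in a hypothetical nested list `loglik` with one row per retained draw and one column per observation. It follows the Monte Carlo estimators described in the text (harmonic mean for the CPO, sample variance for the WAIC penalty) and is not the authors' code.

```python
import math
from statistics import variance


def log_mean_exp(values):
    """Numerically stable log of the average of exp(values)."""
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values) / len(values))


def column(loglik, i):
    """Log-likelihood of observation i across all retained draws."""
    return [row[i] for row in loglik]


def lpml(loglik):
    """Sum over i of log CPO_i, where CPO_i is the harmonic mean of p(y_i | theta^(t))."""
    n = len(loglik[0])
    # log CPO_i = -log( mean_t exp(-loglik[t][i]) )
    return sum(-log_mean_exp([-v for v in column(loglik, i)]) for i in range(n))


def elppd_waic(loglik):
    """Log pointwise predictive density minus the sample-variance penalty."""
    n = len(loglik[0])
    lppd = sum(log_mean_exp(column(loglik, i)) for i in range(n))
    penalty = sum(variance(column(loglik, i)) for i in range(n))
    return lppd - penalty


def waic(loglik):
    """WAIC on the deviance scale, as in Gelman et al. (2014)."""
    return -2.0 * elppd_waic(loglik)


# Toy input (hypothetical): three draws, two observations.
toy = [
    [math.log(0.5), math.log(0.2)],
    [math.log(0.7), math.log(0.4)],
    [math.log(0.6), math.log(0.3)],
]
print(lpml(toy), waic(toy))
```

Working on the log scale through `log_mean_exp` avoids underflow when individual likelihood values are very small, which is common with large datasets.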

Finally, a popular measure of a model’s predictive accuracy (not based on the log predictive density) is the Root Mean Squared Error (RMSE). Denoting by \(n_s\) the total number of servers, the RMSE of the estimated posterior probability of winning on serve with respect to the observed frequencies is computed as:

Here \(p_i(x)\) is the observed frequency of wins on serve given rally length x for server i, and \({\widehat{p}}^{(t)}_i(x)\) is the estimated probability at iteration t, where \(\text {logit}\, {\widehat{p}}^{(t)}_i(x) = \sum _{m=1}^M \beta _{i,m}^{(t)} b_m(x)+ (\alpha _i^{(t)} - \alpha _j^{(t)})\).

Appendix 4: Convergence diagnostics and model checks

Valid inference from sequences of MCMC samples relies on the assumption that the samples are drawn from the true posterior distribution of the model parameters. Asymptotic theory guarantees the validity of this assumption as the number of iterations approaches infinity. A significant task is therefore to determine the minimum number of samples required to ensure a reasonable approximation to the target posterior density. After some tests and convergence diagnostics to identify the best settings for our model, a good balance between convergence of the chain and computation time was achieved by running our algorithm for 20,000 iterations, with a burn-in of 100 iterations and thinning every 20 iterations to reduce autocorrelation within the chain.

In our application, we are interested in estimating the conditional probability of winning the point on serve as a function of rally length. Thus, here we report convergence diagnostics for our quantity of interest in our final model (Sect. 4). In particular, at each iteration \(t = 1,\ldots , T\), we estimate the probability of winning on serve, \({\widehat{p}}_{i}(x)^{(t)}\), given a particular value of rally length \(x \in \{1,\ldots ,15\}\) for a selected player i as

\[ \text {logit}\, {\widehat{p}}_{i}(x)^{(t)} = \sum _{m=1}^{M} \beta _{i m}^{(t)}\, b_m(x) + \left( \alpha _i^{(t)} - {\bar{\alpha }}_j\right) , \]

where \(\{\beta _{i m}^{(t)}\}_{m=1}^M\) and \(\alpha _i^{(t)}\) are, respectively, the estimates at iteration t of the basis function coefficients and of the rally ability for player i, and \({\bar{\alpha }}_j\) is obtained by averaging all the \(\alpha _j^{(t)}\), with \(j\ne i\). Figure 11 shows traceplots and autocorrelation functions of \({\widehat{p}}_i(x)^{(t)}\) for randomly selected players at randomly selected rally lengths. We also report the effective sample size, which indicates the approximate number of independent simulation draws for our estimand of interest. It appears that our chain converged quickly and mixed efficiently.

Fig. 11 Traceplots and autocorrelation function (ACF) of the estimated probability of winning a point on serve for randomly selected players in the training dataset at randomly selected rally lengths. The solid red lines indicate the observed frequency of wins on serve for the corresponding athlete at the indicated rally length. The ACF plots also report the effective sample size (colour figure online)
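The burn-in, thinning and autocorrelation-based diagnostics discussed in this appendix can be sketched in a few lines. The example below is an illustration under stated assumptions: the function names are hypothetical, and the effective sample size uses a simple estimator that truncates the autocorrelation sum at the first non-positive lag, which is not necessarily the estimator behind the values reported in the figure.

```python
def keep_draws(chain, burn_in, step):
    """Drop the first burn_in draws, then keep every step-th draw."""
    return chain[burn_in::step]


def autocorrelation(x, lag):
    """Sample autocorrelation of the sequence x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    denom = sum((v - mean) ** 2 for v in x)
    if denom == 0.0:
        return 0.0  # constant chain: autocorrelation is undefined, report 0
    num = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
    return num / denom


def effective_sample_size(x):
    """n / (1 + 2 * sum of autocorrelations), truncated at the first non-positive lag."""
    n = len(x)
    tau = 1.0
    for lag in range(1, n):
        rho = autocorrelation(x, lag)
        if rho <= 0.0:
            break
        tau += 2.0 * rho
    return n / tau


# Settings quoted in the text: 20,000 iterations, burn-in of 100, thinning every 20.
iterations = list(range(20000))
retained = keep_draws(iterations, burn_in=100, step=20)
print(len(retained))
```

A slowly mixing chain has large positive autocorrelations, so its effective sample size is much smaller than the number of retained draws; thinning trades stored draws for lower autocorrelation between them.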

For a general assessment of model fit, we compare the discrete data with their predicted value by computing the realised residuals \(r = y - {\mathbb {E}}[y | x, \beta , \alpha ]\), as described in Gelman et al. (2000). For our model, the realised residuals for player i at rally length x are defined and computed at each iteration t as follows:

For each value of rally length, we then compute the mean residual for player i as \({\bar{r}}_{i}(x) = \frac{1}{T-B}\sum _{t = B + 1}^{T} r_{i}^{(t)}(x)\), where the mean is computed over the post burn-in iterations and B denotes the number of burn-in iterations. Figure 12 shows the mean residuals for each player in the training set. The mean realised residuals appear to be symmetric around zero and, as the value of rally length increases, we observe larger variance of the residuals. This result is not surprising: it is due to data sparsity at larger rally lengths (Fig. 2), which implies more uncertainty in the estimates. Consequently, this strengthens the need for an approach that imposes some regularity on the estimate of \(p_i(x)\). Our monotonicity constraint ensures that the estimated probability cannot change wildly at large rally lengths and, therefore, that the estimate is less affected by outlying observations.
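As a rough illustration of the residual check, the sketch below maps a linear predictor to a probability through the inverse logit and averages the residuals over the post burn-in draws. It assumes that the realised residual at iteration t is the observed frequency of wins minus the estimated probability, which is one plausible reading of \(r = y - {\mathbb {E}}[y | x, \beta , \alpha ]\); all function names and numbers are hypothetical.

```python
import math


def inv_logit(eta):
    """Numerically stable inverse of the logit function."""
    if eta >= 0.0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)
    return e / (1.0 + e)


def estimated_probability(beta, basis_values, alpha_server, alpha_receiver):
    """Inverse logit of sum_m beta_m * b_m(x) + (alpha_server - alpha_receiver)."""
    eta = sum(b * v for b, v in zip(beta, basis_values))
    return inv_logit(eta + (alpha_server - alpha_receiver))


def mean_residual(observed_frequency, probability_draws, burn_in):
    """Average of (observed - estimated) over the draws kept after burn-in.

    Assumption: the residual is the observed frequency minus the estimated probability.
    """
    kept = probability_draws[burn_in:]
    return sum(observed_frequency - p for p in kept) / len(kept)


# Hypothetical example: one player, one rally length, three draws, burn-in of one.
draws = [0.9, 0.5, 0.7]
print(mean_residual(0.6, draws, burn_in=1))
```

Dividing by the number of retained draws is what makes the quantity a mean rather than a sum, so that residuals are comparable across chains of different length.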

Appendix 5: Bayesian polynomial regression (BPR)

In this “Appendix”, we consider polynomial regression to model the probability of winning a point as a function of rally length. We assume

\[ Y_{i,j} \mid x \sim \text {Bernoulli}\left( p_{i,j}(x)\right) , \]

where \(p_{i,j}(x)\) is the probability that server i wins a point against receiver j at rally length x, i.e. \({\mathbb {P}}[Y_{i,j}=1\vert x]\). Analogously to the splines model in Sect. 3, we consider two components for the modelling of the logit of \(p_{i,j}(x)\), one describing the serve advantage and the second representing the rally ability of the players. Specifically, we model the logit of \(p_{i,j}(x)\) as follows:

\[ \text {logit}\, p_{i,j}(x) = f_i(x) + (\alpha _i - \alpha _j), \]

with

\[ f_i(x) = \sum _{m=0}^{d} \beta _{i,m}\, x^m, \]

for \(i= 1,\dots , n_s,\) \(j = 1,\dots , n_r\) and \(i \ne j\), where d is the degree of the polynomial, \(n_s\) is the total number of servers, and \(n_r\) the total number of receivers.

Fig. 13 Probability of winning a point on serve as a function of rally length for Andy Murray, where the serve advantage is modelled as a polynomial function of degree 3 (top left), degree 4 (top right), degree 5 (bottom left), and degree 6 (bottom right). Points represent the observed data, while the black lines are the posterior mean estimates of the probability of winning a point as a function of rally length obtained with these models. The blue dashed lines are the \(95\%\) credible intervals (colour figure online)

We fit four Bayesian polynomial regression models to the Grand Slam data (Sect. 2) by varying the degree of the polynomials. In particular, we considered \(d = 3, 4, 5, 6\), and we adopted the prior in Eq. (6) for the basis function coefficients \(\{\beta _m\}_{m=0}^d\) (no constraints) and the prior in Eq. (10) for the rally ability parameters. In Fig. 13 we represent the probability of winning a point as a function of rally length for Andy Murray, with the serve advantage modelled as a polynomial function with varying degrees. While the model fit is reasonable for small values of rally length, with all polynomial regressions capturing a decreasing trend, for intermediate or large values of rally length the estimated probability oscillates or even increases. The cubic polynomial model also captures the decreasing behaviour at the end, even though the estimate locally increases in the interval \(\left[ 7,10\right] \). Polynomial models of higher degrees appear to be affected by outliers, with the estimated probability of winning the point showing an implausible increasing behaviour for large x.
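The lack of monotonicity of an unconstrained polynomial can be illustrated with a short sketch. The cubic coefficients below are hypothetical and chosen only to reproduce the qualitative behaviour described above (a decrease at short rally lengths followed by an increase); they are not estimates from the Grand Slam data, and the function names are not taken from the authors' code.

```python
import math


def polynomial(coeffs, x):
    """Horner evaluation of coeffs[0] + coeffs[1]*x + ... + coeffs[d]*x**d."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def win_probability(coeffs, x, alpha_server=0.0, alpha_receiver=0.0):
    """Inverse logit of the polynomial serve advantage plus the rally ability difference."""
    eta = polynomial(coeffs, x) + (alpha_server - alpha_receiver)
    return 1.0 / (1.0 + math.exp(-eta))


def first_increase(probabilities, lengths):
    """First rally length at which the probability exceeds its previous value, if any."""
    for prev, cur, x in zip(probabilities, probabilities[1:], lengths[1:]):
        if cur > prev:
            return x
    return None


# Hypothetical cubic: 2 - 0.8 x + 0.1 x^2 - 0.003 x^3, evaluated at x = 1, ..., 15.
CUBIC = [2.0, -0.8, 0.1, -0.003]
LENGTHS = list(range(1, 16))
PROBS = [win_probability(CUBIC, x) for x in LENGTHS]

print(first_increase(PROBS, LENGTHS))
```

Nothing in an unconstrained polynomial prevents such a turning point inside the observed range of rally lengths, which is the behaviour the constrained spline model is designed to rule out.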

Appendix 6: Players ID for Figs. 8 and 9

In this “Appendix”, we report the legend associating each number in Figs. 8 and 9 to the corresponding player in the ATP and WTA tournaments, respectively.

| ID | ATP | WTA |
|---|---|---|
| 1 | Alexander Bublik | Alison Van Uytvanck |
| 2 | Andy Murray | Alize Cornet |
| 3 | Benjamin Becker | Angelique Kerber |
| 4 | Bernard Tomic | Arina Rodionova |
| 5 | Damir Dzumhur | Bethanie Mattek Sands |
| 6 | David Ferrer | Camila Giorgi |
| 7 | Ernesto Escobedo | Caroline Wozniacki |
| 8 | Ernests Gulbis | CoCo Vandeweghe |
| 9 | Evgeny Donskoy | Dominika Cibulkova |
| 10 | Fernando Verdasco | Ekaterina Alexandrova |
| 11 | Frances Tiafoe | Francesca Schiavone |
| 12 | Gilles Muller | Heather Watson |
| 13 | Guillermo Garcia Lopez | Jelena Jankovic |
| 14 | Ivo Karlovic | Johanna Konta |
| 15 | Janko Tipsarevic | Kirsten Flipkens |
| 16 | Jo Wilfried Tsonga | Kristina Kucova |
| 17 | Kei Nishikori | Louisa Chirico |
| 18 | Malek Jaziri | Madison Keys |
| 19 | Marco Trungelliti | Magda Linette |
| 20 | Marin Cilic | Mandy Minella |
| 21 | Mischa Zverev | Mirjana Lucic Baroni |
| 22 | Nick Kyrgios | Petra Martic |
| 23 | Novak Djokovic | Risa Ozaki |
| 24 | Pablo Carreno Busta | Saisai Zheng |
| 25 | Radek Stepanek | Serena Williams |
| 26 | Rafael Nadal | Simona Halep |
| 27 | Roger Federer | Sorana Cirstea |
| 28 | Rogerio Dutra Silva | Svetlana Kuznetsova |
| 29 | Ruben Bemelmans | Tsvetana Pironkova |
| 30 | Steve Johnson | Venus Williams |
| 31 | Teymuraz Gabashvili | Victoria Azarenka |
| 32 | Victor Estrella Burgos | Yaroslava Shvedova |
| 33 | – | Zarina Diyas |

Cite this article

Montagna, S., Orani, V. & Argiento, R. Bayesian isotonic logistic regression via constrained splines: an application to estimating the serve advantage in professional tennis. Stat Methods Appl 30, 573–604 (2021). https://doi.org/10.1007/s10260-020-00535-5
