Abstract

We develop a dynamic generalized conditional gradient method (DGCG) for dynamic inverse problems with optimal transport regularization. We consider the framework introduced in Bredies and Fanzon (ESAIM: M2AN 54:2351–2382, 2020), where the objective functional is comprised of a fidelity term, penalizing the pointwise in time discrepancy between the observation and the unknown in time-varying Hilbert spaces, and a regularizer keeping track of the dynamics, given by the Benamou–Brenier energy constrained via the homogeneous continuity equation. Employing the characterization of the extremal points of the Benamou–Brenier energy (Bredies et al. in Bull Lond Math Soc 53(5):1436–1452, 2021), we define the atoms of the problem as measures concentrated on absolutely continuous curves in the domain. We propose a dynamic generalization of a conditional gradient method that consists of iteratively adding suitably chosen atoms to the current sparse iterate, and subsequently optimizing the coefficients in the resulting linear combination. We prove that the method converges with a sublinear rate to a minimizer of the objective functional. Additionally, we propose heuristic strategies and acceleration steps that allow to implement the algorithm efficiently. Finally, we provide numerical examples that demonstrate the effectiveness of our algorithm and model in reconstructing heavily undersampled dynamic data, together with the presence of noise.

Similar content being viewed by others

1 Introduction

The aim of this paper is to develop a dynamic generalized condition gradient method (DGCG) to numerically compute solutions of ill-posed dynamic inverse problems regularized with optimal transport energies. The code is openly available on GitHub.Footnote 1

Lately, several approaches have been proposed to tackle dynamic inverse problems [42, 57, 58, 61, 67], all of which take advantage of redundancies in the data, allowing to stabilize reconstructions, both in the presence of noise or undersampling. A common challenge faced in such time-dependent approaches is understanding how to properly connect, or relate, the time-neighboring datapoints, in a way that the reconstructed object follows a presumed dynamic. In this paper, we address such issue by means of dynamic optimal transport. A wide range of applications can benefit from motion-aware approaches. In particular, the employment of dynamic reconstruction methods represents one of the latest key mathematical advances in medical imaging. For instance, magnetic resonance imaging (MRI) [48, 50, 56] and computed tomography (CT) [9, 21, 32] methods allow dynamic modalities in which the time-dependent data are further undersampled to reach high temporal sampling rates; these are required to resolve organ motion, such as the beating heart or the breathing lung. A more accurate reconstruction of the image, and of the underlying dynamics, would yield valuable diagnostic information.

1.1 Setting and Existing Approaches

Recently, it has been proposed to regularize dynamic inverse problems using dynamic optimal transport energies both in a balanced and unbalanced context [15, 59, 60], with the goal of efficiently reconstructing time-dependent Radon measures. Such regularization choice is natural: optimal transport energies incorporate information about time correlations present in the data, and are thus favoring a more stable reconstruction. Optimal transport theory was originally developed to find the most efficient way to move mass from a probability measure \(\rho _0\) to another one \(\rho _1\), with respect to a given cost [44, 54]. More recently, Benamou and Brenier [8] showed that the optimal transport map can be computed by solving

where \(t \mapsto \rho _t\) is a curve of probability measures on the closure of a bounded domain \(\Omega \subset \mathbb {R}^d\), \(v_t\) is a time-dependent vector field advecting the mass, and the continuity equation is intended in the sense of distributions with initial data \(\rho _0\) and final data \(\rho _1\). Notably, the quantity at (1), named Benamou–Brenier energy, admits an equivalent convex reformulation. Specifically, consider the space of bounded Borel measures \(\mathcal {M}:= \mathcal {M}(X) \times \mathcal {M}(X; \mathbb {R}^d)\), \(X:=(0,1)\times \overline{\Omega }\), and define the convex energy \(B: \mathcal {M}\rightarrow [0,+\infty ]\) by setting

if \(\rho \ge 0\) and \(m\ll \rho \), and \(B:=+\infty \) otherwise. Then, (1) is equivalent to minimizing B under the linear constraint \(\partial _t \rho + {{\,\mathrm{div}\,}}m = 0\). Such convex reformulation can be employed as a regularizer for dynamic inverse problems, where instead of fixing initial and final data, a fidelity term is added to measure the discrepancy between the unknown and the observation at each time instant, as proposed in [15]. There the authors consider the dynamic inverse problem of finding a curve of measures \(t \mapsto \rho _t\), with \(\rho _t \in \mathcal {M}(\overline{\Omega })\), such that

where \(f_t \in H_t\) is some given data, \(\{H_t\}\) is a family of Hilbert spaces and \(K_t^* : \mathcal {M}(\overline{\Omega }) \rightarrow H_t\) are linear continuous observation operators. The problem at (2) is then regularized via the minimization problem

where \(\Vert \rho \Vert _{\mathcal {M}(X)}\) denotes the total variation norm of the measure \(\rho :=\mathrm{d}t \otimes \rho _t\), and \(\alpha , \beta >0\) are regularization parameters. Notice that any curve \(t \mapsto \rho _t\) having finite Benamou–Brenier energy and satisfying the continuity equation constraint must have constant mass (see Lemma 9). As a consequence, the regularization (3) is especially suited to reconstruct motions where preservation of mass is expected. We point our that such formulation is remarkably flexible, as the measurements spaces and measurement operators are allowed to be very general. In this way, one could model, for example, undersampled acquisition strategies in medical imaging, particularly MRI [15].

The aim of this paper is to design a numerical algorithm to solve (3). The main difficulties arise due to the non-reflexivity of measure spaces. Even in the static case, solving the classical LASSO problem [62] in the space of bounded Borel measures (known as BLASSO [29]), i.e.,

for a Hilbert space Y and a linear continuous operator \(K : \mathcal {M}(\Omega ) \rightarrow Y\) has proven to be challenging. Usual strategies to tackle (4) numerically often rely on the discretization of the domain [26, 28, 63]; however, grid-based methods are known to be affected by theoretical and practical flaws such as high computational costs and the presence of mesh-dependent artifacts in the reconstruction. The mentioned drawbacks have motivated algorithms that do not rely on domain discretization, but optimize directly on the space of measures. One class of such algorithms, first introduced in [18] and subsequently developed in different directions [10, 30, 40, 52], are named generalized conditional gradient methods (GCG) or Frank–Wolfe-type algorithms. They can be regarded as the infinite-dimensional generalization of the classical Frank–Wolfe optimization algorithm [41] and of GCG in Banach spaces [7, 17, 19, 25, 33, 43]. The basic idea behind such algorithms consists in exploiting the structure of sparse solutions to (4), which are given by finite linear combinations of Dirac deltas supported on \(\Omega \). In this case, Dirac deltas represent the extremal points of the unit ball of the Radon norm regularizer. With this knowledge at hand, the GCG method iteratively minimizes a linearized version of (4); such minimum can be found in the set of extremal points. The iterate is then constructed by adding delta peaks at each iteration, and by subsequently optimizing the coefficients of the linear combination. GCG methods have proven to be successful at solving (4), and have been adapted to related problems in the context of, e.g., super-resolution [1, 49, 52, 59].

1.2 Outline of the Main Contributions

Inspired by GCG methods, the goal of this paper is to develop a dynamic generalized conditional gradient method (DGCG) aimed at solving the dynamic minimization problem at (3). Similarly to the classical GCG approaches, our DGCG algorithm is based on the structure of sparse solutions to (3), and it is Lagrangian in essence, since it does not require a discretization of the space domain. Lagrangian approaches have been proven useful for many different dynamic applications [55], often outperforming Eulerian approaches, where the discretization in space is necessary. Indeed, since Eulerian approaches are based on the optimization of challenging discrete assignment problems in space, Lagrangian approaches allow to lower the computational costs and are more suitable to reconstruct coalescence phenomena in the dynamics. Motivated by similar considerations, our approach aims to reduce the reconstruction artifacts and lower the computational cost when compared to grid-based methods designed to solve similar inverse problems to (3) (see [60]).

The fundamentals of our approach rest on recent results concerning sparsity for variational inverse problems: it has been empirically observed that the presence of a regularizer promotes the existence of sparse solutions, that is, minimizers that can be represented as a finite linear combination of simpler atoms. This effect is evident in reconstruction problems [34, 39, 64,65,66], as well as in variational problems in other applications, such as materials science [37, 38, 46, 53]. Existence of sparse solutions has been recently proven for a class of general functionals comprised of a fidelity term, mapping to a finite dimensional space, and a regularizer: in this case, atoms correspond to the extremal points of the unit ball of the regularizer [11, 14]. In the context of (3), the extremal points of the Benamou–Brenier energy have been recently characterized in [20]; this provides an operative notion of atoms that will be used throughout the paper. More precisely, for every absolutely continuous curve \(\gamma : [0,1] \rightarrow \overline{\Omega }\), we name as atom of the Benamou–Brenier energy the respective pair of measures \(\mu _\gamma := (\rho _\gamma , m_\gamma ) \in \mathcal {M}\) defined by

The notion of atom described above can be regarded as the dynamic counterpart of the Dirac deltas for the Radon norm regularizer. Curves of measures of the form (5) constitute the building blocks used in our DGCG method to generate at iteration step n the sparse iterate \(\mu ^n = (\rho ^n , m^n)\)

converging to a solution of (3), where \(c_j >0\).

The basic DGCG method proposed is comprised of two steps. The first one, called insertion step, operates as follows. Given a sparse iterate \(\mu ^n\) of the form (6), we obtain a descent direction for the energy at (3), by minimizing a version of (3) around \(\mu ^n\), in which the fidelity term is linearized. We show that in order to find such descent direction, it is sufficient to solve

where \(w^n_t := -K_t(K_t^* \rho ^n_t - f_t ) \in C(\overline{\Omega })\) is the dual variable of the problem at the iterate \(\mu ^n\), and \({{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\) denotes the set of extremal points of the unit ball of the regularizer in (3), namely the set \(C_{\alpha ,\beta } := \{(\rho ,m) \in \mathcal {M}: \partial _t \rho + {{\,\mathrm{div}\,}}m = 0,\ \ \beta B(\rho ,m) + \alpha \left\Vert \rho \right\Vert _{\mathcal {M}(X)}\le 1\}\). Formula (7) clarifies the connection between atoms and extremal points of \(C_{\alpha ,\beta }\), showing the fundamental role that the latter play in sparse optimization and GCG methods. In view of the characterization Theorem 1, proven in [20], the minimization problem (7) can be equivalently written in terms of atoms

where \(\mathrm{AC}^2\) denotes the set of absolutely continuous curves with values in \(\overline{\Omega }\) and weak derivative in \(L^2(\overline{\Omega };\mathbb {R}^d)\). The insertion step then consists in finding a curve \(\gamma ^*\) solving (8), and considering the respective atom \(\mu _{\gamma ^*}\). Afterward, naming \(\gamma _{N_n+1}:=\gamma ^*\), the coefficients optimization step proceeds at optimizing the conic combination \(\mu ^n + c_{N_{n}+1} \mu _{\gamma _{N_n+1}}\) with respect to (3), among all nonnegative coefficients \(c_j\). Denoting by \(c_1^*,\ldots , c_{N_n+1}^*\) a solution to such problem, the new iterate is defined by \(\mu ^{n+1}:=\sum _j c_j^*\mu _{\gamma _j}\). The two steps of inserting a new atom in the linear combination and optimizing the coefficients are the building blocks of our core algorithm, summarized in Algorithm 1. In Theorem 7, we prove that such algorithm has a sublinear convergence rate, similarly to the GCG method for the BLASSO problem [18], and the produced iterates \(\mu ^n\) converge in the weak* sense of measures to a solution of (3). The core algorithm and its analysis are the subject of Sect. 4.

From the computational point of view, we observe that the coefficients optimization step can be solved efficiently, as it is equivalent to a finite-dimensional quadratic program. Concerning the insertion step, however, even if the complexity of searching for a descent direction for (3) is reduced by only minimizing in the set of atoms, (8) remains a challenging nonlinear and non-local problem. For this reason, we shift our attention to computing stationary points for (8), relying on gradient descent strategies. Specifically, we prove that under additional assumptions on \(H_t\) and \(K_t^*\), problem (8) can be cast in the Hilbert space \(H^1([0,1];\mathbb {R}^d)\), and that the gradient descent algorithm, with appropriate stepsize, outputs stationary points to (8) (see Theorem 14). With this theoretical result at hand, in Sect. 5.1, we formulate a solution strategy for (8) based on multistart gradient descent methods, whose initializations are chosen according to heuristic principles. More precisely, the initial curves are chosen randomly in the regions where the dual variable \(w_t^n\) has larger value, and new starting curves are produced combining pieces of stationary curves for (8) by means of a procedure named crossover.

We complement the core algorithm with acceleration strategies. First, we add multiple atoms in the insertion step (multiple insertion step). Such new atoms can be easily obtained as a byproduct of the multistart gradient descent in the insertion step. Moreover, after optimizing the coefficients in the linear combination, we perform an additional gradient descent step with respect to (3), varying the curves in the iterate \(\mu ^{n+1}\), while keeping the weights fixed. Such procedure, named sliding step, will then be alternated with the coefficients optimization step for a fixed number of iterations, before searching for a new atom in the insertion step. These additional steps are described in Sect. 5.1. We mention that similar strategies were already employed for the BLASSO problem [18, 51]. They are then included in the basic core algorithm to obtain the complete DGCG method in Algorithm 2.

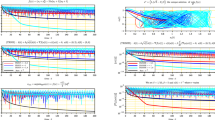

In Section 6, we provide numerical examples. As observation operators \(K^*_t\), we use time-dependent undersampled Fourier measurements, popular in imaging and medical imaging [16, 35], as well as in compressed sensing and super-resolution [1, 22, 23]. Such examples show the effectiveness of our DGCG method in reconstructing spatially sparse data, in the presence of simultaneously strong noise and severe temporal undersampling. Indeed, satisfactory results are obtained for ground-truths with \(20\%\) and \(60\%\) of added Gaussian noise, and heavy temporal undersampling in the sense that at each time t, the observation operator \(K^*_t\) is not able to distinguish sources along lines. With such ill-posed measurements, static reconstruction methods would not be able to accurately recover any ground-truth. In contrast, the time regularization chosen in (3) allows to resolve the dynamics by correlating the information of neighboring data points. As shown by the experiments presented, our DGCG algorithm produces accurate reconstructions of the ground-truth. In case of \(20\%\) and \(60\%\) of added noise, we note a surge of low intensity artifacts; nonetheless, the obtained reconstruction is close, in the sense of the measures, to the original ground-truth. Moreover, in all of the tried out examples, a linear convergence rate has been observed; this shows that the algorithm is, in practice, faster than the theoretical guarantees (Theorem 7), and a linear rate has to be expected in most of the cases.

We conclude the paper with Sect. 7, in which we discuss future perspectives and open questions, such as the possibility of improving the theoretical convergence rate for Algorithm 1, and alternative modeling choices.

1.3 Organization of the Paper

The paper is organized as follows. In Sect. 2, we summarize all the relevant notations and preliminary results regarding the Benamou–Brenier energy that are needed in the paper. In particular, we recall the characterization of the extremal points of the unit ball of the Benamou–Brenier energy obtained in [20]. In Sect. 3, we introduce the dynamic inverse problem under consideration and its regularization (3), following the approach of [15]. We further establish basic theory needed to setup the DGCG method. In Sect. 4, we provide the definition of atoms, and we give a high-level description of the DGCG method we propose in this paper, see Algorithm 1, proving its sublinear convergence. In Sect. 5, we describe the strategy employed to solve the insertion step problem (8), based on a multistart gradient descent method. Moreover, we outline the mentioned acceleration steps. Incorporating these procedures in the core algorithm, we obtain the complete DGCG method, see Algorithm 2. In Sect. 6, we show numerical results supporting the effectiveness of Algorithm 2. Finally, we present the reader some open questions in Sect. 7.

2 Preliminaries and Notation

In this section, we introduce the mathematical concepts and results we need to formulate our minimization problem and consequent algorithms. Throughout the paper, \(\Omega \subset \mathbb {R}^d\) denotes an open-bounded domain with \(d\in \mathbb {N}, d \ge 1\). We define the time-space cylinder \(X:= (0,1) \times \overline{\Omega }\). Following [3], given a metric space Y, we denote by \(\mathcal {M}(Y)\), \(\mathcal {M}(Y ; \mathbb {R}^d)\), \(\mathcal {M}^+(Y)\), the spaces of bounded Borel measures, bounded vector Borel measures, and positive measures, respectively. For a scalar measure \(\rho \), we denote by \(\left\Vert \rho \right\Vert _{\mathcal {M}(Y)}\) its total variation. In addition, we employ the notations \(\mathbb {R}_+\) and \(\mathbb {R}_{++}\) to refer to the nonnegative and positive real numbers, respectively.

2.1 Time-Dependent Measures

We say that \(\{\rho _t\}_{t \in [0,1]}\) is a Borel family of measures in \(\mathcal {M}(\overline{\Omega })\) if \(\rho _t \in \mathcal {M}(\overline{\Omega })\) for every \(t \in [0,1]\) and the map \(t \mapsto \int _{\overline{\Omega }} \varphi (x)\, d\rho _t(x)\) is Borel measurable for every function \(\varphi \in C(\overline{\Omega })\). Given a measure \(\rho \in \mathcal {M}(X)\), we say that \(\rho \) disintegrates with respect to time if there exists a Borel family of measures \(\{\rho _t\}_{t \in [0,1]}\) in \(\mathcal {M}(\overline{\Omega })\) such that

We denote such disintegration with the symbol \(\rho =\mathrm{d}t \otimes \rho _t\). Further, we say that a curve of measures \(t \in [0,1] \mapsto \rho _t \in \mathcal {M}(\overline{\Omega })\) is narrowly continuous if, for all \(\varphi \in C(\overline{\Omega })\), the map \(t \mapsto \int _{\overline{\Omega }} \varphi (x) \, d\rho _t(x)\) is continuous. The family of narrowly continuous curves will be denoted by \(C_\mathrm{w}\). We denote by \(C_\mathrm{w}^+\) the family of narrowly continuous curves with values in \(\mathcal {M}^+(\overline{\Omega })\).

2.2 Optimal Transport Regularizer

Introduce the space

We denote elements of \(\mathcal {M}\) by \(\mu =(\rho ,m)\) with \(\rho \in \mathcal {M}(X)\), \(m \in \mathcal {M}(X;\mathbb {R}^d)\), and by 0 the pair (0, 0). Define the set of pairs in \(\mathcal {M}\) satisfying the continuity equation as

where the solutions of the continuity equation are intended in a distributional sense, that is,

The above weak formulation includes no flux boundary conditions for the momentum m on \(\partial \Omega \), and no initial and final data for \(\rho \). Notice that by standard approximation arguments, it is equivalent to test (9) against maps in \(C^1_c ( X )\) (see [4, Remark 8.1.1]).

We now introduce the Benamou–Brenier energy, as originally done in [8]. To this end, define the convex, one-homogeneous and lower semicontinuous map \(\Psi :\mathbb {R}\times \mathbb {R}^d \rightarrow [0,\infty ]\) as

The Benamou–Brenier energy \(B :\mathcal {M}\rightarrow [0,\infty ]\) is defined by

where \(\lambda \in \mathcal {M}^+(X)\) is any measure satisfying \(\rho ,m \ll \lambda \). Note that (11) does not depend on the choice of \(\lambda \), as \(\Psi \) is one-homogeneous. Following [15], we introduce a coercive version of B: for fixed parameters \(\alpha ,\beta >0\) define the functional \(J_{\alpha , \beta } :\mathcal {M}\rightarrow [0,\infty ]\) as

As recently shown [15], \(J_{\alpha ,\beta }\) can be employed as a regularizer for dynamic inverse problems in spaces of measures.

2.3 Extremal Points of \(J_{\alpha ,\beta }\)

Define the convex unit ball

and the set of measures concentrated on \(\mathrm{AC}^2\) curves in \(\overline{\Omega }\)

where we denote by \(\mu _\gamma \) the pair \((\rho _\gamma ,m_\gamma )\) with

Here, \(\mathrm{AC}^2([0,1];\mathbb {R}^d)\) denotes the space of curves having metric derivative in \(L^2((0,1);\mathbb {R}^d)\). We can identify \(\mathrm{AC}^2([0,1];\mathbb {R}^d)\) with the Sobolev space \(H^1((0,1);\mathbb {R}^d)\) (see [4, Remark 1.1.3]). For brevity, we will denote by \(\mathrm{AC}^2:=\mathrm{AC}^2([0,1];\overline{\Omega })\) the set of curves \(\gamma \) belonging to \(\mathrm{AC}^2([0,1];\mathbb {R}^d)\) such that \(\gamma ([0,1]) \subset \overline{\Omega }\). For the extremal points of \(C_{\alpha ,\beta }\), we have the following characterization result, originally proven in [20, Theorem 6].

Theorem 1

Let \(\alpha ,\beta >0\) be fixed. Then, it holds \(\,{{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })=\{0\} \cup \mathcal {C}_{\alpha ,\beta }\).

We now show that \(J_{\alpha ,\beta }\) is linear on nonnegative combinations of points in \(\mathcal {C}_{\alpha ,\beta }\). Such property will be crucial for several computations in this paper, and the proof is postponed to Sect. 1

Lemma 2

Let \(N \in \mathbb {N}, N \ge 1\), \(c_j \in \mathbb {R}\) with \(c_j >0\), and \(\gamma _j \in \mathrm{AC}^2\) for \(j=1,\ldots ,N\). Let \(\mu _{\gamma _j}=(\rho _{\gamma _j},m_{\gamma _j}) \in \mathcal {C}_{\alpha ,\beta }\) be defined according to (14). Then, \(J_{\alpha ,\beta }(\mu _j)=1\) and

3 The Dynamic Inverse Problem and Conditional Gradient Method

In this section, we introduce the dynamic inverse problem we aim at solving, following the approach of [15]. Moreover, we set up the functional analytic framework necessary to state the numerical algorithm presented in Sect. 4. Recall that \(\Omega \subset \mathbb {R}^d\) is an open-bounded domain, \(d \in \mathbb {N}\), \(d \ge 1\). Let \(\{H_t\}_{t \in [0,1]}\) be a family of real Hilbert spaces, \(K_t^* :\mathcal {M}(\overline{\Omega }) \rightarrow H_t\) a family of linear continuous forward operators parametrized by \(t\in [0,1]\). Given some data \(f_t \in H_t\) for a.e. \(t \in (0,1)\), consider the dynamic inverse problem of finding a curve \(t \mapsto \rho _t \in \mathcal {M}(\overline{\Omega })\) such that

It has been recently proposed [15] to regularize the above problem with the optimal transport energy \(J_{\alpha ,\beta }\) defined in (12), where \(\alpha ,\beta >0\) are fixed parameters. This leads to consider the Tikhonov functional \(T_{\alpha ,\beta } :\mathcal {M}\rightarrow [0,+\infty ]\) with associated minimization problem

where the fidelity term \(\mathcal {F}:\mathcal {M}\rightarrow [0,+\infty ]\) is defined by

In the following, we will denote by f, \(K^*\rho \), Kf the maps \(t\mapsto f_t\), \(t \mapsto K_t^* \rho _t\), \(t \mapsto K_tf_t\), respectively, where \(f_t \in H_t\) for a.e. \(t \in (0,1)\) and \(\rho _t\) is the disintegration of \(\rho \) with respect to time. The fidelity term \(\mathcal {F}\) serves to track the discrepancy in (15) continuously in time. Following [15], this is achieved by introducing the Hilbert space of square integrable maps \(f :[0,1] \rightarrow H:=\cup _t H_t\), denoted by \(L^2_H\). The data f are then assumed to belong to \(L^2_H\). The assumptions under which this procedure can be made rigorous are briefly summarized in Sect. 3.1, see (H1)–(H3), (K1)–(K3). Under these assumptions, we have that \(\mathcal {F}\) is well-defined, see Remark 1. Such framework allows to model a variety of time-dependent acquisition strategies in dynamic imaging, as shown in Sect. 1. We are now ready to recall an existence result for (\(\mathcal {P}\)) (see [15, Theorem 4.4]).

Theorem 3

Assume (H1)–(H3), (K1)–(K3) as in Sect. 3.1. Let \(f \in L^2_H\) and \(\alpha , \beta >0\). Then, \(T_{\alpha ,\beta }\) is weak* lower semicontinuous on \(\mathcal {M}\), and there exists \(\mu ^* \in \mathcal {D}\) that solves the minimization problem (\(\mathcal {P}\)). Moreover, \(\rho ^*\) disintegrates in \(\rho ^*=\mathrm{d}t \otimes \rho _t^*\) with \((t \mapsto \rho ^*_t) \in C_\mathrm{w}^+\). If in addition \(K_t^*\) is injective for a.e. \(t \in (0,1)\), then \(\mu ^*\) is unique.

The proposed numerical approach for (\(\mathcal {P}\)) is based on the conditional gradient method, which consists in seeking minimizers of local linear approximations of the target functional. As standard practice [18, 19, 51], we first replace (\(\mathcal {P}\)) with a surrogate minimization problem, by defining the functional \(\tilde{T}_{\alpha ,\beta }\) as in (\(\tilde{\mathcal {P}}\)) below. The key step in a conditional gradient method is then to find the steepest descent direction for a linearized version of \(\tilde{T}_{\alpha ,\beta }\). In Sect. 3.3, we show that in order to find such direction, it is sufficient to solve the minimization problem

where \(C_{\alpha ,\beta }:=\{J_{\alpha ,\beta } \le 1\}\), \(w_t:=-K_t(K_t^* \tilde{\rho _t} - f_t) \in C(\overline{\Omega })\) is the dual variable associated with the current iterate \((\tilde{\rho },\tilde{m})\), and the linear term \(\mu \mapsto \langle \rho , w \rangle \) is defined in (23). Finally, in Sect. 3.4, we define the primal-dual gap G associated with (\(\mathcal {P}\)), and prove optimality conditions.

3.1 Functional Analytic Setting for Time-Continuous Fidelity Term

In order to define the continuous sampling fidelity term \(\mathcal {F}\) at (16), the authors of [15] introduce suitable assumptions on the measurement spaces \(H_t\) and on the forward operators \(K_t^* :\mathcal {M}(\overline{\Omega }) \rightarrow H_t\).

Assumption 1

For a.e. \(t \in (0,1)\), let \(H_t\) be a real Hilbert space with norm \(\left\Vert \cdot \right\Vert _{H_t}\) and scalar product \( \langle \cdot , \cdot \rangle _{H_t}\). Let D be a real Banach space with norm denoted by \(\left\Vert \cdot \right\Vert _{D}\). Assume that for a.e. \(t \in (0,1)\), there exists a linear continuous operator \(i_t : D \rightarrow H_t\) with the following properties:

-

(H1)

\(\left\Vert i_t\right\Vert \le C\) for some constant \(C>0\) not depending on t,

-

(H2)

\(i_t(D)\) is dense in \(H_t\),

-

(H3)

the map \(t \in [0,1] \mapsto \langle i_t\varphi , i_t \psi \rangle _{H_t} \in \mathbb {R}\) is Lebesgue measurable for every fixed \(\varphi ,\psi \in D\).

Setting \(H := \bigcup _{t\in [0,1]} H_t\), it is possible to define the space of square integrable maps \(f :[0,1] \rightarrow H\) such that \(f_t \in H_t\) for a.e. \(t \in (0,1)\), that is,

The strong measurability mentioned in (18) is an extension to time-dependent spaces of the classical notion of strong measurability for Bochner integrals. The common subset D is employed to construct step functions in a suitable way. An important property of strong measurability is that \(t \mapsto \langle f_t, g_t \rangle _{H_t}\) is Lebesgue measurable whenever f, g are strongly measurable [15, Remark 3.4]. Moreover, \(L^2_H\) is a Hilbert space with inner product and norm given by

respectively [15, Theorem 3.13]. We refer the interested reader to [15, Section 3] for more details on the construction of such spaces and their properties. We will now state the assumptions required for the measurement operators \(K_t^*\).

Assumption 2

For a.e. \(t \in (0,1)\), the linear continuous operators \(K_t^* : \mathcal {M}(\overline{\Omega }) \rightarrow H_t\) satisfy:

-

(K1)

\(K_t^*\) is weak*-to-weak continuous, with pre-adjoint denoted by \(K_t: H_t \rightarrow C(\overline{\Omega })\),

-

(K2)

\(\left\Vert K^*_t\right\Vert \le C\) for some constant \(C>0\) not depending on t,

-

(K3)

the map \(t \in [0,1] \mapsto K_t^* \rho \in H_t\) is strongly measurable for every fixed \(\rho \in \mathcal {M}(\overline{\Omega })\).

Remark 1

After assuming (H1)–(H3), (K1)–(K3), the fidelity term \(\mathcal {F}\) introduced at (16) is well-defined. Indeed, the conditions \(\rho =\mathrm{d}t \otimes \rho _t\) and \((t \mapsto \rho _t) \in C_\mathrm{w}\) imply that \(t \mapsto K_t^*\rho _t\) belongs to \(L^2_H\) by Lemma 12. We further remark that \(\mathcal {F}(\mu )\) is finite whenever \(J_{\alpha ,\beta }(\mu )<+\infty \), as in this case we have \(\rho =\mathrm{d}t \otimes \rho _t\) with \((t \mapsto \rho _t) \in C_\mathrm{w}^+\), by Lemmas 9, 10.

3.2 Surrogate Minimization Problem

Let \(f \in L^2_H\) and define the map \(\varphi :\mathbb {R}_+\rightarrow \mathbb {R}_{+}\)

where we set \(M_0 := T_{\alpha ,\beta }(0)\). Notice that by (A1), we have \(J_{\alpha ,\beta }(0)=0\), so that

highlighting the dependence of \(\varphi \) on f. Recalling the definition of \(\mathcal {F}\) at (16), define the surrogate minimization problem

Notice that (\(\mathcal {P}\)) and (\(\tilde{\mathcal {P}}\)) share the same set of minimizers, and they are thus equivalent. This is readily seen after noting that solutions to (\(\mathcal {P}\)) and (\(\tilde{\mathcal {P}}\)) belong to the set \(\{\mu \in \mathcal {M}:J_{\alpha ,\beta }(\mu ) \le M_0\}\), thanks to the estimate \(t \le \varphi (t)\), and that \(T_{\alpha ,\beta }\) and \(\tilde{T}_{\alpha ,\beta }\) coincide on the said set.

We remark that the surrogate minimization problem (\(\tilde{\mathcal {P}}\)) is a technical modification of (\(\mathcal {P}\)) introduced just to ensure that the partially linearized problem defined in the following section is coercive.

3.3 Linearized Problem

Fix some data \(f \in L^2_H\) and a curve \((t \mapsto \tilde{\rho }_t) \in C_\mathrm{w}\). We define the associated dual variable \(t \mapsto w_t\) by

and the map \(\mu \in \mathcal {M}\mapsto \langle \rho , w\rangle \in \mathbb {R}\cup \{\pm \infty \}\) as

Remark 2

The above map is well-defined; indeed, assuming that \((t \mapsto \rho _t) \in C_\mathrm{w}\), we have \((t \mapsto K_t^*\rho _t ) \in L^2_H\) by Lemma 12. Similarly, also \((t \mapsto K_t^*\tilde{\rho }_t) \in L^2_H\). Thus, recalling (K1), we infer

which is well-defined and finite, being a scalar product in the Hilbert space \(L^2_H\) (see (19)). Moreover if \(J_{\alpha ,\beta }(\mu )<+\infty \), then \( \langle \rho , w \rangle \) is finite, since \(\rho =\mathrm{d}t \otimes \rho _t\) for \((t \mapsto \rho _t) \in C_\mathrm{w}^+\), by Lemmas 9, 10.

Let \(\varphi \) and \(M_0\) be as in (20)-(21). We consider the following linearized version of (\(\tilde{\mathcal {P}}\))

which is well-posed by Theorem 13.

The objective of this section is to prove the existence of a solution to (25) belonging, up to a multiplicative constant, to the extremal points of the sublevel set \(C_{\alpha ,\beta }:=\{J_{\alpha ,\beta } \le 1\}\).

To this end, consider the problem

In the following proposition, we prove that (26) admits a minimizer \(\mu ^* \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\). Moreover, we show that a suitably rescaled version of \(\mu ^*\) solves (25).

Proposition 4

Assume (H1)–(H3), (K1)–(K3) as in Sect. 3.1. Let \(f \in L^2_H\), \(\alpha , \beta >0\). Then, there exists a solution \(\mu ^* \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\) to (26). Moreover, \(M \mu ^*\) is a minimizer for (25), where

The above statement is reminiscent of the classical Bauer Maximum Principle [2, Theorem 7.69]. In our case, however, there is no clear topology that makes the set \(C_{\alpha ,\beta }\) compact and the linearized map defined in (23) continuous (or upper semicontinuous). Therefore, an ad hoc proof is required.

Proof

Let \( \hat{\mu }\in C_{\alpha ,\beta }\) be a solution to (26), which exists thanks to Theorem 13 with the choice \(\varphi (t):=\upchi _{(-\infty ,1]}(t)\). Consider the set \(S := \left\{ \mu \in C_{\alpha ,\beta } : \langle \rho ,w\rangle =\langle \hat{\rho },w\rangle \right\} \) of all solutions to (26). Note that S is bounded with respect to the total variation on \(\mathcal {M}\), due to (A2) and definition of \(C_{\alpha ,\beta }\). In particular, the weak* topology of \(\mathcal {M}\) is metrizable in S. We claim that S is compact in the same topology. Indeed, given a sequence \(\{\mu ^n\}\) in S we have by definition that

Therefore, (28) and Lemma 11 imply that, up to subsequences, \(\mu ^n\) converges to some \(\mu \in \mathcal {M}\) in the sense of (A3). By (28) and by the weak* sequential lower semicontinuity of \(J_{\alpha ,\beta }\) (Lemma 11), we infer \(\mu \in C_{\alpha ,\beta }\). Moreover by (28), (24) and Lemma 12, we also conclude that \(\mu \in S\), hence proving compactness. Also notice that S is convex due to the convexity of \(J_{\alpha ,\beta }\) (Lemma 11) and linearity of the constraint. Since \(S \ne \emptyset \), by Krein–Milman’s theorem, we have that \({{\,\mathrm{Ext}\,}}(S) \ne \emptyset \). Let \(\mu ^* \in {{\,\mathrm{Ext}\,}}(S)\). If we show that \(\mu ^* \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\), the thesis is achieved by definition of S. Hence, assume that \(\mu ^*\) can be decomposed as

with \(\mu ^j =(\rho ^j,m^j)\in C_{\alpha ,\beta }\) and \(\lambda \in (0,1)\). Assume that \(\mu ^1\) belongs to \(C_{\alpha ,\beta } \smallsetminus S\). By (29) and the minimality of the points in S for (26), we infer \(-\langle \hat{\rho },w\rangle <-\langle \rho ^*, w\rangle \), which is a contradiction since \(\mu ^* \in S\). Therefore, \(\mu ^1 \in S\). Similarly also \(\mu ^2 \in S\). Since \(\mu ^* \in {{\,\mathrm{Ext}\,}}(S)\), from (29), we infer \(\mu ^* = \mu ^1=\mu ^2\), showing that \(\mu ^* \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\).

Assume now that \(\mu ^* \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\) minimizes in (26). If \(\mu ^*=0\), it is straightforward to check that 0 minimizes in (25). Hence, assume \(\mu ^* \ne 0\), so that \(J_{\alpha ,\beta }(\mu ^*)>0\) by (A2). Since the functional at (26) is linear and \(\mu ^*\) is a minimizer, we can scale by \(J_{\alpha ,\beta }(\mu ^*)\) and exploit the one-homogeneity of \(J_{\alpha ,\beta }\) to obtain \(J_{\alpha ,\beta }(\mu ^*)=1\). For every \(\mu =(\rho ,m) \in \mathcal {M}\) such that \(J_{\alpha ,\beta }(\mu )<+\infty \), one has

since \(\mu ^*\) is a minimizer, \(J_{\alpha ,\beta }\) is nonnegative and one-homogeneous, and since \(J_{\alpha ,\beta }(\mu )=0\) if and only if \(\mu =0\) by Lemma 10. Again one-homogeneity implies

It is immediate to check that M defined in (27) is a minimizer for the right-hand side problem in (31).

Hence from (30)–(31), one-homogeneity of \(J_{\alpha ,\beta }\) and the fact that \(J_{\alpha ,\beta }(\mu ^*) = 1\), we conclude that \(M \mu ^*\) is a minimizer for (25). \(\square \)

3.4 The Primal-Dual Gap

In this section, we introduce the primal-dual gap associated with (\(\mathcal {P}\)).

Definition 1

The primal-dual gap is defined as the map \(G :\mathcal {M}\rightarrow [0,+\infty ]\) such that

for \(\mu \in \mathcal {M}\). Here \(w_t := -K_t(K_t^* \rho _t - f_t )\), the product \(\langle \cdot ,\cdot \rangle \) is defined in (23), the map \(\varphi \) at (20), and \( \hat{\mu }\in \mathcal {M}\) is a solution to (25).

Notice that G is well-defined. Indeed, assume that \(\mu \in \mathcal {M}\) is such that \(J_{\alpha ,\beta }(\mu )<+\infty \) and let \( \hat{\mu }\in \mathcal {M}\) be a solution to (25). In particular, \(J_{\alpha ,\beta }(\hat{\mu })<+\infty \) by Theorem 13. Therefore, the scalar product in (32) is finite, see Remark 2. The purpose of G becomes clear in its relationship with the functional distance associated with \(T_{\alpha ,\beta }\), which is defined by

for all \(\mu \in \mathcal {M}\). Such relationship is described in the following lemma. We remark that a similar result is standard in the context of Frank–Wolfe-type algorithms and generalized conditional gradient methods (see e.g., [18, Lemma 5.5] and [43, Section 2]). Due to the specificity of our dynamic problem and for sake of completeness, we present it in our setting as well.

Lemma 5

Let \(\mu \in \mathcal {M}\) be such that \(J_{\alpha ,\beta }(\mu )<+\infty \). Then,

Moreover, \(\mu ^*\) solves (\(\mathcal {P}\)) if and only if \(G(\mu ^*)=0\).

Proof

Let w and \(\hat{\rho }\) be as in (32). By using the Hilbert structure of \(L^2_H\), for any \(\tilde{\mu }\) such that \(J_{\alpha ,\beta }(\tilde{\mu })<+\infty \), we have, by the polarization identity,

Let \(\mu ^*\) be a minimizer for \(T_{\alpha ,\beta }\), which exists by Theorem 3. Since \(J_{\alpha ,\beta }(\mu ^*) \le T_{\alpha ,\beta }(\mu ^*) \le T_{\alpha ,\beta }(0)=M_0\), by definition of \(\varphi \), we have \(\varphi (J_{\alpha ,\beta }(\mu ^*)) = J_{\alpha ,\beta }(\mu ^*)\). Using (35) and the definition of G in (32), where \(\hat{\mu }\) is chosen to be a solution to (25), we obtain

proving (34). If \(G(\mu ^*)=0\), then \(\mu ^*\) minimizes in (\(\mathcal {P}\)) by (34). Conversely, assume that \(\mu ^*\) is a solution of (\(\mathcal {P}\)) and denote by \(w^*_t:=-K_t(K_t^*\rho _t^* - f_t)\) the associated dual variable. Let \(\mu \) be arbitrary and such that \(J_{\alpha ,\beta }(\mu )<+\infty \). Let \(s \in [0,1]\) and set \(\mu ^s:=\mu ^*+s(\mu -\mu ^*)\). By convexity of \(J_{\alpha ,\beta }\) (see Lemma 11), we have that \(J_{\alpha ,\beta }(\mu ^s)<+\infty \). As \(\mu ^*\) is optimal in (\(\mathcal {P}\)), we infer

where we used convexity of \(J_{\alpha ,\beta }\), and the identity at (35) with respect to \(w^*, \rho ^*\) and \(\rho ^s\). Dividing the above inequality by s and letting \(s \rightarrow 0\) yields

which holds for all \(\mu \) with \( J_{\alpha ,\beta }(\mu )<+\infty \). Now notice that \(J_{\alpha ,\beta }(\mu ^*) \le T_{\alpha ,\beta }(\mu ^*) \le M_0\), since \(\mu ^*\) solves (\(\mathcal {P}\)). Therefore, \(\varphi (J_{\alpha ,\beta }(\mu ^*))=J_{\alpha ,\beta }(\mu ^*)\). Moreover, \(t \le \varphi (t)\) for all \(t \ge 0\). As a consequence of (36), we then infer

proving that \(\mu ^*\) minimizes in (25) with respect to \(w^*\). Therefore, by definition, \(G(\mu ^*)=0\). \(\square \)

4 The Algorithm: Theoretical Analysis

In this section, we give a theoretical description of the dynamic generalized conditional gradient algorithm anticipated in the introduction, which we call core algorithm. The proposed algorithm aims at finding minimizers to \(T_{\alpha ,\beta }\) as defined in (\(\mathcal {P}\)), for some fixed data \(f \in L^2_H\) and parameters \(\alpha ,\beta >0\). It is comprised of an insertion step, where one seeks a minimizer to (26) among the extremal points of the set \(C_{\alpha ,\beta }:=\{J_{\alpha ,\beta }\le 1\}\), and of a coefficients optimization step, which will yield a finite dimensional quadratic program. As a result, each iterate will be a finite linear combination, with nonnegative coefficients, of points in \({{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\). We remind the reader that \({{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })= \{0\} \cup \mathcal {C}_{\alpha ,\beta }\) in view of Theorem 1, where \(\mathcal {C}_{\alpha ,\beta }\) is defined at (13). From the definition of \(\mathcal {C}_{\alpha ,\beta }\), we see that except for the zero element, the extremal points are in 1-on-1 correspondence with the space of curves \(\mathrm{AC}^2:=\mathrm{AC}^2([0,1]; \overline{\Omega })\). This observation motivates us to define the atoms of our problem.

Definition 2

(Atoms) We denote by \(\mathrm{{AC}}_{\infty }^2\) the one-point extension of the set \(\mathrm{AC}^2\), where we include a point denoted by \(\gamma _\infty \). For any \(\gamma \in \mathrm{AC}^2\), we name as atom the respective extremal point \(\mu _\gamma =(\rho _\gamma , m_\gamma ) \in \mathcal {M}\) defined according to (14).

For \(\gamma _{\infty }\), the corresponding atom is defined by \(\mu _{\gamma _\infty }:=(0,0)\). We call sparse any measure \(\mu \in \mathcal {M}\) such that

for some \(N \in \mathbb {N}\), \(c_j > 0\) and \(\gamma _j \in \mathrm{AC}^2\), with \(\gamma _i \ne \gamma _j\) for \(i \ne j\).

Note that \(\gamma _\infty \) can be regarded as the infinite length curve: indeed if \(\{\gamma ^n\}\) in \(\mathrm{AC}^2\) is a sequence of curves with diverging length, that is, \(\int _0^1 \left|\dot{\gamma }^n\right| \,\mathrm{d}t \rightarrow \infty \) as \(n \rightarrow \infty \), then \(\rho _{\gamma ^n} {\mathop {\rightharpoonup }\limits ^{*}}\rho _{\gamma _\infty }\), since \(a_{\gamma ^n} \rightarrow 0\) by Hölder’s inequality.

Additionally, it is convenient to introduce the following map associating vectors of curves and coefficients with the corresponding sparse measure:

where \(N \in \mathbb {N}\) is fixed and \(\varvec{c}:= (c_1, \ldots , c_{N})\) with \(c_j > 0\), \(\varvec{\gamma } := (\gamma _1, \ldots , \gamma _{N})\), with \(\gamma _j \in \mathrm{AC}^2\).

Remark 3

The decomposition in extremal points of a given sparse measure might not be unique, that is, the map at (38) is not injective. For example, let \(N:=2\), \(\Omega :=(0,1)^2\) and

Note that injectivity fails for \(((\gamma _1,\gamma _2),(1,1))\) and \(((\tilde{\gamma }_1,\tilde{\gamma }_2),(1,1))\), given that they map to the same measure \(\mu ^*\), but \(\gamma _1\) and \(\gamma _2\) cross at \(t=1/2\), while \(\tilde{\gamma }_1\) and \(\tilde{\gamma }_2\) rebound. This observation is relevant for the algorithms presented, seeing that they operate in terms of extremal points: if for example, \(\mu ^*\) was the unique solution to (\(\mathcal {P}\)) for some data f, due to the lack of unique sparse representation for \(\mu ^*\), the numerical reconstruction could favor the representation having the least energy in terms of the regularizer \(J_{\alpha ,\beta }\). This is not surprising, since our method aims at reconstructing sparse measures, rather than their extremal points. A numerical example displaying the behavior of our algorithm on crossings, such as the case of \(\mu ^*\), is given in Sect. 6.2.3.

The rest of the section is organized as follows. In Sect. 4.1, we present the core algorithm, describing its basic steps and summarizing it in Algorithm 1. In Sect. 4.2, we discuss the equivalence of the coefficients optimization step to a quadratic program, while in Sect. 4.3, we show sublinear convergence of Algorithm 1 in terms of the residual defined at (33). In Sect. 4.4, we detail on a theoretical stopping criterion for our algorithm. To conclude, in Sect. 4.5, we give a description of how to alter Algorithm 1 in case the fidelity term \(\mathcal {F}\) at (\(\mathcal {P}\)) is replaced by a time-discrete version. All the results presented in this section and in the above will hold also for this particular case, with minor modifications.

4.1 Core Algorithm

The core algorithm consists of two steps. In the first one, named the insertion step, an atom is added to the current iterate, this atom being the minimizer of the linearized problem defined at (26). In the second step, named the coefficients optimization step, the atoms are fixed and their associated weights are optimized to minimize the target functional \(T_{\alpha ,\beta }\) defined in (\(\mathcal {P}\)). In what follows, \(f \in L^2_H\) is a given datum and \(\alpha ,\beta >0\) are fixed parameters.

4.1.1 Iterates

We initialize the algorithm to the zero atom \(\mu ^0 := 0\). The n-th iteration \(\mu ^n\) is a sparse element of \(\mathcal {M}\) according to (37), that is,

where \(N_n \in \mathbb {N}\cup \{0\}\), \(\gamma _j^n \in \mathrm{AC}^2\), \(c_j^n >0\) and \(\gamma _i \ne \gamma _j\) if \(i \ne j\). Notice that \(N_n\) is counting the number of atoms present at the n-th iteration, and is not necessarily equal to n, since the optimization step could discard atoms by setting their associated weights to zero. In practice, Algorithm 1 operates in terms of curves and weights. That is, the n-th iteration outputs pairs \((\gamma _j,c_j)\) with \(\gamma _j \in \mathrm{AC}^2\), \(c_j >0\): the iterate at (39) can be then constructed via the map (38).

4.1.2 Insertion Step

Assume \(\mu ^n\) is the current iterate. Define the dual variable associated with \(\mu ^n\) as in (22), that is,

With it, consider the minimization problem of the form (17), that is,

where the term \( \langle \cdot , \cdot \rangle \) is defined in (23). We recall that (41) admits solution by Proposition 4. Thanks to the characterization \({{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })=\{0\}\cup \mathcal {C}_{\alpha ,\beta }\) provided by Theorem 1, problem (41) can be cast on the space \(\mathrm{{AC}}_{\infty }^2\). Indeed, given \(\mu \in \mathcal {C}_{\alpha ,\beta }\), following the notations at (13)–(14), we have that \(\mu =\mu _\gamma \) for some \(\gamma \in \mathrm{AC}^2\). The curve \(t \mapsto \delta _{\gamma (t)}\) belongs to \(C_\mathrm{w}^+\), and hence \( \langle \rho _\gamma , w \rangle \) is finite (see Remark 2). Thus, by definition, we have

showing that (41) is equivalent to

The insertion step consists in finding a curve \(\gamma ^* \in \mathrm{{AC}}_{\infty }^2\) that solves (43). To such curve, we associate a new atom \(\mu _{\gamma ^*}\) via Definition 2. Note that \(\gamma ^*\) depends on the current iterate \(\mu ^n\), as well as on the datum f and parameters \(\alpha ,\beta \): however, in order to simplify notations, we omit such dependencies. After, we have a stopping condition:

-

if \(\langle \rho _{\gamma ^*}, w^n\rangle \le 1\), then \(\mu ^n\) is solution to (\(\mathcal {P}\)). The algorithm outputs \(\mu ^n\) and stops,

-

if \(\langle \rho _{\gamma ^*}, w^n\rangle > 1\), then \(\mu ^n\) is not a solution to (\(\mathcal {P}\)) and \(\gamma ^* \in \mathrm{AC}^2\). The found atom \(\mu _{\gamma ^*}\) is inserted in the n-th iterate \(\mu ^n\) and the algorithm continues.

The optimality statements in the above stopping condition correspond to positivity conditions on the subgradient of \(T_{\alpha ,\beta }\) and are rigorously proven in Sect. 4.4. Moreover, the mentioned stopping condition can be made quantitative as discussed in Remark 7.

Remark 4

In this section, we will always assume the availability of an exact solution \(\gamma ^*\) to (43). In particular, this allows to obtain a sublinear convergence rate for the core algorithm (Theorem 7), and make the stopping condition rigorous. In practice, however, obtaining \(\gamma ^*\) is not always possible, due to the nonlinearity and non-locality of the functional at (43). For this reason, in Sect. 5.1, we propose a strategy aimed at obtaining stationary points of (43). Based on such strategy, a relaxed version of the insertion step is proposed for Algorithm 2, which we employ for the numerical simulations of Sect. 6.

4.1.3 Coefficients Optimization Step

This step is realized after the stopping condition is checked, with the condition \(\langle \rho _{\gamma ^*}, w^n\rangle > 1\) being satisfied. In particular, as observed above, in this case \(\gamma ^* \in \mathrm{AC}^2\). We then set \(\gamma _{N_n+1}^n := \gamma ^*\) and consider the coefficients optimization problem

where \(\mu _{\gamma _j^n}\) for \(j=1,\ldots ,N_n\) are the atoms present in the n-th iterate \(\mu ^n\). If \(c \in \mathbb {R}_{+}^{N_n+1}\) is a solution to the above problem, the next iterate is defined by

thus discarding the curves that do not contribute to (44).

Remark 5

Problem (44) is equivalent to a quadratic program of the form

where \(\Gamma \in \mathbb {R}^{(N_n+1) \times (N_n+1)}\) is a positive-semidefinite and symmetric matrix and \(b \in \mathbb {R}^{N_n+1}\), as proven in Proposition 6.

Therefore, throughout the paper, we will always assume the availability of an exact solution to (44). In practice, we solved (44) by means of the free Python software package CVXOPT [5, 6].

4.1.4 Algorithm Summary

As discussed in Sect. 4.1.1, the iterates of Algorithm 1 are pairs of curves and weights \((\gamma _j,c_j)\) for \(\gamma \in \mathrm{AC}^2\), \(c_j>0\) and \(j=1, \ldots , N_n\). In the pseudo-code, we denote such iterates with the tuples \(\varvec{\gamma } = (\gamma _1, \ldots , \gamma _{N_n})\) and \(\varvec{c} = (c_1, \ldots , c_{N_n})\). Note that such tuples vary in size at each iteration, and the initial iterate of the algorithm, that is, the zero atom, corresponds to the empty tuples \(\varvec{\gamma } = ()\) and \(\varvec{c} = ()\). We denote the number of elements contained in a tuple \(\varvec{\gamma }\) with the symbol \(\left|\varvec{\gamma }\right|\). Via the map (38), the iterates \((\varvec{\gamma },\varvec{c})\) define a sequence of sparse measures \(\{\mu ^n\}\) of the form (39). The generated sequence \(\{\mu ^n\}\) weakly* converges (up to subsequences) to a minimizer of \(T_{\alpha ,\beta }\) for the datum \(f \in L^2_H\), as shown in Theorem 7. Notice that the assignments at lines 4 and 9 are meant to choose one element in the respective argmin set. The function \(\texttt {delete\_zero\_weighted}( \varvec{\gamma }, \varvec{c}) \) at line 11 in Algorithm 1 is designed to input a tuple \((\varvec{\gamma }, \varvec{c})\) and output another tuple where the curves \(\gamma _j\) and corresponding weights \(c_j\) are deleted if \(c_j = 0\).

4.2 Quadratic Optimization

We prove the statement in Remark 5. To be more precise, assume (H1)–(H3), (K1)–(K3) from Sect. 3.1 and let \(f \in L^2_H\), \(\alpha ,\beta >0\) be given. Fix \(N \in \mathbb {N}\), \(\gamma _1,\ldots ,\gamma _N \in \mathrm{AC}^2\) with \(\gamma _i \ne \gamma _j\) for all \(i\ne j\), and consider the coefficients optimization problem

where \(\mu _{\gamma _j}\) is defined according to (14). For (47), the following holds.

Proposition 6

Problem (47) is equivalent to

where \(\Gamma =(\Gamma _{i,j}) \in \mathbb {R}^{N \times N}\) is a positive semi-definite symmetric matrix and \(b=(b_i) \in \mathbb {R}^N\), with

Proof

As \(\gamma _j\) is continuous, the curve \(t \mapsto \delta _{\gamma _j(t)}\) belongs to \(C_\mathrm{w}^+\). Hence, the map \(t \mapsto K_t^* \delta _{\gamma _j(t)}\) belongs to \(L^2_H\) by Lemma 12 and the quantities at (49) are well-defined. Thanks to definition of \(T_{\alpha ,\beta }\) and Lemma 2, we immediately see that

where \(M_0\ge 0\) is defined at (21). This shows that (47) and (48) are equivalent. The rest of the statement follows since \(\Gamma \) is the Gramian with respect to the vectors \(K^*\rho _{\gamma _1}, \ldots , K^*\rho _{\gamma _N}\) in \(L^2_H\). \(\square \)

4.3 Convergence Analysis

We prove sublinear convergence for Algorithm 1. The convergence rate is given in terms of the functional distance (33) associated with \(T_{\alpha ,\beta }\). Throughout the section, we assume that \(f \in L^2_H\) is a given datum, \(\alpha , \beta >0\) are fixed regularization parameters and (H1)–(H3), (K1)–(K3) as in Sect. 3.1 hold. The convergence result is stated as follows.

Theorem 7

Let \(\{\mu ^n\}\) be a sequence generated by Algorithm 1. Then, \(\{T_{\alpha ,\beta }(\mu ^n)\}\) is non-increasing and the residual at (33) satisfies

where \(C>0\) is a constant depending only on \(\alpha ,\beta \), f and \(K_t^*\). Moreover, each accumulation point \(\mu ^*\) of \(\mu ^n\) with respect to the weak* topology of \(\mathcal {M}\) is a minimizer for \(T_{\alpha ,\beta }\). If \(T_{\alpha ,\beta }\) admits a unique minimizer \(\mu ^*\), then \(\mu ^n {\mathop {\rightharpoonup }\limits ^{*}}\mu ^*\) along the whole sequence.

Remark 6

The proof of Theorem 7 follows similar steps to [18, Theorem 5.8] and [51, Theorem 5.4]. We highlight that the proof of monotonicity of \(\{T_{\alpha ,\beta }(\mu ^n)\}\) and of the decay estimate (50) does not make full use of the coefficients optimization step (Sect. 4.1.3) of Algorithm 1. Rather, the proof relies on energy estimates for the surrogate iterate \(\mu ^s := \mu ^n + s(M\mu _{\gamma *} - \mu ^n)\), where \(\gamma ^*\in \mathrm{AC}^2\) is a solution of the insertion step (43), \(M \ge 0\) a suitable constant, and the step-size s is chosen according to the Armijo–Goldstein condition (see [18, Section 5]). Since \(\mu ^s\) is a candidate for problem (44), the added coefficients optimization step in Algorithm 1 does not worsen the convergence rate.

Proof

Fix \(n \in \mathbb {N}\), and let \(\{\mu ^n\}\) be a sequence generated by Algorithm 1, which by construction is of the form (39). Recall that \(w^n_t:=-K_t(K_t^* \rho _t^n - f_t) \in C(\overline{\Omega })\) is the associated dual variable. Let \(\mu ^*=(\rho ^*,m^*)\) in \({{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\) be a solution to the insertion step, that is, \(\mu ^*\) solves (41). The existence of such \(\mu ^*\) is guaranteed by Proposition 4. Without loss of generality, we can assume that the algorithm does not stop at iteration n, that is, \( \langle \rho ^*, w^n \rangle >1\) according to the stopping condition in Sect. 4.1.2. In particular, \(\rho ^*\ne 0\). Recalling that \({{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })=\{0\} \cup \mathcal {C}_{\alpha ,\beta }\) (Theorem 1), we then have \(\mu ^*=\mu _{\gamma ^*}\) for some \(\gamma ^* \in \mathrm{AC}^2\). By the coefficients optimization step, the next iterate is of the form

and the coefficients \((c_1^{n+1},\ldots , c_{N_n}^{n+1},c^*)\) solve the quadratic problem

Claim. There exists a constant \(\tilde{C}>0\) independent of n such that, if \(T_{\alpha ,\beta }(\mu ^n) \le M_0\), then

To prove the above claim, assume that \(T_{\alpha ,\beta }(\mu ^n) \le M_0\). Define \(\hat{\mu }:=M\mu _{\gamma ^*}\), with \(M:=M_0 \langle \rho _{\gamma ^*}, w^n \rangle \). Since \(\mu _{\gamma ^*}\) solves (41) and \( \langle \rho _{\gamma ^*}, w^n \rangle >1\), by Proposition 4, we have that \(\hat{\mu }\) minimizes in (25) with respect to \(w^n\). Therefore, according to (32),

given that \(J_{\alpha ,\beta }(\mu ^n)\le T_{\alpha ,\beta }(\mu ^n) \le M_0 <+\infty \). For \(s \in [0,1]\), set \(\mu ^s:=\mu ^n + s (\hat{\mu }- \mu ^n)\). By convexity of \(J_{\alpha ,\beta }\) (see Lemma 11), we have \(J_{\alpha ,\beta }(\mu ^s)<+\infty \). Hence, we can apply the polarization identity at (35) with respect to \(w^n,\rho ^n,\rho ^s\) to obtain

where in the second line, we used convexity of \(J_{\alpha ,\beta }\) and the inequality \(t \le \varphi (t)\) for all \(t \ge 0\). Note that \(\mu ^s\) is a competitor for (51), so that \(T_{\alpha ,\beta }(\mu ^{n+1}) \le T_{\alpha ,\beta }(\mu ^s)\). Recalling (53), we then obtain

which holds for all \(s \in [0,1]\). Choose the stepsize \(\hat{s}\) according to the Armijo–Goldstein condition (see e.g., [51, Definition 4.1]) as

with the convention that \(C/0 = +\infty \) for \(C>0\). If \(\hat{s} <1\), by (54) and (34), we obtain

Since we are assuming \(T_{\alpha ,\beta }(\mu ^n) \le M_0\), by definition of \(T_{\alpha ,\beta }\), we deduce that \(\Vert \rho ^n\Vert _{\mathcal {M}(X)} \le M_0/\alpha \). Moreover, since \(\hat{\mu }= M\mu _{\gamma ^*}\), by (K2) in Sect. 3.1, the estimate \(a_{\gamma ^*} \le 1/\alpha \), and the Cauchy–Schwarz inequality yield

where \(C>0\) is the constant in (K2). Thus, we can estimate

with \(\tilde{C}>0\) not depending on n. Inserting the above estimate in (56) yields (52) and the claim follows. Assume now \(\hat{s}=1\), so that \( \Vert K^*(\hat{\rho }- \rho ^n)\Vert ^2_{L^2_H} \le G(\mu ^n)\). From (54) and (34), we obtain

As \(T_{\alpha ,\beta }(\mu ^n) \le M_0\), we also have \(r(\mu ^n) \le T_{\alpha ,\beta }(\mu ^n) \le M_0\). Therefore, we can find a constant \(\tilde{C}>0\) not depending on n such that \(\tilde{C}r(\mu ^n)^2 \le r(\mu ^n)\). Substituting the latter in (57) yields (52), and the proof of the claim is concluded.

Finally, we are in position to prove (50). Since \(\mu ^0=0\), we have that \(T_{\alpha ,\beta }(\mu ^0) \le M_0\). Thus, we can inductively apply (52) and obtain that \(T_{\alpha ,\beta }(\mu ^n) \le M_0\) for all \(n\in \mathbb {N}\). In particular, (52) holds for every \(n\in \mathbb {N}\) and, as a consequence, the sequence \(\{r(\mu ^n)\}\) is non-increasing. Setting \(r_n := r(\mu ^n)\), we then get

and (50) follows. The remaining claims follow from the weak* lower semicontinuity of \(T_{\alpha ,\beta }\) (Theorem 3) and estimate (A2), given that \(\{\mu ^n\}\) is a minimizing sequence for (\(\mathcal {P}\)). \(\square \)

4.4 Stopping Condition

We prove the optimality statements in the stopping criterion for the core algorithm anticipated in Sect. 4.1.2. In the following, G denotes the primal-dual gap introduced in (32). We denote by \(\mu ^n\) the n-th iterate of Algorithm 1, which is of the form (39), and by \(w^n\) the corresponding dual variable (40). Moreover, let \(\mu _{\gamma ^*}\) be the atom associated with the curve \(\gamma ^* \in \mathrm{{AC}}_{\infty }^2\) solving the insertion step (43).

Lemma 8

For all \(n \in \mathbb {N}\), we have

where \(M_0\ge 0\) is defined at (21). In particular, \(\mu ^n\) is a solution of (\(\mathcal {P}\)) if and only if \( \langle \rho _{\gamma ^*}, w^n \rangle \le 1\).

Before proving Lemma 8, we give a quantitative version of the stopping condition of Sect. 4.1.2.

Remark 7

With the same notations as above, consider the condition

where \(\mathrm{TOL}>0\) is a fixed tolerance. Notice that \(G(\mu ^n) =\Lambda ( \langle \rho _{\gamma ^*}, w^n \rangle )\) by Lemma 8. Thus, assuming (59), and using (34), we see that the functional residual defined at (33) satisfies \(r(\mu ^n) <\mathrm{TOL}\), i.e., \(\mu ^n\) almost minimizes (\(\mathcal {P}\)), up to the tolerance. Therefore, the condition at (59) can be employed as a quantitative stopping criterion for Algorithm 1.

Proof of Lemma 8

We start by computing \(G(\mu ^n)\) for a fixed \(n \in \mathbb {N}\). Set \(\hat{\mu }:=M\mu _{\gamma ^*}\), where M is defined as in (27) with \(w=w^n\). Since \(\mu _{\gamma ^*} \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\) solves (41), we have that \(\hat{\mu }\) solves (25) with respect to \(w^n\) (see Proposition 4). By Theorem 7, the sequence \(\{T_{\alpha ,\beta }(\mu ^n)\}\) is non-increasing. Thus, \(J_{\alpha ,\beta }(\mu ^n) \le T_{\alpha ,\beta }(\mu ^n) \le T_{\alpha ,\beta }(\mu ^0) = M_0<+\infty \), since \(\mu ^0=0\). Then, (32) reads

Notice that by one-homogeneity of \(J_{\alpha ,\beta }\) (see Lemma 11) and the fact that \(\mu _{\gamma ^*} \in {{\,\mathrm{Ext}\,}}(C_{\alpha ,\beta })\), we have \(J_{\alpha ,\beta }(\hat{\mu })=M\). Recalling the definition of \(\varphi \) at (20), by direct calculation, we obtain

We now compute the remaining terms in (60). By (44) and (45) at the step \(n-1\), we know that the coefficients \(c^n=(c_1^n,\ldots ,c_{N_n}^n) \in \mathbb {R}^{N_n}_{++}\) of \(\mu ^{n}\) solve the minimization problem

Since \(\gamma _j^n\) in (39) is continuous, we have that \((t \mapsto \delta _{\gamma _j^n(t)}) \in C_\mathrm{w}^+\) and thus \(t \mapsto K_t^* \delta _{\gamma _j^n(t)}\) belongs to \(L^2_H\), by Lemma 12. In view of (39), definition of \(T_{\alpha ,\beta }\) and Lemma 2, we can expand the expression at (62), differentiate with respect to each component of c, and recall that \(c^n\) is optimal in (62), to obtain

which holds for all \(i=1,\ldots ,N_n\). By Lemma 2 and linearity of \( \langle \cdot , \cdot \rangle \), we obtain the identity \(J_{\alpha ,\beta }(\mu ^n) = \langle \rho ^n, w^n \rangle \). The latter, together with (60) and (61), yields (58). For the remaining part of the statement, notice that by (58), we have \(G(\mu ^n)=0\) if and only if \( \langle \rho _{\gamma ^*}, w^n \rangle \le 1\). Therefore, the thesis follows by Lemma 5. \(\square \)

4.5 Time-Discrete Version

The minimization problem (\(\mathcal {P}\)) presented in Sect. 3 is posed for time-continuous measurements \(f \in L^2_H\), whose discrepancy to the reconstructed curve \(t \mapsto \rho _t\) is modeled by the fidelity term \(\mathcal {F}\) at (16). In real-world applications, however, the measured data are time-discrete, i.e., we can assume that measurements are taken at times \(0 = t_0< t_1< \ldots < t_T = 1\), with \(T \in \mathbb {N}\) fixed. Hence, the data are of the form \(f = (f_{t_0}, f_{t_1},\ldots , f_{t_T})\), where \(f_{t_i} \in H_{t_i}\).

For this reason, and with the additional goal of lowering the computational cost, we decided to present numerical experiments (Sect. 6) where the time-continuous fidelity term \(\mathcal {F}\) is replaced by a discrete counterpart. In Sect. 4.5.1, we show how to modify the mathematical framework discussed so far, in order to deal with the time-discrete case. Consequently, it is immediate to adapt Algorithm 1 to the resulting time-discrete functional, as discussed in Sect. 4.5.2. We remark that all the results up to this point will hold, in a slightly modified version, also for the discrete setting discussed below.

4.5.1 Time-Discrete Framework

We replace problem (\(\mathcal {P}\)) with

where the time-discrete fidelity term \(\mathcal {F}^{\mathcal {D}} :\mathcal {M}\rightarrow [0,+\infty ]\) is defined by

Here, \(H_{t_i}\) are real Hilbert spaces, and the given data vector \(f=(f_{t_0},\ldots ,f_{t_T})\) satisfies \(f_{t_i} \in H_{t_i}\) for all \(i=0,\ldots ,T\). The forward operators \(K_{t_i}^* :\mathcal {M}(\overline{\Omega }) \rightarrow H_i\) are assumed to be linear continuous and weak*-to-weak continuous, for each \(i=0,\ldots ,T\). The minimization problem (\(\mathcal {P}_{discr}\)) is well-posed by the direct method of calculus of variations: indeed \(T^{\mathcal {D}}_{\alpha ,\beta }\) is proper, \(J_{\alpha ,\beta }\) is weak* lower semicontinuous and coercive in \(\mathcal {M}\) (Lemma 11), and \(\mathcal {F}^{\mathcal {D}}\) is lower semicontinuous with respect to the convergence in (A3). In particular, a solution \(\mu ^*= (\rho ^*, m^*)\) to (\(\mathcal {P}_{discr}\)) will satisfy \(\rho ^*=\mathrm{d}t \otimes \rho _t^*\) with \((t \mapsto \rho _t^* )\in C_\mathrm{w}^+\). We now define the other quantities which are needed to formulate the discrete counterpart of the theory developed so far. For a given curve of measures \((t \rightarrow \tilde{\rho }_t) \in C_\mathrm{w}\), the corresponding dual variable (22) is redefined to be

for each \(i=0,\ldots ,T\). Consequently, we redefine the associated scalar product (23) to

It is straightforward to check that all the results in Sect. 3 hold with \(T_{\alpha ,\beta }\) and \( \langle \cdot , \cdot \rangle \) replaced by \(T^{\mathcal {D}}_{\alpha ,\beta }\) and \( \langle \cdot , \cdot \rangle _{\mathcal {D}}\), respectively, with the obvious modifications. In particular, the problem

admits a solution \(\mu ^*\), where \(C_{\alpha ,\beta }:=\{ J_{\alpha ,\beta }(\mu )\le 1\}\). One can perform a similar computation to the one at (42) to obtain equivalence between (65) and

Remark 8

Assume additionally that \(\overline{\Omega }\) is convex. Then, a solution \(\gamma ^* \in \mathrm{{AC}}_{\infty }^2\) to problem (66) is either \(\gamma ^*=\gamma _\infty \), or \(\gamma ^* \in \mathrm{AC}^2\) with \(\gamma ^*\) linear in each interval \([t_i,t_{i+1}]\). Indeed, given any curve \(\gamma \in \mathrm{AC}^2\), denote by \(\tilde{\gamma }\) the piecewise linear version of \(\gamma \) sampled at \(t_i\) for \(i=0,\ldots ,T\). Then \(\langle \rho _\gamma ,w \rangle _{\mathcal {D}} \le \langle \rho _{\tilde{\gamma }} ,w \rangle _{\mathcal {D}}\), due to the inequality \(\int _0^1 \left|\dot{\tilde{\gamma }}(t)\right|^2 \, \mathrm{d}t \le \int _0^1 \left|\dot{\gamma }(t)\right|^2 \, \mathrm{d}t\).

4.5.2 Adaption of Algorithm 1 to the Time-Discrete Setting

The core algorithm in Sect. 4.1 is readily adaptable to the task of minimizing (\(\mathcal {P}_{discr}\)). To this end, let \(f_{t_i} \in H_{t_i}\) be a given datum and \(\alpha ,\beta >0\) be fixed parameters for (\(\mathcal {P}_{discr}\)). Assume that \(\mu ^n\) is the current sparse iterate, of the form (39). The dual variable associated with \(\mu ^n\) is defined, according to (64), by \(w_{t_i}^n := -K_{t_i}( K_{t_i}^* \rho _{t_i}^n - f_{t_i})\). Similarly to Sect. 4.1.2, the insertion step in the time-discrete version consists in finding a curve \(\gamma ^* \in \mathrm{{AC}}_{\infty }^2\) which solves (66) with respect to \(w^n_i\). Such curve defines a new atom \(\mu _{\gamma ^*}\) according to Definition 2. Adapting the proofs of Lemmas 5, 8 to the discrete setting, one deduces the following stopping condition:

-

if \(\langle \rho _{\gamma ^*}, w^n\rangle _{\mathcal {D}} \le 1\), then \(\mu ^n\) is solution to (\(\mathcal {P}_{discr}\)). The algorithm outputs \(\mu ^n\) and stops,

-

if \(\langle \rho _{\gamma ^*}, w^n\rangle _{\mathcal {D}} > 1\), then \(\mu ^n\) is not a solution to (\(\mathcal {P}_{discr}\)) and \(\gamma ^* \in \mathrm{AC}^2\). The found atom \(\mu _{\gamma ^*}\) is inserted in the n-th iterate \(\mu ^n\) and the algorithm continues.

Set \(\gamma _{N_n+1}^n := \gamma ^*\). The time-discrete version of the coefficients optimization step discussed in Sect. 4.1.3 consists in solving

By proceeding as in Sect. 4.2, one can check that (67) is equivalent to a quadratic program of the form (46), where the matrix \(\Gamma \in \mathbb {R}^{(N_n +1) \times (N_n+1)}\) and the vector \(b \in \mathbb {R}^{N_n+1}\) are given by

In view of Remark 8 and of the above construction, we note that the iterates of the discrete algorithms are of the form (39) with \(\gamma _j^n \in \mathrm{AC}^2\) piecewise linear. Finally, we remark that the time-discrete algorithm obtained with the above modifications has the same sublinear rate of convergence stated in Theorem 7.

5 The Algorithm: Numerical Implementation

This aim of this section is twofold. First, in Sect. 5.1, we describe how to approach the minimization of the insertion step problem (43) by means of gradient descent strategies. This analysis is performed under additional assumptions on the operators \(K_t :H_t \rightarrow C(\overline{\Omega })\), which, loosely speaking, require that \(K_t\) map into the space \(C^{1,1}(\overline{\Omega })\) of differentiable functions with bounded Lipschitz gradient. The strategies proposed will result in the multistart gradient descent Subroutine 1 (Sect. 5.1.5), which, given a dual variable w, outputs a set of stationary points for (43). Then, in Sect. 5.2, we present two acceleration steps that can be added to Algorithm 1 to, in principle, enhance its performance. The first acceleration strategy, called the multiple insertion step, proceeds by adding all the outputs of Subroutine 1 to the current iterate. The second strategy, termed sliding step, consists in locally descending the target functional \(T_{\alpha ,\beta }\) at (\(\mathcal {P}\)) in a neighborhood of the curves composing the current iterate, while keeping the coefficients fixed. These strategies are finally added to Algorithm 1. The outcome is Algorithm 2, presented in Sect. 5.3, which we name dynamic generalized conditional gradient (DGCG).

5.1 Insertion Step Implementation

We aim at minimizing the linearized problem (43) in the insertion step, which is of the form

where the dual variable \(t \mapsto w_t\) is defined for a.e. \(t \in (0,1)\) by \(w_t:=-K_t(K_t^* \tilde{\rho }_t - f_t) \in C(\overline{\Omega })\), for some curve \((t \mapsto \tilde{\rho }_t) \in C_\mathrm{w}\) and data \(f \in L^2_H\) fixed. In (68), we also identified \(\mathrm{AC}^2\) with \(H^1([0,1];\overline{\Omega })\), and employed the notations at (14). We remind the reader that although (68) admits solutions (see Sect. 4.1.1), in practice, they may be difficult to compute numerically (Remark 4). Therefore, we turn our attention at finding stationary points for the functional F at (68), relying on gradient descent methods. To make this approach feasible, we require additional assumptions on the operators \(K_t^*\) (see Assumption 3), which allow to extend the functional F to the Hilbert space \(H^1:=H^1([0,1];\mathbb {R}^d)\) and make it Fréchet differentiable, without altering the value of the minimum at (68). In particular, this allows for a gradient descent procedure to be well-defined (Sect. 5.1.1). With this at hand, in Sect. 5.1.2, we define a descent operator \(\mathcal {G}_w\) associated with F, which, for a starting curve \(\gamma \in H^1\), outputs either a stationary point of F or the infinite length curve \(\gamma _\infty \). The starting curves for \(\mathcal {G}_w\) are of two types:

-

random starts which are guided by the values of the dual variable w (Sect. 5.1.3),

-

crossovers between known stationary points (Sect. 5.1.4).

We then propose a minimization strategy for (68), which is implemented in the multistart gradient descent algorithm contained in Subroutine 1 (Sect. 5.1.5). Such algorithm inputs a set of curves and a dual variable w, and returns a set \(\mathcal {S} \subset \mathrm{AC}^2\), which is either empty or contains stationary curves for F at (68).

5.1.1 Minimization Strategy

The additional assumptions required on \(K_t^*\) are as follows.

Assumption 3

For a.e. \(t \in (0,1)\), the linear continuous operator \(K_t^* :C^{1,1}(\overline{\Omega })^* \rightarrow H_t\) satisfies

-

(F1)

\(K_t^*\) is weak*-to-weak continuous, with pre-adjoint denoted by \(K_t: H_t \rightarrow C^{1,1}(\overline{\Omega })\),

-

(F2)

\(\left\Vert K^*_t\right\Vert \le C\) for some constant \(C>0\) not depending on t,

-

(F3)

the map \(t \mapsto K_t^*\rho \) is strongly measurable for every fixed \(\rho \in \mathcal {M}(\overline{\Omega })\),

-

(F4)

there exists a closed convex set \(E \Subset \Omega \) such that \( {{\,\mathrm{supp}\,}}(K_t f), {{\,\mathrm{supp}\,}}(\nabla (K_t f))\)\(\subset E\) for all \(f \in H_t\) and a.e. \(t \in (0,1)\), where \(\nabla \) denotes the spatial gradient.

Notice that (F1)–(F3) imply (K1)–(K3) of Sect. 3.1, due to the embedding \(\mathcal {M}(\overline{\Omega }) \hookrightarrow C^{1,1}(\overline{\Omega })^*\). Also note that (F4) has no counterpart in the assumptions of Sect. 3.1, and is only assumed for computational convenience, as discussed below. The problem of solving (68) under (F1)–(F4) is addressed in Appendix 1; here, we summarize the main results obtained. First, we extend F to the Hilbert space \(H^1\), by setting \(w_t(x):=0\) for all \(x\in \mathbb {R}^d \smallsetminus \overline{\Omega }\) and a.e. \(t \in (0,1)\). Due to (F4), we have that \(w_t \in C^{1,1}(\mathbb {R}^d)\). We then show that F is continuously Fréchet differentiable on \(H^1\), with locally Lipschitz derivative (Proposition 16).

Denote by \(D_\gamma F \in (H^1)^*\), the Fréchet derivative of F at \(\gamma \) (see (A13) for the explicit computation) and introduce the set of stationary points of F with nonzero energy

In Proposition 17, we prove that all the points in \(\mathfrak {S}_F\) satisfy \(\gamma ([0,1]) \subset \Omega \). As a consequence, \(\mathfrak {S}_F\) contains all the solutions to the insertion problem (68) whenever

Remark 9

The case (69) is the only one of interest: indeed Algorithm 1 stops if (69) is not satisfied, with the current iterate being a solution to the target problem (\(\mathcal {P}\)) (see Sect. 4.1.2). In Proposition 19, we prove that (69) is equivalent to

As P(w) is easily computable, condition (70) provides an implementable test for (69). Moreover, note that (69) is satisfied when F is computed from the dual variable \(w^n\) associated with the n-th iterate \(\mu ^n=\sum _{j=1}^{N_n}c_j^n \delta _{\gamma _j^n}\) of Algorithm 1, and \(n \ge 1\). This is because \(F(\gamma _j^n)=-1\) for all \(j=1,\ldots ,N_n\), as shown in (63).

If (69) is satisfied, we aim at computing points in \(\mathfrak {S}_F\) by gradient descent. To this end, we say that \(\{\gamma ^n\}\) in \(H^1\) is a descent sequence if

where \(D_{\gamma ^n}F \in (H^1)^*\) is identified with its Riesz representative in \(H^1\), and \(\{\delta _n\}\) is a stepsize chosen according to the Armijo–Goldstein or Backtracking-Armijo rules. In Theorem 14, we prove that if \(\{\gamma ^n\}\) is a descent sequence, there exists at least a subsequence such that \(\gamma ^{n_k} \rightarrow \gamma ^*\) strongly in \(H^1\); moreover, any such accumulation point \(\gamma ^*\) belongs to \(\mathfrak {S}_F\). To summarize, descent sequences in the sense of (71) enable us to compute points in \(\mathfrak {S}_F\), which are candidate solutions to (68) whenever (69) holds.

5.1.2 Descent Operator

Fix a dual variable w and consider the functional F defined as in (68). The descent operator \(\mathcal {G}_w :H^1([0,1];\overline{\Omega }) \rightarrow H^1 \cup \{\gamma _\infty \}\) associated with w is defined by

where \(\gamma ^*\) is an accumulation point for the descent sequence \(\{\gamma ^n\}\) defined according to (71) with starting point \(\gamma ^0:=\gamma \). In view of the discussion in the previous section, we know that the image of \(\mathcal {G}_w\) is contained in \(\mathfrak {S}_F\cup \{\gamma _\infty \}\). Note that if \(P(w)>0\), in principle, the image of \(\mathcal {G}_w\) will contain at least one stationary point \(\gamma ^* \in \mathfrak {S}_F\), as in this case (69) holds (Remark 9). However, in simulations, we can only compute \(\mathcal {G}_w\) on some finite family of curves \(\{ \gamma _i\}_{i \in I}\), which we name the starts. Thus, in general, we have no guarantee of finding points in \(\mathfrak {S}_F\), even if (69) holds. The situation improves if \(w=w^n\) is the dual variable associated with the n-th iterate \(\mu ^n=\sum _{j=1}^{N_n}c_j^n \delta _{\gamma _j^n}\) of Algorithm 1 and \(n \ge 1\). In this case, setting \(\mathcal {A}:=\{\gamma _{1}^n, \ldots , \gamma _{N_n}^n\}\), we have that \(\mathcal {G}_{w^n}(\mathcal {A}) \subset \mathfrak {S}_F\) by definition (72) and Remark 9. Therefore, by including the curves in \(\mathcal {A}\) in the set of considered starts, we are guaranteed of obtaining at least one point in \(\mathfrak {S}_F\).

5.1.3 Random Starts

We now describe how we randomly generate starting points \(\gamma \) in \(H^1([0,1];\overline{\Omega })\) for the descent operator \(\mathcal {G}\) at (72). We start by selecting time nodes \(0=t_0< t_1< \ldots < t_T=1\) drawn uniformly in [0, 1]. (If operating in the time-discrete setting, we sample instead with a uniform probability on the finite set of sampling times on which the fidelity term of (\(\mathcal {P}_{discr}\)) is defined.) We choose the value of a random start \(\gamma \) at time \(t_i\) seeking to maximize the dual variable \(w_{t_i}\). To achieve this, let \(Q: \mathbb {R}\rightarrow \mathbb {R}_+\) be non-decreasing and monotonous, and define the probability measure on E

for \(A \subset E\) Borel measurable and \(E \Subset \Omega \) introduced in Assumption 3. We then draw samples from \(\mathbb {P}_{w_{t_i}}\) with the rejection-sampling algorithm, and assign those samples to \(\gamma (t_i)\). Using that E is a convex set, the random curve \(\gamma \in \mathrm{AC}^2\) is obtained by interpolating linearly the values \(\gamma (t_i) \in E\). This procedure is executed by the routine sample, which inputs a dual variable w and outputs a randomly generated curve \(\gamma \in \mathrm{AC}^2\).

5.1.4 Crossovers Between Stationary Points

It is heuristically observed that stationary curves have a tendency to share common “routes,” as for example seen in the reconstructions presented in Figs. 4 and 5. It is then a reasonable ansatz to combine curves which are sharing routes, in order to increase the likelihood for the newly obtained crossovers to share common routes with the sought global minimizers of F. Such crossovers will then be employed as starts for the descent operator G at (72). Formally, the crossover is achieved as follows. We fix small parameters \(\varepsilon >0\) and \(0<\delta <1\). For \(\gamma _1, \gamma _2 \in \mathrm{AC}^2\) define the set

We say that \(\gamma _1\) and \(\gamma _2\) share routes if \(R_\varepsilon (\gamma _1,\gamma _2) \ne \emptyset \). If \(R_\varepsilon (\gamma _1,\gamma _2) = \emptyset \), we perform no operations on \(\gamma _1\) and \(\gamma _2\). If instead \(R_\varepsilon (\gamma _1,\gamma _2) \ne \emptyset \), first notice that \(R_\varepsilon (\gamma _1,\gamma _2)\) is relatively open in [0, 1]. Denote by I any of its connected components. Then, I is an interval with endpoints \(t^-\) and \(t^+\), satisfying \(0\le t^- < t^+\le 1\). The crossovers of \(\gamma _1\) and \(\gamma _2\) in I are the two curves \(\gamma _3, \gamma _4 \in \mathrm{AC}^2\) defined by

and linearly interpolated in \((\hat{t} - \delta \tilde{t}, \hat{t} + \delta \tilde{t})\), where \(\hat{t} :=(t^++t^-)/2\) and \(\tilde{t} :=(t^+-t^-)/2\), i.e.,