Abstract

In many situations, it is crucial to estimate the variance properly. Ordinary variance estimators perform poorly in the presence of shifts in the mean. We investigate an approach based on non-overlapping blocks, which yields good results in change-point scenarios. We show the strong consistency and the asymptotic normality of such blocks-estimators of the variance under independence. Weak consistency is shown for short-range dependent strictly stationary data. We provide recommendations on the appropriate choice of the block size and compare this blocks-approach with difference-based estimators. If level shifts occur frequently and are rather large, the best results can be obtained by adaptive trimming of the blocks.

Similar content being viewed by others

1 Introduction

We consider a sequence of random variables \(Y_1,\ldots ,Y_N\) generated by the model

Most of the time we assume that \(X_1,\ldots ,X_N\) are i.i.d. random variables with \(E\left( X_t\right) =\mu \) and \({\hbox {Var}}\left( X_t\right) = \sigma ^2\), but this will be relaxed occasionally to allow for a short-range dependent strictly stationary sequence. The observed data \(y_1,\ldots ,y_N\) are affected by an unknown number K of level shifts of possibly different heights \(h_1,\ldots ,h_K\) at different time points \(t_1,\ldots ,t_K\). Our goal is the estimation of the variance \(\sigma ^2\). Without loss of generality, we will set \(\mu = 0\) in the following.

In Sect. 2 we analyse estimators of \(\sigma ^2\) from the sequence of observations \((Y_t)_{t\ge 1}\) by combining estimates obtained from splitting the data into several blocks. Without the need of explicit distributional assumptions the mean of the blockwise estimates turns out to be consistent if the size and the number of blocks increases, and the number of jumps increases slower than the number of blocks. If many jumps in the mean are expected to occur, an adaptively trimmed mean of the blockwise estimates can be used, see Sect. 3. In Sect. 4 a simulation study is conducted to assess the performance of the proposed approaches. In Sect. 5 the estimation procedures are applied to real data sets, while Sect. 6 summarizes the results of this paper.

2 Estimation of the variance by averaging

When dealing with independent identically distributed data the sample variance is the common choice for estimation of \(\sigma ^2\). However, if we are aware of a possible presence of level shifts at unknown locations, it is reasonable to divide the sample \(Y_1,\ldots ,Y_N\) into m non-overlapping blocks of size \(n = \lfloor N / m\rfloor \) and to calculate the average of the m sample variances derived from the different blocks. A similar approach has been used in Dai et al. (2015) in the context of repeated measurements data and in Rooch et al. (2019) for estimation of the Hurst parameter.

The blocks-estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) of the variance investigated here is defined as

where \(S_j^2 = \frac{1}{n-1}\sum _{t = 1}^{n} (Y_{j, t} - \overline{Y}_j)^2\), \(\overline{Y}_j = \frac{1}{n}\sum _{t = 1}^{n} Y_{j,t}\) and \(Y_{j, 1},\ldots ,Y_{j, n}\) are the observations in the jth block. We are interested in finding the block size n which yields a low mean squared error (MSE) under certain assumptions.

In what follows, we will concentrate on the situation where all jump heights are positive. This is a worse scenario than having both, positive and negative jumps, since the data are more spread in the former case resulting in a larger positive bias of most scale estimators.

2.1 Asymptotic properties

We will use some algebraic rules for derivation of the expectation and the variance of quadratic forms in order to calculate the MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\), see Seber and Lee (2012). Let B be the number of blocks with jumps in the mean and \(K\ge B\) the total number of jumps. The expected value and the variance of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) are given as follows:

where \(\nu _4 = E\left( X_1^4\right) ,\, A = {\mathbb {I}}_n - \frac{1}{n}1_n1_n^{\mathrm{T}}\), \({\mathbb {I}}_n\) is the unit matrix, \(1_n = (1,\ldots ,1)^{\mathrm{T}}\) and \(\mu _j\) contains the expected values of the random variables in the perturbed block \(j=1,\ldots ,B\), i.e., \(\mu _j = (\mu _{j, 1}, \ldots , \mu _{j, n})^{\mathrm{T}} = (E(Y_{j, 1}), \ldots , E(Y_{j, n}))^{\mathrm{T}}\). The term \(\mu _j^{\mathrm{T}} A \mu _j/(n-1)\) is the empirical variance of the expected values \(E(Y_{j, 1}), \ldots , E(Y_{j, n})\) in block j. In a jump-free block, we have \(\mu _j^{\mathrm{T}} A \mu _j = 0\), since all expected values and therefore the elements of \(\mu _j\) are equal.

The blocks-estimator (2) estimates the variance consistently if the number of blocks grows sufficiently fast as is shown in Theorem 1.

Theorem 1

Let \(Y_{1},\ldots ,Y_{N}\)with \(Y_t = X_t + \sum _{k = 1}^{K} h_k I_{t \ge t_k}\)from Model (1) be segregated into m blocks of size n, where \(t_1,\ldots ,t_K\)are the time points of the jumps of size \(h_1,\ldots ,h_K\), respectively. Let B out of m blocks be contaminated by \(\widetilde{K}_1,\ldots ,\widetilde{K}_B\)jumps, respectively, with \(\sum _{j = 1}^B \widetilde{K}_j = K = K(N)\). Moreover, let \(E(|X_1|^4)<\infty \), \( K \left( \sum _{k = 1}^K h_k \right) ^2 = o\left( m \right) \)and \(m\rightarrow \infty \), whereas the block size n can be fixed or increasing as \(N\rightarrow \infty \). Then \(\widehat{\sigma ^2}_{\mathrm{Mean}} = \frac{1}{m}\sum _{j = 1}^{m} S_j^2 \rightarrow \sigma ^2\)almost surely.

Proof

Without loss of generality assume that the first B out of m blocks are contaminated by \(\widetilde{K}_1,\ldots ,\widetilde{K}_B\) jumps, respectively. Let the term \(S^2_{j, 0}\) denote the empirical variance of the uncontaminated data in block j, while \(S^2_{j, h}\) is the empirical variance when \(\widetilde{K}_j\) level shifts are present. Moreover, \(Y_{j, 1},\ldots ,Y_{j, n}\) are the observations in the jth block, \(\mu _{j,t} = E\left( Y_{j, t}\right) \) and \(\overline{\mu }_{j} = \frac{1}{n} \sum _{t = 1}^n E\left( Y_{j, t}\right) \). Then we have

For the second term in the last Eq. (3), we have almost surely

The term \(\frac{1}{B} \sum _{j = 1}^{B} \frac{1}{n} \sum _{t = 1}^n \left| (X_{j, t} - \overline{X}_j)\right| \) in (4) is a random variable with finite moments if n and B are fixed. This random variable converges to the term \(E\left( \frac{1}{n}\sum _{t = 1}^n \left| (X_{j, t} - \overline{X}_j)\right| \right) \) almost surely if \(B\rightarrow \infty \). In the case of \(B\rightarrow \infty \) and \(n\rightarrow \infty \) this term converges to \( E\left( |X_1|\right) \) almost surely due to Theorem 2 of Hu et al. (1989) and the condition \(E(|X_1|^4)<\infty \), since \(S_j^2 - E\left( S_j^2\right) \) are uniformly bounded with \(P(|S_j^2 - E\left( S_j^2\right) |>t)\rightarrow 0\,\forall t\) due to Chebyshev’s inequality and \({\hbox {Var}}(S_j^2) \rightarrow 0\). Moreover, we used the fact that \(B \left| \sum _{k = 1}^K h_k \right| \le K \left| \sum _{k = 1}^K h_k \right| = o(m)\).

The following is valid for the third term in (3):

The first term of the last equation in (3) converges almost surely to \(\sigma ^2\) due to the results on triangular arrays in Theorem 2 of Hu et al. (1989), assuming that the condition \(E(|X_1|^4)<\infty \) holds, since \(S_j^2 - E\left( S_j^2\right) \) are uniformly bounded with \(P(|S_j^2 - E\left( S_j^2\right) |>t)\rightarrow 0\,\forall t\) due to Chebyshev’s inequality and \({\hbox {Var}}(S_j^2) \rightarrow 0\). Application of Slutsky’s Theorem proves the result. \(\square \)

Remark 2

-

1.

If the jump heights are bounded by a constant \(h\ge h_k,\, k = 1,\ldots ,K,\) the strongest restriction arises if all heights equal this upper bound resulting in the constraint \( K \left( \sum _{k = 1}^K h_k \right) ^2 = K^3h^2 = o\left( m \right) \). Consistency is thus guaranteed if the number of blocks grows faster than \(K^3\).

-

2.

By the Central Limit Theorem, the estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is asymptotically normal if no level shifts are present and the block size n is fixed. Its asymptotic efficiency relative to the ordinary sample variance is

$$\begin{aligned} \frac{{\hbox {Var}}(S^2)}{{\hbox {Var}}\left( \widehat{\sigma ^2}_{\mathrm{Mean}}\right) } = \frac{\frac{\nu _4}{N} - \frac{\sigma ^4(N-3)}{N(N-1)} }{\frac{1}{m}\left( \frac{\nu _4}{n} - \frac{\sigma ^4(n-3)}{n(n-1)}\right) } = \frac{ \nu _4 - \frac{\sigma ^4(N-3)}{ (N-1)} }{ \nu _4 - \frac{\sigma ^4(n-3)}{ (n-1)} } \overset{N \rightarrow \infty }{\longrightarrow } \frac{ \nu _4 - \sigma ^4 }{ \nu _4 - \frac{\sigma ^4(n-3)}{ (n-1)} } \end{aligned}$$in case of i.i.d data with finite fourth moments, where

$$\begin{aligned} {\hbox {Var}}\left( \widehat{\sigma ^2}_{\mathrm{Mean}}\right) = {\hbox {Var}}\left( \frac{1}{m} \sum _{j = 1}^m S_j^2\right) = \frac{1}{m} {\hbox {Var}}\left( S_1^2\right) = \frac{1}{m}\left( \frac{\nu _4}{n} - \frac{\sigma ^4(n-3)}{n(n-1)}\right) \end{aligned}$$(see Angelova (2012) for the variance \({\hbox {Var}}(S_1^2)\) of the sample variance). E.g., under normality the efficiency of the blocks estimater with fixed n is \( (n-1)n^{-1}<1.\)

-

3.

The asymptotic efficiency of the blocks-estimator relative to the sample variance is 1 if \(n\rightarrow \infty \).

The next Theorem shows that \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is asymptotically not only normal but even fully efficient in case of a growing block size.

Theorem 3

Assume that the i.i.d. random variables \(Y_1,\ldots ,Y_N\)are segregated into m blocks of size n, with \(m,\, n\rightarrow \infty \)such that \(m = o\left( n\right) , n = o\left( N\right) \). Moreover, assume that \(\nu _4 = E\left( X_1^4\right) <\infty \). Then we have

Proof

Rewriting the estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) we get

For the second term of the numerator in (5), we have that

since \(m = o(n)\). Convergence of the term (6) in mean implies convergence in probability to zero. Application of the Central Limit Theorem to the remaining terms of (5) yields the desired result. \(\square \)

Remark 4

In the proof of Theorem 3, we have assumed that \(m=o(n)\), i.e., the block size grows faster than the number of blocks. This condition can be dropped using the Lyapunov condition under the assumption of finite eighth moments, as will be shown in the following. We set

with \(E(T_{i,j}) = 0\) and \(\sum _{j = 1}^{m_i} E(T_{i,j}^2) = 1\,\, \forall i,\) where i denotes the ith row of the triangular array. The Lyapunov condition [see corollary 1.9.3 in Serfling (1980)] is the following:

With \(\delta = 2\) and existing moments \(\nu _1,\ldots ,\nu _8\) of \(X_1\) we get

where g is a function of the existing moments \(\nu _1,\ldots ,\nu _8\) with \(g(\nu _1,\ldots ,\nu _8) = O(1)\). See Angelova (2012) for the fourth central moment of the sample variance. Therefore, the condition \(m = o(n)\) can be dropped.

2.2 Choice of the block size

When choosing blocks of length \(n = 2\), the estimator \(\widehat{\sigma }^2_{\mathrm{Mean}}\) results in a difference-based estimator which considers \(\lfloor N/2\rfloor \) consecutive non-overlapping differences:

Difference-based estimators have been considered in many papers, see Von Neumann et al. (1941), Rice (1984), Gasser et al. (1986), Hall et al. (1990), Dette et al. (1998), Munk et al. (2005), Tong et al. (2013), among many others. Dai and Tong (2014) discussed estimation approaches based on differences in nonparametric regression context, Wang et al. (2017) considered an estimation technique which involves differences of second order, while Tecuapetla-Gómez and Munk (2017) proposed a difference-based estimator for m-dependent data. An ordinary difference-based estimator of first order, which considers all \(N-1\) consecutive differences, is [see, e.g., Von Neumann et al. (1941)]:

where \(A = \tilde{A}^{\mathrm{T}} \tilde{A} \) and \(\tilde{A}\) such that \(\tilde{A} Y = \left( Y_2 - Y_1,\ldots ,Y_N - Y_{N-1} \right) ^{\mathrm{T}} .\) Theorems 1.5 and 1.6 in Seber and Lee (2012) are used to calculate the expectation and the variance of the estimator \(\widehat{\sigma ^2}_{\mathrm{Diff}}\):

where \(\mu = E(Y) \), \(\nu _i = E\left( X_1^i\right) \) and a is a vector of the diagonal elements of A.

When no changes in the mean are present both, the difference-based and the averaging estimators, are unbiased. For the variance of the estimators we have

For example, for \(N = 100\) and a block size \(n = 10,\) we get \({\hbox {Var}}\left( \widehat{\sigma ^2}_{\mathrm{Mean}}\right) = 0.0222\), while \({\hbox {Var}}\left( \widehat{\sigma ^2}_{\mathrm{Diff}}\right) = 0.0302\). Therefore, when no changes in the mean are present the block-estimator can have smaller variance than the difference-based estimator. In Sect. 4 we compare the performance of the proposed estimation procedures with that of the difference-based estimator (7) in different change-point scenarios.

In the following, we investigate the proper choice of the block size for the estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}} \). All calculations in this paper have been performed with the statistical software +R+, version 3.5.2, R Core Team (2018).

For known jump positions the MSE of the blocks-variance estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}} \) can be determined analytically. The position of the jump is relevant for the performance of this approach. Therefore, it is reasonable to consider different positions of the K jumps to get an overall assessment of the performance of the blocks-estimator. For every \(K\in \{1, 3, 5\}\), we generate K jumps of equal heights \(h = \delta \cdot \sigma \), with \(\delta \in \{0, 0.1,0.2,\ldots ,4.9,5\}\), at positions sampled randomly from a uniform distribution on the values \(\max _{n}\left( N - \lfloor N / n\rfloor n \right) + 1,\ldots ,N - \max _{n}\left( N - \lfloor N / n\rfloor n \right) \) without replacement, and calculate the MSE for every reasonable block size \(n\in \{2,3,4,\ldots ,\lfloor N/2\rfloor \}\). This is repeated 1000 times, leading to 1000 MSE values for every h and n based on different jump positions. The average of these MSE values is taken for each h and n. Data are generated from the standard normal or the \(t_5\)-distribution.

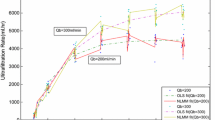

Panel (a) of Fig. 1 shows the block size \(n_{\mathrm{opt}}\) which yields the least theoretical MSE value of the estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) depending on the jump height \(h = \delta \cdot \sigma \) with \(K \in \{1, 3, 5\}\) jumps for \(N = 1000\) observations and normal distribution. We observe that \(n_{\mathrm{opt}}\) decreases for \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) as the jump height grows. Blocks of size 2 (resulting in a non-overlapping difference-based estimator) are preferred when \(h \approx 4\sigma \) and \(K = 5\), while larger blocks lead to better results in case of smaller or less jumps.

a MSE-optimal block length \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\), b MSE regarding \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) and c MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) for \(K = 1\) (solid line), \(K = 3\) (dashed line) and \(K=5\) (dotted line) with \(N=1000\), \(Y_t = X_t + \sum _{k = 1}^{K} h I_{t \ge t_k}\), where \(X_t \sim {N}(0, 1)\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\).

Panel (b) of Fig. 1 depicts the MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) for the respective MSE-optimal block size \(n_{\mathrm{opt}}\). In all three scenarios the MSE increases if the jump height gets larger. Different values for the optimal block size \(n_{\mathrm{opt}}\) are obtained in different scenarios, i.e., for different K and \(h = \delta \cdot \sigma \). As the true number and height of the jumps in the mean are usually not known in practice, we wish to choose a block size which yields good results in many scenarios. We do not consider very high jumps any further, since they can be detected easily and are thus not very interesting. The square root of the sample size N has proven to be a good choice for the block size in many applications, see e.g. Rooch et al. (2019). Moreover, in the upper panel of Fig. 1, we observe that smaller block sizes n are preferred when the number of change-points is high. If the estimation of the variance is in the focus of the application, we suggest to choose the block size depending on K:

which gets smaller if the number of jumps increases, resulting in many jump-free and only few contaminated blocks. Otherwise, if testing is of interest in view of Theorem 3, we suggest a block size which grows slightly faster than \(\sqrt{N}\), e.g. \(n = \max \left\{ \left\lfloor \frac{N^{6 / 10}}{K + 1}\right\rfloor , 2 \right\} \), which yields similar results as (8).

Remark 5

For large N we get \(m = N / n = \sqrt{N}(K+1)\). In this case the number of jumps needs to satisfy \(K = o\left( m^{1/3} \right) = o\left( N^{1/6} K^{1/3} \right) \), i.e., \(K = o\left( N^{1/4} \right) \) can be tolerated, see Remark 2. An even larger rate of shifts can be tolerated by choosing \(m = N / c\) for some constant c, i.e., a fixed block length n. However, this reduces the efficiency of the resulting estimator in the presence of a small rate of shifts, see Fig. 1. For example, for the standard normal distribution, the asymptotic relative efficiency of the blocks-estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is \((n - 1)n^{-1} < 1\) and that of the difference-based estimator [as defined in (7)] is 2/3 [see e.g. Seber and Lee (2012)].

Panel (c) of Fig. 1 shows the MSE of the estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) with the block size n chosen according to (8). For \(K \in \{3, 5\}\) there is only a moderate loss of performance when choosing n according to (8) instead of \(n_{\mathrm{opt}}\) which depends on the number of jumps K and the height \(h = \delta \cdot \sigma \). In case of \(K = 1\) change in the mean the performance of the blocks-estimator worsens slightly.

Table 1 shows the average MSE of the ordinary sample variance for normally distributed data and different values of K and h, with \(N = 1000\). Again, 1000 simulation runs are performed where jumps are added to the generated data at randomly chosen positions. We observe that the MSE becomes very large when the number and height of the level shifts increases. Obviously, the blocks-estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) performs much better than the sample variance.

The results for data from the \(t_5\)-distribution are similar to those obtained for the normal distribution, see Fig. 7 in “Appendix.” Again, the blocks-estimator with the block size (8) [Panel (c)] performs well and does not lose too much performance compared to Panel (b) of Fig. 7, where the optimal block size is considered. Similar results are obtained for \(N = 2500\), see Figs. 9 and 10 in “Appendix”.

As the number of change-points K is not known exactly in real applications, there are several possibilities to set K in formula (8):

-

1.

Use \(n = \max \left\{ \left\lfloor \frac{\sqrt{N}}{K + 1} \right\rfloor , 2 \right\} \) with a large guess on the value of K.

-

2.

Pre-estimate K with an appropriate procedure.

-

3.

Use prior knowledge about plausible values of K.

We will discuss the first two approaches in the following two subsections.

2.3 Using a large guess on the number of jumps

If many change-points are present, a small block size should be chosen, while larger blocks are preferred in the case of only a few level shifts. If a practitioner does not have knowledge about the number of jumps in the mean we recommend choosing a rather high value K in the formula (8), which results in small blocks. Doing so we are usually on the safe side, since choosing too few blocks can result in a very high MSE, while the performance of the estimator does not worsen so much when choosing many blocks.

As an example we generate 1000 time series of length \(N = 1000\) with \(K = 3\) jumps at random positions. Figure 2 shows the MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) depending on the jump height \(h = \delta \sigma ,\, \delta \in \{0, 0.1,\ldots ,5\},\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) with values \(K\in \{0, 1, \ldots , 6\}\). We observe that choosing a too small number of blocks (i.e., a too large block size) results in large MSE values if the jumps are rather high. On the other hand, the results do not worsen as much when choosing unnecessarily many and thus small blocks. Figure 8 in “Appendix” shows similar results for \(K = 5\) jumps.

Choosing \(n=2\) results in a non-overlapping difference-based estimator which performs also well but loses efficiency compared to the block-estimator with growing block size, which can be fully efficient, see Remarks 2 and 5.

We will not consider the block-estimator with the block size n depending on the choice of some large value of K instead of the true one in the following investigation, since it is a subjective choice of a practitioner.

MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) for true \(K = 3\) (—), \(K = 0\) ( ) \(K = 1\) (

) \(K = 1\) ( ) \(K = 2\) (

) \(K = 2\) ( ) \(K = 4\) (

) \(K = 4\) ( ) \(K = 5\) (

) \(K = 5\) ( ) and \(K = 6\) (

) and \(K = 6\) ( ) with \(N=1000\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\), \(Y_t = X_t + \sum _{k = 1}^{3} h I_{t \ge t_k}\), where a \(X_t \sim {N}(0, 1)\) and b \(X_t \sim t_3\)

) with \(N=1000\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\), \(Y_t = X_t + \sum _{k = 1}^{3} h I_{t \ge t_k}\), where a \(X_t \sim {N}(0, 1)\) and b \(X_t \sim t_3\)

2.4 Pre-estimation of the number of jumps

We will investigate the MOSUM procedure for the detection of multiple change-points proposed by Eichinger and Kirch (2018) to pre-estimate the number of change-points K. According to the simulations in the aforementioned paper, this procedure yields very good results in comparison with many other procedures for change-point estimation. We will describe the procedure briefly in the following.

At time point t a statistic \(T_{t, N}\) is calculated as

where \(G = G(N)\) is a bandwidth parameter and \(G\le t \le N - G\). In what follows, we will set the bandwidth parameter to \(G = \sqrt{N}\). The estimated number of change-points \(\widehat{K}\) is the number of pairs \((v_i, w_i)\) which fulfil

where \(D_N(G,\delta _N)\) is a critical value depending on the bandwidth parameter G and a sequence \(\delta _N\rightarrow 0\) and \(\widehat{\tau ^2}_{t, N}\) is a local estimator of the variance at location t. In a window of length 2G, the location t is treated as a possible change-point location. A mean correction is performed before and after the time point t for computing \(\widehat{\tau ^2}_{t, N}\).

The corresponding estimated change-point locations \(\widehat{t}_1, \ldots , \widehat{t}_{\widehat{K}}\) are

More information on the procedure can be found in Eichinger and Kirch (2018).

We will use the MOSUM procedure to estimate the number of change-points K in the formula (8) for the block size n. The corresponding blocks-estimator is denoted as \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\). In Sect. 4 we will see that the performance of the two blocks-estimators \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) [as defined in (2)] and \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) is similar in many cases.

Moreover, we introduce another estimation procedure which is fully based on the MOSUM method, for comparison. We divide the data into \(\widehat{K} + 1\) blocks at the estimated locations \(\widehat{t}_1, \ldots , \widehat{t}_{\widehat{K}}\) of the level shifts. In every block \(j = 1,\ldots ,\widehat{K} + 1,\) the empirical variance \(S_j^2\) is calculated. A weighted average \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) of those values can be computed to estimate the variance, i.e.,

where \(n_j\) is the size of block \(j = 1,\ldots ,\widehat{K}.\)

2.5 Extension to short-range dependent data

Since many real datasets exhibit autocorrelation, we investigate the averaging estimation procedure under dependence. We consider a strictly stationary linear process \((X_t)_{t\ge 1}\) with \(X_t = \sum _{i = 0}^\infty \psi _i \zeta _{t - i}\), where \(\zeta _{t}\) are i.i.d. with mean zero and finite variance and \((\psi _i)_{i\ge 0}\) is a sequence of constants with \( \sum _{i = 0}^\infty \psi _i<\infty \). The autocovariance function is defined as \(\gamma (\delta ) = E((X_t - E(X_t))(X_{t+\delta } - E(X_{t+\delta }))) ,\, \delta \in {\mathbb {N}}\), and is absolutely summable in this case, i.e., \(\sum _{\delta = 0}^{\infty } |\gamma (\delta )| < \infty \).

Theorem 6

Consider a strictly stationary linear process \((X_t)_{t\ge 1}\)with an absolutely summable autocovariance function and \(Y_t = X_t + \sum _{k = 1}^{K} h_k I_{t \ge t_k}\), i.e., data with K level shifts. Moreover, let \(K\left( \sum _{k = 1}^{K} h_k\right) ^2 = o(m\log (N)^{-1})\). Then \(\widehat{\sigma ^2}_{\mathrm{Mean}} = \frac{1}{m}\sum _{j = 1}^{m} S_j^2 \rightarrow \sigma ^2\)in probability.

Proof

Without loss of generality assume that the first \(B\le K\) out of m blocks are perturbed by K jumps in the mean. Furthermore, let \(\mu = E(X_{j,t})\, \forall j, t\), \(\mu _{j,t} = E\left( Y_{j, t}\right) \) and \(\overline{\mu }_{j} = \frac{1}{n} \sum _{t = 1}^n E\left( Y_{j, t}\right) \). Let the term \(S^2_{j, 0}\) denote the empirical variance of the uncontaminated data \(X_{j, 1},\ldots ,X_{j,n}\) in the block \(j = 1,\ldots ,m\), while \(S^2_{j, h}\) is the empirical variance in the perturbed block \(j = 1,\ldots ,B\).

Then

For the first term \(A_{1,N}\) of (10), we have:

The first term of (11) converges to zero, since the term \(\frac{1}{N}\sum _{j = 1}^{m } \sum _{t = 1}^n (X_{j, t} - \mu )^2\) is a consistent estimator of \(\sigma ^2\) and \(\frac{N}{m(n-1)} = \frac{N}{N - m}\rightarrow 1\). For the second term of (11) we have

due to absolute summability of the autocovariance function \(\gamma \), which implies convergence in probability. Therefore, the second term of (11) converges to zero. Altogether, the sum (11) converges to zero.

The second and the third terms \(A_{2,N}\) and \(A_{3,N}\) of (10) converge to zero due to the following considerations:

due to absolute summability of the autocovariance function \(\gamma \) and the condition \(K\left( \sum _{k = 1}^{K} h_k\right) ^2 = o(m\log (N)^{-1})\). Therefore, the term \(A_{2, N}\) converges to zero in rth mean with \(r = 2\), which implies convergence in probability. The argument in the probability \(A_{3,N}\) is deterministic. Set \(\epsilon _N = \log (N)^{-1}.\) For large N we have

since \(K\left( \sum _{k = 1}^{K} h_k\right) ^2 = o(m\log (N)^{-1})\) holds. Altogether we get the result \(\widehat{\sigma ^2}_{\mathrm{Mean}} \overset{p}{ \rightarrow } \sigma ^2\). \(\square \)

Remark 7

If the jump heights are bounded by a constant \(h\ge h_k,\, k = 1,\ldots ,K,\) the strongest restriction arises if all heights equal this upper bound resulting in the constraint \( K^3h^2 = o\left( m \log (N)^{-1} \right) \).

3 Trimmed estimator of the variance

So far we have considered cases where the number of changes in the mean K is rather small with respect to the number of the blocks m and thus to the number of observations N. However, there might be situations in which level shifts occur frequently. Asymptotically, the blocks-estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) [see (2)] is still a good choice for the estimation of the variance as long as the number of level shifts grows slowly, see e.g. Theorem 1. However, if many jumps are present in a finite sample the blocks-estimator is no longer a good choice and will become strongly biased. We propose an asymmetric trimmed mean of the blockwise estimates instead of their ordinary average, i.e., large estimates are removed and the average value of the remaining ones is calculated. We do not consider a symmetric trimmed mean, since the sample variance is positively biased in the presence of level shifts, so that estimates from blocks containing a level shift are expected to show up as upper outliers. Moreover, we suggest using rather many small blocks to account for potentially many level shifts. In the next Sects. 3.1 and 3.2, the choice of the trimming fraction is discussed.

3.1 Estimation with a fixed trimming fraction

The trimmed blocks-estimator is given as

where \(S^2_{\left( 1\right) }\le \cdots \le S^2_{\left( m\right) }\) are the ordered blockwise estimates, m is the number of blocks and \(C_{N, {\mathrm{Tr}},\alpha }\) is a sample and distribution dependent correction factor to ensure unbiasedness in the absence of level shifts. In practice this constant can be simulated under the assumption of observing data from a known location-scale family. For example, for standard normal distribution, \(\alpha = 0.2\), \(N = 1000\) and \(n = 20\) (\(m = 50\)) we generate 1000 samples of length \(N = 1000\) and calculate the average of the uncorrected trimmed variance estimates. The reciprocal of this average value yields \(C_{1000, {\mathrm{Tr}}, 0.2} = 1.198 \).

As an example, we generate 1000 time series of length \(N \in \{ 1000,2500\}\) from normal and \(t_5\)-distribution. We add \(K = N\cdot p \) jumps to the generated data at randomly chosen positions, as was done in Sect. 2.2, with \(p\in \{0, 2/1000, 4/1000, 6/1000, 10/1000\}\) and height \(h\in \{0, 2, 3, 5\}\). We choose \(n = 20\) to ensure that the number of jump-contaminated blocks is sufficiently smaller than the total number of blocks.

Table 2 shows the simulated MSE of the trimmed estimator (12) for \(\alpha \in \{0.1, 0.3,0.5\}\). Clearly, the performance of the trimmed estimator depends on the number of jumps in the mean and the trimming parameter \(\alpha \). Larger values of \(\alpha \) are required when dealing with many jumps but lead to an increased MSE if there are only a few jumps. Therefore, it is reasonable to choose \(\alpha \) adaptively, as will be described in the next Sect. 3.2.

3.2 Adaptive choice of the trimming fraction

Instead of using a fixed trimming fraction, we can choose \(\alpha \) adaptively, yielding the adaptive trimmed estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}\) with

where \(S^2_{\left( 1\right) }\le \cdots \le S^2_{\left( m\right) }\) are the ordered blockwise sample variances and \(\alpha _{\mathrm{adapt}}\) is the adaptively chosen percentage of the blocks-estimates which will be removed.

3.2.1 Estimation under normality

We use the approach for outlier detection discussed in Davies and Gather (1993) to determine \(\alpha _{\mathrm{adapt}}\), assuming that the underlying distribution is normal. In this case, the distribution of the sample variance is well known, i.e., in block j we have that

Since the true variance \(\sigma ^2\) is not known, we propose to replace \(\sigma ^2\) by an appropriate initial estimate, such as the median of the blocks-estimates, i.e.,

where \(C_{N,{\mathrm{Med}}}\) is a finite sample correction factor. Subsequently, we remove those values

which exceed \(q_{\chi ^2_{n-1}, 1 - \beta _m}\), the \((1 - \beta _m)\)-quantile of the \(\chi ^2_{n-1}\)-distribution, with

We will refer to the adaptively trimmed estimator based on the approach of Davies and Gather (1993) under normality as \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\).

The choice of \(\beta _m\) results in a probability of \(1-\beta \) that no observation (block in our case) is trimmed if \(T_1,\ldots ,T_m\) are i.i.d. \(\chi ^2_{n-1}\)-distributed, i.e.,

Furthermore, we expect that roughly \(m\cdot \beta _m\) blocks are trimmed on average in the absence of level shifts. The following simulations suggest that the adaptive trimming fraction is slightly larger than \(\beta _m\) which can be explained by the fact that we need to use an estimate such as \(\widehat{\sigma ^2}_{\mathrm{Med}} \) instead of the unknown \(\sigma ^2\). We generated 10,000 sequences of observations of size \(N \in \{1000, 2500\}\) for \(\beta \in \{0.05,\, 0.1\}\). Table 3 shows the average number of trimmed blocks in the absence of level shifts.

We suggest choosing a small block size, e.g. \(n = 20\), to cope with a possibly large number of change-points. In this way, it is ensured that the number of uncontaminated blocks is much larger than the number of perturbed blocks. Moreover, we choose \(\beta = 0.05 \).

The correction factor in (13) needs to be simulated taking into account that the percentage of the omitted block-estimates is no longer fixed. Therefore, for given N and \(\beta \) we generate 1000 sequences of observations. In each simulation run we calculate the block-estimates \(S_j^2,\, j=1,\ldots ,m,\) and the initial estimate of the variance \(\widehat{\sigma ^2}_{\mathrm{Med}} \). Subsequently, we remove the values \(\widehat{T}_j = (n-1)S^2_j / \widehat{\sigma ^2}_{\mathrm{Med}} \) which exceed the quantile \(q_{\chi ^2_{n-1}, 1 - \beta _{m}}\). Then the average value of the remaining block-estimates is computed. The procedure yields 1000 estimates. The correction factor is the reciprocal of the average of these values. For \(N = 1000\) and \(\beta = 0.05\) the simulated correction factor is \(C_{1000, {\mathrm{Tr,ad}}}^{0.05} = 1.0020\), while for \(N=2500\) we have \(C_{2500, {\mathrm{Tr,ad}}}^{0.05} = 1.0009 \), so both are nearly 1 and could be neglected with little loss.

3.2.2 Estimation under unknown distribution

When no distributional assumptions are made and the block size n is large, one can use that \(\sqrt{n}\left( S^2_j - \sigma ^2\right) / \sqrt{\nu _4-\sigma ^4}\) is approximately standard normal if the fourth moment exists.

The fourth central moment \(\nu _4 = E\left( \left( X_1 - E(X_1)\right) ^4\right) \) needs to be estimated properly in the presence of level shifts. We can estimate it in blocks and then compute the median of the blocks-estimates \(\widehat{\mu }_{4,{\mathrm{Med}}}\), as was done in (14). Then, values

which exceed the \((1 - \beta _m)\)-quantile of the standard normal distribution are removed. The corresponding adaptively trimmed estimator is denoted as \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\), where, again, \(\beta = 0.05 \) [see (16)] will be used in what follows.

Table 4 shows the average number of trimmed blocks in the absence of level shifts for normally distributed data, analogously to Table 3. We observe that the average number of trimmed block-estimates is much larger than the values for the trimming procedure which is based on the normality assumption.

Remark 8

We do not use distribution dependent correction factors for the estimators \(\widehat{\mu }_{4,{\mathrm{Med}}}\) and \(\widehat{\sigma ^2}_{\mathrm{Med}}\), since the underlying distribution is not known. The asymptotic distribution of the blockwise empirical variances is normal and therefore, for large block sizes, the distribution is expected to be roughly symmetric. A correction factor can then be neglected with little loss, since the sample median of symmetrically distributed random variables estimates their expected value.

3.3 Choice of the trimming fraction based on the MOSUM procedure

In Sect. 2.4 we have used the MOSUM procedure proposed by Eichinger and Kirch (2018) to estimate the unknown number of jumps K. The corresponding estimate \(\widehat{K}\) can also be used to determine the trimming fraction \(\alpha \) of the trimmed estimator in (12), i.e., we can set \(\alpha = \widehat{K} / m\). We will denote the corresponding estimator as \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{mos}}\). We compare the average number of trimmed blocks for the three estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{mos}}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) in a simulation study. We generate 1000 sequences of observations of length \(N = 1000\) for different values of K and h. To every sequence we add K jumps of height h at randomly chosen positions, as was done in Sect. 2.2. Table 5 shows the average number of trimmed blocks for data sampled from the standard normal distribution and the corresponding MSE in different scenarios. Moreover, the MSE of the averaging estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is displayed in the table for comparison.

We observe that \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{mos}}\) trims more blocks on average and yields a smaller MSE than \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) if the jumps are small. The MSE of the averaging estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is similar to that of \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{mos}}\) in that case. As opposed to this, the three trimmed estimators trim similarly many blocks on average if the jumps are large, with \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) leading to smaller MSE values than \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{mos}}\). This can be explained by the fact that the former methods base the decision whether to trim a block or not directly on the criterion whether the empirical variance in a block is unusual or not. The averaging estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is outperformed by the three trimmed estimators when dealing with large jumps.

For the heavy-tailed \(t_5\)-distribution, the results are found in Table 6. The MOSUM-based procedure yields slightly better results for large jump heights, i.e., \(h>2\), since the adaptively trimmed estimators overestimate the number of contaminated blocks in many cases. For small jumps, the adaptively trimmed procedures yield smaller MSE values. The three trimmed estimators perform better than \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) in every case.

The results for the data generated by the AR(1) model with \(\phi = 0.5\) are displayed in Table 7. The MOSUM-based procedure trims considerably more blocks than the adaptively trimmed estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) yielding higher MSE values. Moreover, the averaging estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) is outperformed by the adaptively trimmed estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) in every case.

We conclude that the estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) perform better when dealing with large jumps and normally distributed data. For small jumps less contaminated blocks are trimmed by the adaptively trimmed estimators resulting in a higher MSE values in many scenarios. In this case the MOSUM-based estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{mos}}\) yields the best results which are similar to those of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\). For heavy-tailed data, the MOSUM-based procedure is preferable under large jumps but is inferior to the other trimmed estimates if the jumps are small. When dealing with positively correlated processes the adaptively trimmed estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) perform best. Therefore, the choice of the most appropriate estimator depends on the knowledge about the underlying distribution and the height of the jumps.

In the simulation study in Sect. 4, we concentrate on the adaptively trimmed estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) since they yield very good results in many scenarios, and on the averaging estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) since it performs well when dealing with a few small jumps and independent data.

4 Simulations

In this section we compare the estimators \(\widehat{\sigma ^2}_{\mathrm{Mean} }\), \(\widehat{\sigma ^2}_{\mathrm{Diff} }\), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\) with the block size \(n= 20\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) with the block size \(n= 20\) and \(\beta = 0.05\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) with the block size \(n= 40\) and \(\beta = 0.05\), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) in different scenarios. We generate 1000 sequences of observations of length \(N\in \{200,1000,2500\}\) from the standard normal and the \(t_5\) distribution. We add K jumps of heights \(h \in \{0, 2, 3, 5, 8\}\) to the data at randomly chosen positions as was done in Sect. 2.2. K is chosen dependent on the number of observations, i.e., \(K = p\cdot N\) with \(p\in \{0, 2/1000, 4/1000, 6/1000, 10/1000\}\).

Table 8 shows the simulated MSE for the normal distribution. The estimators \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) and \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) yield similar results. We conclude that the estimation of the number of jumps K [required in the rule (8)] does not have a large effect on the estimator. The estimators \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) and \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) yield, the best results if the jump heights are not very large, i.e., \(h\le 2\). However, the MSE of taking the ordinary average is much larger than that of the other estimators if the jump heights are large. Large jumps result in large blockwise estimates, which have a strong impact on the ordinary average.

The trimmed estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) yields the best results among all methods considered here for normally distributed data if the jumps are rather high. When many small level shifts are present \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) outperforms \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\), although the latter makes use of the exact normality assumption. The estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) tends to remove more block-estimates than \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) in the absence of level shifts, see Tables 3 and 4. Therefore, we also expect that more blocks are trimmed away by \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) if level shifts are present, reducing the risk of including perturbed blocks in the trimmed estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\).

The trimmed estimator \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\) with a fixed trimming fraction also yields good results. However, this estimation procedure requires the knowledge of the underlying distribution to compute the finite sample correction factor, see Sect. 3.1. The difference-based estimator \(\widehat{\sigma ^2}_{\mathrm{Diff} }\) performs well as long as the jumps are moderately high.

Table 9 shows the results for the \(t_5\) distribution. The estimation procedures \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) yield the best results in this scenario.

In Table 10 the simulated MSE is presented when the data is generated from the autoregressive (AR) model with \(\phi = 0.5\), i.e., the data is positively correlated. The performance of the difference-based estimator worsens considerably then. This is due to the fact that this estimation procedure makes explicit use of the assumption of uncorrelatedness. While \(\widehat{\sigma ^2}_{\mathrm{Diff} }\) underestimates the true variance drastically (resulting in a high MSE value) when no changes in the mean are present, the performance seems to improve slightly when dealing with many high jumps. This can be explained by the fact that the positive bias, which arises from the jumps, compensates for the negative bias which arises from the (incorrect) assumption of uncorrelatedness. The blocks-estimator \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) exhibits a similar behaviour, since the block size is small when the number of jumps is high, while correlated data require large block sizes to ensure satisfying results. For dependent data the best results are obtained when using the adaptively trimmed estimators. Since the variance \(\sigma ^2\) is underestimated when the data are dependent, the values \(\widehat{T}_j\) in (15) and (17) get larger resulting in a higher trimming parameter \(\alpha _{\mathrm{adapt}}\). Therefore, more blocks are trimmed away ensuring that the perturbed ones are not involved in the calculation of the overall estimate.

Figure 3 shows the simulated MSE dependent on the AR-parameter \(\phi \in \{0.1,\ldots ,0.8\}\) in four different scenarios: no jumps, few small jumps, many small jumps, and many high jumps. We observe that the performance of all estimators \(\widehat{\sigma ^2}_{\mathrm{Mean} }\), \(\widehat{\sigma ^2}_{\mathrm{Diff} }\), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) worsens when the strength of the correlation (expressed by the parameter \(\phi \)) grows. When no changes in the mean are present [see Panel (a)] the estimators underestimate the variance more as \(\phi \) gets larger. On the other hand, large level shifts result in a positive bias of the estimators. Therefore, when many high jumps are present [Panel (d)] the results are better than in the case of many small jumps [Panel (c)]. This is more obvious for the estimators \(\widehat{\sigma ^2}_{\mathrm{Mean} }\), \(\widehat{\sigma ^2}_{\mathrm{Diff} }\) and \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\). The trimmed estimation procedures do not suffer much from the increasing strength of correlation in our example. The averaging approach \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) yields good results if the dependence is rather weak and if only few small jumps are present. The performance of the weighted average \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) worsens drastically when many high jumps are present. During this estimation procedure, the data are segregated into few blocks at estimated change-point locations. The approach is highly biased in the case of many and high level shifts if the number of change-points is underestimated. This is sometimes the case if two level shifts are not sufficiently far away from each other, see Eichinger and Kirch (2018).

Based on the simulation results we recommend using the averaging estimation procedure \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) if the data are not expected to be correlated or heavy-tailed and the jump heights are rather small. Otherwise, if either no information on the distribution, the dependence structure of the data and the jump heights is given or the jumps are expected to be rather large, the adaptively trimmed procedure \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) can be recommended.

Simulated MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) (—), \(\widehat{\sigma ^2}_{\mathrm{Diff} }\) (- - -), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) (\({\cdot \cdot \cdot }\)) , \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\) (\({{-} \cdot {-}}\)), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\) (– – – ), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) with bandwidth \(G = \sqrt{N}\) ( ) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) with bandwidth \(G = \sqrt{N}\) (

) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) with bandwidth \(G = \sqrt{N}\) ( ) for a \(K =0, h = 0\), b \(K = 2, h = 2 \), c \(K = 10, h = 2 \) and d \(K = 10, h = 8 \) with \(N=1000\), \(Y_t = X_t + \sum _{k = 1}^{K} h I_{t \ge t_k}\), where \(X_t \) originates from the AR(1)-process with parameter \(\phi \in \{0.1,0.2,\ldots ,0.8\}\)

) for a \(K =0, h = 0\), b \(K = 2, h = 2 \), c \(K = 10, h = 2 \) and d \(K = 10, h = 8 \) with \(N=1000\), \(Y_t = X_t + \sum _{k = 1}^{K} h I_{t \ge t_k}\), where \(X_t \) originates from the AR(1)-process with parameter \(\phi \in \{0.1,0.2,\ldots ,0.8\}\)

5 Application

In this section, we apply the blocks-approach to two datasets in order to estimate the variance.

5.1 Nile river flow data

The first dataset contains the widely discussed Nile river flow records in Aswan from 1871 to 1984, see e.g. Hassan (1981), Hipel and McLeod (1994), Syvitski and Saito (2007), among many others. We consider the \(N=114\) annual maxima of the average monthly discharge in \({\text {m}}^3 / {\text {s}}\), since these values are often assumed to be independent in hydrology. The maxima are determined from January to December. The flooding season is from July to September, see Hassan (1981). Figure 4 shows the annual maxima of the average monthly discharge of the Nile river for the years 1871–1984.

The construction of the two Aswan dams in 1902 and from 1960 to 1969 obviously caused changes in the river flow, see Hassan (1981) and Hipel and McLeod (1994). We used Levene’s test [see Section 12.4.2 in Fox (2015)] to check the three segments of the data (divided by the years 1902 and 1960) for equality of variances. The null hypothesis of equal variances was not rejected with a p value of \(p = 0.40\).

A Q–Q plot of the data indicates that the deviation from a normal distribution is not large, see Fig. 11 in “Appendix”. With \(\beta = 0.05\) and \(n=10\) (\(m = 11\) blocks) no blocks are trimmed away during the trimming procedures \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\). The ordinary sample variance of the entire data yields the value 3,243,866, see Table 11. For the blocks-estimator of the variance from (2) we choose the block size according to (8) with \(K = 2\) getting \(n = \lfloor \sqrt{114} / 3 \rfloor = 3\). All blockwise estimators examined in this paper yield much smaller variance estimates for the whole observation period, ranging from 2,075,819 to 2,684,368.

We conclude that the procedures \(\widehat{\sigma ^2}_{\mathrm{Mean} }\), \(\widehat{\sigma ^2}_{\mathrm{Diff} }\), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) perform better than the ordinary sample variance, since the estimated values on the whole dataset are similar to those for the period 1903–1960 in between the changes.

5.2 PAMONO data

In the second example, we use data obtained from the PAMONO (Plasmon Assisted Microscopy of Nano-Size Objects) biosensor, see Siedhoff et al. (2014). This technique is used for detection of small particles, e.g. viruses, in a sample fluid. For more details, see Siedhoff et al. (2011). PAMONO data sets are sequences of grayscale images. A particle adhesion causes a sustained local intensity change. This results in an obvious level shift in the time series of grayscale values for each corresponding pixel coordinate. To the best of our knowledge, a change of the variance after a jump in the mean is not expected to occur. A Q–Q plot of the data indicates that the assumption of a normal distribution is reasonable, see Fig. 12 in “Appendix”.

In Panel (a) of Fig. 5 we see a time series corresponding to one pixel which exhibits a virus adhesion, therefore revealing several level shifts in the mean of the time series. \(N = 1000\) observations are available. Panel (b) of Fig. 5 shows a boxplot of 101,070 values of the ordinary sample variance for time series which correspond to pixels without virus adhesion.

a Intensity over time for one pixel and b a boxplot of variances for the virus-free pixels together with the ordinary sample variance of the above data ( ) and values of \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) and \(\widehat{\sigma ^2}_{\mathrm{Diff} }\) (—), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\) (- - -), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) (\({\cdot \cdot \cdot \, \cdot }\)), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}} \) (- \({\cdot }\) -), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) (– – –) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) (– - –)

) and values of \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) and \(\widehat{\sigma ^2}_{\mathrm{Diff} }\) (—), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\) (- - -), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\) (\({\cdot \cdot \cdot \, \cdot }\)), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}} \) (- \({\cdot }\) -), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) (– – –) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) (– - –)

Since changes in the mean are not expected there, we use these data to get some insight into the typical value range of the variance. The sample variance of the contaminated data (upper panel) is \(1.59\times 10^{-4}\) which is not within the typical range of values, since it exceeds the upper whisker of the boxplot. The other estimation procedures discussed in this paper yield values within the interval \([1.1 \times 10^{-4},\, 1.2\times 10^{-4}]\) which are well within the interquartile range. We conclude that these approaches yield reasonable estimates for these data.

5.3 PAMONO data with trend

Again, we consider a PAMONO dataset, see Sect. 5.2. Panel (a) of Fig. 6 shows a time series corresponding to a pixel, which seems to exhibit a virus adhesion as well as a linear trend. Panel (b) of Fig. 6 shows the differenced data, i.e., \(Y_t - Y_{t-1},\, t = 2,\ldots ,388\).

The differences of first order appear to be independent and scattered around a fixed mean. Few large differences can be observed which presumably originate from the jumps in the mean at the corresponding time points. The existence of the trend could be explained by the fact that the surface, on which the fluid for virus adhesion is placed, was heated up over time. \(N = 388\) observations are available. We apply the estimation procedures \(\widehat{\sigma ^2}_{\mathrm{Mean} }\) [using \(K \in \{1,\ldots , 5\}\) in the formula (8)], \(\widehat{\sigma ^2}_{\mathrm{Diff} }\), \(\widehat{\sigma ^2}_{\mathrm{Tr}, 0.5}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}}\), \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}}\), \(\widehat{\sigma ^2}_{\mathrm{Mean} }^{\mathrm{mosum}}\) and \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}}\) to the data and get estimated values for the variance, which range from \(\widehat{\sigma ^2}_{\mathrm{Mean} } = 0.95 \times 10^{-6}\) (with \(K = 5\)) to \(\widehat{\sigma ^2}_{\mathrm{W} }^{\mathrm{mosum}} = 1.66 \times 10^{-6}\). The empirical variance of the observations has the value \(26.48 \times 10^{-6}\), which is much larger than the other estimates. According to our experience the PAMONO data can be assumed to be uncorrelated after differencing. The sample variance of differenced data is \(1.93 \times 10^{-6}\), which is an estimator of \(2\sigma ^2\), yielding the value \(0.97 \times 10^{-6}\) as an estimate for \(\sigma ^2\), which is near the estimated value of \(\widehat{\sigma ^2}_{\mathrm{Mean} }\).

We conclude that the proposed procedures yield reasonable results even in this situation, where a linear trend is present.

6 Conclusion

In the presence of level shifts, ordinary variance estimators like the empirical variance perform poorly. In this paper, we considered several estimation procedures in order to account for possible changes in the mean.

Estimation of \(\sigma ^2\) based on pairwise differences is popular in nonparametric regression and works well in the presence of level shifts and an unknown error distribution if the data are independent and the fraction of shifts is asymptotically negligible. However, we have identified scenarios where estimation based on longer blocks is to be preferred.

If only a few small level shifts are expected in a long sequence of observations our recommendation is to use the mean of the blocks-variances \(\widehat{\sigma ^2}_{\mathrm{Mean}}\). This estimation procedure does not require knowledge of the underlying distribution, performs well in the aforementioned situation and is asymptotically even as efficient as the ordinary sample variance if there are no level shifts.

If many or large level shifts are expected to occur we recommend using the adaptive trimmed estimators \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{norm}} \) and \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}} \). These procedures are constructed for independent data and use either the exact \(\chi ^2\)-distribution or the asymptotic normal distribution of the blockwise estimates, where the second and the fourth moments need to be estimated. We have found these trimming approaches to work reasonably well even under moderate autocorrelations, although many blocks are trimmed away then, presumably due to the underestimation of the unknown variance in the formula (17). Therefore, when no changes in the mean are present the trimmed estimators suffer efficiency loss. On the other hand, we expect that many perturbed blocks are trimmed away in the presence of level shifts reducing the bias of the estimator. The trimming approach could be extended to dependent data in future work.

In many applications we rather wish to estimate the standard deviation \(\sigma \), e.g. for standardization. If only few jumps of moderate heights are expected to occur, either the average value of the blockwise standard deviations or the square root of the blocks-variance estimator \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) can be used. Otherwise, the square root of the trimmed estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}^{\mathrm{other}} \) can be recommended. For a large sample size N the finite sample correction factors can be neglected with little loss, see “Appendix”.

An interesting extension will be to consider situations where not only the level but also the variability of the data can change. Suitable approaches for such scenarios might be constructed by combining the ideas discussed here with those presented by Wornowizki et al. (2017), where tests for changes in variability have been investigated using blockwise approaches, assuming a constant mean. This will be an issue for future work.

References

Angelova, J.A.: On moments of sample mean and variance. Int. J. Pure Appl. Math. 79(1), 67–85 (2012)

Dai, W., Tong, T.: Variance estimation in nonparametric regression with jump discontinuities. J. Appl. Stat. 41(3), 530–545 (2014)

Dai, W., Ma, Y., Tong, T., Zhu, L.: Difference-based variance estimation in nonparametric regression with repeated measurement data. J. Stat. Plan. Inference 163, 1–20 (2015)

Davies, L., Gather, U.: The identification of multiple outliers. J. Am. Stat. Assoc. 88(423), 782–792 (1993)

Dette, H., Munk, A., Wagner, T.: Estimating the variance in nonparametric regression—what is a reasonable choice? J. R. Stat. Soc. Ser. B (Stat. Methodol.) 60(4), 751–764 (1998)

Eichinger, B., Kirch, C., et al.: A mosum procedure for the estimation of multiple random change points. Bernoulli 24(1), 526–564 (2018)

Fox, J.: Applied Regression Analysis and Generalized Linear Models. Sage Publications, Thousand Oaks (2015)

Gasser, T., Sroka, L., Jennen-Steinmetz, C.: Residual variance and residual pattern in nonlinear regression. Biometrika 73(3), 625–633 (1986)

Hall, P., Kay, J., Titterinton, D.: Asymptotically optimal difference-based estimation of variance in nonparametric regression. Biometrika 77(3), 521–528 (1990)

Hassan, F.A.: Historical nile floods and their implications for climatic change. Science 212(4499), 1142–1145 (1981)

Hipel, K.W., McLeod, A.I.: Time Series Modelling of Water Resources and Environmental Systems, vol. 45. Elsevier, Amsterdam (1994)

Hu, T.C., Moricz, F., Taylor, R.: Strong laws of large numbers for arrays of rowwise independent random variables. Acta Math. Hung. 54(1–2), 153–162 (1989)

Miller, K.S.: Multidimensional Gaussian Distributions. Wiley, New York (1964)

Munk, A., Bissantz, N., Wagner, T., Freitag, G.: On difference-based variance estimation in nonparametric regression when the covariate is high dimensional. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 67(1), 19–41 (2005)

Olver, F.W., Lozier, D.W., Boisvert, R.F., Clark, C.W.: NIST Handbook of Mathematical Functions Hardback and CD-ROM. Cambridge University Press, Cambridge (2010)

R Core Team (2018) R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Rice, J., et al.: Bandwidth choice for nonparametric regression. Ann. Stat. 12(4), 1215–1230 (1984)

Rooch, A., Zelo, I., Fried, R.: Estimation methods for the lrd parameter under a change in the mean. Stat. Pap. 60(1), 313–347 (2019)

Seber, G.A., Lee, A.J.: Linear Regression Analysis, vol. 329. Wiley, New York (2012)

Serfling, R.: Approximation Theorems of Mathematical Statistics. Wiley, New York (1980)

Siedhoff, D., Weichert, F., Libuschewski, P., Timm, C.: Detection and classification of nano-objects in biosensor data. In: Proceedings of the International Workshop on Microscopic Image Analysis with Applications in Biology (MIAAB), p. 9 (2011)

Siedhoff, D., Zybin, A., Shpacovitch, V., Libuschewski, P.: Pamono sensor data 200nm\_10apr13.sfb876 (2014). https://doi.org/10.15467/e9ofqnvl6o. https://sfb876.tu-dortmund.de/auto?self=%24e45xtiwhs0

Syvitski, J.P., Saito, Y.: Morphodynamics of deltas under the influence of humans. Glob. Planet. Change 57(3–4), 261–282 (2007)

Tecuapetla-Gómez, I., Munk, A.: Autocovariance estimation in regression with a discontinuous signal and m-dependent errors: a difference-based approach. Scand. J. Stat. 44(2), 346–368 (2017)

Tong, T., Ma, Y., Wang, Y., et al.: Optimal variance estimation without estimating the mean function. Bernoulli 19(5A), 1839–1854 (2013)

Von Neumann, J., Kent, R., Bellinson, H., Bt, Hart: The mean square successive difference. Ann. Math. Stat. 12(2), 153–162 (1941)

Wang, W., Lin, L., Yu, L.: Optimal variance estimation based on lagged second-order difference in nonparametric regression. Comput. Stat. 32(3), 1047–1063 (2017)

Wornowizki, M., Fried, R., Meintanis, S.G.: Fourier methods for analyzing piecewise constant volatilities. AStA Adv. Stat. Anal. 101(3), 289–308 (2017)

Acknowledgements

Open Access funding provided by Projekt DEAL. This work has been supported by the Collaborative Research Center “Statistical modelling of nonlinear dynamic processes” (SFB 823) of the German Research Foundation (DFG), which is gratefully acknowledged. The authors would like to thank Sermad Abbas for providing the R+code to extract the PAMONO time series and the referees for their constructive comments which lead to substantial improvements.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 Blockwise estimation of the standard deviation

In many applications, we do not wish to estimate the variance \(\sigma ^2\) but rather the standard deviation \(\sigma \), e.g. for standardization.

1.1.1 Estimation by the blockwise average

We will consider the two blocks-estimators

where \(C_{N, 1}\) and \(C_{N, 2}\) are sample dependent correction factors to ensure unbiasedness when no changes in the mean are present. The block size n can be chosen according to the rule (8). If the number of change-points is not known in practice, it can be estimated as is done in Sect. 2.4.

For normally distributed data, the correction factors \(C_{N, 1}\) and \(C_{N, 2}\) can be determined analytically. To derive the correction factor \(C_{N, 1}\) for the estimator \(\widehat{\sigma }_{\mathrm{Mean}, 1}^{\mathrm{corr}},\) we will first consider the exact distribution of the empirical variance when dealing with jumps in the mean in order to derive the distribution of \( \widehat{\sigma }_{\mathrm{Mean}, 1} \) in (18). Given independent identically normally distributed data \(X_{j,1},\ldots ,X_{j,n}\) it is well known that \(\frac{(n - 1) S_j^2}{\sigma ^2} \sim \chi ^2_{n-1}\) in a jth jump-free block with n observations. The situation is different in the presence of jumps. Without loss of generality the following lemma is expressed in terms of the first block consisting of the observation times \(t=1,\ldots ,n\) and containing \(\widetilde{K}_1\le K\) jumps.

Lemma 9

Assume that \(X_{1},\ldots ,X_{n} \sim {\mathcal {N}}(0, \sigma ^2)\)and \(Y_{t} = X_t + \sum _{k = 1}^{\widetilde{K}_1} h_{k} I_{t \ge t_{k}} \)for \( t = 1,\ldots ,n\). Then we have for \(S_1^2 = \frac{1}{n-1} \sum _{t = 1}^n(Y_t - \overline{Y}_1)^2\)that

where \(\lambda _1 = \frac{1}{\sigma ^2}\sum _{t = 1}^{n} \left( \mu _{1,t} - \overline{\mu }_{1}\right) ^2,\, \mu _{1,t} = \sum _{k = 1}^{\widetilde{K}_1} h_{k} I_{t \ge t_{k}} \)and \(\overline{\mu }_{1} = \frac{1}{n}\sum _{t = 1}^{n}\sum _{k = 1}^{\widetilde{K}_1} h_{k} I_{t \ge t_k}\).

Proof

\(\overline{Y}_1 = \overline{X}_1 + \overline{\mu }_{1} \) and \(Y_{t} - \overline{Y}_1 = X_{t} - \overline{X}_1 - \overline{\mu }_{1} + \mu _{1, t},\, t = 1,\ldots ,n,\) are independent, since \(\overline{X}_1\) and \(X_{t} - \overline{X}_1\) are independent and the remaining terms are deterministic constants. Hence, \(S_1^2\) and \(\overline{Y}_1\) are independent. Furthermore,

with \(\sum _{t = 1}^n\left( \frac{Y_{ t} - \overline{\mu }_{1}}{\sigma }\right) ^2\sim \chi ^2_{n, \lambda _1} \), since \(Y_{ t}\, \forall t\) are independent and \( \frac{n}{\sigma ^2} \overline{X}_1^2 \sim \chi ^2_1\). The moment-generating function at \(z \in {\mathbb {R}}\) of both sides and the independence of \(S_1^2 \) and \(\overline{Y}_1\) yield:

In the following, we assume that \(B\le K\) blocks are contaminated by \(\widetilde{K}_1,\ldots ,\widetilde{K}_B\) jumps, respectively, with \(\sum _{k = 1}^B \widetilde{K}_k = K\). Without loss of generality assume that the jumps are contained in the first B blocks, while the last \(m-B>0\) blocks do not contain any jumps. The square root of a \(\chi ^2_{n-1, \lambda _j}\)-distributed random variable \((n - 1)S^2_j / \sigma ^2 \) is \(\chi \)-distributed with \(n-1\) degrees of freedom and non-centrality parameter \(\sqrt{\lambda _j}\), see e.g. Miller (1964). We hence have \(\sqrt{n - 1}S_j / \sigma \sim \chi _{n-1, \sqrt{\lambda _j}}\), \(j=1,\ldots ,m\), where \(\lambda _j=0\) for the last blocks \(j=B+1,\ldots ,m\), i.e., \(\sqrt{n-1} S_j / \sigma \sim \chi _{n-1}\). The expected value of \(S_j\) is given as

where \(F_{1, 1}(a,b,z)\) represents the generalized hypergeometric function, see Olver et al. (2010) for more details. When no changes in the mean are present we have that \(\lambda _j = 0\, \forall \, j\) and therefore \( F_{1, 1}(-\,0.5, 0.5 (n - 1), -\,0.5 \lambda _j) = 1\). The exact finite sample correction factor is given as

which is the reciprocal of the term \(C_{n, \lambda _j}\) in (20) when no level shifts are present, since \(F_{1, 1}(-\,0.5, 0.5 (n - 1), -\,0.5 \lambda _j) = 1\), \(j = 1,\ldots ,m\), in this case.

For the second estimator (19), we have the following statements on its expectation and a suitable finite sample correction factor:

We used the fact that \(\widehat{\sigma }_{\mathrm{Mean}, 2}\) follows a scaled \(\chi _{u, v}\) distribution with \(u = m(n-1)\) degrees of freedom and the non-centrality parameter \(v = \sqrt{\sum _{j = 1}^{B}\lambda _j}\), since \(\frac{n-1}{\sigma ^2}\sum _{j = B + 1}^{m} S_{j}^2\sim \chi ^2_{(m-B)(n-1)}\) and \(\frac{n-1}{\sigma ^2} \sum _{j = 1}^{B} S_{j}^2 \sim \chi ^2_{B(n-1),\sum _{j = 1}^{B}\lambda _j} \). Using this information we can determine the expectation of the estimator straightforwardly, see Miller (1964) and Olver et al. (2010). The correction factor is the reciprocal of \(D_{n, \lambda _{1},\ldots ,\lambda _B},\) where we have \( F_{1, 1}\left( -\,0.5, 0.5 m(n - 1), -\,0.5 \sum _{j = 1}^B\lambda _j \right) = 1\) in the absence of level shifts.

The following consistency statements are valid for the two introduced uncorrected estimators \(\widehat{\sigma }_{\mathrm{Mean}, 1} = \frac{1}{m}\sum _{j = 1}^{m} S_j\) [as defined in (18)] and \( \widehat{\sigma }_{\mathrm{Mean}, 2} = \sqrt{\frac{1}{m}\sum _{j = 1}^{m} S_j^2} \) [as defined in (19)] of \(\sigma \):

Corollary 10

Under the conditions of Theorem 1the estimators \( \widehat{\sigma }_{\mathrm{Mean}, 1}\)and \( \widehat{\sigma }_{\mathrm{Mean}, 2}\)converge almost surely to \(\sigma \), as \(N \rightarrow \infty \).

Proof

The strong consistency of \( \widehat{\sigma }_{\mathrm{Mean}, 2}\) follows immediately from the Continuous Mapping Theorem.

For \( \widehat{\sigma }_{\mathrm{Mean}, 1}\), we have due to Theorem 2 of Hu et al. (1989) that

since \(S_j - E\left( S_j\right) \) are uniformly bounded with \(P(|S_j - E\left( S_j\right) |>t)\rightarrow 0\,\forall t\) due to Chebyshev’s inequality and \({\hbox {Var}}(S_j) \rightarrow 0\).

Let \(S_{j, h}\) be the sample standard deviation in the perturbed block while \(S_{j, 0}\) is the estimate in the uncontaminated block. We have that

i.e., it suffices to show \(\frac{1}{m} \left( \sum _{j = B+1}^{m}E\left( S_{j, 0} \right) + \sum _{j = 1}^{B} E\left( S_{j, h} \right) \right) \rightarrow \sigma \). For the first of these two terms, we have

since the consistency and the decreasing variance of \(S_{1,0}\) implies convergence of the expectation, see Lemma 1.4A in Serfling (1980).

Using Jensen’s inequality we get for the second term

where \(\mu _{j,t}\) and \(\overline{\mu }_{j}\) are defined in the proof of Theorem 1.

Remark 11

The correction factors \(C_{N, 1}\) and \(C_{N, 2}\) from (18) and (19) satisfy

where \(C_{N, 1} = \sigma / E(\widehat{\sigma }_{\mathrm{Mean}, 1})\) and \(C_{N, 2} = \sigma / E(\widehat{\sigma }_{\mathrm{Mean}, 2})\) in the absence of level shifts.

This can be shown with Lemma 1.4A in Serfling (1980), since \(\widehat{\sigma }_{\mathrm{Mean}, 1}\) and \(\widehat{\sigma }_{\mathrm{Mean}, 2}\) are consistent estimators and their variances tend to zero

which implies convergence of the means and thus the above statement. Therefore, for large N and n we can neglect the correction factors and use the estimators \(\widehat{\sigma }_{\mathrm{Mean}, 1}\) and \(\widehat{\sigma }_{\mathrm{Mean}, 2}\) instead of \(\widehat{\sigma }_{\mathrm{Mean}, 1}^{\mathrm{corr}}\) and \(\widehat{\sigma }_{\mathrm{Mean}, 1}^{\mathrm{corr}}\) with block sizes \(n\rightarrow \infty \).

1.1.2 Trimmed estimation

When dealing with a large number of level shifts, as is discussed in Sect. 3, the square root of the variance estimator \(\widehat{\sigma ^2}_{\mathrm{Tr,ad}}\) from (13) can be used to estimate the standard deviation \(\sigma \). For large N and n, a correction factor to ensure unbiasedness when no changes in the mean are present can be neglected. Table 12 shows the simulated finite sample correction factors for normally and \(t_5\)-distributed data as well as for the stationary AR(1)-process with normal errors and parameter \(\phi \in \{0.3, 0.6\}\). We observe that the correction factors are nearly one except for strongly correlated data, i.e., AR-process with parameter \(\phi = 0.6\).

See Figs. 7, 8, 9, 10, 11 and 12.

a MSE-optimal block length \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\), b MSE regarding \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) and c MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) for \(K = 1\) (—), \(K = 3\) (- - -) and \(K=5\) (\({\cdot \cdot \cdot }\)) with \(N=1000\), \(Y_t = X_t + \sum _{k = 1}^{K} h I_{t \ge t_k}\), where \(X_t \sim t_5\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\)

MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) for true \(K = 5\) (—), \(K = 0\) ( ) \(K = 1\) (

) \(K = 1\) ( ) \(K = 2\) (

) \(K = 2\) ( ) \(K = 3\) (

) \(K = 3\) ( ) \(K = 4\) (

) \(K = 4\) ( ) and \(K = 6\) (

) and \(K = 6\) ( ) with \(N=1000\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\), \(Y_t = X_t + \sum _{k = 1}^{5} h I_{t \ge t_k}\), where a \(X_t \sim {N}(0, 1)\) and b \(X_t \sim t_3\)

) with \(N=1000\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\), \(Y_t = X_t + \sum _{k = 1}^{5} h I_{t \ge t_k}\), where a \(X_t \sim {N}(0, 1)\) and b \(X_t \sim t_3\)

a MSE-optimal block length \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\), b MSE regarding \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) and c MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) for \(K = 1\) (—), \(K = 3\) (- - -) and \(K=5\) (\({\cdot \cdot \cdot }\)) with \(N=2500\), \(Y_t = X_t + \sum _{k = 1}^{K} h I_{t \ge t_k}\), where \(X_t \sim {N}(0,1)\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\)

a MSE-optimal block length \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\), b MSE regarding \(n_{\mathrm{opt}}\) of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) and c MSE of \(\widehat{\sigma ^2}_{\mathrm{Mean}}\) when choosing \( n = \frac{\sqrt{N}}{K + 1}\) for \(K = 1\) (—), \(K = 3\) (- - -) and \(K=5\) (\({\cdot \cdot \cdot }\)) with \(N=2500\), \(Y_t = X_t + \sum _{k = 1}^{K} h I_{t \ge t_k}\), where \(X_t \sim t_5\) and \(h = \delta \cdot \sigma ,\, \delta \in \{0, 0.1, \ldots , 5\}\)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Axt, I., Fried, R. On variance estimation under shifts in the mean. AStA Adv Stat Anal 104, 417–457 (2020). https://doi.org/10.1007/s10182-020-00366-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10182-020-00366-5