Abstract

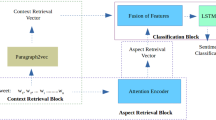

Different embeddings capture different linguistic aspects, such as syntactic, semantic, and contextual information. To account for these diverse facets, we propose a novel hybrid model that combines multiple embeddings through an attention encoder and feeds the result into an LSTM for sentiment classification. Our approach fuses Paragraph2vec, ELMo, and BERT embeddings to extract contextual information, while FastText is used to capture syntactic characteristics. These embeddings are then fused with the embeddings obtained from the attention encoder to form the final embeddings, and an LSTM model predicts the final classification. We conducted experiments on the Twitter Sentiment140 and Twitter US Airline Sentiment datasets, and compared our fusion model against established models such as LSTM, Bi-directional LSTM, BERT, and Att-Coder. The test results show that our approach outperforms the baseline models.
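To make the fusion step concrete, the following is a minimal sketch and not the authors' implementation. It assumes that fusion means concatenation, that every embedding source yields one vector per token, and that the attention encoder can be approximated by single-head scaled dot-product self-attention with identity projections. The function names, the toy dimensions, and the random stand-in vectors are all hypothetical; the LSTM classifier that would consume the final embeddings is left out.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def concat_fuse(*sources):
    """Fuse per-token vectors from several sources by concatenation (assumed fusion rule)."""
    n_tokens = len(sources[0])
    assert all(len(src) == n_tokens for src in sources), "sources must align token by token"
    return [sum((src[t] for src in sources), []) for t in range(n_tokens)]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (no learned weights)."""
    dim = len(tokens[0])
    attended = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # Each output vector is a weighted average of all token vectors.
        attended.append(
            [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        )
    return attended


def build_final_embeddings(contextual_sources, syntactic_source):
    """Fuse all sources, run attention, then fuse the inputs with the attention output."""
    fused = concat_fuse(*contextual_sources, syntactic_source)
    attended = self_attention(fused)
    return concat_fuse(fused, attended)


def random_embeddings(n_tokens, dim, rng):
    """Hypothetical stand-in for a pretrained embedding lookup."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n_tokens)]


rng = random.Random(0)
n_tokens = 4
paragraph2vec = random_embeddings(n_tokens, 3, rng)  # toy dimensions, not the real ones
elmo = random_embeddings(n_tokens, 5, rng)
bert = random_embeddings(n_tokens, 6, rng)
fasttext = random_embeddings(n_tokens, 2, rng)

final = build_final_embeddings([paragraph2vec, elmo, bert], fasttext)
print(len(final), len(final[0]))  # prints "4 32": 16 fused dims plus 16 attended dims
```

In a full model, the sequence in `final` would be passed to an LSTM whose last hidden state feeds a classification layer, and the attention projections would be learned rather than fixed to the identity.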

About this article

Cite this article

Soni, J., Mathur, K. Enhancing sentiment analysis via fusion of multiple embeddings using attention encoder with LSTM. Knowl Inf Syst (2024). https://doi.org/10.1007/s10115-024-02102-w

DOI: https://doi.org/10.1007/s10115-024-02102-w