Abstract

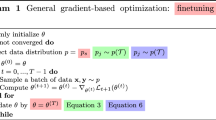

Optimization-based meta-learning aims to learn a meta-initialization that can adapt quickly to a new, unseen task within a few gradient updates. Model-Agnostic Meta-Learning (MAML) is a benchmark meta-learning algorithm comprising two optimization loops: the outer loop learns the meta-initialization, and the inner loop is dedicated to learning a new task quickly. The ANIL (Almost No Inner Loop) algorithm emphasized that adaptation to new tasks reuses the meta-initialization features instead of rapidly learning changes in representations, which obviates the need for rapid learning. In this work, we propose that, contrary to ANIL, learning new features may be needed during meta-testing. A new unseen task from a non-similar distribution would necessitate rapid learning in addition to the reuse and recombination of existing features. We invoke the width-depth duality of neural networks, wherein we increase the width of the network by introducing additional connection units (ACUs). The ACUs enable the learning of new atomic features in the meta-testing task, and the associated increased width facilitates information propagation in the forward pass. The newly learned features combine with existing features in the last layer for meta-learning. Experimental results confirm our observations. The proposed MAC method outperformed the existing ANIL algorithm for non-similar task distributions by \(\approx\) 12% (5-shot task setting).
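To make the contrast between feature reuse and feature learning concrete, the following is a minimal NumPy sketch of inner-loop (meta-test) adaptation only. It is not the authors' implementation: the single tanh layer, the mean-squared-error loss, the toy regression task, and the names `W_frozen`, `W_acu`, `head`, `adapt`, and `train_acu` are all assumptions made for illustration, and the outer loop that would produce the meta-initialization is omitted. With `train_acu=False` only the last layer is updated, loosely in the spirit of ANIL; with `train_acu=True` the extra-width weights are also updated, so new features can be learned and recombined with the reused ones in the last layer.

```python
import numpy as np

def features(x, W_frozen, W_acu):
    """Concatenate reused (frozen) features with features from the extra width."""
    reused = np.tanh(x @ W_frozen)  # meta-initialized features, kept fixed
    new = np.tanh(x @ W_acu)        # hypothetical ACU branch, trainable at meta-test
    return np.concatenate([reused, new], axis=1), new

def mse(pred, y):
    """Mean squared error as a plain Python float."""
    return float(np.mean((pred - y) ** 2))

def adapt(x, y, W_frozen, W_acu, head, lr=0.05, steps=10, train_acu=True):
    """Run a few inner-loop gradient steps; W_frozen is never updated."""
    W_acu, head = W_acu.copy(), head.copy()  # leave the caller's arrays untouched
    n, n_reused = x.shape[0], W_frozen.shape[1]
    for _ in range(steps):
        feats, new = features(x, W_frozen, W_acu)
        err = feats @ head - y                     # residuals, shape (n,)
        grad_head = (2.0 / n) * feats.T @ err      # gradient w.r.t. the last layer
        if train_acu:
            # Backpropagate through tanh into the extra-width weights only.
            head_new = head[n_reused:]
            delta = err[:, None] * head_new[None, :] * (1.0 - new ** 2)
            W_acu -= lr * (2.0 / n) * x.T @ delta
        head -= lr * grad_head
    return W_acu, head

# Toy 5-shot-style support set (assumed data, for illustration only).
rng = np.random.default_rng(0)
x_support = rng.normal(size=(5, 3))
y_support = x_support @ np.array([0.5, -1.0, 0.25])
W_frozen = rng.normal(size=(3, 4))       # stands in for meta-learned features
W_acu = 0.1 * rng.normal(size=(3, 2))    # two extra units of width
head = np.zeros(6)                       # last layer over reused + new features

W_acu_adapted, head_adapted = adapt(x_support, y_support, W_frozen, W_acu, head)
```

Comparing `mse(features(x_support, W_frozen, W_acu_adapted)[0] @ head_adapted, y_support)` against the same quantity obtained with `train_acu=False` gives a rough feel for what the extra trainable width contributes on a given task; this toy setup says nothing about the accuracy figures reported above.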

Data availability

The data are available on request.

Funding

No funds or grants were received from any organization.

Ethics declarations

Conflict of interest

To the best of their knowledge, the authors have no conflict of interest to declare.

About this article

Cite this article

Tiwari, S., Gogoi, M., Verma, S. et al. MAC: a meta-learning approach for feature learning and recombination. Pattern Anal Applic 27, 63 (2024). https://doi.org/10.1007/s10044-024-01271-2

DOI: https://doi.org/10.1007/s10044-024-01271-2