Abstract

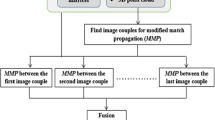

This paper describes a three-dimensional (3D) modeling method for sequentially and spatially understanding the situation in an unknown environment from an image sequence acquired by a camera. The proposed method divides the image sequence chronologically into sub-image sequences according to the number of images, generates a local 3D model from each sub-image sequence using Structure from Motion and Multi-View Stereo (SfM–MVS), and integrates the local models. The images in each sub-image sequence partially overlap with those of the previous and subsequent sub-image sequences. The local 3D models are integrated into a single 3D model using transformation parameters computed from the camera trajectories estimated by SfM–MVS. In our experiments, using three real data sets acquired by a camera, we quantitatively compared the integrated models with a 3D model generated from all images in a batch, in terms of both model quality and the computational time needed to obtain the models. The results show that the proposed method can generate an integrated model whose quality is comparable to that of the 3D model generated from all images in a batch by SfM–MVS, while reducing the computational time.
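The two steps named in the abstract, overlapping division of the image sequence and integration through camera trajectories, can be illustrated with a minimal Python sketch. It rests on several assumptions that are not taken from the paper: the names `split_with_overlap` and `estimate_similarity` and the parameters `chunk_size` and `overlap` are hypothetical, and the transformation is estimated with a closed-form least-squares similarity alignment (Umeyama-style) of the camera positions that two local models share. This is a stand-in technique and may differ from how the authors compute their transformation parameters.

```python
import numpy as np


def split_with_overlap(num_images, chunk_size, overlap):
    """Return chronological (start, stop) index ranges covering range(num_images).

    Consecutive ranges share `overlap` images. Both chunk_size and overlap
    are hypothetical parameters; the abstract does not state their values.
    """
    if num_images < 1:
        raise ValueError("num_images must be at least 1")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    ranges = []
    start = 0
    while True:
        stop = min(start + chunk_size, num_images)
        ranges.append((start, stop))
        if stop == num_images:
            break
        start += step
    return ranges


def estimate_similarity(src, dst):
    """Least-squares scale s, rotation R, translation t with dst ~ s * R @ src + t.

    src and dst are (N, 3) arrays holding the positions of the SAME cameras
    (the overlapping images) expressed in two local model coordinate frames.
    Assumption: a closed-form Umeyama-style alignment, used for illustration.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    # Cross-covariance between the centered point sets (3 x 3).
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.ones(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0
    R = U @ np.diag(d) @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = (S * d).sum() / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t


def transform_points(points, s, R, t):
    """Map (N, 3) points from the source frame into the destination frame."""
    return s * np.asarray(points, dtype=float) @ R.T + t
```

In this sketch, each index range from `split_with_overlap` would be reconstructed separately, and the camera positions of the images shared by two consecutive ranges would be passed to `estimate_similarity`. The resulting parameters could then be applied with `transform_points` to bring one local point cloud into the frame of its neighbor. A scale term is included because monocular SfM reconstructions are defined only up to scale.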

Acknowledgements

We would like to thank Masatoshi Honda and Norihito Shirasaki from the Naraha Center for Remote Control Technology Development of Japan Atomic Energy Agency for helping us measure the camera pose. We appreciate their kindness. This work was supported by the Nuclear Energy Science & Technology and Human Resource Development Project (through concentrating wisdom) from the Japan Atomic Energy Agency/Collaborative Laboratories for Advanced Decommissioning Science.

About this article

Cite this article

Matsumoto, T., Hanari, T., Kawabata, K. et al. Estimated camera trajectory-based integration among local 3D models sequentially generated from image sequences by SfM–MVS. Artif Life Robotics (2024). https://doi.org/10.1007/s10015-024-00949-4

DOI: https://doi.org/10.1007/s10015-024-00949-4