Abstract

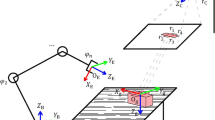

In recent years, many studies have used Convolutional Neural Networks (CNNs) to automate robotic bin picking. In these studies, every type of object in the bin was used as training data to optimize the CNN parameters. Consequently, when the total amount of training data is fixed, the amount available for each object type shrinks as the number of object types in the bin grows, and the CNN is not sufficiently optimized. In this study, we propose a CNN learning method for bin picking of multiple types of objects. Unlike previous learning methods that use multiple types of objects, we obtain training data from a single type of object with a well-designed shape. In the conventional approach, training on a given object advances learning for that object very well, but it contributes little to learning for other types of objects. In contrast, we expect the CNN to learn many types of grasping methods simultaneously from the training data of the single well-designed object. In that case, the amount of training data that is effective for each type of object in the bin is retained even as the number of object types increases. To verify this idea, we constructed 12 CNN models, each trained on a different type of object. Using these models, we performed bin picking of multiple types of objects in simulations and in robot experiments. The training method that uses a complex-shaped object achieved a higher grasping success rate than the training method that uses a primitive-shaped object. Moreover, it achieved a higher grasping success rate than the previous training method, which uses all types of objects in the bin.
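The data-budget argument in the abstract can be illustrated with a small arithmetic sketch. The total of 10,000 samples, the even split across object types, and the function name `samples_per_type` are all assumptions made for illustration; none of them come from the paper.

```python
def samples_per_type(total_samples: int, num_types: int) -> int:
    """Samples available per object type when a fixed budget is split evenly.

    The even split is an illustrative assumption, not the paper's protocol.
    """
    if num_types < 1:
        raise ValueError("num_types must be at least 1")
    return total_samples // num_types


# Hypothetical fixed training budget (not a figure from the paper).
TOTAL_SAMPLES = 10_000

# Conventional training: the budget is divided among all object types in the bin.
for num_types in (1, 2, 5, 10):
    per_type = samples_per_type(TOTAL_SAMPLES, num_types)
    print(f"{num_types:2d} object types: {per_type} samples per type")

# Single-object training: the whole budget goes to one well-designed object,
# regardless of how many object types the bin contains at picking time.
print(f"single training object: {samples_per_type(TOTAL_SAMPLES, 1)} samples")
```

Under these assumptions, the per-type share falls in proportion to the number of object types, whereas the single-object budget stays constant. Whether that single object transfers to the other shapes is the question the experiments address.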

Data availability

The data that support the findings of this study are available from the corresponding author, TH, upon reasonable request.

Acknowledgements

We would like to acknowledge the late Professor Mitsuru Jindai of the University of Toyama for his contribution to the development of the study design.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Bungo, I., Hayakawa, T. & Yasuda, T. Investigation of a single object shape for efficient learning in bin picking of multiple types of objects. Artif Life Robotics (2024). https://doi.org/10.1007/s10015-024-00945-8

DOI: https://doi.org/10.1007/s10015-024-00945-8