Abstract

Functional portfolio generation, initiated by E.R. Fernholz almost 20 years ago, is a methodology for constructing trading strategies with controlled behavior. It is based on very weak and descriptive assumptions on the covariation structure of the underlying market, and needs no estimation of model parameters. In this paper, the corresponding generating functions \(G\) are interpreted as Lyapunov functions for the vector process \(\mu \) of relative market weights; that is, via the property that the process \(G (\mu )\) is a supermartingale under an appropriate change of measure. This point of view unifies, generalizes, and simplifies many existing results, and allows the formulation of conditions under which it is possible to outperform the market portfolio over appropriate time horizons. From a probabilistic point of view, the approach offered here yields results concerning the interplay of stochastic discount factors and concave transformations of semimartingales on compact domains.


1 Introduction

Almost 20 years ago, E.R. Fernholz [8] introduced a portfolio construction that was both remarkable and remarkably easy to establish. He showed that for a certain class of so-called “functionally generated” portfolios, it is possible to express the wealth these portfolios generate, discounted by (that is, denominated in terms of) the total market capitalization, solely in terms of the individual companies’ market weights – and to do so in a pathwise manner that does not involve stochastic integration. This fact can be proved by a somewhat determined application of Itô’s rule. Once the result is known, its proof becomes a moderate exercise in stochastic calculus.

The discovery paved the way for finding simple and very general structural conditions on large equity markets – that involve more than one stock, and typically thousands – under which it is possible strictly to outperform the market portfolio. Put a little differently, these are conditions under which strong relative arbitrage with respect to the market portfolio is possible, at least over sufficiently long time horizons. Fernholz [7–9] also showed how to implement this strong relative arbitrage, or “outperformance”, using portfolios that can be constructed solely in terms of observable quantities, and without any need for estimation or optimization. Pal and Wong [21] related functional generation to optimal transport in discrete time; and Schied et al. [27] developed a path-dependent version of the theory, based on pathwise functional stochastic calculus.

Although well known, celebrated and quite easy to prove, Fernholz’s construction has been viewed over the past 15 years as somewhat “mysterious.” In this paper, we hope to help make the result a bit more celebrated and a bit less mysterious, via an interpretation of portfolio-generating functions \(G\) as Lyapunov functions for the vector process \(\mu \) of relative market weights. Namely, via the property that \(G (\mu ) \) is a supermartingale under an appropriate change of measure; see Remark 3.4 for elaboration. We generalize this functional generation from portfolios to trading strategies which may involve short-selling, as well as to situations where some, but not all, of the market weights can vanish. Along the way, we simplify the underlying arguments considerably; we introduce the new notion of “additive functional generation” of strategies, and compare it to the “multiplicative” generation in [7–9]; and we answer an old question of [7, Problem 4.2.3], see also [21] in discrete time. Conditions for strong relative arbitrage with respect to the market over appropriate time horizons become extremely simple via this interpretation, as do the strategies that implement such relative arbitrage and the accompanying proofs that establish these results; see Theorems 5.1 and 5.2.

We have cast all our results in the framework of continuous semimartingales for the market weights; this seems to us a very good compromise between generality on the one hand, and conciseness and readability on the other. The reader will easily decide which of the results can be extended to general semimartingales, and which cannot.

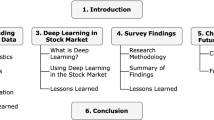

Here is an outline of the paper. Section 2 presents the market model and recalls the financial concepts of trading strategies, relative arbitrage, and deflators. Section 3 then introduces the notions of regular and Lyapunov functions. Section 4 discusses how such functions generate trading strategies, both “additively” and “multiplicatively”; and Sect. 5 uses these observations to formulate conditions guaranteeing the existence of relative arbitrage with respect to the market over sufficiently long time horizons. Section 6 contains several relevant examples for regular and Lyapunov functions and the corresponding generated strategies. Section 7 proves that concave functions satisfying certain additional assumptions are indeed Lyapunov, and provides counterexamples if those additional assumptions are not satisfied. Finally, Sect. 8 concludes.

2 The setup

2.1 Market model

On a given probability space \((\varOmega, \mathscr {F}, \mathbb {P})\) endowed with a right-continuous filtration \(\mathfrak {F} = ( \mathscr {F} (t) )_{ t \geq0}\) that satisfies \(\mathscr {F}(0) = \{ \emptyset, \varOmega\}\) mod. ℙ, we consider a vector process \({S} = {( S_{1} , \dots, S_{d} )}^{\prime}\) of continuous, nonnegative semimartingales with \(S_{1} (0)>0, \dots, S_{d} (0)>0\) and

$$ \varSigma(t) := S_{1}(t) + \cdots + S_{d}(t), \qquad t \ge 0. \tag{2.1} $$

We interpret these processes as the capitalizations of a fixed number \(d \ge2\) of companies in a frictionless equity market. A company’s capitalization \(S_{i} \) is allowed to vanish, but the total capitalization \(\varSigma \) of the equity market is not; to wit, we insist that

$$ \mathbb {P}\big[ \varSigma(t) > 0, \ \forall\, t \ge 0 \big] = 1. $$

Throughout this paper, we study trading strategies that only invest in these \(d\) assets, and we abstain from introducing a money market explicitly: the financial market of available investment opportunities is represented here by the \(d\)-dimensional continuous semimartingale \(S \).

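We next define the vector process \(\mu = ( \mu_{1} , \dots, \mu_{d} )'\) that consists of the various companies’ relative market weight processes

$$ \mu_{i}(t) := \frac{S_{i}(t)}{\varSigma(t)} = \frac{S_{i}(t)}{S_{1}(t) + \cdots + S_{d}(t)}, \qquad t \ge 0, \ i = 1, \dots, d. \tag{2.2} $$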

These are continuous, nonnegative semimartingales in their own right; each of them takes values in the unit interval \([0,1]\), and they satisfy \(\mu_{1} + \cdots+ \mu_{d} \equiv1\). In other words, the vector process \(\mu \) takes values in the lateral face \({ \varDelta }^{d}\) of the unit simplex in \(\mathbb {R}^{d}\). We are using throughout the notation

$$ { \varDelta }^{d} := \bigg\{ x \in [0,1]^{d} : \sum_{i=1}^{d} x_{i} = 1 \bigg\}, \qquad { \varDelta }^{d}_{+} := { \varDelta }^{d} \cap (0,1)^{d}, \tag{2.3} $$

and note that by assumption, \(\mu(0) \in{ \varDelta }^{d}_{+}\).

An important special case of the above setup arises when each semimartingale \(S_{i} \) is strictly positive; equivalently, when the process \(\mu \) takes values in \({ \varDelta }^{d}_{+}\), that is,

$$ \mathbb {P}\big[ \mu(t) \in { \varDelta }^{d}_{+}, \ \forall\, t \ge 0 \big] = 1. \tag{2.4} $$

2.2 Trading strategies

Let \(X = ( X_{1} , \dots, X_{d} )'\) denote a generic \([0,\infty)^{d}\)-valued continuous semimartingale. For the purposes of this section, \(X \) will stand either for the vector process \(S \) of capitalizations, or for the vector process \(\mu\) of market weights. We consider a predictable process \(\vartheta = ( \vartheta_{1} , \dots, \vartheta_{d} )' \) with values in \(\mathbb {R}^{d}\), and interpret \(\vartheta_{i} ( t)\) as the number of shares held at time \(t \geq0\) in the stock of company \(i=1, \dots, d\). Then the total value or “wealth” of this investment, in a market whose price processes are given by the vector process \(X \) and are not affected by such trading, is

$$ V^{\vartheta}(\cdot\, ; X) := \sum_{i=1}^{d} \vartheta_{i} X_{i}. \tag{2.5} $$

Definition 2.1

Suppose that the \(\mathbb {R}^{d}\)-valued, predictable process \(\vartheta \) is integrable with respect to the continuous semimartingale \(X \), and write \(\vartheta \in\mathscr {L} (X)\) to express this. We call \(\vartheta \in\mathscr {L} (X)\) a trading strategy with respect to \(X \) if it is “self-financed”, i.e., if

$$ V^{\vartheta}(\cdot\, ; X) = V^{\vartheta}(0 ; X) + \int_{0}^{\cdot} \sum_{i=1}^{d} \vartheta_{i}(t)\, \mathrm{d} X_{i}(t) $$

holds. We denote by \(\mathscr {T} (X)\) the collection of all such trading strategies.

The following result can be proved via a somewhat determined application of Itô’s rule. It formalizes the intuitive idea that the concept of trading strategy should not depend on the manner in which prices or capitalizations are quoted. We refer to [12, Proposition 1] or [14, Lemma 2.9] for a proof.

Proposition 2.2

An \(\mathbb {R}^{d}\)-valued process \(\vartheta = (\vartheta_{1} , \dots, \vartheta _{d} )'\) is a trading strategy with respect to the \(\mathbb {R}^{d}\)-valued semimartingale \(S \) if and only if it is a trading strategy with respect to the \(\mathbb {R}^{d}\)-valued semimartingale \(\mu\) given in (2.2). In particular, \(\mathscr {T} (S)= \mathscr {T} (\mu)\); and in this case, we have

$$ V^{\vartheta}(\cdot\, ; \mu) = \frac{V^{\vartheta}(\cdot\, ; S)}{\varSigma}. $$

Suppose we are given an element \(\vartheta = {( \vartheta_{1} , \dots, \vartheta_{d} )}^{\prime}\) in the space \(\mathscr {L} (\mu) \) of predictable processes which are integrable with respect to the continuous semimartingale \(\mu = ( \mu_{1} , \dots, \mu_{d} )' \) of (2.2). Let us consider for each \(T \in[0, \infty)\) the quantity

$$ Q^{\vartheta}(T ; \mu) := V^{\vartheta}(T ; \mu) - V^{\vartheta}(0 ; \mu) - \int_{0}^{T} \sum_{i=1}^{d} \vartheta_{i}(t)\, \mathrm{d} \mu_{i}(t), \tag{2.6} $$

which measures the “defect of self-financibility” of this process \(\vartheta \) relative to \(\mu \) over \([0,T]\). If \(Q^{\vartheta}(\cdot\, ; \mu) \equiv0\) does not hold, the process \(\vartheta \in\mathscr {L} (\mu)\) is not a trading strategy with respect to \(\mu \). How, then, can we modify it to turn it into a trading strategy? Our next result describes one way: it adjusts each component of \(\vartheta \) by the defect of self-financibility and by an arbitrary real constant; see also [14, Theorem 2.14].

Proposition 2.3

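For a process \(\vartheta \in\mathscr {L} (\mu)\), a constant \(C \in \mathbb {R}\) and with the notation of (2.6), we introduce the processes

$$ \varphi_{i} := \vartheta_{i} - Q^{\vartheta}(\cdot\, ; \mu) - C, \qquad i = 1, \dots, d. \tag{2.7} $$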

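The resulting \(\mathbb {R}^{d}\)-valued, predictable process \(\varphi = ( \varphi_{1} , \dots, \varphi_{d} )'\) is then a trading strategy with respect to the vector process \(\mu \) of market weights; to wit, \(\varphi \in\mathscr {T} (\mu)\). Moreover, the value process \(V^{\varphi}(\cdot\, ; \mu) = \sum_{i=1}^{d} \varphi_{i} \mu_{i} \) of this trading strategy satisfies

$$ V^{\varphi}(\cdot\, ; \mu) = V^{\vartheta}(0 ; \mu) - C + \int_{0}^{\cdot} \sum_{i=1}^{d} \vartheta_{i}(t)\, \mathrm{d} \mu_{i}(t) = V^{\varphi}(0 ; \mu) + \int_{0}^{\cdot} \sum_{i=1}^{d} \varphi_{i}(t)\, \mathrm{d} \mu_{i}(t). \tag{2.8} $$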

Proof

Consider first the vector process \(\widetilde{\vartheta}= ( \widetilde{\vartheta}_{1} , \dots, \widetilde{\vartheta}_{d} )'\) with components given by \(\widetilde{\vartheta}_{i} = - C -Q^{\vartheta}(\cdot\, ; \mu)\) for each \(i = 1, \dots, d\). Then this \(\widetilde{\vartheta}\) is predictable, since both \(V^{\vartheta}(\cdot\, ; \mu)\) and \(\int_{0}^{ \cdot} \sum_{i=1}^{d} \vartheta_{i} ( t) \mathrm{d} \mu_{i} (t)\) are. Moreover, Lemma 4.13 in [28] yields \(\widetilde{\vartheta}\in \mathscr {L} (\mu)\); thus, \(\varphi = \vartheta +\widetilde{ \vartheta} \in\mathscr {L} (\mu)\). Furthermore, \(\int_{0}^{\cdot}\sum_{i=1}^{d} \widetilde{ \vartheta}_{i} ( t) \mathrm{d} \mu _{i} (t) \equiv0\) holds thanks to \(\sum_{i=1}^{d} \mu_{i} \equiv1\), and therefore so does

$$ \int_{0}^{\cdot} \sum_{i=1}^{d} \varphi_{i}(t)\, \mathrm{d} \mu_{i}(t) \equiv \int_{0}^{\cdot} \sum_{i=1}^{d} \vartheta_{i}(t)\, \mathrm{d} \mu_{i}(t). \tag{2.9} $$

Now the first equality in (2.8) is a simple consequence of (2.7), (2.5) and (2.6). We also have \(\varphi_{i} (0) = \vartheta_{i} (0) - C\) for each \(i=1, \dots , d\), hence \(V^{\varphi}(0 ; \mu ) =V^{\vartheta}(0 ; \mu) - C\). This equality, in conjunction with (2.9), yields the second equality in (2.8). In particular, \(\varphi \) is indeed a trading strategy. □
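The mechanism behind Proposition 2.3 has an exact discrete-time counterpart: since \(\sum_{i} \mu_{i} \equiv 1\), the increments of \(\mu\) sum to zero, so shifting every component of \(\vartheta\) by the same scalar leaves the gains unchanged. The sketch below illustrates this under assumptions that are not in the text: time runs on a grid \(t_{0} < t_{1} < \cdots\), row `theta[k]` is the holding over \((t_{k-1}, t_{k}]\) (fixed at \(t_{k-1}\)), and the stochastic integral is replaced by a running sum. The function name `make_self_financing` and the simulated capitalizations are hypothetical.

```python
import numpy as np


def make_self_financing(theta, mu, C):
    """Discrete-time analogue of Proposition 2.3 (illustrative sketch only).

    Assumed convention: row theta[k] is the holding over (t_{k-1}, t_k], fixed at
    t_{k-1}; the value at t_k is theta[k] @ mu[k], and the stochastic integral is
    replaced by the running sum of theta[k] @ (mu[k] - mu[k-1]).
    """
    theta = np.asarray(theta, dtype=float)
    mu = np.asarray(mu, dtype=float)
    value = np.einsum("ki,ki->k", theta, mu)                  # V^theta(t_k; mu), cf. (2.5)
    step_gains = np.einsum("ki,ki->k", theta[1:], np.diff(mu, axis=0))
    gains = np.concatenate(([0.0], np.cumsum(step_gains)))    # discrete integral of theta d mu
    Q = value - value[0] - gains                              # defect of self-financibility, cf. (2.6)
    phi = theta - Q[:, None] - C                              # same scalar shift for all assets, cf. (2.7)
    return phi, Q


rng = np.random.default_rng(0)
S = np.exp(np.cumsum(rng.normal(0.0, 0.02, size=(50, 3)), axis=0))  # hypothetical capitalizations
mu = S / S.sum(axis=1, keepdims=True)                                # market weights, cf. (2.2)
theta = rng.normal(size=(50, 3))                                     # arbitrary, not self-financed
phi, Q = make_self_financing(theta, mu, C=0.3)
```

In this discrete setting, self-financibility means that rebalancing at \(t_{k-1}\) is costless, i.e., `phi[k] @ mu[k-1]` equals `phi[k-1] @ mu[k-1]`; the correction by `Q` achieves exactly this.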

2.3 Relative arbitrage with respect to the market

Let us fix a real number \(T > 0\). We say that a given trading strategy \(\varphi \in \mathscr {T} (S)\) is relative arbitrage with respect to the market over the time horizon \([0,T]\) if we have

$$ V^{\varphi}(\cdot\, ; S) \ge 0, \qquad V^{\varphi}(0 ; S) = \varSigma(0), \tag{2.10} $$

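in the notation of (2.1), along with

$$ \mathbb {P}\big[ V^{\varphi}(T ; S) \ge \varSigma(T) \big] = 1, \qquad \mathbb {P}\big[ V^{\varphi}(T ; S) > \varSigma(T) \big] > 0. \tag{2.11} $$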

Whenever a given trading strategy \(\varphi \in \mathscr {T} (S )\) satisfies these conditions, and if the second probability in (2.11) is not just positive but actually equal to 1, that is, if

$$ \mathbb {P}\big[ V^{\varphi}(T ; S) > \varSigma(T) \big] = 1, \tag{2.12} $$

we say that this \(\varphi \) is strong relative arbitrage with respect to the market over the time horizon \([0,T]\).

Remark 2.4

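It follows from Proposition 2.2 that the above requirements (2.10)–(2.12) can be cast, respectively, as

$$ V^{\varphi}(\cdot\, ; \mu) \ge 0, \qquad V^{\varphi}(0 ; \mu) = 1, $$

$$ \mathbb {P}\big[ V^{\varphi}(T ; \mu) \ge 1 \big] = 1, \qquad \mathbb {P}\big[ V^{\varphi}(T ; \mu) > 1 \big] > 0, $$

and \(\mathbb {P}[ V^{\varphi}(T; \mu) > 1 ] =1\).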

2.4 Deflators

For some of our results, we need the notion of deflator for the vector process \(\mu \) of market weights in (2.2). This is a strictly positive and adapted process \(Z \) with RCLL paths and \(Z(0)=1\) for which

$$ Z \mu_{i} \ \text{ is a local martingale, for each } i = 1, \dots, d; \tag{2.13} $$

thus \(Z \) is also a local martingale itself. An apparently stronger condition is that

Whenever a deflator for \(\mu\) exists, there exists also a continuous deflator; see Proposition 3.2 in [17]. Hence, in what follows we may (and do) assume that the process \(Z \) is continuous.

Proposition 2.5

The conditions in (2.13) and (2.14) are equivalent.

Proof

Let us suppose (2.13) holds; then \(Z \) is a local martingale, so there exists a nondecreasing sequence \((\tau _{n})_{n \in \mathbb {N}}\) of stopping times with \(\lim_{n \uparrow\infty} \tau _{n} = \infty\) and the property that \(Z( \cdot\wedge\tau_{n})\) is a uniformly integrable martingale for each \(n\in \mathbb {N}\). The recipe \(\mathbb {Q}_{n} (A)= \mathbb {E}^{\mathbb {P}}[ Z(\tau_{n}) \mathbf{ 1}_{A}]\) for each \(A\in \mathscr {F} (\tau_{n})\) defines a probability measure on \(\mathscr {F} (\tau_{n})\), under which \(\mu_{i} ( \cdot\wedge\tau_{n})\) is a martingale, for each \(i=1, \dots, d\) and \(n \in \mathbb {N}\). Consequently, the “stopped” version \(\int_{0}^{ \cdot\wedge\tau_{n}} \sum _{i=1}^{d} \vartheta_{i} ( t) \mathrm{d} \mu_{i} (t)\) of the stochastic integral as in (2.14) is also a \(\mathbb {Q}_{n}\)-local martingale for each \(n \in \mathbb {N}\); therefore, each product \(Z( \cdot\wedge\tau _{n})\int_{0}^{ \cdot\wedge\tau_{n}} \sum_{i=1}^{d} \vartheta_{i} ( t) \mathrm {d} \mu_{i} (t)\) is a ℙ-local martingale, and the property of (2.14) follows. The reverse implication is trivial. □

Remark 2.6

If a deflator \(Z \) exists and is a martingale, then for any \(T>0\), we can define a probability measure on \(\mathscr {F} (T) \) via \(\mathbb {Q}_{T} (A)= \mathbb {E}^{\mathbb {P}}[ Z(T) \mathbf{ 1}_{A}]\), \(A\in \mathscr {F} (T)\). Under this measure, the market weights \(\mu_{i} ( \cdot \wedge T), i=1, \dots , d\), are local martingales, thus actual martingales, as they take values in [0,1].

Now let us introduce the stopping times

Whenever a deflator for the vector \(\mu \) of market weights exists, each continuous process \(Z \mu_{i} \), being nonnegative and a local martingale, is a supermartingale. From this and from the strict positivity of \(Z\), we have then

Thus, the vector process \(\mu \) of market weights starts life at a point \(\mu(0) \in{ \varDelta }^{d}_{+}\). It may then – that is, when a deflator exists – begin a “descent” into simplices of successively lower dimensions, possibly all the way up until the time the entire market capitalization concentrates in just one company, to wit,

3 Regular and Lyapunov functions for semimartingales

For a generic \(d\)-dimensional semimartingale \(X \) with continuous paths, \(\mathrm{supp\,}( X)\) denotes the support of \(X \), that is, the smallest closed set \(\mathfrak {S} \subset \mathbb {R}^{d}\) with the property

$$ \mathbb {P}\big[ X(t) \in \mathfrak {S}, \ \forall\, t \ge 0 \big] = 1. $$

The process \(\mu\) of market weights in (2.2) always satisfies \(\mathrm{supp\,} ( \mu)\subset \varDelta ^{d}\), with the notation in (2.3).

Definition 3.1

We say that a continuous function \(G : \mathrm{supp\,} (X ) \rightarrow \mathbb {R}\) is regular for the \(d\)-dimensional continuous semimartingale \(X\) if

(i) there exists a measurable function \(DG = {( D_{1} G, \dots, D_{d} G)}^{\prime}: \mathrm{supp\,} (X) \rightarrow \mathbb {R}^{d}\) such that the process \({ \vartheta} ={( { \vartheta}_{1} , \dots, { \vartheta }_{d} )}^{\prime}\) with components

$$ { \vartheta}_{i} ( t) := D_{i} G \big( X ( t) \big), \qquad i=1, \dots, d, \ t \ge0, \tag{3.1} $$

is in \(\mathscr {L} (X)\), and

(ii) the continuous, adapted process

$$ \varGamma^{G} (T):= G \big( X (0) \big) - G \big( X (T) \big) + \int_{0}^{ T} \sum_{i=1}^{d} \vartheta_{i} (t) \mathrm{d} X_{i} (t) , \qquad T \ge0, \tag{3.2} $$

has finite variation on compact intervals.

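Given a regular function \(G : \mathrm{supp\,} (X )\rightarrow \mathbb {R}\) for \(X \), the processes \({ \vartheta}_{i} \), \(i = 1, \dots, d\), of (3.1) provide the components of the “gradient” term in the Taylor expansion

$$ G\big( X(T) \big) = G\big( X(0) \big) + \int_{0}^{T} \sum_{i=1}^{d} \vartheta_{i}(t)\, \mathrm{d} X_{i}(t) - \varGamma^{G}(T), \qquad T \ge 0, \tag{3.3} $$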

for the process \(G(X )\) in the manner of (3.2), and the finite-variation process \(- \varGamma^{G} \) provides the “second-order” term of this expansion.

Definition 3.2

Consider as above a \(d\)-dimensional semimartingale \(X \) with continuous paths, as well as a regular function \(G : \mathrm{supp\,} (X )\rightarrow \mathbb {R}\). We say that the function \(G\) is balanced for the \(d\)-dimensional semimartingale \(X\) if

$$ G(x) = \sum_{i=1}^{d} x_{i}\, D_{i} G(x) \quad \text{holds for all } x \in \mathrm{supp\,}(X). $$
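Whether a given pair \((G, DG)\) is balanced can be checked pointwise by evaluating the identity \(G(x) = \sum_{i} x_{i} D_{i} G(x)\). The sketch below does this for two generating functions chosen only for illustration (they are assumptions, not taken from this section): the geometric mean \(G(x) = (x_{1} \cdots x_{d})^{1/d}\), which is homogeneous of degree one and hence balanced on \({ \varDelta }^{d}_{+}\) by Euler's identity, and the quadratic \(G(x) = 1 - \sum_{i} x_{i}^{2}\), which is not. All function names are hypothetical.

```python
import numpy as np


def defect_of_balance(G, DG, x):
    """Return sum_i x_i D_i G(x) - G(x); G is balanced when this vanishes on supp(X)."""
    x = np.asarray(x, dtype=float)
    return float(x @ DG(x) - G(x))


# Illustrative generating functions (assumptions, not taken from the text).
def geometric_mean(x):
    x = np.asarray(x, dtype=float)
    return float(np.prod(x) ** (1.0 / x.size))


def geometric_mean_grad(x):  # valid only for strictly positive x
    x = np.asarray(x, dtype=float)
    return geometric_mean(x) / (x.size * x)


def quadratic(x):
    return float(1.0 - np.sum(np.square(x)))


def quadratic_grad(x):
    return -2.0 * np.asarray(x, dtype=float)


x = np.array([0.5, 0.3, 0.2])
print(defect_of_balance(geometric_mean, geometric_mean_grad, x))  # zero up to rounding
print(defect_of_balance(quadratic, quadratic_grad, x))            # -(1 + sum_i x_i^2), about -1.38
```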

Definition 3.3

We say that a regular function \(G\) as in Definition 3.1 is a Lyapunov function for the \(d\)-dimensional semimartingale \(X \) if for some function \(DG\) as in Definition 3.1, the finite-variation process \(\varGamma^{G} \) of (3.2) is actually nondecreasing.

For instance, suppose \(G\) is a Lyapunov function as in Definition 3.3 and the process \({ \vartheta} \) in (3.1) is locally orthogonal to \(X \) in the sense that \(\int_{0}^{ \, \cdot} \sum _{i=1}^{d} \vartheta_{i} (t) \mathrm{d} X_{i} (t) \equiv0\). It follows then from (3.2) that the process \(G ( X ) = G ( X (0) ) - \varGamma^{G} \) in (3.3) is nonincreasing, so \(G\) is a Lyapunov function in the “classical” sense.

Remark 3.4

Suppose there exists a probability measure ℚ under which the market weights \(\mu_{1} , \dots, \mu_{d} \) are (local) martingales. Then for any function \(G : \mathrm{supp\,} (\mu)\rightarrow \mathbb {R}\) which is regular for \(\mu \), we see from (3.2) with \(X = \mu\) that the continuous process

$$ G(\mu) + \varGamma^{G} = G\big( \mu(0) \big) + \int_{0}^{\cdot} \sum_{i=1}^{d} D_{i} G\big( \mu(t) \big)\, \mathrm{d} \mu_{i}(t) $$

is a ℚ-local martingale. If, furthermore, this \(G\) is actually a Lyapunov function for \(\mu\), then it follows that the process \(G ( \mu )\) is a ℚ-local supermartingale – thus in fact a ℚ-supermartingale, as it is bounded from below due to the continuity of \(G\) on the compact set \(\mathrm{supp\,}(\mu) \subset \varDelta^{d}\).

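A bit more generally, let us assume that there exists a deflator \(Z \) for the market weight process \(\mu\). From Proposition 2.5, the product \(Z \int_{0}^{\cdot}\sum_{i=1}^{d} D_{i} G ( \mu( t) ) \mathrm{d} \mu_{i} (t) \) is a ℙ-local martingale. If now \(G \) is a nonnegative Lyapunov function for \(\mu\), integration by parts shows that the process \(Z\, G(\mu)\), which admits the decomposition

$$ Z\, G(\mu) = Z \bigg( G\big( \mu(0) \big) + \int_{0}^{\cdot} \sum_{i=1}^{d} D_{i} G\big( \mu(t) \big)\, \mathrm{d} \mu_{i}(t) \bigg) - \int_{0}^{\cdot} \varGamma^{G}(t)\, \mathrm{d} Z(t) - \int_{0}^{\cdot} Z(t)\, \mathrm{d} \varGamma^{G}(t) $$

into a ℙ-local martingale (the first two terms) minus a nondecreasing process (the last term),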

is a ℙ-local supermartingale, thus also a ℙ-supermartingale as it is nonnegative.

The process \(\varGamma^{G} \) in (3.2) might depend on the choice of \(DG\). For example, consider the situation where each component of \(\mu\) is of finite first variation, but not constant; then it is easy to see that different choices of \(DG\) lead to different processes \(\varGamma^{G} \) in (3.2) for \(X = \mu \). However, if a deflator for \(\mu\) exists, we have the following uniqueness result.

Proposition 3.5

If a function \(G : \mathrm{supp\,} (\mu) \rightarrow \mathbb {R}\) is regular for the vector process \(\mu = { ( \mu_{1} , \dots, \mu_{d} )}^{\prime}\) of market weights, and if a deflator for \(\mu \) exists, then the continuous, adapted, finite-variation process \(\varGamma^{G} \) of (3.2) does not depend on the choice of \(DG\).

Proof

Suppose that there exist a deflator \(Z \) for the vector process \(\mu \) of market weights, as well as two functions \(DG\), \(\widetilde{DG}\) as in Definition 3.1 for \(X = \mu\) with corresponding processes \({ \vartheta} \), \(\widetilde{{ \vartheta}} \) in (3.1) and \(\varGamma^{G} \), \(\widetilde{ \varGamma}^{G} \) in (3.2). Recall that \(Z \) may be assumed to be continuous. We need to show \(\varGamma^{G} = \widetilde{ \varGamma}^{G} \), or equivalently, \(\varUpsilon \equiv 0\), up to indistinguishability, with the notation \(\varUpsilon := \int_{0}^{\cdot}\sum_{i=1}^{d} { \phi}_{i} (t)\, \mathrm{d} \mu _{i} (t) \) and \({ \phi} := { \vartheta} -\widetilde{{ \vartheta}} \).

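Now, thanks to (3.2), this continuous process \(\varUpsilon \) is of finite variation; so the product rule gives

$$ \int_{0}^{\cdot} Z(t)\, \mathrm{d} \varUpsilon(t) = Z \varUpsilon - \int_{0}^{\cdot} \varUpsilon(t)\, \mathrm{d} Z(t). $$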

As a consequence of Proposition 2.5, the process on the right-hand side is a local martingale. Hence \(\int_{0}^{\cdot}Z (t)\, \mathrm{d} \varUpsilon(t)\) is a continuous local martingale that starts at zero and is of finite variation on compact intervals, and thus identically equal to zero. Since \(Z \) is continuous and strictly positive, \(\varUpsilon \) is recovered from this process by integrating \(1/Z\) against it; this leads to \(\varUpsilon \equiv0\). □

3.1 Sufficient conditions for a function to be regular or Lyapunov

Example 3.6

Suppose that a continuous function \(G :\mathrm{supp\,} (\mu) \rightarrow \mathbb {R}\) can be extended to a twice continuously differentiable function on some open set \(\mathcal {U} \subset \mathbb {R}^{d}\) with

$$ \mathbb {P}\big[ \mu(t) \in \mathcal {U}, \ \forall\, t \ge 0 \big] = 1. $$

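Elementary calculus then expresses the processes \(\vartheta_{i} \) of (3.1) and the finite-variation process of (3.2) as

$$ \vartheta_{i}(t) = D_{i} G\big( \mu(t) \big), \qquad i = 1, \dots, d, \ t \ge 0, \tag{3.4} $$

$$ \varGamma^{G}(T) = -\frac{1}{2} \sum_{i=1}^{d} \sum_{j=1}^{d} \int_{0}^{T} D^{2}_{ij} G\big( \mu(t) \big)\, \mathrm{d} \langle \mu_{i}, \mu_{j} \rangle(t), \qquad T \ge 0, \tag{3.5} $$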

respectively, now with the notation \(D_{i} G = \partial G / \partial x_{i}\), \(D_{ij}^{2} G = \partial^{2} G / (\partial x_{i} \partial x_{j})\). (See Propositions 4 and 6 in [4] for slight generalizations of this result.) Therefore, such a function \(G\) is regular; if it is also concave, then the process \(\varGamma^{G} \) in (3.5) is nondecreasing, and \(G\) becomes a Lyapunov function.
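A discrete-time analogue makes the link between concavity and the Lyapunov property concrete. If the gradient in (3.2) is frozen at the left endpoint of each period of a time grid, each increment of the discrete \(\varGamma^{G}\) equals \(G(\mu_{k-1}) - G(\mu_{k}) + DG(\mu_{k-1}) \cdot (\mu_{k} - \mu_{k-1})\), which is nonnegative for a differentiable concave \(G\) because the tangent plane lies above the graph. For the quadratic \(G(x) = 1 - \sum_{i} x_{i}^{2}\), an illustrative choice that is our assumption here, the increment is exactly \(|\mu_{k} - \mu_{k-1}|^{2}\), the discrete counterpart of (3.5) with \(D^{2} G = -2I\). The grid, the simulated path and all names are hypothetical.

```python
import numpy as np


def discrete_gamma(G, DG, mu):
    """Discrete analogue of (3.2): Gamma[k] = G(mu_0) - G(mu_k) + sum_{j<=k} DG(mu_{j-1}) . (mu_j - mu_{j-1})."""
    mu = np.asarray(mu, dtype=float)
    g_path = np.array([G(m) for m in mu])
    grads = np.array([DG(m) for m in mu[:-1]])          # gradient frozen at the left endpoint
    step_gains = np.einsum("ki,ki->k", grads, np.diff(mu, axis=0))
    return g_path[0] - g_path + np.concatenate(([0.0], np.cumsum(step_gains)))


def quadratic(x):  # concave, illustrative choice
    return 1.0 - np.sum(np.square(x))


def quadratic_grad(x):
    return -2.0 * np.asarray(x, dtype=float)


rng = np.random.default_rng(1)
S = np.exp(np.cumsum(rng.normal(0.0, 0.05, size=(200, 4)), axis=0))  # hypothetical capitalizations
mu = S / S.sum(axis=1, keepdims=True)
gamma = discrete_gamma(quadratic, quadratic_grad, mu)
```

Replacing `quadratic` by a convex function would make the increments nonpositive instead, which is the discrete shadow of the failure of the Lyapunov property.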

Quite a bit more generally, we have the following results.

Theorem 3.7

A given continuous function \(G : \mathrm{supp\,} (\mu) \rightarrow \mathbb {R}\) is a Lyapunov function for the vector process \(\mu \) of market weights if one of the following conditions holds:

(i) \(G\) can be extended to a continuous, concave function on the set \({ \varDelta }^{d}_{+}\) of (2.3), and (2.4) holds.

(ii) \(G\) can be extended to a continuous, concave function on the set

$$ { \varDelta }^{d}_{e} := \bigg\{ { (x_{1}, \dots, x_{d} )}^{\prime}\in \mathbb {R}^{d}: \sum _{i=1}^{d} x_{i} =1 \bigg\} . \tag{3.6} $$

(iii) \(G\) can be extended to a continuous, concave function on the set \(\varDelta ^{d}\) of (2.3), and there exists a deflator for the vector process \(\mu = ( \mu_{1} , \dots, \mu_{d} )'\) of market weights.

We refer to Sect. 7 for a review of some basic notions from convexity, and for the proof of Theorem 3.7. The existence of a deflator is essential for the sufficiency in Theorem 3.7(iii) (that is, whenever the market-weight process \(\mu \) is “allowed to hit a boundary”), as illustrated by Example 7.3 below.

3.2 Rank-based regular and Lyapunov functions

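Let us introduce now the “rank operator” ℜ, namely, the mapping

$$ \mathfrak {R} : \varDelta^{d} \rightarrow \mathbb{W}^{d}, \qquad (x_{1}, \dots, x_{d})' \mapsto \big( x_{(1)}, \dots, x_{(d)} \big)', $$

with values in the polyhedral chamber

$$ \mathbb{W}^{d} := \big\{ x \in \varDelta^{d} : 1 \ge x_{1} \ge x_{2} \ge \cdots \ge x_{d} \ge 0 \big\}. $$

We denote by

$$ \max_{i=1, \dots, d} x_{i} =: x_{(1)} \ge x_{(2)} \ge \cdots \ge x_{(d-1)} \ge x_{(d)} := \min_{i=1, \dots, d} x_{i} $$

the components of the vector \(x= (x_{1}, \dots, x_{d})'\), ranked in descending order with a clear, unambiguous rule for breaking ties (say, the lexicographic rule that always favors the smallest “index” \(i=1, \dots, d\)). Moreover, for each \(x \in \varDelta ^{d}\), we denote by

$$ N_{\ell}(x) := \big| \{ i \in \{1, \dots, d\} : x_{i} = x_{(\ell)} \} \big| \tag{3.8} $$

the number of components of the vector \(x= (x_{1}, \dots, x_{d})'\) that coalesce in a given rank \(\ell= 1, \dots, d\). Finally, we introduce the process \({\boldsymbol{\mu}} \) of market weights ranked in descending order, namely

$$ \boldsymbol{\mu}(\cdot) = \big( \mu_{(1)}(\cdot), \dots, \mu_{(d)}(\cdot) \big)' := \mathfrak {R}\big( \mu(\cdot) \big). \tag{3.9} $$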

We note that \({\boldsymbol{\mu}} \) is a continuous, \(\varDelta ^{d}\)-valued semimartingale in its own right (see [2], as well as (3.10) below), and can thus be interpreted again as a market model. However, this rank-based model may fail to admit a deflator, even when the original vector process of market weights \(\mu \) does. This is due to the appearance, in the dynamics for \({\boldsymbol{\mu}} \), of local time terms which correspond to the reflections whenever two or more components of the original process \(\mu\) collide; cf. (3.10) below.
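The rank operator and the coalescence count are easy to compute for a single point of the simplex. The sketch below assumes the lexicographic tie-breaking rule described above (ties favor the smallest index); the function names `rank_operator` and `coalescing_counts` are hypothetical, and indices are zero-based as in Python.

```python
import numpy as np


def rank_operator(x):
    """Sketch of the rank operator: components of x in descending order, ties broken
    in favour of the smallest index. Also returns the sorting permutation."""
    x = np.asarray(x, dtype=float)
    # np.lexsort uses the LAST key as the primary key: sort by -x, then by index.
    order = np.lexsort((np.arange(x.size), -x))
    return x[order], order


def coalescing_counts(x):
    """For each rank ell, the number of components of x equal to the ell-th largest value."""
    x = np.asarray(x, dtype=float)
    ranked, _ = rank_operator(x)
    return np.array([np.count_nonzero(x == value) for value in ranked])


x = [0.2, 0.4, 0.2, 0.2]
ranked, order = rank_operator(x)
counts = coalescing_counts(x)
```

Here `ranked` is (0.4, 0.2, 0.2, 0.2), `order` is (1, 0, 2, 3), and `counts` is (1, 3, 3, 3). Exact equality is used for ties, which matches the definition but is fragile for floating-point data.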

Theorem 3.8

Consider a function \({\boldsymbol {G}} : \mathrm{supp\,} ( \boldsymbol{\mu}) \rightarrow \mathbb {R}\). Then \({\boldsymbol {G}}\) is a Lyapunov function for the ranked market weight process \({\boldsymbol{\mu}} \) in (3.9) if either

(i) \(\boldsymbol {G}\) can be extended to a continuous, concave function on the set \({ \varDelta }^{d}_{+}\) of (2.3), and (2.4) holds, or

(ii) \(\boldsymbol {G}\) can be extended to a continuous, concave function on the set \({ \varDelta }^{d}_{e}\) of (3.6).

Under any of these conditions, the composition \(G = {\boldsymbol {G}} \circ\mathfrak {R}\) is a regular function for the vector process \(\mu\). More generally, if \(\boldsymbol {G}\) is a regular function for \({\boldsymbol{\mu}} \), then \(G = {\boldsymbol {G}} \circ\mathfrak {R}\) is a regular function for \(\mu\).

We refer again to Sect. 7 for the proof of Theorem 3.8. A simple modification of Example 7.3 illustrates that a function \(\boldsymbol {G}\) can be concave and continuous on \(\mathbb{W}^{d}\) without being regular for \({\boldsymbol {\mu}} \). Indeed, this can happen even when a deflator for \(\mu\) exists, as Example 7.4 illustrates.

Example 3.9

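Example 3.6 has an equivalent formulation for the rank-based case. Assume again that the function \(\boldsymbol {G} :\mathrm{supp\,} ( \boldsymbol{\mu}) \rightarrow \mathbb {R}\) can be extended to a twice continuously differentiable function on some open set \(\mathcal {U} \subset \mathbb {R}^{d}\) with \(\mathbb {P}[\boldsymbol{\mu}(t) \in \mathcal {U}, \forall t \geq0 ] =1\). Then \(\boldsymbol {G}\) is regular for \(\boldsymbol{\mu}\). Indeed, as in Example 3.6, applying Itô’s formula yields

$$ \boldsymbol {G}\big( \boldsymbol{\mu}(T) \big) = \boldsymbol {G}\big( \boldsymbol{\mu}(0) \big) + \int_{0}^{T} \sum_{\ell=1}^{d} D_{\ell} \boldsymbol {G}\big( \boldsymbol{\mu}(t) \big)\, \mathrm{d} \mu_{(\ell)}(t) + \frac{1}{2} \sum_{k=1}^{d} \sum_{\ell=1}^{d} \int_{0}^{T} D^{2}_{k\ell} \boldsymbol {G}\big( \boldsymbol{\mu}(t) \big)\, \mathrm{d} \langle \mu_{(k)}, \mu_{(\ell)} \rangle(t), \qquad T \ge 0, $$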

with \(D_{\ell}\boldsymbol {G} = \partial\boldsymbol {G} / \partial x_{\ell}\), \(D_{k\ell}^{2} \boldsymbol {G} = \partial^{2} \boldsymbol {G} / (\partial x_{k} \partial x_{\ell})\), and the regularity of \(\boldsymbol {G}\) for \(\boldsymbol{\mu}\) follows.

Next, let \(\varLambda^{(k, \ell)} \) denote the local time process of the continuous semimartingale \(\mu_{(k)} - \mu_{(\ell)} \ge0 \) at the origin, for \(1 \le k < \ell \le d\) (the “collision local time of order \(\ell- k + 1\)”). Then with the notation of (3.8), Theorem 2.3 in [2] yields the semimartingale representation

for the ranked market weight processes; here and below, we agree that empty summations are equal to zero. Thus, we obtain for the function \(G = \boldsymbol {G} \circ\mathfrak {R}\) the representations of (3.1)–(3.3), with

In particular, \(G\) is regular for \(\mu\); this confirms the last statement of Theorem 3.8 in the present case.

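Let us consider now the special case when the collision local times of order 3 or higher vanish, i.e.,

$$ \varLambda^{(k, \ell)} \equiv 0 \quad \text{for all } 1 \le k < \ell \le d \text{ with } \ell - k \ge 2. \tag{3.13} $$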

This will happen, of course, when actual triple collisions never occur. It will also happen when triple- or higher-order collisions do occur but are sufficiently “weak”, so as not to lead to the accumulation of collision local time; see [15, 16] for examples of this situation. Under (3.13), only the term corresponding to \(k = \ell+1\) appears in the second summation on the right-hand side of (3.12), and only the term corresponding to \(k = \ell-1\) appears in the third summation.

Example 3.10

Let us consider the function \(\boldsymbol {G}: \mathbb{W}^{d} \to[0,1]\) defined by \(\boldsymbol {G} (x ):= x_{1}\). This \(\boldsymbol {G}\) is twice continuously differentiable and concave. In particular, as in Example 3.6, \(\boldsymbol {G}\) is a Lyapunov function for the process \(\boldsymbol{\mu}\) in (3.9).

However, the composite function \(G = \boldsymbol {G} \circ\mathfrak {R}\), which has the representation \(G(x) = \max_{i=1, \dots,d} x_{i}\) for all \(x \in \varDelta ^{d}\), is regular for \(\mu\), but typically not Lyapunov. Indeed, in the notation of Example 3.9, we have \(D_{1} \boldsymbol {G} = 1\), \(D_{\ell}\boldsymbol {G}= 0\) for \(\ell= 2, \dots, d\), and \(D_{k \ell}^{2} \boldsymbol {G} = 0\) for all \(1 \le k,\ell\le d\). Thus, (3.11) gives

in the notation of Example 3.9, and the expression in (3.12) simplifies to

This process \(\varGamma^{G} \) is nonincreasing; hence, unless the local time \(\varLambda^{(1,2)} \) is identically equal to zero, it fails to be nondecreasing. If we now additionally assume the existence of a deflator, then by Proposition 3.5, the process \(\varGamma^{G} \) does not depend on the choice of \(D G\); thus, \(\varGamma^{G} \) is then determined uniquely by the above expression, so \(G\) cannot be a Lyapunov function for \(\mu \). Example 6.2 below generalizes this setup.
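A discrete-time caricature of this example may help build intuition; it is an illustration under our own assumptions, not the paper's construction. Take \(G(x) = \max_{i} x_{i}\) directly on the unranked weights, with \(DG(x) = e_{i^{*}}\) for the smallest index \(i^{*}\) attaining the maximum, and freeze this choice at the left endpoint of each period. Each increment of the discrete \(\varGamma^{G}\) then equals \(\mu_{i^{*}}(t_{k}) - \max_{i} \mu_{i}(t_{k}) \le 0\): it is negative exactly when the previous leader is overtaken, which is where the collision local time \(\varLambda^{(1,2)}\) accumulates in continuous time. The function name `gamma_for_max` is hypothetical.

```python
import numpy as np


def gamma_for_max(mu):
    """Discrete analogue of (3.2) for G(x) = max_i x_i with D G(x) = e_{i*}, where i* is
    the smallest index attaining the maximum at t_{k-1}. Each increment equals
    mu_k[i*] - max(mu_k) <= 0."""
    mu = np.asarray(mu, dtype=float)
    leader = np.argmax(mu[:-1], axis=1)  # argmax returns the first maximal index
    steps = mu[1:][np.arange(leader.size), leader] - mu[1:].max(axis=1)
    return np.concatenate(([0.0], np.cumsum(steps)))


path = np.array([[0.6, 0.4], [0.45, 0.55], [0.3, 0.7]])
gamma = gamma_for_max(path)  # drops when asset 2 overtakes asset 1, then stays flat
```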

4 Functionally generated trading strategies

We introduce in this section the novel notion of additive functional generation of trading strategies, and study its properties. To simplify notation, and when it is clear from the context, we write from now on \(V^{\vartheta}\) (respectively, \(Q^{\vartheta}\)) to denote the value process \(V^{\vartheta}(\cdot; \mu)\) given in (2.5) (respectively, the defect of self-financibility process \(Q^{\vartheta}(\cdot;\mu)\) of (2.6)) for \(X = \mu \). Proposition 2.2 allows us then to interpret \(V^{\vartheta}=V^{\vartheta}(\cdot; \mu) = V^{\vartheta}(\cdot; S) / \varSigma \) as the “relative value” of the trading strategy \(\vartheta \in \mathscr {T} (S)\) with respect to the market portfolio.

4.1 Additive generation

For any given function \(G : \mathrm{supp\,} ( \mu) \rightarrow \mathbb {R}\) which is regular for the vector process \(\mu \) of market weights as in Definition 3.1, we consider the vector \({ \vartheta} ={( { \vartheta}_{1} , \dots, { \vartheta}_{d} )}^{\prime}\) of processes \({ \vartheta}_{i} := D_{i} G ( \mu )\) as in (3.1), and the trading strategy \({ \varphi} ={( { \varphi}_{1} , \dots, { \varphi}_{d} )}^{\prime}\) with components

$$ \varphi_{i}(t) := \vartheta_{i}(t) - Q^{\vartheta}(t) - C(0), \qquad i = 1, \dots, d, \ t \ge 0, \tag{4.1} $$

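in the manner of (2.7) and (2.6), and with the real constant

$$ C(0) := \sum_{j=1}^{d} \mu_{j}(0)\, D_{j} G\big( \mu(0) \big) - G\big( \mu(0) \big). \tag{4.2} $$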

In other words, we adjust each \({ \vartheta}_{i} (t) \) both for the “defect of self-financibility” \(Q^{ { \vartheta} } (t) = Q^{ { \vartheta} } (t; \mu) \) at time \(t\ge0\), and for the “defect of balance” \({ C}(0)\) at time \(t=0\).

Definition 4.1

We say that the trading strategy \({ \varphi} = ( { \varphi}_{1} , \ldots, { \varphi}_{d} )' \in\mathscr {T} (\mu)\) of (4.1) is additively generated by the regular function \(G : \mathrm {supp\,} ( \mu) \rightarrow \mathbb {R}\).

Remark 4.2

There might be two different trading strategies \({ \varphi} \neq \widetilde{ \varphi} \), both generated additively by the same regular function \(G\). This is because the function \(DG\) in Definition 3.1 need not be unique. However, if there exists a deflator for \(\mu\), then the process \(\varGamma^{G} \) is uniquely determined up to indistinguishability by Proposition 3.5, and (4.3) below yields \(V^{{ \varphi}} = V^{\widetilde{ \varphi}} \).

Proposition 4.3

The trading strategy \({ \varphi} = ( { \varphi}_{1} , \dots, { \varphi }_{d} )' \), generated additively as in (4.1) by a function \(G : \mathrm{supp\,} (\mu) \rightarrow \mathbb {R}\) which is regular for the process of market weights, has relative value process

$$ V^{\varphi} = G(\mu) + \varGamma^{G}, \tag{4.3} $$

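and can be represented, for \(i=1, \dots, d\), in the form

$$ \varphi_{i}(t) = D_{i} G\big( \mu(t) \big) + \varGamma^{G}(t) - \bigg( \sum_{j=1}^{d} \mu_{j}(t)\, D_{j} G\big( \mu(t) \big) - G\big( \mu(t) \big) \bigg), \qquad t \ge 0, \tag{4.4} $$

$$ \varphi_{i}(t) = D_{i} G\big( \mu(t) \big) + V^{\varphi}(t) - \sum_{j=1}^{d} \mu_{j}(t)\, D_{j} G\big( \mu(t) \big), \qquad t \ge 0. \tag{4.5} $$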

If in addition \(G\) is a nonnegative (respectively, strictly positive) Lyapunov function for the process \(\mu\) of market weights, then the value process \(V^{{ \varphi}} \) in (4.3) is nonnegative (respectively, strictly positive).

Proof

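We substitute from (4.1) and (3.1) into (2.8), and recall (3.2) and (4.2) to obtain

$$ V^{\varphi} = V^{\vartheta}(0) - C(0) + \int_{0}^{\cdot} \sum_{i=1}^{d} \vartheta_{i}(t)\, \mathrm{d} \mu_{i}(t) = G\big( \mu(0) \big) + \int_{0}^{\cdot} \sum_{i=1}^{d} D_{i} G\big( \mu(t) \big)\, \mathrm{d} \mu_{i}(t) = G(\mu) + \varGamma^{G}, $$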

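that is, (4.3). Using (4.1), (2.6) and (2.8), we also obtain

$$ \varphi_{i} = \vartheta_{i} - Q^{\vartheta} - C(0) = D_{i} G(\mu) - V^{\vartheta} + V^{\varphi} = D_{i} G(\mu) + V^{\varphi} - \sum_{j=1}^{d} \mu_{j}\, D_{j} G(\mu), $$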

leading to (4.5). The last claim follows from (4.3): since \(\varGamma^{G}(0) = 0\) and \(\varGamma^{G} \) is nondecreasing, we have \(V^{\varphi} \ge G(\mu)\). □

Remark 4.4

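The expression for \(\varphi_{i} (t) \) in (4.4) adjusts \({ \vartheta}_{i} (t)= D_{i} G ( \mu(t) ) \) by adding to each component the “cumulative earnings” \(\varGamma^{G} (t)\), then compensates by subtracting the “defect of balance”

$$ C(t) := \sum_{j=1}^{d} \mu_{j}(t)\, D_{j} G\big( \mu(t) \big) - G\big( \mu(t) \big) $$

at time \(t\); for \(t = 0\), this is the constant \(C(0)\) of (4.2).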

The expressions for \(\varphi_{i} (t) \) in (4.4), (4.5) motivate the interpretation of \(\varphi \) as “delta hedge” for a given generating function \(G\). Indeed, if we interpret \(DG\) as the gradient of \(G\), then for each \(i = 1, \dots, d\) and \(t \ge0\), the quantity \(\varphi_{i}( t)\) is exactly the “derivative” \(D_{i} G ( \mu( t) )\) in the \(i\)th direction, plus the global correction term

$$ w(t) := V^{\varphi}(t) - \sum_{j=1}^{d} \mu_{j}(t)\, D_{j} G\big( \mu(t) \big) = \varGamma^{G}(t) + G\big( \mu(t) \big) - \sum_{j=1}^{d} \mu_{j}(t)\, D_{j} G\big( \mu(t) \big), $$

the same across all stocks \(i=1, \dots, d\); this ensures that \(\varphi \) is self-financed, i.e., a trading strategy.

To implement the trading strategy \(\varphi\) in (4.5) at some given time \(t > 0\), let us assume it has been implemented up to time \(t\), so that its current wealth \(V^{\varphi}(t)\) is known. It then suffices to compute \(D_{i} G(\mu(t))\) for each \(i = 1, \dots , d\), and to buy exactly \(D_{i} G(\mu(t))\) shares of the \(i\)th asset. If not all wealth gets invested this way, that is, if the quantity \(w (t)\) is positive, then one buys in addition exactly \(w(t)\) shares of each asset, at a total cost of \(\sum _{i=1}^{d} w(t) \mu_{i}(t) = w(t)\). If \(w(t)\) is negative, one instead sells \(|w(t)|\) shares of each asset. Thus, the implementation of the functionally generated strategy does not require the computation of any stochastic integral.
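The recipe above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not code from the paper. The helper name `additive_shares` and the sample numbers are hypothetical. The gradient values \(D_{i}G(\mu(t))\) and the current wealth \(V^{\varphi}(t)\) are simply taken as inputs.

```python
def additive_shares(mu, grad, value):
    """Shares phi_i(t) = D_i G(mu(t)) + w(t) held by the additively generated strategy.

    mu    -- current market weights mu_1(t), ..., mu_d(t), summing to one
    grad  -- the values D_1 G(mu(t)), ..., D_d G(mu(t))
    value -- the current relative wealth V^phi(t)
    """
    # Wealth spent on buying D_i G(mu(t)) shares of each asset i.
    spent = sum(m * g for m, g in zip(mu, grad))
    # Leftover w(t); one share of every asset costs sum_i mu_i(t) = 1 in total.
    w = value - spent
    return [g + w for g in grad], w


# Hypothetical inputs for d = 3 assets.
mu = [0.5, 0.3, 0.2]
grad = [0.1, -0.2, 0.4]
shares, w = additive_shares(mu, grad, value=1.2)
print(shares, w)
```

Whatever the sign of \(w(t)\), the resulting holdings are fully invested: \(\sum_{i} \varphi_{i}(t)\mu_{i}(t) = V^{\varphi}(t)\). This holds because one share of every asset costs \(\sum_{i}\mu_{i}(t) = 1\) in total.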

If the function \(G\) is nonnegative and concave, the following result guarantees that \(G\) additively generates at least one strategy which holds a nonnegative number of shares of each asset, even if \(D_{i} G(\mu(t))\) is negative for some \(i = 1, \dots , d\).

Proposition 4.5

Assume that one of the three conditions in Theorem 3.7 holds for some continuous function \(G : \mathrm{supp\,} (\mu) \rightarrow[0,\infty)\). Then there exists a trading strategy \({ \varphi} \), additively generated by \(G\), which is “long-only”, i.e., satisfies \({ \varphi }_{i} \ge0 \) for each \(i = 1, \dots, d\).

The proof of Proposition 4.5 requires some convex analysis and is presented in Sect. 7.1 below.

Remark 4.6

Let \(G\) be a regular function for the vector process \(\mu \), generating the trading strategy \({ \varphi} \) as in (4.1) and (4.5). Whenever \(V^{{ \varphi}} >0 \) holds (for example, when \(G\) is a Lyapunov function taking values in \((0,\infty)\)), the portfolio weights
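\[ \pi_{i}(t) := \frac{\varphi_{i}(t)\, \mu_{i}(t)}{V^{\varphi}(t)}, \qquad i = 1, \dots, d,\ t \ge 0, \tag{4.6} \]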
of the trading strategy \({ \varphi} \) can be cast with the help of (4.3) and (4.5) as
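\[ \pi_{i}(t) = \mu_{i}(t) \left( \frac{D_{i}G(\mu(t))}{G(\mu(t)) + \varGamma^{G}(t)} + 1 - \frac{\sum_{j=1}^{d} \mu_{j}(t)\, D_{j}G(\mu(t))}{G(\mu(t)) + \varGamma^{G}(t)} \right), \qquad i = 1, \dots, d,\ t \ge 0. \tag{4.7} \]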
4.2 Multiplicative generation

Let us study now, in the generality of the present paper, the class of functionally generated portfolios introduced in [7–9]. Suppose the function \(G : \mathrm{supp\,} (\mu) \rightarrow[0, \infty) \) is regular for the vector process \(\mu \) of market weights in (2.2), and that \(1/G(\mu)\) is locally bounded. This holds if \(G\) is bounded away from zero, or if (2.4) is satisfied and \(G\) is strictly positive on \(\varDelta ^{d}_{+}\). We introduce the predictable portfolio weights
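\[ \varPi_{i}(t) := \mu_{i}(t) \left( \frac{D_{i}G(\mu(t))}{G(\mu(t))} + 1 - \frac{\sum_{j=1}^{d} \mu_{j}(t)\, D_{j}G(\mu(t))}{G(\mu(t))} \right), \qquad i = 1, \dots, d,\ t \ge 0. \tag{4.8} \]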
These processes satisfy \(\sum_{i=1}^{d} { \varPi}_{i} \equiv1\) rather trivially; and it is shown as in Proposition 4.5 that they are nonnegative if one of the three conditions in Theorem 3.7 holds.
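As a numerical sanity check of these weights, the Python sketch below evaluates \(\varPi\) for one concrete generating function. It assumes that (4.8) has Fernholz's standard form \(\varPi_{i} = \mu_{i}\big( D_{i}G(\mu)/G(\mu) + 1 - \sum_{j} \mu_{j} D_{j}G(\mu)/G(\mu) \big)\). The test function \(G(x) = 2 - \sum_{i} x_{i}^{2}\), which is concave and strictly positive on the simplex, and all helper names are our own choices.

```python
def multiplicative_weights(mu, G, grad_G):
    """Portfolio weights Pi_i = mu_i * (D_i G / G + 1 - sum_j mu_j D_j G / G)."""
    g = G(mu)
    dg = grad_G(mu)
    # Correction term sum_j mu_j D_j G(mu) / G(mu), the same for every asset i.
    correction = sum(m * d for m, d in zip(mu, dg)) / g
    return [m * (d / g + 1.0 - correction) for m, d in zip(mu, dg)]


def quad(x, c=2.0):
    # c - sum_i x_i^2: concave, and strictly positive on the simplex when c > 1
    return c - sum(v * v for v in x)


def quad_grad(x):
    # D_i of c - sum_j x_j^2 is -2 x_i
    return [-2.0 * v for v in x]


weights = multiplicative_weights([0.5, 0.3, 0.2], quad, quad_grad)
print(weights)
```

For these market weights the output is about (0.426, 0.330, 0.244). The weights are nonnegative, sum to one, and tilt capital from the largest towards the smallest stock.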

In order to relate these portfolio weights to a trading strategy, let us consider the vector process \(\eta = ( \eta_{1} , \dots, \eta_{d} )' \) given in the notation of (3.1) by
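\[ \eta_{i}(t) := \vartheta_{i}(t) \exp\left( \int_{0}^{t} \frac{\mathrm{d}\varGamma^{G}(s)}{G(\mu(s))} \right), \qquad t \ge 0, \tag{4.9} \]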
for \(i=1, \dots, d \). We note that the integral is well defined, as \(1/G(\mu)\) is locally bounded by assumption. We have moreover \(\eta \in\mathscr {L} (\mu)\), since \({ \vartheta} \in\mathscr {L} (\mu)\) and the exponential process is locally bounded.

As before, we turn the predictable process \({ \eta} \) into a (self-financed) trading strategy \({ \psi} = ( { \psi}_{1} , \dots, { \psi}_{d} )' \) by setting

in the manner of (2.7) and (2.6), and with the real constant \(C(0)\) given by (4.2).

Definition 4.7

The trading strategy \({ \psi} = ( { \psi}_{1} , \dots, { \psi}_{d} )' \in\mathscr {T} (\mu)\) of (4.10), (4.9) is said to be multiplicatively generated by the function \(G : \mathrm{supp\,} (\mu ) \rightarrow[0, \infty)\).

Proposition 4.3 has the following counterpart.

Proposition 4.8

The trading strategy \({ \psi} = ( { \psi}_{1} , \dots, { \psi}_{d} )' \), generated as in (4.10) by a function \(G : \mathrm{supp\,} (\mu) \rightarrow(0, \infty) \) which is regular for the process \(\mu \) of market weights and such that the process \(1/G(\mu)\) is locally bounded, has relative value process
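\[ V^{\psi}(t) = G\big(\mu(t)\big) \exp\left( \int_{0}^{t} \frac{\mathrm{d}\varGamma^{G}(s)}{G(\mu(s))} \right), \qquad t \ge 0, \tag{4.11} \]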
and can be represented for \(i=1, \dots, d\) in the form
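\[ \psi_{i}(t) = V^{\psi}(t) \left( \frac{D_{i}G(\mu(t))}{G(\mu(t))} + 1 - \frac{\sum_{j=1}^{d} \mu_{j}(t)\, D_{j}G(\mu(t))}{G(\mu(t))} \right), \qquad t \ge 0. \tag{4.12} \]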
Proof

With \(K := \exp( \int_{0}^{\cdot} ( 1/G(\mu( t)))\, \mathrm{d} \varGamma^{G} (t) )\), the product rule yields

where the second equality uses (3.3) and the second-to-last relies on (2.8). Since (4.11) holds at time zero, namely

in view of (2.5), (4.10), (2.6) and (4.2), it follows from the above display that (4.11) holds in general.

On the other hand, starting with (4.10), we obtain

We have used here (2.8), the definition \(\eta_{i} = K D_{i} G ( \mu )\), and the definition of \(Q^{ \eta} \) in (2.6). Since \(V^{ { \psi}} = K G ( \mu ) \) holds from (4.11), the last display leads to the representation (4.12). □

It is easy to see how the portfolio process \({ \varPi} \) in (4.8) is obtained from (4.12) in the same manner as (4.6), since \(V^{ \psi} \) is strictly positive. The representation in (4.11) is a generalized master equation in the spirit of Theorem 3.1.5 in [7]; both it and its additive version (4.3) have the remarkable property that they do not involve any stochastic integration at all.

4.3 Comparison of additive and multiplicative functional generation

It is instructive at this point to compare additive and multiplicative functional generation. On a purely formal level, the multiplicative generation of Definition 4.7 requires a regular function \(G\) with the property that \(1/G(\mu )\) is locally bounded. On the other hand, additive functional generation requires only the regularity of the function \(G\).

At time \(t=0\), the additively generated strategy agrees with the multiplicatively generated one; that is, we have \(\varphi(0) = \psi (0)\) in the notation of (4.5) and (4.12). However, at any time \(t>0\) with \(\varGamma^{G}(t) \neq0\), these two strategies usually differ; this is seen most easily by looking at their corresponding portfolios (4.7) and (4.8). More precisely, the two strategies differ in the way they allocate the part of their wealth captured by the finite-variation “cumulative earnings” process \(\varGamma^{G} \). The additively generated strategy spreads this part uniformly across all assets in the market, adding the same number \(\varGamma^{G}(t)\) of shares to each position. The multiplicatively generated strategy instead absorbs it by scaling all of its asset holdings proportionally.

To see this, consider again (4.12) and assume for concreteness that the regular function \(G\) is also balanced for the vector process \(\mu \) of market weights, that is, \(\sum_{j=1}^{d} x_{j} D_{j} G ( x) = G(x)\) for all \(x \in \varDelta ^{d}\); see, for instance, the geometric mean function of (4.13) right below. We have then from (4.12) the representation
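\[ \psi_{i}(t) = D_{i}G\big(\mu(t)\big) \exp\left( \int_{0}^{t} \frac{\mathrm{d}\varGamma^{G}(s)}{G(\mu(s))} \right), \qquad i = 1, \dots, d,\ t \ge 0; \]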
thus, in this situation, the multiplicatively generated strategy \({\psi}(t)\) never invests in assets with \(D_{i} G(\mu(t)) = 0\), at any time \(t \geq0\); instead, it scales its holdings proportionally. By contrast, the additively generated trading strategy \({\varphi}(\cdot)\) of (4.5) buys
\[ \varphi_{i}(t) = D_{i}G\big(\mu(t)\big) + \varGamma^{G}(t), \qquad i = 1, \dots, d, \]
shares of the different assets at time \(t\), and does not shun stocks with \(D_{i} G(\mu(t)) = 0\).

Ramifications: The above difference in the two strategies leads to two observations.

First, suppose one is interested in a trading strategy that invests through time only in a subset of the market, such as the set of “small-capitalization stocks”. Then the appropriate strategies are those generated multiplicatively by functions \(G\) that satisfy the “balance” property \(\sum_{j=1}^{d} x_{j} D_{j} G ( x) = G(x)\) for all \(x \in \varDelta ^{d}\), and whose components \(D_{i} G\) vanish for the assets outside that subset. If, on the other hand, one wants to invest the trading strategy’s earnings across the whole market, additive generation is better suited. This is illustrated further by Examples 6.2 and 6.3.

Secondly, the trading strategy which holds equal weights across all assets can be generated multiplicatively, as long as (2.4) holds, by the “geometric mean” function
\[ G(x) := \left( x_{1} x_{2} \cdots x_{d} \right)^{1/d}, \qquad x \in \varDelta^{d}; \tag{4.13} \]
indeed, the portfolio weights in (4.8) become now \({ \varPi}_{i} = 1/d\) for all \(i=1, \dots, d\) (the so-called “equal-weighted” portfolio). However, such a trading strategy cannot be additively generated; for instance, the portfolio in (4.7), namely
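\[ \pi_{i}(t) = \frac{G(\mu(t))}{d\,\big( G(\mu(t)) + \varGamma^{G}(t) \big)} + \frac{\mu_{i}(t)\, \varGamma^{G}(t)}{G(\mu(t)) + \varGamma^{G}(t)} \]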
for all \(i=1, \dots, d, t \ge0\), that corresponds to the strategy generated additively by this geometric mean function \(G \), distributes the cumulative earnings captured by \(\varGamma^{G} \) uniformly across stocks, and this destroys equal weighting.
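The loss of equal weighting can be checked numerically. The Python sketch below is illustrative only. It assumes that the brackets in (4.7) and (4.8) read \(D_{i}G/V + 1 - \sum_{j}\mu_{j}D_{j}G/V\), with \(V = G(\mu) + \varGamma^{G}\) for the additive portfolio and \(V = G(\mu)\) for the multiplicative one. The market weights and the value 0.1 for \(\varGamma^{G}(t)\) are hypothetical.

```python
import math


def geometric_mean(x):
    return math.prod(x) ** (1.0 / len(x))


def generated_weights(mu, gamma):
    """Geometric-mean weights: multiplicative (denominator G) and additive (denominator G + Gamma)."""
    d = len(mu)
    g = geometric_mean(mu)
    grad = [g / (d * m) for m in mu]                  # D_i G(x) = G(x) / (d x_i)
    balance = sum(m * dg for m, dg in zip(mu, grad))  # equals G(mu): G is balanced

    def weights(denominator):
        return [m * (dg / denominator + 1.0 - balance / denominator) for m, dg in zip(mu, grad)]

    return weights(g), weights(g + gamma)


mult, add = generated_weights([0.4, 0.3, 0.2, 0.1], gamma=0.1)
print(mult)  # equal weights 1/4, up to rounding
print(add)   # tilted towards the larger stocks
```

Algebraically, the additive weights here equal \(G(\mu)/\big(d\,(G(\mu)+\varGamma^{G})\big) + \mu_{i}\varGamma^{G}/(G(\mu)+\varGamma^{G})\). The extra shares bought with the cumulative earnings are therefore worth more in the larger stocks.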

Comparison of portfolios: Let us compare the two portfolios in (4.7) and (4.8) more closely. These portfolios differ only in the denominators inside the brackets on their right-hand sides.

Computing the quantities of (4.8) needs, at any given time \(t \geq0\), knowledge of the configuration of market weights \(\mu_{1} (t), \dots, \mu_{d} (t)\) prevalent at that time – and nothing else. By contrast, the quantities of (4.7) need, in addition to the current market weights \(\mu_{1} (t), \dots, \mu_{d} (t)\), the current value \(V^{{ \varphi}} (t)\) of the wealth generated by the portfolio. One computes this value from the entire history of the market weights during the interval \([0,t]\), via the Lebesgue–Stieltjes integrals in, say, (3.5). Once these portfolios are expressed as trading strategies, as in (4.5) and (4.12), such path information is needed for both: the numbers of shares held depend on the current values \(V^{{ \varphi}} (t)\) and \(V^{ \psi} (t)\), respectively.

5 Sufficient conditions for relative arbitrage

We have developed by now the machinery required in order to present sufficient conditions for the possibility of outperforming the market as in Sect. 2.3 – at least over sufficiently long time horizons.

In this section, \(G: \mathrm{supp\,} (\mu) \rightarrow[0,\infty)\) is a nonnegative function, regular for the market-weight process \(\mu \), and with \(G(\mu(0)) = 1\). This normalization ensures that a functionally generated strategy starts with initial wealth of one dollar, as required by (2.10); see (4.3) and (4.11). Such a normalization can always be achieved upon replacing \(G\) by \(G+1\) if \(G(\mu(0)) = 0\), or by \(G/G(\mu(0))\) if \(G(\mu(0)) > 0 \).

Theorem 5.1

Fix a Lyapunov function \(G: \mathrm{supp\,} (\mu) \rightarrow[0,\infty )\) which satisfies \(G(\mu(0)) = 1\), and suppose that for some real number \(T_{*} >0\), we have
\[ \mathbb{P}\big[ \varGamma^{G}(T_{*}) > 1 \big] = 1. \tag{5.1} \]
Then the additively generated strategy \({ \varphi} = {( { \varphi}_{1} , \dots, { \varphi}_{d} )}^{\prime}\) of Definition 4.1 is strong arbitrage relative to the market over every time horizon \([0,T] \) with \(T \geq T_{*}\).

Proof

We recall the observations in Remark 2.4 and note that (4.3) yields \(V^{ { \varphi}} (0 ) =1\) and \(V^{ { \varphi}} (\cdot ) \geq0\). Since \(G \ge 0\) and \(\varGamma^{G}\) is nondecreasing, it also yields \(V^{ { \varphi}} ( T ) = G ( \mu( T ) ) + \varGamma^{ G} ( T )\ge\varGamma^{G} (T_{*}) > 1\) almost surely, for all \(T \geq T_{*}\). □

The following result complements Theorem 5.1.

Theorem 5.2

Fix a regular function \(G: \mathrm{supp\,} (\mu) \rightarrow[0,\infty )\) satisfying \(G(\mu(0)) = 1\), and suppose that for some real number \(T_{*} >0\), there exists an \(\varepsilon= \varepsilon(T_{*}) >0\) such that
\[ \mathbb{P}\big[ \varGamma^{G}(T_{*}) > 1 + \varepsilon \big] = 1. \]
Then there exists a constant \(c = c(T_{*}, \varepsilon)>0\) with the property that the trading strategy \({ \psi}^{(c)} = ( { \psi}^{(c)}_{1} , \dots, { \psi}^{(c)}_{d} )'\), multiplicatively generated by the regular function \(G^{(c)} := (G+c) / (1+c) \) as in Definition 4.7, is strong arbitrage relative to the market over the time horizon \([0,T_{*}] \), as well as over every time horizon \([0,T] \) with \(T \geq T_{*}\) if in addition \(G\) is a Lyapunov function.

Proof

For \(c>0\), the representation (4.11) yields the comparisons \(V^{ { \psi}^{(c)}} (0 ) =1\), \(V^{ { \psi}^{(c)}} > 0\) and

Here \(\kappa\) is an upper bound on the function \(G\), which is assumed to be continuous on the compact set \(\mathrm{supp\,} (\mu)\), and we have used in the first inequality the bound \(G \geq0\) as well as the identity \(\varGamma^{G^{(c)}} = \varGamma^{G} /(1+c)\). With the help of Remark 2.4, we may conclude again as soon as we have argued the existence of a constant \(c>0\) such that the last term in (5.2) is greater than one. In order to see this, we take logarithms there to obtain

for all \(c >0\), since \(1>c \log(1+1/c)\). The right-hand side of (5.3) is positive for all sufficiently large \(c\). Hence \(V^{ { \psi }^{(c)}} ( T_{*} )>1\) holds pathwise, which proves the first claim.

If \(G\) is a Lyapunov function, then \(\varGamma^{G}\) is nondecreasing, so \(\mathbb {P}[ \varGamma^{G} (T ) > 1 + \varepsilon] = 1\) and the inequalities in (5.2) hold for all \(T \ge T_{*}\). The same reasoning as above then applies. □
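The choice of \(c\) in this proof can be made explicit. We read the chain (5.2) as ending in the lower bound \(\frac{c}{1+c}\exp\big(\frac{1+\varepsilon}{\kappa+c}\big)\), which is our reconstruction from the ingredients named in the proof (\(0 \le G \le \kappa\), \(\varGamma^{G^{(c)}} = \varGamma^{G}/(1+c)\), and \(\varGamma^{G}(T_{*}) > 1+\varepsilon\)). Under that reading, the estimate \(\log(1+1/c) < 1/c\) gives a logarithm larger than \((\varepsilon c - \kappa)/\big(c(\kappa+c)\big)\), so any \(c \ge \kappa/\varepsilon\) works. The minimal Python check below uses hypothetical values of \(\kappa\) and \(\varepsilon\).

```python
import math


def lower_bound(c, kappa, eps):
    """Our reading of the last term in (5.2): c/(1+c) * exp((1+eps)/(kappa+c))."""
    return c / (1.0 + c) * math.exp((1.0 + eps) / (kappa + c))


def sufficient_c(kappa, eps):
    """Smallest c with eps*c - kappa >= 0, so that the estimate for the logarithm is nonnegative."""
    return kappa / eps


for kappa, eps in [(1.0, 0.5), (2.0, 0.1), (1.0, 0.01)]:
    c = sufficient_c(kappa, eps)
    print(kappa, eps, c, lower_bound(c, kappa, eps))
```

The constant \(\kappa/\varepsilon\) is not claimed to be optimal. It is simply a value at which the estimate for the logarithm becomes nonnegative, and it must be recomputed for each pair \((\kappa, \varepsilon)\).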

5.1 Entropic and quadratic functions

We illustrate here Theorems 5.1 and 5.2 with two examples.

Example 5.3

Consider the Gibbs entropy function
\[ H(x) := \sum_{i=1}^{d} x_{i} \log\left( \frac{1}{x_{i}} \right), \qquad x \in \varDelta^{d}, \]
with values in \([0, \log d]\) and the understanding \(0 \log\infty = 0\). This function is concave and continuous on \(\varDelta ^{d}\), and strictly positive on \({ \varDelta }^{d}_{+}\). It is a Lyapunov function for \(\mu \) provided that, as we assume from now on in this example, either a deflator for \(\mu\) exists, or (2.4) holds; see Theorem 3.7(iii) and (i).

Indeed, under either of these two assumptions, a computation shows that the process in (3.2) with \(X=\mu\) takes the form
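\[ \varGamma^{H}(t) = \frac{1}{2} \sum_{i=1}^{d} \int_{0}^{t} \frac{\mathrm{d}\langle \mu_{i} \rangle(s)}{\mu_{i}(s)}, \qquad t \ge 0. \]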
This is the cumulative excess growth of the market, a trace-like quantity which plays a very important role in stochastic portfolio theory. It measures the market’s cumulative “relative variation” – stock-by-stock, then averaged according to each stock’s market weight. It is immediate that the process \(\varGamma^{H} \) is nondecreasing, which confirms that the Gibbs entropy is indeed a Lyapunov function for any market \(\mu\) that allows a deflator or satisfies (2.4).
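For intuition, the cumulative excess growth can be approximated along a discretely observed path of market weights by replacing \(\mathrm{d}\langle \mu_{i} \rangle\) with squared increments. The Python sketch below is a hypothetical illustration under that assumption. It uses the representation \(\varGamma^{H} = \frac{1}{2}\sum_{i}\int \mathrm{d}\langle \mu_{i} \rangle/\mu_{i}\) of the excess growth in terms of market weights, and the sample path is made up.

```python
import math


def gibbs_entropy(x):
    # H(x) = sum_i x_i log(1/x_i), with the convention 0 log(inf) = 0
    return sum(v * math.log(1.0 / v) for v in x if v > 0)


def excess_growth(path):
    """Running discrete proxy for Gamma^H: 0.5 * sum over steps and assets of (increment)^2 / mu_i."""
    total, running = 0.0, [0.0]
    for prev, nxt in zip(path, path[1:]):
        total += 0.5 * sum((b - a) ** 2 / a for a, b in zip(prev, nxt) if a > 0)
        running.append(total)
    return running


path = [[0.5, 0.3, 0.2], [0.45, 0.33, 0.22], [0.48, 0.30, 0.22], [0.5, 0.3, 0.2]]
gamma = excess_growth(path)
print(gibbs_entropy(path[0]), gamma)
```

The sample path returns to its starting point, so the entropy is unchanged, while the excess-growth proxy has strictly increased. In continuous time, (4.3) shows that at the end of such a loop the additively generated strategy has gained exactly \(\varGamma^{H}\) relative to the market.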

The additively generated strategy \(\varphi \) of (4.5) invests a number
\[ \varphi_{i}(t) = \log\big( 1/\mu_{i}(t) \big) + \varGamma^{H}(t), \qquad i = 1, \dots, d, \]
of shares in each asset at time \(t\), and generates the strictly positive value process
\[ V^{\varphi}(t) = H\big(\mu(t)\big) + \varGamma^{H}(t) > 0, \qquad t \ge 0. \]
This strict positivity is obvious if (2.4) holds. To see it when (2.4) does not hold but a deflator for \(\mu \) exists, consider the stopping time \(\tau:= \inf\{ t \ge0 : V^{{ \varphi}} ( t) =0\}>0\), where the last inequality follows from the assumption \(\mu(0) \in{ \varDelta }^{d}_{+}\). On the event \(\{ \tau< \infty\}\), we have both \(H (\mu (\tau) ) =0\) and \(\varGamma^{H} (\tau) =0\). From the properties of the entropy function, the first of these requirements implies that at time \(\tau\), the process of market weights is at one of the vertices of the simplex, i.e., \(\tau\ge\mathscr{D}_{*}\) in the notation of (2.16). The second requirement gives \(\varGamma^{H} (\mathscr{D}_{*}) =0\). Since \(\varGamma^{H}\) is nondecreasing and \(\mathscr{D} \le \mathscr{D}_{*}\), it follows that \(\varGamma^{H} (\mathscr{D}) =0\) in the notation of (2.15). Since \(\mu_{i} \le 1\), the bound

\[ 0 = \varGamma^{H}(\mathscr{D}) = \frac{1}{2} \sum_{i=1}^{d} \int_{0}^{\mathscr{D}} \frac{\mathrm{d}\langle \mu_{i} \rangle(t)}{\mu_{i}(t)} \ge \frac{1}{2} \sum_{i=1}^{d} \langle \mu_{i} \rangle(\mathscr{D}) \]

implies that \(\langle\mu_{i} \rangle(\mathscr{D})=0\) holds on the event \(\{ \tau< \infty\}\), for each \(i=1, \dots, d\). The existence of a deflator then yields \(\mu_{i} (t)=\mu_{i} (0)\) for all \(0 \le t \le\mathscr{D}\). As \(\mu(0) \in \varDelta^{d}_{+}\), this is impossible on \(\{ \tau< \infty\}\), where \(\mathscr{D} \le \tau < \infty\); hence \(\mathbb {P}[\tau< \infty]=0\).

Multiplicative generation, on the other hand, requires \(1/G(\mu)\) to be locally bounded. This holds for any regular function that is bounded away from zero, so let us consider \(H^{(c)} := H + c\) for some \(c>0\), as in [10]. According to (4.12), the multiplicatively generated strategy invests a number
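\[ \psi^{(c)}_{i}(t) = \Big( c + \log\big( 1/\mu_{i}(t) \big) \Big) \exp\left( \int_{0}^{t} \frac{\mathrm{d}\varGamma^{H}(s)}{c + H(\mu(s))} \right) \]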
of shares in each of the various assets at time \(t\), for \(i=1, \dots, d \). We can compute now the portfolio weights corresponding to these two strategies from (4.7) and (4.8), respectively, as
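\[ \pi_{i}(t) = \frac{\mu_{i}(t) \big( \varGamma^{H}(t) + \log(1/\mu_{i}(t)) \big)}{\varGamma^{H}(t) + H(\mu(t))}, \qquad \varPi^{(c)}_{i}(t) = \frac{\mu_{i}(t) \big( c + \log(1/\mu_{i}(t)) \big)}{c + H(\mu(t))}, \]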
with the previous understanding \(0 \log\infty = 0\). The process \({ \varPi }^{(c)} \) has been termed “entropy-weighted portfolio”; see, for example, [7, Chap. 3] or [10].
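We compute both portfolios from (4.7) and (4.8) with the choice \(D_{i}H(x) = \log(1/x_{i})\), which makes \(H\) balanced. Both then take the same form \(\mu_{i}\big(a + \log(1/\mu_{i})\big)/\big(a + H(\mu)\big)\), with \(a = \varGamma^{H}(t)\) for the additive portfolio and \(a = c\) for the entropy-weighted one. This observation and the Python helper below are our own illustration, and the numbers are hypothetical.

```python
import math


def entropy_type_weights(mu, a):
    """Weights mu_i (a + log(1/mu_i)) / (a + H(mu)); a = c (multiplicative) or a = Gamma^H(t) (additive)."""
    h = sum(m * math.log(1.0 / m) for m in mu if m > 0)
    return [m * (a + math.log(1.0 / m)) / (a + h) if m > 0 else 0.0 for m in mu]


mu = [0.6, 0.3, 0.1]
print(entropy_type_weights(mu, a=1.0))   # entropy-weighted portfolio with c = 1
print(entropy_type_weights(mu, a=50.0))  # a large a pushes the weights back towards mu
```

Because \(\varGamma^{H}\) is nondecreasing, the additively generated portfolio moves closer to the market weights as \(\varGamma^{H}(t)\) grows. The entropy-weighted portfolio instead keeps a fixed tilt towards small stocks that is governed by \(c\).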

Let us now consider the question of relative arbitrage. By definition, a trading strategy that strongly outperforms the market starts with wealth of one dollar; see (2.10). Hence we consider the Lyapunov function \(G = H/H(\mu(0))\) along with its nondecreasing cumulative earnings process \(\varGamma^{G} = \varGamma^{H} /H(\mu(0))\). Theorems 5.1 and 5.2 yield then the existence of such a strategy over the time horizon \([0,T]\) as long as we have, respectively,
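\[ \mathbb{P}\big[ \varGamma^{H}(T) > H(\mu(0)) \big] = 1 \qquad \text{and} \qquad \mathbb{P}\big[ \varGamma^{H}(T) > (1+\varepsilon)\, H(\mu(0)) \big] = 1, \]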
for some \(\varepsilon>0\). In the first case, this strong relative arbitrage is additively generated through the trading strategy \(\varphi /H(\mu(0))\); in the second, it is multiplicatively generated through the trading strategy \(\psi^{(c)} /(H(\mu(0))+c)\) for some sufficiently large \(c = c(T, \varepsilon)> 0\).

For example, if \(\mathbb {P}[ \varGamma^{H} (t) \ge\eta t , \forall t \geq0 ] =1\) holds for some real constant \(\eta>0\), strong relative arbitrage with respect to the market exists over any time horizon \([0,T]\) with \(T> H (\mu(0))/\eta\). It is worth noting that the additively generated strategy \(\varphi \) is the same for all these time horizons, whereas the multiplicatively generated strategy \(\psi^{(c)} \) needs the “offline” computation of the constant \(c = c(T, \varepsilon)> 0\) for each of those horizons separately.
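The horizon threshold \(H(\mu(0))/\eta\) is explicit. A short Python sketch with hypothetical values of \(\mu(0)\) and \(\eta\):

```python
import math


def entropy_horizon(mu0, eta):
    """Threshold H(mu(0)) / eta: given Gamma^H(t) >= eta t, arbitrage exists on [0, T] for T above it."""
    h0 = sum(m * math.log(1.0 / m) for m in mu0 if m > 0)
    return h0 / eta


print(entropy_horizon([0.25] * 4, eta=0.05))                # log(4) / 0.05, about 27.7
print(entropy_horizon([0.97, 0.01, 0.01, 0.01], eta=0.05))  # concentrated market, shorter horizon
```

Since \(H(\mu(0)) \le \log d\), the threshold never exceeds \(\log(d)/\eta\). It is smaller when the initial capital is concentrated in a few stocks.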

Remark 5.4

It has been a long-standing open problem, dating to [10], whether the validity of \(\mathbb {P}[ \varGamma^{H} (t) \ge\eta t , \forall t \geq0 ] =1\) for some real constant \(\eta>0\) can guarantee the existence of a strategy that implements relative arbitrage with respect to the market over any time horizon \([0,T]\), of arbitrary length \(T\in(0, \infty)\). For explicit examples showing that this is not possible in general, see the companion paper [11].

Example 5.5

Fix for the moment a constant \(c \in \mathbb {R}\) and consider, in the manner of [7, Example 3.3.3], the quadratic function
\[ Q^{(c)}(x) := c - \sum_{i=1}^{d} x_{i}^{2}, \qquad x \in \varDelta^{d}, \]
with values in \([ c-1, c- 1/d]\). The term \(\sum_{i=1}^{d} \mu_{i}^{2} \) is the weighted average capitalization of the market and may be used to quantify the concentration of capital in a market.

Clearly, \(Q^{(c)}\) is concave and Theorem 3.7(ii), or alternatively Example 3.6, yields that \(Q^{(c)}\) is a Lyapunov function for \(\mu\), without any additional assumption. The nondecreasing process of (3.2) is
\[ \varGamma^{Q^{(c)}}(t) = \sum_{i=1}^{d} \langle \mu_{i} \rangle(t), \qquad t \ge 0, \]
in this case, and the additively generated strategy \(\varphi^{(c)} \) of (4.5) is given by
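\[ \varphi^{(c)}_{i}(t) = c - 2\mu_{i}(t) + \sum_{j=1}^{d} \mu_{j}^{2}(t) + \sum_{j=1}^{d} \langle \mu_{j} \rangle(t), \qquad i = 1, \dots, d,\ t \ge 0. \]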
If \(c>1\), then \(Q^{(c)} \ge c-1 > 0\), so the multiplicatively generated strategy \(\psi^{(c)} \) of (4.12) is well defined; it is given for \(i=1, \dots, d\) by
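\[ \psi^{(c)}_{i}(t) = \Big( c - 2\mu_{i}(t) + \sum_{j=1}^{d} \mu_{j}^{2}(t) \Big) \exp\left( \int_{0}^{t} \frac{\sum_{j=1}^{d} \mathrm{d}\langle \mu_{j} \rangle(s)}{c - \sum_{j=1}^{d} \mu_{j}^{2}(s)} \right), \qquad t \ge 0. \]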
Since \(Q^{(1)} \geq0\), we obtain as in Example 5.3 that the condition
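\[ \mathbb{P}\left[ \sum_{i=1}^{d} \langle \mu_{i} \rangle(T) > Q^{(1)}(\mu(0)) \right] = 1 \tag{5.4} \]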
yields a strategy which is strong relative arbitrage with respect to the market on \([0,T]\), and is additively generated by the function \(Q^{(1)}/Q^{(1)}(\mu(0))\). Moreover, the requirement
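\[ \mathbb{P}\left[ \sum_{i=1}^{d} \langle \mu_{i} \rangle(T) > (1+\varepsilon)\, Q^{(1)}(\mu(0)) \right] = 1 \tag{5.5} \]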
for some \(\varepsilon>0\) yields a strategy which is strong relative arbitrage with respect to the market on \([0,T]\), and is multiplicatively generated by the function \(Q^{(c)}/Q^{(c)}(\mu(0))\) for some sufficiently large \(c >1\).
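A discrete-time simulation makes the master equation (4.3) concrete for \(Q^{(c)}\). The Python sketch below is a hypothetical illustration. Capitalizations follow driftless log-normal random-walk steps, a model of our choosing rather than the paper's. Over each period the strategy holds \(D_{i}Q^{(c)}(\mu) + w\) shares as in (4.5), and \(\mathrm{d}\langle \mu_{i} \rangle\) is replaced by squared increments. For this quadratic function the discrete analogue of (4.3) holds exactly, because \(Q^{(c)}(\mu + \Delta) - Q^{(c)}(\mu) = -2\sum_{i}\mu_{i}\Delta_{i} - \sum_{i}\Delta_{i}^{2}\) and \(\sum_{i}\Delta_{i} = 0\).

```python
import math
import random


def simulate_quadratic_strategy(d=5, steps=2000, c=1.0, vol=0.01, seed=0):
    """Return (V, Q^(c)(mu), realized sum_i <mu_i>) at the end of a simulated market path."""
    rng = random.Random(seed)
    log_caps = [0.0] * d  # hypothetical log-capitalizations, all equal at time zero

    def weights(lc):
        caps = [math.exp(v) for v in lc]
        total = sum(caps)
        return [v / total for v in caps]

    def q(x):
        return c - sum(v * v for v in x)

    mu = weights(log_caps)
    value = q(mu)  # V(0) = Q^(c)(mu(0)), since the earnings start at zero
    gamma = 0.0    # realized analogue of sum_i <mu_i>
    for _ in range(steps):
        grad = [-2.0 * m for m in mu]                      # D_i Q^(c)(x) = -2 x_i
        w = value - sum(m * g for m, g in zip(mu, grad))  # leftover wealth w
        shares = [g + w for g in grad]                     # holdings over the next period
        log_caps = [v + vol * rng.gauss(0.0, 1.0) for v in log_caps]
        new_mu = weights(log_caps)
        # Self-financing: wealth changes only through the moves of the market weights.
        value += sum(s * (b - a) for s, a, b in zip(shares, mu, new_mu))
        gamma += sum((b - a) ** 2 for a, b in zip(mu, new_mu))
        mu = new_mu
    return value, q(mu), gamma


v, q_final, gamma = simulate_quadratic_strategy()
print(v, q_final + gamma)  # agree up to floating-point rounding
```

The realized term `gamma` can only grow along the path. Conditions such as (5.4) and (5.6) ask that this growth eventually exceed \(Q^{(1)}(\mu(0))\).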

For example, if \(\mathbb {P}[ \sum_{i=1}^{d} \langle\mu_{i} \rangle( t ) \ge\eta t , \forall t \geq0 ]=1\), there exist both additively and multiplicatively generated strong relative arbitrage with respect to the market over any time horizon \([0,T]\) with
\[ T > \frac{Q^{(1)}(\mu(0))}{\eta} = \frac{1 - \sum_{i=1}^{d} \mu_{i}^{2}(0)}{\eta}. \tag{5.6} \]
Let us assume now that the market is diverse, namely, that we have
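\[ \mathbb{P}\left[ \max_{1 \le i \le d} \mu_{i}(t) < 1 - \delta, \ \forall\, t \ge 0 \right] = 1 \]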
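for some constant \(\delta\in(0,1/2)\). For every \(x \in \varDelta^{d}\) with \(\max_{i} x_{i} \le 1-\delta\), we have \(\sum_{i=1}^{d} x_{i}^{2} \le (1-\delta)^{2} + \delta^{2} = 1 - 2\delta(1-\delta)\). The bound \(Q^{(c)}(\mu) \geq c-1 + 2\delta(1-\delta)\) therefore holds. Thus, in particular, \(Q^{(1-2\delta (1-\delta))} \geq0\) and we may replace \(Q^{(1)}\) in (5.4) and (5.5) by \(Q^{(1-2\delta(1-\delta))}\). This in turn allows us to replace the bound in (5.6) by the improved bound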
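\[ T > \frac{Q^{(1-2\delta(1-\delta))}(\mu(0))}{\eta} = \frac{1 - 2\delta(1-\delta) - \sum_{i=1}^{d} \mu_{i}^{2}(0)}{\eta}. \]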
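Both horizon bounds are explicit. We read (5.6) as \(T > Q^{(1)}(\mu(0))/\eta = \big(1 - \sum_{i}\mu_{i}^{2}(0)\big)/\eta\) and the improved bound under diversity as \(T > Q^{(1-2\delta(1-\delta))}(\mu(0))/\eta\). A short Python sketch with hypothetical numbers compares the two:

```python
def quadratic_horizons(mu0, eta, delta):
    """Thresholds Q^(1)(mu(0)) / eta and Q^(1 - 2 delta (1 - delta))(mu(0)) / eta."""
    if not (0.0 < delta < 0.5 and max(mu0) < 1.0 - delta):
        raise ValueError("need 0 < delta < 1/2 and an initial configuration respecting diversity")
    s = sum(m * m for m in mu0)
    basic = (1.0 - s) / eta
    improved = (1.0 - 2.0 * delta * (1.0 - delta) - s) / eta
    return basic, improved


print(quadratic_horizons([0.4, 0.3, 0.2, 0.1], eta=0.01, delta=0.4))  # about (70, 22)
```

The improvement is the fixed amount \(2\delta(1-\delta)/\eta\), which is largest when \(\delta\) is close to \(1/2\).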
Finally, we remark for future reference that the modification
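\[ Q^{\star}(x) := 1 - \frac{1}{2} \sum_{i=1}^{d} \left( x_{i} - \frac{1}{d} \right)^{2}, \qquad x \in \varDelta^{d}, \tag{5.7} \]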
of the above quadratic function satisfies \(Q^{\star}= Q^{(2 + 1/d)}/2\).
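The identity \(Q^{\star}= Q^{(2 + 1/d)}/2\) is a one-line computation. We assume (5.7) reads \(Q^{\star}(x) = 1 - \frac{1}{2}\sum_{i}(x_{i} - 1/d)^{2}\), which is our reading and is consistent with the stated identity. Using \(\sum_{i} x_{i} = 1\) on the simplex:

```latex
\sum_{i=1}^{d}\Big(x_{i}-\frac{1}{d}\Big)^{2}
  = \sum_{i=1}^{d}x_{i}^{2}-\frac{2}{d}\sum_{i=1}^{d}x_{i}+\frac{1}{d}
  = \sum_{i=1}^{d}x_{i}^{2}-\frac{1}{d},
\qquad\text{so}\qquad
1-\frac{1}{2}\sum_{i=1}^{d}\Big(x_{i}-\frac{1}{d}\Big)^{2}
  = \frac{1}{2}\Big(2+\frac{1}{d}-\sum_{i=1}^{d}x_{i}^{2}\Big)
  = \frac{1}{2}\,Q^{(2+1/d)}(x).
```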

6 Further examples

In this section, we collect several examples, illustrating a variety of Lyapunov functions and the trading strategies these functions generate. Unlike their counterparts in Examples 5.3 and 5.5, the regular functions considered in this section are not twice differentiable; as a result, their corresponding earnings processes have components which are typically singular with respect to Lebesgue measure, and are expressed in terms of local times.

Example 6.1

Let us revisit [7, Example 4.2.2] in our context. We consider the Gini function

this is concave on \({ \varDelta }^{d}\), and a Lyapunov function by Theorem 3.7(ii). It is used widely as a measure of inequality; the quadratic function of (5.7) can be seen as its “smoothed version.” For this Gini function and with the help of the Itô–Tanaka formula, the processes of (3.1) and (3.2) take the form

respectively. Here \(\varLambda_{i} \) stands for the local time accumulated by the process \(\mu_{i} \) at the point \(1/d\), and “sgn” for the left-continuous version of the sign function. It is fairly easy to write down the strategies of (4.5) and (4.12) generated by this function. It is harder, though, to posit a condition of the type (5.1), as the sum of local times \(\sum _{i=1}^{d} \varLambda_{i} \) does not typically admit a strictly positive lower bound.

We now present examples of functional generation of trading strategies based on ranks.

Example 6.2

In this example, we construct a capitalization-weighted portfolio of large stocks. Let us recall the notation of (3.7), fix an integer \(m\in\{ 1, \dots, d-1\}\) and consider, in the manner of [7, Example 4.3.2], the function \(\mathbf{ G}^{L}:\mathbb {W}^{d} \rightarrow (0,\infty)\) given by
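\[ \mathbf{G}^{L}(y) := \sum_{\ell=1}^{m} y_{\ell}, \qquad y = (y_{1}, \dots, y_{d})' \in \mathbb{W}^{d}. \]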
If \(m=1\), we are exactly in the setup of Example 3.10. The function \(G^{L} := \mathbf{ G}^{L} \circ\mathfrak {R}\) in the notation of (3.9) is regular, thanks to Theorem 3.8 or, alternatively, Example 3.9. In the notation of that example, the corresponding function \(DG^{L}\) can be computed by (3.11) as

for all \(x \in \varDelta ^{d}\) and \(i = 1, \dots, d\). Thanks to (3.12), the process \(\varGamma^{G^{L}} \) is given by

Here the second equality swaps the summation in the first term, relabels the indices, and uses the fact that \(N_{\ell}(\mu ) = N_{k}(\mu )\) holds on the support of the collision local time \(\varLambda^{(\ell,k)} \), for each \(1 \le\ell < k \le d\). The last equality uses the fact that \(N_{\ell}(\mu ) = N_{m}(\mu)\) holds on the support of \(\varLambda^{(\ell ,k)} \), for each \(\ell= 1, \dots, m\) and \(k = m+1, \dots, d\).

If there are no triple points at all, that is, if \(\mu_{(\ell)} - \mu _{(\ell+2)} > 0\) holds for all \(\ell= 1, \dots, d-2\), then \(N_{m}(\mu)\) takes values in \(\{1,2\}\) and we get

Thus, unless \(\varLambda^{(m,m+1)}\equiv0\), the function \(G^{L}\) is regular but not Lyapunov.

For the additively generated strategy \(\varphi(\cdot)\) in (4.5), we get

and for the multiplicatively generated strategy \(\psi(\cdot)\) in (4.12), we have

Hence, provided \(\varGamma^{G^{L}} \) is not identically equal to zero, the additively generated strategy holds positions in all assets, possibly short ones. The multiplicatively generated strategy, by contrast, only invests in the \(m\) largest stocks. Whereas the wealth \(G^{L} (\mu) +\varGamma^{G^{L}}\) of the additively generated strategy might become negative, the multiplicatively generated strategy always yields strictly positive wealth; see (4.11). Thus, we may express the multiplicatively generated strategy \(\psi \) in terms of proportions

as in (4.8). We note that this trading strategy only invests in the \(m\) largest stocks, and in proportion to each of these stocks’ capitalization – apart from the times when several stocks share the \(m\)-th position, in which case the corresponding capital is uniformly distributed over these stocks.
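The weights \(\varPi_{i}\) of this example can be computed directly from the ranked market weights. The Python sketch below is our own illustration. Its tie rule implements our reading of the last sentence: when several stocks share the \(m\)-th position, the capital of the top-\(m\) slots they occupy is split equally among all of them. Exact floating-point ties stand in for the idealized collisions of the model.

```python
def large_stock_weights(mu, m):
    """Weights Pi_i generated multiplicatively by G^L = sum of the m largest market weights."""
    d = len(mu)
    if not 1 <= m <= d - 1:
        raise ValueError("m must lie in {1, ..., d-1}")
    ranked = sorted(mu, reverse=True)
    threshold = ranked[m - 1]                 # value at the m-th position
    above = [x for x in mu if x > threshold]  # strictly inside the top m
    tied = [x for x in mu if x == threshold]  # stocks sharing the m-th position
    slots = m - len(above)                    # top-m positions occupied by tied stocks
    g_large = sum(ranked[:m])                 # G^L(mu)
    weights = []
    for x in mu:
        if x > threshold:
            weights.append(x / g_large)
        elif x == threshold:
            # the capital slots * threshold is split equally over all tied stocks
            weights.append(slots * threshold / (len(tied) * g_large))
        else:
            weights.append(0.0)
    return weights


print(large_stock_weights([0.1, 0.4, 0.2, 0.3], m=2))  # only the two largest stocks are held
print(large_stock_weights([0.4, 0.2, 0.2, 0.2], m=2))  # three stocks tie for the 2nd position
```

In the tied case, the largest stock keeps weight \(2/3\), and each of the three tied stocks receives weight \(1/9\).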

In the context of the present example, we might think of \(d= {7{,}500}\), as in the entire US market, and of \(m=500\), as in the S&P 500. Alternatively, we might consider \(m=1\), when we are adamant about investing only in the market’s biggest company. The nonincreasing process \(\varGamma^{G^{L}} \) captures the “leakage” that such a trading strategy suffers every time it has to sell – at a loss – a stock that has dropped out of the higher capitalization index and been relegated to the “minor (capitalization) leagues.”

Example 6.3

Instead of large stocks as in Example 6.2, we now consider a portfolio consisting of stocks with small capitalization. With the notation recalled in the previous example, we fix again an integer \(m\in\{ 1, \dots, d -1\}\) and consider the function \(\mathbf{ G}^{S}:\mathbb {W}^{d} \rightarrow(0,\infty)\) given by
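\[ \mathbf{G}^{S}(y) := \sum_{\ell=m+1}^{d} y_{\ell}, \qquad y = (y_{1}, \dots, y_{d})' \in \mathbb{W}^{d}. \]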
The function \(G^{S} := \mathbf{ G}^{S} \circ\mathfrak {R}\) is regular. Exactly as above, we compute

Thus, \(G^{S}\) is not only regular, but also a Lyapunov function. The nondecreasing process \(\varGamma^{G^{S}} \) records the cumulative earnings of the additively generated strategy. Whenever this strategy sells a stock, it sells it at a profit – the stock has been promoted to the “major (capitalization) leagues.”

It is easy to see again that the additively generated strategy invests in all assets, provided that \(\varGamma^{G^{S}} \) is not identically equal to zero, and that the multiplicatively generated strategy only invests in the \(d-m\) smallest stocks.
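A companion Python sketch for the small-stock weights follows. By analogy with Example 6.2, it assumes that stocks tied at position \(m+1\) share equally the capital of the bottom slots they occupy. This tie rule, like the function name, is our own illustration.

```python
def small_stock_weights(mu, m):
    """Weights Pi_i generated multiplicatively by G^S = sum of the d - m smallest market weights."""
    d = len(mu)
    if not 1 <= m <= d - 1:
        raise ValueError("m must lie in {1, ..., d-1}")
    ranked = sorted(mu, reverse=True)
    threshold = ranked[m]                     # value at position m + 1
    below = [x for x in mu if x < threshold]  # strictly inside the d - m smallest
    tied = [x for x in mu if x == threshold]  # stocks sharing position m + 1
    slots = (d - m) - len(below)              # bottom positions occupied by tied stocks
    g_small = sum(ranked[m:])                 # G^S(mu)
    if g_small <= 0:
        raise ValueError("G^S(mu) must be strictly positive")
    weights = []
    for x in mu:
        if x < threshold:
            weights.append(x / g_small)
        elif x == threshold:
            weights.append(slots * threshold / (len(tied) * g_small))
        else:
            weights.append(0.0)
    return weights


print(small_stock_weights([0.4, 0.3, 0.2, 0.1], m=2))
print(small_stock_weights([0.4, 0.4, 0.1, 0.1], m=1))
```

In the second call, the two tied leaders share one small-cap slot and receive weight \(1/3\) each, while the two smallest stocks receive \(1/6\) each.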

Example 6.4

Under the setup of Example 6.3, consider more generally a function \(\boldsymbol{ H} : [0,1]^{d-m} \to \mathbb {R}\) that is regular for the truncated vector process of ranked market weights \((\mu_{(m+1)} , \dots, \mu_{(d)} )'\), for example, twice continuously differentiable. Then it is clear that the function \(\mathbf{ G} :\mathbb {W}^{d} \rightarrow \mathbb {R}\) given by
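\[ \mathbf{G}(y) := \boldsymbol{H}(y_{m+1}, \dots, y_{d}), \qquad y = (y_{1}, \dots, y_{d})' \in \mathbb{W}^{d}, \]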
is regular for the full vector process of ranked market weights \(\boldsymbol{\mu}= (\mu_{(1)} , \dots, \mu_{(d)} )'\) with \(\varGamma^{\boldsymbol {G}} = \varGamma^{\boldsymbol {H}} \), \(D_{\ell}\boldsymbol {G} = 0\) for all \(\ell= 1, \dots, m\), and \(D_{\ell}\boldsymbol {G} = D_{\ell}\boldsymbol {H}\) for all \(\ell= m+1, \dots, d\). Here we write \(D \boldsymbol {H} = {(D_{m+1} \boldsymbol {H}, \dots, D_{d} \boldsymbol {H})}^{\prime}\) in Definition 3.1. Hence, by Theorem 3.8, the function \(G : = \boldsymbol {G} \circ \mathfrak {R} : \varDelta ^{d} \to \mathbb {R}\), that is,
\[ G(x) = \boldsymbol{H}\big( x_{(m+1)}, \dots, x_{(d)} \big), \qquad x \in \varDelta^{d}, \]
is also regular for the vector process \(\mu\) of market weights. As in (3.12) of Example 3.9, we obtain

Let us now assume that \(\boldsymbol {H}\) is concave, differentiable and invariant under permutations of its variables; that is, \(G\) is a symmetric function of the \(d-m\) smallest components of its argument. Then we may assume that for every \(x \in[0,1]^{d-m}\) with \(x_{k} = x_{\ell}\), we have \(D_{k} \boldsymbol {H}(x) = D_{\ell}\boldsymbol {H}(x)\) for all \(m+1\le\ell,k \le d\); in particular, we get \(D_{k} \boldsymbol {G} ( \boldsymbol{\mu}) = D_{\ell}\boldsymbol {G} ( \boldsymbol{\mu})\) on the support of \(\varLambda^{(\ell ,k)} \) for each \(k= \ell+1, \dots, d\) and \(\ell=m+1, \dots, d\). Since also \(D_{\ell}\boldsymbol {G} = 0\) for each \(\ell= 1, \dots, m\), we now have

The function \(\boldsymbol {H}\) is assumed to be concave, so this finite-variation process is nondecreasing; thus \(G\) is a Lyapunov function. Assume \(\boldsymbol {H}\) is nonnegative and \(G(\mu(0)) > 0\). Applied to \(G/G(\mu(0))\), Theorem 5.1 then shows that, for a given \(T>0\), strong relative arbitrage exists with respect to the market over the horizon \([0,T]\), provided that \(\mathbb {P}[\varGamma^{\boldsymbol {H}}(T) > G ( { \mu} (0) )] = 1\). For example, if \(\boldsymbol {H}\) is twice differentiable, we have

Section 4 in [29] develops in detail a special case of such a construction, for a multiplicatively generated trading strategy.

7 Concave transformations of semimartingales

Consider a function \(G: \varDelta ^{d} \to \mathbb {R}\). The superdifferential of \(G\) at some point \(x \in \varDelta ^{d} \), denoted by \(\partial G(x)\), is the set of all “supergradients” at that point, namely, the set of vectors \(\xi \in \mathbb {R}^{d}\) such that
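\[ G(y) \le G(x) + \sum_{i=1}^{d} \xi_{i} \, (y_{i} - x_{i}) \qquad \text{for all } y \in \varDelta^{d}. \tag{7.1} \]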
If \(G\) is concave, we have \(\partial G(x) \neq\emptyset\) for all \(x \in \varDelta ^{d}_{+}\).
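The defining inequality of a supergradient, \(G(y) \le G(x) + \sum_{i}\xi_{i}(y_{i} - x_{i})\) for all \(y \in \varDelta^{d}\), is easy to test numerically on a sample of points. The Python sketch below is our illustration, with hypothetical helper names. It runs the test for the Gibbs entropy \(H\) of Example 5.3 at an interior point, where the gradient is a supergradient. It also shows that adding a constant to all components of \(\xi\) changes nothing on the simplex, which is one reason why \(\mathit{DG}\) need not be unique.

```python
import math
import random


def entropy(x):
    # Gibbs entropy H(x) = sum_i x_i log(1/x_i), with 0 log(inf) = 0
    return sum(v * math.log(1.0 / v) for v in x if v > 0)


def is_supergradient(G, xi, x, ys, tol=1e-12):
    """Check G(y) <= G(x) + <xi, y - x> on the sample ys: evidence, not a proof, when it passes."""
    gx = G(x)
    return all(G(y) <= gx + sum(a * (b - c) for a, b, c in zip(xi, y, x)) + tol for y in ys)


def random_simplex_point(rng, d):
    # Normalized independent exponentials are uniformly distributed on the simplex.
    e = [rng.expovariate(1.0) for _ in range(d)]
    s = sum(e)
    return [v / s for v in e]


rng = random.Random(1)
x = [0.5, 0.3, 0.2]
grad = [math.log(1.0 / v) - 1.0 for v in x]  # gradient of H at the interior point x
ys = [random_simplex_point(rng, 3) for _ in range(1000)] + [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(is_supergradient(entropy, grad, x, ys))
```

For the entropy, the check reduces to Gibbs' inequality \(H(y) \le -\sum_{i} y_{i}\log x_{i}\). A perturbation such as \(\xi + (3,-3,0)\), which is not a constant shift, already fails at the vertex \(y = (0,1,0)\).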

7.1 The proofs of Theorems 3.7 and 3.8 and of Proposition 4.5

Proof of Theorem 3.7

We proceed in three steps.

Step 1: We find it useful to identify the set \({ \varDelta }^{d}_{+} \subset \mathbb {R}^{d}_{+}\) of (2.3) with the set
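\[ \varDelta^{d}_{\star+} := \left\{ (x_{1}, \dots, x_{d-1})' \in (0,1)^{d-1} : \sum_{i=1}^{d-1} x_{i} < 1 \right\}. \tag{7.2} \]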
The identification is based on the one-to-one “projection operator” \(\mathfrak {P}\), namely, the mapping \({ \varDelta }^{d}_{e} \ni(x_{1}, \dots, x_{d} ) \mapsto (x_{1}, \dots, x_{d-1} )\in \mathbb {R}^{d-1}\) with the notation of (3.6). In this manner, a real-valued function \(G\) on \({ \varDelta }^{d}_{+}\) or on \({ \varDelta }^{d}_{e}\) is identified with the function \(G_{\star}= G \circ \mathfrak {P}^{-1}\) on \({ \varDelta }^{d}_{\star+}\) or on \(\mathbb {R}^{d-1}\), respectively, and vice versa. Note that \(G\) is concave on \({ \varDelta }^{d}_{+}\) or on \({ \varDelta }^{d}_{e}\) if and only if \(G_{\star}\) is concave on \({ \varDelta }^{d}_{\star+} \) or on \(\mathbb {R}^{d-1}\), respectively.

Step 2: Let us start by imposing either condition (i) or (ii). We recall from Theorem 10.4 in [24] (see also [30] as well as [23]) that the concave function \(G_{\star}= G \circ \mathfrak {P}^{-1}\) is locally Lipschitz on the open set \({ \varDelta }^{d}_{\star+}\) of (7.2) or on \(\mathbb {R}^{d-1}\), respectively. Theorem VI.8 in [19], along with [6, Remark VII.34(a)], now yields that the process \(G(\mu)\) is a semimartingale.

We let \(\mathit{DG} = ( D_{1} G, \dots, D_{d} G)' : { \varDelta }^{d} \rightarrow \mathbb {R}^{d}\) denote any measurable “supergradient” of \(G\), that is, \(\mathit{DG}\) is measurable and satisfies \(D G(x) \in\partial G (x)\) for all \(x \in{ \varDelta }^{d}_{+}\) in Theorem 3.7(i), and for all \(x \in{ \varDelta }^{d}_{e}\) in Theorem 3.7(ii); such a \(\mathit{DG}\) exists thanks to [3]. The Itô-type formula implicit in (3.4), namely
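\[ G\big(\mu(t)\big) = G\big(\mu(0)\big) + \sum_{i=1}^{d} \int_{0}^{t} D_{i}G\big(\mu(s)\big)\, \mathrm{d}\mu_{i}(s) - \varGamma^{G}(t), \qquad t \ge 0, \tag{7.3} \]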
with a continuous, adapted and nondecreasing process \(\varGamma^{G} \), is established as in [3, 4]; see also [13] for an alternative treatment, and [1] for the special case where \(G\) is once continuously differentiable. With the obvious notation \(\mathit{DG}^{\star}\), we use here for the process \(\mu^{\star}= {( \mu_{1} , \dots, \mu_{d-1} )}^{\prime}\) the identity of stochastic integration

Step 3: We place ourselves now under the assumptions of (iii). For simplicity, we assume here \(\mathrm{supp\,} (\mu) = \varDelta ^{d}\); the general case follows in exactly the same manner. We recall the stopping time \(\mathscr {D}\) in (2.15) and note that any component \(\mu_{i} \) with \(\mu_{i}(\mathscr {D}) = 0\) is absorbed at the origin, i.e., \(\mu_{i}(\mathscr {D}+t) = 0\) holds for all \(t \geq0\) on the event \(\{ \mathscr {D} < \infty\}\) (see Sect. 2.4). We use the notation
\[ \mathfrak{m} := \sum_{i=1}^{d} \mathbf{1}_{\{ \mu_{i}(\mathscr{D}) > 0 \}} \tag{7.4} \]
for the \(\mathscr {F}(\mathscr {D})\)-measurable random variable that records the number of assets which have not been absorbed by time \(\mathscr {D}\), namely, the number of all indices \(i \in\{1, \dots, d\}\) such that \(\mu_{i}(\mathscr {D})>0\).

Assume we have shown that

Then, after time \(\mathscr {D}\), the process \(G( \mu )\) can be identified with \(\widetilde{G}(\widetilde{\mu})\). Here the process \(\widetilde{\mu} \) takes values in \({ \varDelta }^{ \mathfrak {m}}\), with \(\mathfrak {m}\) the random variable of (7.4), and \(\widetilde{G}\) is a concave function on \({ \varDelta }^{ \mathfrak {m}}\). An iteration of the argument then yields the statement, since the Itô-type formula in (7.3) follows again exactly as in Step 2. Indeed, as above, \(\mathit{DG}\) may denote any measurable supergradient of \(G\) on \(\varDelta ^{d}_{+}\). On \(\varDelta ^{d} \setminus \varDelta ^{d}_{+}\), the concave function \(G\) can be identified with a concave function \(\widehat{G}\) on \(\varDelta ^{n}\) for some \(n < d\). Thus, for each \(x \in \varDelta ^{d} \setminus \varDelta ^{d}_{+}\) and \(i = 1, \dots, d\), we can choose the \(i\)th component of \(\mathit{DG}(x)\) as follows. If \(x_{i} \in\{0,1\}\), we set it to zero, although any other number would work as well. If \(x_{i} \in(0,1)\), we set it to the corresponding component of a supergradient of \(\widehat{G}\).

We still need to justify the claim in (7.5). Since \(G\) is continuous and thus bounded on the compact set \(\varDelta ^{d}\), we may assume without loss of generality that \(G\) is nonnegative. Let \(Z \) denote a deflator for the vector process \(\mu \). Next, we introduce the increasing sequence of stopping times

with \(\lim_{n \uparrow\infty} \mathscr {S}_{n} = \mathscr {D}\). As in Remark 3.4, the process \(Z( \cdot\wedge\, \mathscr {S}_{n}) G( \mu( \cdot\wedge\mathscr {S}_{n}) )\) is a local supermartingale for each \(n \in \mathbb {N}\), and thus \(( Z(t) G ( \mu( t ) ))_{0 \le t <\mathscr {D}}\) is a local supermartingale bounded from below. The supermartingale convergence theorem (see [18, Lemma 4.14]) yields that \(Z ( \cdot \wedge\mathscr {D}) G ( \mu( \cdot \wedge\mathscr {D}) ) \) is also a local supermartingale. From this, and from the fact that the reciprocal \(1/Z(\cdot\wedge \mathscr {D})\) is a semimartingale, the claim in (7.5) follows. □
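The projection operator \(\mathfrak {P}\) of Step 1 and the identification \(G_{\star}= G \circ \mathfrak {P}^{-1}\) are straightforward to code. The Python sketch below assumes that \({ \varDelta }^{d}_{e}\) in (3.6) denotes the hyperplane \(\{x \in \mathbb{R}^{d} : \sum_{i} x_{i} = 1\}\). Under that assumption, \(\mathfrak {P}^{-1}\) restores the last coordinate as one minus the sum of the others. The function names are ours.

```python
def project(x):
    """P: (x_1, ..., x_d) -> (x_1, ..., x_{d-1})."""
    return tuple(x[:-1])


def unproject(y):
    """P^{-1}: (x_1, ..., x_{d-1}) -> (x_1, ..., x_{d-1}, 1 - x_1 - ... - x_{d-1})."""
    return tuple(y) + (1.0 - sum(y),)


def star(G):
    """G_star = G o P^{-1}, a function of d - 1 unconstrained coordinates."""
    return lambda y: G(unproject(y))


def quadratic(x):
    # Q^(1)(x) = 1 - sum_i x_i^2, a concave test function
    return 1.0 - sum(v * v for v in x)


x = (0.5, 0.3, 0.2)
print(project(x), star(quadratic)(project(x)), quadratic(x))
```

Because \(\mathfrak {P}^{-1}\) is affine, composing with it preserves concavity, which is the equivalence used in Step 1.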

The proof of Theorem 3.7 shows that, under the conditions of that theorem, every continuous concave function \(G\) is regular, and that the \(\mathit{DG}\) in the corresponding Itô formula (3.2) may be chosen (at least on the set \(\varDelta ^{d}_{+}\)) to be a measurable supergradient of \(G\). This observation also motivates the following question.

Remark 7.1

Assume that a function \(G\) is regular and weakly differentiable with gradient \(\widetilde{\mathit{DG}}\). Is it then possible to choose \(\mathit{DG} = \widetilde{\mathit{DG}}\) in (3.1) and (3.2)?

The answer is, of course, affirmative if the function \(G\) is actually twice continuously differentiable, as in Example 3.6. It is also affirmative if \(G\) is concave, thanks to [3].
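For orientation, the smooth case can be written out explicitly. The following is a sketch under assumptions of our own: \(G\) is of class \(C^{3}\) on a neighborhood of \(\varDelta^{d}\) (so that each \(\vartheta_{i}\) is itself a semimartingale), \(\mathit{DG}\) is the gradient of \(G\), \(\vartheta_{i} = D_{i}G(\mu)\), and \(\varGamma^{G}\) carries the sign under which it is nondecreasing for concave \(G\), as used in the proof of Proposition 4.5. Itô's rule then gives

\[
\begin{aligned}
G(\mu(T)) &= G(\mu(0)) + \sum_{i=1}^{d} \int_{0}^{T} D_{i}G(\mu(t))\,\mathrm{d}\mu_{i}(t) - \varGamma^{G}(T),\\
\varGamma^{G}(T) &= -\frac{1}{2} \sum_{i=1}^{d} \sum_{j=1}^{d} \int_{0}^{T} D^{2}_{ij}G(\mu(t))\,\mathrm{d}\langle \mu_{i}, \mu_{j} \rangle(t),\\
[\vartheta_{i}, \mu_{i}](T) &= \sum_{j=1}^{d} \int_{0}^{T} D^{2}_{ij}G(\mu(t))\,\mathrm{d}\langle \mu_{i}, \mu_{j} \rangle(t), \qquad \text{so} \qquad -2\,\varGamma^{G}(T) = \sum_{i=1}^{d} [\vartheta_{i}, \mu_{i}](T).
\end{aligned}
\]

Since the Hessian of a concave function is negative semidefinite, the double sum is nonincreasing and \(\varGamma^{G}\) is nondecreasing. The last identity is consistent with the covariation representation discussed in Remark 7.2.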

Concerning a representation of the finite-variation process \(\varGamma^{G} \), the proof of Theorem 3.7 does not yield much insight (the arguments in [3, 4, 13] yield a representation of \(\varGamma^{G} \) as a limit of mollified second-order terms). This leads to a further question.

Remark 7.2

In the context of Theorem 3.7, we conjecture that under appropriate weak conditions, the process \(- 2 \varGamma^{G} \) of (3.2) can be written as the sum of the covariations of the processes \({ \vartheta}_{i} \) as in (3.1) and \(\mu_{j} \) as in (2.2), namely,

whenever the limits below exist in probability, for all \(T \geq0\) and \(1 \le i,j \le d\):

Here \(( \mathbb{D}^{(N)})_{N \in \mathbb {N}}\) is a sequence of partitions of \([0, \infty)\) of the form

with \(\mathbb{D}^{(N+1)} \) a refinement of \(\mathbb{D}^{(N)}\) and with mesh \(\Vert\mathbb{D}^{(N)} \Vert= \max_{n \in \mathbb {N}_{0}} | t^{(N)}_{n+1} - t^{(N)}_{n }|\) decreasing to zero as \(N \uparrow\infty\). The representation (7.6) is valid in the “smooth” case of Example 3.6; see [22, Theorem V.20].
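The sums whose limits define the brackets can be computed directly on a given partition. The sketch below is ours, not the paper's: it uses the dyadic partitions \(t^{(N)}_{n} = n 2^{-N}\), which form one admissible sequence of refining partitions with mesh decreasing to zero, truncates them at a finite horizon (harmless, since all increments are stopped at \(T\)), and evaluates the sum for a simulated Brownian path, where the limit is \([W,W](1) = 1\), and for two smooth deterministic paths, where the limit is zero. The helper names `dyadic_partition` and `discrete_bracket` are hypothetical.

```python
import math
import random


def dyadic_partition(N, horizon):
    # Points k * 2^-N in [0, horizon]; level N+1 refines level N, mesh 2^-N -> 0.
    step = 2.0 ** (-N)
    return [k * step for k in range(int(horizon / step) + 1)]


def discrete_bracket(x, y, T, times):
    # sum_n (x(min(t_{n+1}, T)) - x(min(t_n, T))) * (y(min(t_{n+1}, T)) - y(min(t_n, T)))
    total = 0.0
    for t0, t1 in zip(times, times[1:]):
        a, b = min(t0, T), min(t1, T)
        total += (x(b) - x(a)) * (y(b) - y(a))
    return total


# A simulated Brownian path on the dyadic grid of level 16 over [0, 1].
N_FINE = 16
h = 2.0 ** (-N_FINE)
rng = random.Random(42)
w = [0.0]
for _ in range(2 ** N_FINE):
    w.append(w[-1] + rng.gauss(0.0, math.sqrt(h)))


def W(t):
    # Valid for dyadic t of level <= N_FINE in [0, 1].
    return w[int(round(t / h))]


qv_bm = discrete_bracket(W, W, 1.0, dyadic_partition(N_FINE, 1.0))
cov_smooth = discrete_bracket(math.sin, lambda t: t * t, 1.0, dyadic_partition(12, 1.0))
print(f"[W, W](1) approx {qv_bm:.4f}; smooth paths give {cov_smooth:.2e}")
```

For the smooth pair the sum is of order of the mesh, whereas for the Brownian path it fluctuates around 1 with standard deviation of order \(\sqrt{2 \cdot 2^{-N}}\).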

The representation (7.6) is also valid in the Russo/Vallois [25, 26] framework of stochastic integration and with their interpretation of the brackets \([ { \vartheta}_{i} , \mu_{j} ] \), whenever \(G\) is of class \(C^{1}\) and the continuous semimartingale \(\mu\) is “reversible” in the sense that \(\mu(T-t),\, 0 \le t \le T\), is a continuous semimartingale in its own filtration for every \(T\in(0, \infty)\); see [25, Theorem 2.3].

Proof of Theorem 3.8

First, we note that (2.4) is equivalent to the condition

Hence, the sufficiency of conditions (i), (ii) here is a simple corollary of the sufficiency of conditions (i), (ii) in Theorem 3.7, with \(G\) replaced by \({\boldsymbol {G}}\) and applied to the \(\varDelta ^{d}\)-valued process \(\boldsymbol{\mu}= \mathfrak{R}({ \mu} )\).

It remains to be argued that if the function \(\boldsymbol {G}\) is regular for the vector process \({\boldsymbol{\mu}} \), then \(G = {\boldsymbol {G}} \circ\mathfrak {R}\) is regular for the vector process \(\mu \). To this end, we generalize the arguments in Example 3.9. First, in a manner similar to (3.10), we recall from [2, Theorem 2.3] the existence of measurable functions \(h_{\ell}: \varDelta ^{d} \rightarrow[0,1]\) and of finite-variation processes \(B_{\ell}\) with \(B_{\ell}(0) = 0\) such that we have for all \(\ell= 1, \dots, d\) the representation

We obtain

where \(D \boldsymbol {G}\) and \(\varGamma^{\boldsymbol {G}} \) are as in Definition 3.1; in particular, \(\varGamma^{\boldsymbol {G}} \) is a finite-variation process. By analogy with (3.11) and (3.12), we now define the process

and the functions

and note \({\boldsymbol {G}} ( \boldsymbol{\mu}(0)) = G(\mu(0))\). This yields (3.4), thus also the regularity of \(G\) for \(\mu\). □

Proof of Proposition 4.5

Theorem 3.7 shows that \(G\) is a Lyapunov function; its proof also reveals that \(\mathit{DG}\) can be chosen to be a supergradient of \(G\) if (i) or (ii) holds. If neither (i) nor (ii) holds but (iii) does, we may choose \(\mathit{DG}\) to be a supergradient of \(G\) on \(\varDelta ^{d}_{+}\). In that case, for \(x \in \varDelta ^{d} \setminus \varDelta ^{d}_{+}\) and \(i = 1, \dots, d\), we define \(D_{i} G(x)\) as follows: if \(x_{i} \in(0,1)\), we declare \(D_{i} G(x)\) to be the corresponding component of a supergradient of a concave function \(\widetilde{G}\) with domain \(\varDelta ^{m}\) for some \(m< d\), with which \(G\) is identified on the face containing \(x\); and if \(x_{i} \in\{0,1\}\), we declare \(D_{i} G(x)\) to be \(\sum_{j: x_{j} \in(0,1)} x_{j} D_{j} G(x)\).

With this choice of \(\mathit{DG}\) fixed, (4.5) and the fact that \(\varGamma^{G} \) is nondecreasing give

Hence it suffices to show, for every given \(i = 1, \dots, d\) and \(x \in{ \varDelta }^{d}\), the inequality

We first consider the case \(x_{i} \in(0,1)\), and let \(\mathfrak {e}^{(i)}\in \varDelta ^{d}\) denote the \(i\)th unit vector of \(\mathbb{R}^{d}\). Observe that if \(x_{j} = 0\) for some \(j = 1, \dots, d\), then the \(j\)th component of any linear combination of \(x\) and \(\mathfrak {e}^{(i)}\) is also zero. This fact, the nonnegativity of \(G\) and the property of supergradients given in (7.1) lead to

for all \(u \in(0,1]\). Letting \(u \downarrow0\) yields (7.7) if \(x_{i} \in(0,1)\).

If \(x_{i} = 1\), then \(x_{j} = 0\) for all \(j = 1, \dots, d\) with \(j \neq i\); the left-hand side of (7.7) is then equal to \(G(x)\), which is nonnegative by assumption.

Finally, we consider the case \(x_{i} = 0\). Under condition (i), no argument is required, since \(\mu_{i} > 0\) holds with probability one. Under condition (ii), the same computations as in (7.8) and (7.9) hold. Under condition (iii), we observe again that the left-hand side of (7.7) equals \(G(x)\), by the definition of \(\mathit{DG}\). As above, the nonnegativity of \(G\) yields (7.7). □
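Inequality (7.7) is not displayed in this excerpt. The case analysis above (its left-hand side reduces to \(G(x)\) when \(x_{i} = 1\), and under condition (iii) by the definition of \(\mathit{DG}\)) is consistent with reading it as \(G(x) + D_{i}G(x) - \sum_{j} x_{j} D_{j}G(x) \ge 0\); multiplied by \(x_{i}/G(x)\), this is the nonnegativity of Fernholz's multiplicatively generated weights \(\pi_{i} = x_{i}\bigl(1 + (D_{i}G(x) - \sum_{j} x_{j} D_{j}G(x))/G(x)\bigr)\). Under that assumed reading, the Python sketch below checks the inequality numerically for two hypothetical nonnegative concave choices: \(G(x) = 1 - \sum_{j} x_{j}^{2}\), and the geometric mean, which is known to generate the equally weighted portfolio. All names are illustrative.

```python
import math
import random


def generated_weights(x, G, DG):
    # Multiplicatively generated weights in the sense of Fernholz:
    # pi_i = x_i * (G(x) + D_i G(x) - sum_j x_j D_j G(x)) / G(x).
    g, dg = G(x), DG(x)
    inner = sum(xj * dj for xj, dj in zip(x, dg))
    return [xi * (g + di - inner) / g for xi, di in zip(x, dg)]


def lhs_77(x, i, G, DG):
    # Assumed form of the left-hand side of (7.7).
    dg = DG(x)
    return G(x) + dg[i] - sum(xj * dj for xj, dj in zip(x, dg))


# Hypothetical example 1: G(x) = 1 - sum_j x_j^2, nonnegative and concave on the simplex.
def G_quad(x):
    return 1.0 - sum(xj * xj for xj in x)


def DG_quad(x):
    return [-2.0 * xj for xj in x]


# Hypothetical example 2: the geometric mean (interior points only).
def G_geo(x):
    return math.prod(x) ** (1.0 / len(x))


def DG_geo(x):
    g = G_geo(x)
    return [g / (len(x) * xj) for xj in x]


def random_interior_point(rng, d):
    w = [rng.random() + 1e-3 for _ in range(d)]
    s = sum(w)
    return [wi / s for wi in w]


rng = random.Random(1)
d = 5
points = [random_interior_point(rng, d) for _ in range(1000)]

# Smallest value of the (7.7) expression over all sampled points and indices.
min_lhs = min(lhs_77(x, i, G_quad, DG_quad) for x in points for i in range(d))
quad_weights = [generated_weights(x, G_quad, DG_quad) for x in points]
geo_weights = [generated_weights(x, G_geo, DG_geo) for x in points]

# At a vertex e^(i), the expression reduces to G(e^(i)), as in the proof.
vertex = [1.0, 0.0, 0.0, 0.0, 0.0]
vertex_lhs = lhs_77(vertex, 0, G_quad, DG_quad)
print(min_lhs > 0, vertex_lhs == G_quad(vertex))  # True True
```

For the quadratic example the expression equals \((1 - x_{i})^{2} + \sum_{j \neq i} x_{j}^{2}\), so its nonnegativity is also visible by hand.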

7.2 Two counterexamples

Example 7.3

A condition such as the existence of a deflator in Theorem 3.7(iii) is needed for the result to hold. Even for a one-dimensional semimartingale \(X \) taking values in the unit interval \([0,1]\) and absorbed when it hits one of its endpoints, and with a concave function \(G: [0,1] \to[0,1]\), the process \(G(X )\) need not be a semimartingale.

For example, let \(X\) be a deterministic continuous semimartingale with \(X(0) = 1\) and \(X(t) = \lim_{s \uparrow1} X(s) = 0\) for all \(t \geq 1\), constructed as follows. Let \(a_{n}\) be the smallest odd integer in the interval \([\sqrt{n}, 3 \sqrt{n})\), for all \(n \in \mathbb {N}\). On \([1-1/n, 1-1/(n+1)]\), let \(X \) have exactly \(a_{n}\) oscillations between \(1/n\) and \(1/(n+1)\), for each \(n \in \mathbb {N}\). In particular, \(X(1-1/n) = 1/n\) and \(X(t) \in[1/(n+1), 1/n]\) for all \(t \in[1-1/n,1 - 1/(n+1)]\), for each \(n \in \mathbb {N}\). Then \(X\) is clearly continuous and takes values in the compact interval \([0,1]\). Since the first variation of \(X \) is exactly

\[ \sum_{n \in \mathbb {N}} a_{n} \Big( \frac{1}{n} - \frac{1}{n+1} \Big) = \sum_{n \in \mathbb {N}} \frac{a_{n}}{n(n+1)} \le \sum_{n \in \mathbb {N}} \frac{3}{n^{3/2}} < \infty, \]

the process \(X \) is indeed a continuous, deterministic finite-variation semimartingale.

Now consider the concave and bounded function \(\widehat{G}: [0,1] \rightarrow[0,1]\) with \(\widehat{G}( x ) := \sqrt{x} \). Then the first variation of the process \(\widehat{G}(X ) \) is exactly

\[ \sum_{n \in \mathbb {N}} a_{n} \Big( \frac{1}{\sqrt{n}} - \frac{1}{\sqrt{n+1}} \Big) \ge \sum_{n \in \mathbb {N}} \sqrt{n} \Big( \frac{1}{\sqrt{n}} - \frac{1}{\sqrt{n+1}} \Big) \ge \kappa \sum_{n \in \mathbb {N}} \frac{1}{n} = \infty \]

for some \(\kappa> 0\), where the last inequality follows from l’Hôpital’s rule. Thus \(\widehat{G}(X )\) is deterministic but of infinite variation, and hence not a semimartingale. Consequently, without further assumptions, a continuous concave function on the convex set \([0,1]\), applied to a continuous semimartingale with values in \([0,1]\), need not produce a semimartingale.
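The two variation sums in this example can be evaluated numerically. The Python sketch below reads each of the \(a_{n}\) "oscillations" on \([1-1/n, 1-1/(n+1)]\) as one monotone move between \(1/n\) and \(1/(n+1)\), with \(a_{n}\) odd so that \(X\) ends at \(1/(n+1)\); this reading is our assumption. Partial sums of the variation of \(X\) stay bounded, while those of \(\sqrt{X}\) keep growing, roughly like a multiple of \(\log N\).

```python
import math


def a(n):
    # Smallest odd integer in [sqrt(n), 3 sqrt(n)).
    r = math.isqrt(n)
    k = r if r * r == n else r + 1  # k = ceil(sqrt(n))
    return k if k % 2 == 1 else k + 1


def variation_X(N):
    # First variation of X on [0, 1 - 1/(N+1)]:
    # a_n monotone moves of size 1/n - 1/(n+1) on each interval.
    return sum(a(n) * (1.0 / n - 1.0 / (n + 1)) for n in range(1, N + 1))


def variation_sqrt_X(N):
    # Same path composed with G_hat(x) = sqrt(x):
    # each move now has size 1/sqrt(n) - 1/sqrt(n+1).
    return sum(a(n) * (1.0 / math.sqrt(n) - 1.0 / math.sqrt(n + 1))
               for n in range(1, N + 1))


for N in (10**3, 10**4, 10**5):
    print(N, round(variation_X(N), 4), round(variation_sqrt_X(N), 4))
```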

To put this example in the context of Theorem 3.7, just set \(d=2\), \(\mu_{1} := X \) and \(\mu_{2} := 1- \mu_{1} \). Then there exists no deflator for the process \(\mu=(\mu_{1} , \mu_{2} )\), and the concave and continuous function \(G(x_{1}, x_{2}) := \sqrt{x_{1}}\), \((x_{1}, x_{2}) \in \varDelta ^{2}\), is not regular for the process \(\mu\).

Example 7.4

We now modify Example 7.3 to obtain a setup in which a deflator for the vector process \(\mu \) exists, the function \(\boldsymbol {G}: \mathbb {W}^{d} \rightarrow[0,1]\) is continuous and concave, but \(\boldsymbol {G}\) is not regular for \(\boldsymbol{\mu}= \mathfrak {R}(\mu)\) in the notation of (3.7) and (3.9), and neither is \(G = {\boldsymbol {G}} \circ\mathfrak {R}\) regular for the process \(\mu\).

To this end, set \(d = 2\) and let \(B \) denote a Brownian motion starting at \(B(0)=1\), and stopped when hitting 0 or 2. We set \(\mu_{1} := B /2\) and \(\mu_{2} := 1-B /2 = 1 - \mu_{1} \). Since \(\mu_{1} \) and \(\mu_{2} \) are martingales, there exists a deflator for the vector process \(\mu\); indeed, \(Z \equiv1\) will serve as one. Next, consider the function \(\boldsymbol {G}(x_{1}, x_{2}) := \sqrt{ x_{1} - x_{2} \,}\) for all \((x_{1},x_{2}) \in\mathbb {W}^{2} = \mathrm{supp\,}( \boldsymbol{\mu})\). Clearly, \(\boldsymbol {G}\) is concave and continuous on \(\mathbb {W}^{2}\). However, by virtue of Lemma 7.5 below, the process \(G( \mu ) = \boldsymbol {G}(\boldsymbol{\mu}) = \sqrt{|1-B |}\) is not a semimartingale; thus, \(\boldsymbol {G}\) is not regular for \(\boldsymbol {\mu}\), and neither is \(G\) regular for \(\mu\).
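A quick numerical check of the identity \(G(\mu) = \boldsymbol{G}(\boldsymbol{\mu}) = \sqrt{|1-B|}\) used above, assuming that the ranking map \(\mathfrak{R}\) of (3.7) and (3.9) sorts the weights in decreasing order, so that \(\mathbb{W}^{2} = \{x \in \varDelta^{2}: x_{1} \ge x_{2}\}\). The function names are hypothetical. The sketch also checks midpoint concavity of \(\boldsymbol{G}\) on \(\mathbb{W}^{2}\); neither check says anything about the semimartingale property, which is the content of Lemma 7.5.

```python
import math
import random


def rank(mu):
    # Assumed ranking map: market weights sorted in decreasing order.
    return sorted(mu, reverse=True)


def G_bold(x):
    # G(x1, x2) = sqrt(x1 - x2) on W^2 = {(x1, x2) in the simplex: x1 >= x2}.
    return math.sqrt(x[0] - x[1])


def G_of_mu(b):
    # mu_1 = b/2 and mu_2 = 1 - b/2 for a value b in [0, 2] of the stopped Brownian motion.
    return G_bold(rank([b / 2.0, 1.0 - b / 2.0]))


grid = [k / 100.0 for k in range(201)]
max_err = max(abs(G_of_mu(b) - math.sqrt(abs(1.0 - b))) for b in grid)

# Midpoint concavity of G_bold on W^2 (W^2 is convex, so midpoints stay ranked).
rng = random.Random(7)


def random_ranked_point():
    u = rng.random()
    return rank([u, 1.0 - u])


worst = 0.0
for _ in range(1000):
    x, y = random_ranked_point(), random_ranked_point()
    mid = [(p + q) / 2.0 for p, q in zip(x, y)]
    worst = min(worst, G_bold(mid) - (G_bold(x) + G_bold(y)) / 2.0)
print(max_err, worst)
```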

Lemma 7.5

Let \(W \) denote a Brownian motion starting at zero and \(\tau\) a strictly positive stopping time. Then the process \(\sqrt{|W(\cdot \wedge\tau)|\,}\) is not a semimartingale.

Proof

Formally at least, the conclusion follows from the results in [5], since, of course, the function \(f: \mathbb {R}\ni x \mapsto\sqrt {|x|}\) is not the difference of two convex functions.

For the sake of completeness, we provide here a direct argument. Note that the quadratic variation of \(2 f(W(\cdot\wedge\tau))\) can be bounded from below by the quadratic variation of the semimartingales

From Itô’s formula, their quadratic variation is \(\int_{0}^{\cdot \wedge\tau} \mathbf {1}_{\{|W(t)| > \varepsilon\}} (1/|W(t)| )\,\mathrm {d} t\), for each \(\varepsilon>0\). Thus, the quadratic variation of \(2 f(W(\cdot \wedge\tau))\) is greater than or equal to \(\int_{0}^{\cdot\wedge\tau} \mathbf {1}_{\{W(t) \neq0\}} ( 1/|W(t)| )\,\mathrm {d} t\). An application of the occupation time formula, in conjunction with the continuity of the local time for \(W\), then shows that \(f(W(\cdot\wedge\tau))\) has infinite quadratic variation and thus cannot be a semimartingale. □
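The last step can be spelled out as follows. This is a sketch in notation of our own: \(L^{a}(t)\) denotes the jointly continuous local time of \(W\) at level \(a\) and time \(t\), normalized so that the occupation time formula reads \(\int_{0}^{t} g(W(s))\,\mathrm{d}s = \int_{\mathbb{R}} g(a) L^{a}(t)\,\mathrm{d}a\). For \(T > 0\) and \(0 < \varepsilon < \delta\),

\[
\int_{0}^{T\wedge\tau} \frac{\mathbf{1}_{\{|W(t)|>\varepsilon\}}}{|W(t)|}\,\mathrm{d}t
= \int_{\{|a|>\varepsilon\}} \frac{L^{a}(T\wedge\tau)}{|a|}\,\mathrm{d}a
\;\ge\; \Big(\inf_{|a|\le\delta} L^{a}(T\wedge\tau)\Big) \int_{\{\varepsilon<|a|\le\delta\}} \frac{\mathrm{d}a}{|a|}
= 2\log\Big(\frac{\delta}{\varepsilon}\Big) \inf_{|a|\le\delta} L^{a}(T\wedge\tau).
\]

Since \(\tau > 0\) and \(W(0) = 0\), we have \(L^{0}(T\wedge\tau) > 0\) almost surely, so by continuity in \(a\) the infimum is strictly positive for some (random) \(\delta > 0\). Letting \(\varepsilon \downarrow 0\) and using monotone convergence on the left-hand side shows that \(\int_{0}^{T\wedge\tau} \mathbf{1}_{\{W(t)\neq 0\}}(1/|W(t)|)\,\mathrm{d}t = \infty\) almost surely.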

Results in a similar vein appear in [5], especially Theorems 5.8 and 5.9, as well as in [20].

8 Conclusion

Fernholz [7–9] provided a systematic approach for generating trading strategies that can be implemented without the need for statistical estimation, and whose performance in a frictionless market can be guaranteed under suitable, weak assumptions on the market’s volatility structure. The present paper takes a systematic approach to functional generation, and makes the following three contributions.

1. Introduces an alternative, “additive” approach to the functional generation of trading strategies, and compares it to the “multiplicative” functional generation of E.R. Fernholz. Given a sufficiently large time horizon \(T_{*}>0\) and suitable conditions on the volatility structure of the market, the multiplicative version yields, for each \(T>T_{*}\), a portfolio that strongly outperforms the market on \([0,T]\); this portfolio, however, depends on the length \(T\) of the time horizon. By contrast, the additive version yields a single trading strategy that strongly outperforms the market over every horizon \([0,T]\) with \(T \geq T_{*}\).

2. Extends the class of functions that generate trading strategies. The paper introduces the notion of a regular function; such a function can generate a trading strategy. Modulo necessary technical conditions on boundary behavior, concave functions are shown to be regular (in fact, Lyapunov functions, in the sense also introduced in the present work). This weakens the assumption of twice continuous differentiability normally used in the extant work on this subject, and provides a unified framework for standard and rank-based generation, which had been a long-standing open issue.

3. Weakens the assumptions on the market model. Functional generation is shown to work in markets where asset prices are continuous semimartingales that may even devalue completely. Moreover, major technical assumptions in rank-based generation are removed; for example, it is no longer necessary to exclude models in which the set of times at which any two given asset prices coincide has strictly positive Lebesgue measure.

References

Aboulaïch, R., Stricker, C.: Fonctions convexes et semimartingales. Stochastics 8, 291–296 (1983)

Banner, A.D., Ghomrasni, R.: Local times of ranked continuous semimartingales. Stoch. Process. Appl. 118, 1244–1253 (2008)

Bouleau, N.: Semi-martingales à valeurs \({\mathbf {R}}^{d}\) et fonctions convexes. C. R. Acad. Sci. Paris Sér. I Math. 292, 87–90 (1981)

Bouleau, N.: Formules de changement de variables. Ann. Inst. Henri Poincaré Probab. Stat. 20, 133–145 (1984)

Çinlar, E., Jacod, J., Protter, P., Sharpe, M.J.: Semimartingales and Markov processes. Z. Wahrscheinlichkeitstheor. Verw. Geb. 54, 161–219 (1980)

Dellacherie, C., Meyer, P.A.: Probabilities and Potential B. Theory of Martingales. North-Holland Mathematics Studies, vol. 72. North-Holland Publishing Co., Amsterdam (1982)

Fernholz, E.R.: Stochastic Portfolio Theory. Applications of Mathematics (New York), vol. 48. Springer, New York (2002)

Fernholz, R.: Portfolio generating functions. In: Avellaneda, M. (ed.) Quantitative Analysis in Financial Markets, pp. 344–367. World Scientific, Singapore (1999)

Fernholz, R.: Equity portfolios generated by functions of ranked market weights. Finance Stoch. 5, 469–486 (2001)

Fernholz, R., Karatzas, I.: Relative arbitrage in volatility-stabilized markets. Ann. Finance 1, 149–177 (2005)

Fernholz, R., Karatzas, I., Ruf, J.: Volatility and arbitrage. Ann. Appl. Probab. (2016), forthcoming. Available online at https://arxiv.org/abs/1608.06121