Abstract

Given a graph G where each node is associated with a set of attributes, attributed network embedding (ANE) maps each node \(v \in G\) to a compact vector \(X_v\), which can be used in downstream machine learning tasks. Ideally, \(X_v\) should capture node v’s affinity to each attribute, which considers not only v’s own attribute associations, but also those of its connected nodes along edges in G. Obtaining high-utility embeddings that enable accurate predictions is already challenging; scaling effective ANE computation to massive graphs with millions of nodes makes the problem considerably harder. Existing solutions largely fail on such graphs, leading to prohibitive costs, low-quality embeddings, or both. This paper proposes \(\texttt {PANE}\), an effective and scalable approach to ANE computation for massive graphs that achieves state-of-the-art result quality on multiple benchmark datasets, measured by the accuracy of three common prediction tasks: attribute inference, link prediction, and node classification. \(\texttt {PANE}\) obtains high scalability and effectiveness through three main algorithmic designs. First, it formulates the learning objective based on a novel random walk model for attributed networks. The resulting optimization task is still challenging on large graphs. Second, \(\texttt {PANE}\) includes a highly efficient solver for this optimization problem, whose key module is a carefully designed initialization of the embeddings that drastically reduces the number of iterations required to converge. Finally, \(\texttt {PANE}\) utilizes multi-core CPUs through non-trivial parallelization of the solver, which achieves scalability while retaining the high quality of the resulting embeddings. The performance of \(\texttt {PANE}\) depends on the number of attributes in the input network. 
To handle large networks with numerous attributes, we further extend \(\texttt {PANE}\) to \(\texttt{PANE}^{++}\), which employs an effective attribute clustering technique. Extensive experiments, comparing 10 existing approaches on 8 real datasets, demonstrate that \(\texttt {PANE}\) and \(\texttt{PANE}^{++}\) consistently outperform all existing methods in terms of result quality, while being orders of magnitude faster.
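The abstract attributes much of the solver's speed to a carefully designed initialization. The sketch below illustrates the general idea on a deliberately simplified problem; it is not PANE's objective or solver. It assumes a single hypothetical node-attribute affinity matrix `F` (n nodes by d attributes) and the plain objective \(\|XY^\top - F\|_F^2\). Initializing \(X\) and \(Y\) from a truncated SVD of `F` already gives the best rank-k fit (Eckart–Young theorem), so an iterative refinement started there has essentially nothing left to do, whereas a random start needs many iterations to approach the same loss. Plain gradient descent stands in for the iterative solver here purely for brevity; the function names, `k`, `lr`, and step counts are hypothetical.

```python
import numpy as np

def svd_init(F, k):
    """Rank-k initialization so that X @ Y.T is the best rank-k approximation of F."""
    U, s, Vt = np.linalg.svd(F, full_matrices=False)
    root = np.sqrt(s[:k])
    X = U[:, :k] * root       # n x k "node" factors
    Y = Vt[:k, :].T * root    # d x k "attribute" factors
    return X, Y

def fit_loss(F, X, Y):
    """Squared Frobenius error of the factorization."""
    return float(np.linalg.norm(X @ Y.T - F) ** 2)

def refine(F, X, Y, steps, lr=0.005):
    """Plain gradient descent on ||X Y^T - F||_F^2 (a stand-in, not PANE's solver)."""
    for _ in range(steps):
        R = X @ Y.T - F
        # Simultaneous update: both gradients use the old X and Y.
        X, Y = X - lr * 2 * R @ Y, Y - lr * 2 * R.T @ X
    return X, Y

rng = np.random.default_rng(0)
F = rng.random((50, 20))   # hypothetical affinities: 50 nodes, 20 attributes
k = 5
X_svd, Y_svd = svd_init(F, k)
X_rnd, Y_rnd = refine(F, 0.1 * rng.standard_normal((50, k)),
                      0.1 * rng.standard_normal((20, k)), steps=100)
print(fit_loss(F, X_svd, Y_svd), fit_loss(F, X_rnd, Y_rnd))
```

Because the SVD start is already optimal for this simplified objective, the printed loss for the SVD initialization cannot exceed the loss reached from the random start. In a richer objective (for example, several affinity matrices sharing factors) the SVD start is only a good starting point rather than the optimum.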


Notes

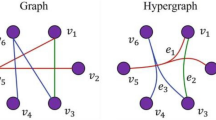

In the degenerate case where \(v_l\) is not associated with any attribute (e.g., \(v_1\) in Fig. 1), we simply restart the random walk from the source node \(v_i\) and repeat the process.
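The restart rule in this note can be illustrated with a small Monte Carlo simulation. This is a sketch under assumptions of our own, not the paper's exact model: the walk starts at a source node `src`, stops at each step with a hypothetical probability `alpha` and otherwise moves to a uniformly random out-neighbour (also stopping at a node with no out-neighbours); at the end node it picks an attribute with probability proportional to a hypothetical association weight; if the end node has no attributes, the walk restarts from `src`, as the note specifies. Counting the attributes returned by many walks gives an empirical estimate of the source node's affinity to each attribute. The graph, weights, and function names are illustrative only.

```python
import random
from collections import Counter

def attributed_walk(adj, attrs, src, alpha, rng, max_restarts=1000):
    """Simulate one walk from src and return the attribute it selects (or None)."""
    for _ in range(max_restarts):
        v = src
        # Continue with probability 1 - alpha; otherwise stop at the current node.
        while rng.random() >= alpha and adj[v]:
            v = rng.choice(adj[v])
        if attrs[v]:
            names = list(attrs[v])
            weights = [attrs[v][a] for a in names]
            # Pick one attribute of the end node, proportionally to its weight.
            return rng.choices(names, weights=weights)[0]
        # Degenerate case: the end node has no attributes, so restart from src.
    return None  # guard for graphs where no attributed node is reachable

def estimate_affinity(adj, attrs, src, alpha=0.15, num_walks=10_000, seed=0):
    """Fraction of walks from src that end by selecting each attribute."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(num_walks):
        attr = attributed_walk(adj, attrs, src, alpha, rng)
        if attr is not None:
            counts[attr] += 1
    total = sum(counts.values())
    return {a: c / total for a, c in counts.items()} if total else {}

# Hypothetical toy graph: node 0 has no attributes, so walks ending there restart.
adj = {0: [1], 1: [0, 2], 2: [1]}
attrs = {0: {}, 1: {"a": 1.0}, 2: {"b": 2.0}}
print(estimate_affinity(adj, attrs, src=0))
```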

The pointwise mutual information (PMI) of two events quantifies how much more or less likely they are to co-occur than they would be if they were completely independent, given their individual probabilities.
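Concretely, \(\mathrm{PMI}(x, y) = \log \frac{p(x, y)}{p(x)\,p(y)}\): it is zero when the events are independent, positive when they co-occur more often than independence predicts, and negative when they co-occur less often. A minimal sketch follows; it uses base-2 logarithms (a convention choice; the base only rescales the value), estimates the probabilities from hypothetical co-occurrence counts, and returns negative infinity for pairs that never co-occur.

```python
import math

def pmi(p_xy, p_x, p_y):
    """Pointwise mutual information log2(p(x, y) / (p(x) * p(y)))."""
    if p_xy == 0:
        return float("-inf")  # the pair never co-occurs
    return math.log2(p_xy / (p_x * p_y))

def pmi_from_counts(pair_counts, x, y):
    """Estimate PMI(x, y) from a dict mapping (x, y) pairs to co-occurrence counts."""
    total = sum(pair_counts.values())
    p_xy = pair_counts.get((x, y), 0) / total
    p_x = sum(c for (a, _), c in pair_counts.items() if a == x) / total
    p_y = sum(c for (_, b), c in pair_counts.items() if b == y) / total
    return pmi(p_xy, p_x, p_y)

print(pmi(0.25, 0.5, 0.5))  # independent events -> 0.0
print(pmi(0.5, 0.5, 0.5))   # co-occur twice as often as under independence -> 1.0
```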


Acknowledgements

This work is supported by the National University of Singapore SUG grant R-252-000-686-133, Singapore Government AcRF Tier-2 Grant MOE2019-T2-1-029, NPRP grant #NPRP10-0208-170408 from the Qatar National Research Fund (Qatar Foundation), and the financial support of Hong Kong RGC ECS (No. 25201221) and Start-up Fund (P0033898) by PolyU. The findings herein reflect the work, and are solely the responsibility of the authors.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Work was done when the first author was a doctoral student at NTU, Singapore, and a research fellow at NUS.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, R., Shi, J., Xiao, X. et al. PANE: scalable and effective attributed network embedding. The VLDB Journal 32, 1237–1262 (2023). https://doi.org/10.1007/s00778-023-00790-4


DOI: https://doi.org/10.1007/s00778-023-00790-4