Abstract

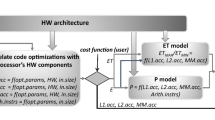

Today’s compilers have a plethora of optimizations-transformations to choose from, and the correct choice, order as well parameters of transformations have a significant/large impact on performance; choosing the correct order and parameters of optimizations has been a long standing problem in compilation research, which until now remains unsolved; the separate sub-problems optimization gives a different schedule/binary for each sub-problem and these schedules cannot coexist, as by refining one degrades the other. Researchers try to solve this problem by using iterative compilation techniques but the search space is so big that it cannot be searched even by using modern supercomputers. Moreover, compiler transformations do not take into account the hardware architecture details and data reuse in an efficient way. In this paper, a new iterative compilation methodology is presented which reduces the search space of six compiler transformations by addressing the above problems; the search space is reduced by many orders of magnitude and thus an efficient solution is now capable to be found. The transformations are the following: loop tiling (including the number of the levels of tiling), loop unroll, register allocation, scalar replacement, loop interchange and data array layouts. The search space is reduced (a) by addressing the aforementioned transformations together as one problem and not separately, (b) by taking into account the custom hardware architecture details (e.g., cache size and associativity) and algorithm characteristics (e.g., data reuse). The proposed methodology has been evaluated over iterative compilation and gcc/icc compilers, on both embedded and general purpose processors; it achieves significant performance gains at many orders of magnitude lower compilation time.

Similar content being viewed by others

References

Triantafyllis S, Vachharajani M, Vachharajani N, August DI (2003) Compiler optimization-space exploration. In: Proceedings of the international symposium on Code generation and optimization: feedback-directed and runtime optimization, CGO ’03. IEEE Computer Society, Washington, DC, pp 204–215. http://dl.acm.org/citation.cfm?id=776261.776284

Cooper KD, Subramanian D, Torczon L (2001) Adaptive optimizing compilers for the 21st Century. J Supercomput 23:2002

Kisuki T, Knijnenburg PMW, O’Boyle MFP, Bodin F, Wijshoff HAG (1999) A feasibility study in iterative compilation. In: Proceedings of the Second International Symposium on High Performance Computing, ISHPC ’99. Springer, London, UK, pp 121–132. http://dl.acm.org/citation.cfm?id=646347.690219

Kulkarni PA, Whalley DB, Tyson GS, Davidson JW (2009) Practical exhaustive optimization phase order exploration and evaluation. ACM Trans Archit Code Optim 6(1):1–36. doi:10.1145/1509864.1509865

Kulkarni P, Hines S, Hiser J, Whalley D, Davidson J, Jones D (2004) Fast searches for effective optimization phase sequences. SIGPLAN Not 39(6):171–182. doi:10.1145/996893.996863

Park E, Kulkarni S, Cavazos J (2011) An evaluation of different modeling techniques for iterative compilation. In: Proceedings of the 14th international conference on Compilers, architectures and synthesis for embedded systems, CASES ’11. ACM, New York, NY, pp 65–74. doi:10.1145/2038698.2038711

Monsifrot A, Bodin F, Quiniou R (2002) A machine learning approach to automatic production of compiler heuristics. In: Proceedings of the 10th International Conference on Artificial Intelligence: Methodology, Systems, and Applications, AIMSA ’02. Springer, London, pp 41–50. http://dl.acm.org/citation.cfm?id=646053.677574

Stephenson M, Amarasinghe S, Martin M, O’Reilly UM (2003) Meta optimization: improving compiler heuristics with machine learning. SIGPLAN Not 38(5):77–90. doi:10.1145/780822.781141

Tartara M, Crespi Reghizzi S (2013) Continuous learning of compiler heuristics. ACM Trans Archit Code Optim 9(4):46:1–46:25. doi:10.1145/2400682.2400705

Agakov F, Bonilla E, Cavazos J, Franke B, Fursin G, O’Boyle MFP, Thomson J, Toussaint M, Williams CKI (2006) Using machine learning to focus iterative optimization. In: Proceedings of the International Symposium on Code Generation and Optimization, CGO ’06. IEEE Computer Society, Washington, DC, pp 295–305. doi:10.1109/CGO.2006.37

Almagor L, Cooper KD, Grosul A, Harvey TJ, Reeves SW, Subramanian D, Torczon L, Waterman T (2004) Finding effective compilation sequences. SIGPLAN Not 39(7):231–239. doi:10.1145/998300.997196

Cooper KD, Schielke PJ, Subramanian D (1999) Optimizing for reduced code space using genetic algorithms. SIGPLAN Not 34(7):1–9. doi:10.1145/315253.314414

Cooper KD, Grosul A, Harvey TJ, Reeves S, Subramanian D, Torczon L, Waterman T (2005) ACME: adaptive compilation made efficient. SIGPLAN Not 40(7):69–77. doi:10.1145/1070891.1065921

Cooper KD, Grosul A, Harvey TJ, Reeves S, Subramanian D, Torczon L, Waterman T (2006) Exploring the structure of the space of compilation sequences using randomized search algorithms. J Supercomput 36(2):135–151. doi:10.1007/s11227-006-7954-5

Kulkarni PA, Whalley DB, Tyson GS (2007) Evaluating heuristic optimization phase order search algorithms. In: Proceedings of the International Symposium on Code Generation and Optimization, CGO ’07. IEEE Computer Society, Washington, DC, pp 157–169. doi:10.1109/CGO.2007.9

Chen C, Chame J, Hall M (2005) Combining models and guided empirical search to optimize for multiple levels of the memory hierarchy. In: Proceedings of the 2013 IEEE/ACM International Symposium on Code Generation and Optimization (CGO), pp 111–122. doi:10.1109/CGO.2005.10

Haneda M, Khnijnenburg PMW, Wijshoff HAG (2005) Automatic selection of compiler options using non-parametric inferential statistics. In: Proceedings of the 14th International Conference on Parallel Architectures and Compilation Techniques, PACT ’05. IEEE Computer Society, Washington, DC, pp 123–132. doi:10.1109/PACT.2005.9

Cavazos J, Fursin G, Agakov F, Bonilla E, O’Boyle MFP, Temam O (2007) Rapidly selecting good compiler optimizations using performance counters. In: Proceedings of the International Symposium on Code Generation and Optimization, CGO ’07. IEEE Computer Society, Washington, DC, pp 185–197. doi:10.1109/CGO.2007.32

de Mesmay F, Voronenko Y, Püschel M (2010) Offline library adaptation using automatically generated heuristics. In: International Parallel and Distributed Processing Symposium (IPDPS), pp 1–10

Dubach C, Jones TM, Bonilla EV, Fursin G, O’Boyle MFP (2009) Portable compiler optimisation across embedded programs and microarchitectures using machine learning. In: Proceedings of the 42Nd Annual IEEE/ACM International Symposium on Microarchitecture, MICRO 42. ACM, New York, NY, pp 78–88. doi:10.1145/1669112.1669124

Knijnenburg PMW, Kisuki T, Gallivan K, O’Boyle MFP (2004) The effect of cache models on iterative compilation for combined tiling and unrolling. J CCPE 16(2–3):247–270

Kim D, Renganarayanan L, Rostron D, Rajopadhye S, Strout MM (2007) Multi-level Tiling: M for the Price of One. In: Proceedings of the 2007 ACM/IEEE Conference on Supercomputing, SC ’07. ACM, New York, NY, pp 51:1–51:12. doi:10.1145/1362622.1362691

Renganarayanan L, Kim D, Rajopadhye S, Strout MM (2007) Parameterized tiled loops for free. SIGPLAN Not 42(6):405–414. doi:10.1145/1273442.1250780

Fursin G, O’Boyle MFP, Knijnenburg PMW (2002) Evaluating iterative compilation. In: Languages and Compilers for Parallel Computing, 15th Workshop, (LCPC), Revised Papers. College Park, MD, , pp 362–376

Leather H, Bonilla E, O’Boyle M (2009) Automatic feature generation for machine learning based optimizing compilation. In: Proceedings of the 7th Annual IEEE/ACM International Symposium on Code Generation and Optimization, CGO ’09. IEEE Computer Society, Washington, DC, pp 81–91. doi:10.1109/CGO.2009.21

Stephenson M, Amarasinghe S (2005) Predicting Unroll Factors Using Supervised Classification. In: Proceedings of the International Symposium on Code Generation and Optimization, CGO ’05. IEEE Computer Society, Washington, DC, pp 123–134. doi:10.1109/CGO.2005.29

Park E, Pouche LN, Cavazos J, Cohen A, Sadayappan P (2011) Predictive Modeling in a Polyhedral Optimization Space. In: Proceedings of the 9th Annual IEEE/ACM International Symposium on Code Generation and Optimization, CGO ’11. IEEE Computer Society, Washington, DC, pp 119–129. http://dl.acm.org/citation.cfm?id=2190025.2190059

Jantz MR, Kulkarni PA (2013) Exploiting phase inter-dependencies for faster iterative compiler optimization phase order searches. In: Proceedings of the 2013 International Conference on Compilers, Architectures and Synthesis for Embedded Systems, CASES ’13. IEEE Press, Piscataway, NJ, pp 7:1–7:10. http://dl.acm.org/citation.cfm?id=2555729.2555736

Kulkarni S, Cavazos J (2012) Mitigating the compiler optimization phase-ordering problem using machine learning. SIGPLAN Not 47(10):147–162. doi:10.1145/2398857.2384628

Parello D, Temam O, Cohen A, Verdun JM (2004) Towards a Systematic, Pragmatic and Architecture-Aware Program Optimization Process for Complex Processors. In: Proceedings of the 2004 ACM/IEEE Conference on Supercomputing, SC ’04. IEEE Computer Society, Washington, DC, p 15. doi:10.1109/SC.2004.61

Rong H, Douillet A, Gao GR (2005) Register allocation for software pipelined multi-dimensional loops. SIGPLAN Not 40(6):154–167. doi:10.1145/1064978.1065030

Hack S, Goos G (2006) Optimal register allocation for SSA-form Programs in polynomial time. Inf Process Lett 98(4):150–155. doi:10.1016/j.ipl.2006.01.008

Nagarakatte SG, Govindarajan R (2007) Register Allocation and Optimal Spill Code Scheduling in Software Pipelined Loops Using 0-1 Integer Linear Programming Formulation. In: Proceedings of the 16th International Conference on Compiler Construction, CC’07. Springer, Berlin, Heidelberg, pp 126–140. http://dl.acm.org/citation.cfm?id=1759937.1759949

Sarkar V, Barik R (2007) Extended Linear Scan: An Alternate Foundation for Global Register Allocation. Compiler Construction, 16th International Conference. Proceedings. Braga, Portugal, pp 141–155

Smith MD, Ramsey N, Holloway G (2004) A generalized algorithm for graph-coloring register allocation. SIGPLAN Not 39(6):277–288. doi:10.1145/996893.996875

Wang L, Yang X, Xue J, Deng Y, Yan X, Tang T, Nguyen QH (2008) Optimizing scientific application loops on stream processors. SIGPLAN Not 43(7):161–170. doi:10.1145/1379023.1375679

Baradaran N, Diniz PC A register allocation algorithm in the presence of scalar replacement for fine-grain configurable architectures. arXiv:0710.4702

Lozano RC, Schulte C Survey on Combinatorial Register Allocation and Instruction Scheduling. arXiv:1409.7628

Sherwood T, Calder B, Emer J (1999) Reducing cache misses using hardware and software page placement. In: Proceedings of the 13th international conference on Supercomputing, ICS ’99. ACM, New York, NY, pp 155–164. doi:10.1145/305138.305189

Cacaval C, Padua DA (2003) Estimating cache misses and locality using stack distances. In: Proceedings of the 17th annual international conference on Supercomputing, ICS ’03. ACM, New York, NY, pp 150–159. doi:10.1145/782814.782836

Ghosh S, Martonosi M, Malik S (1997) Cache miss equations: an analytical representation of cache misses. In: Proceedings of the 11th international conference on Supercomputing, ICS ’97. ACM, New York, NY, pp 317–324. doi:10.1145/263580.263657

Song F, Moore S, Dongarra J (2007) L2 cache modeling for scientific applications on chip multi-processors. In: Parallel Processing, International Conference on 51. doi:10.1109/ICPP.2007.52

Hankins RA, Patel JM (2003) Data morphing: an adaptive, cache-conscious storage technique. In: Proceedings of the 29th international conference on Very large data bases—vol 29, VLDB ’2003, VLDB Endowment, 2003, pp 417–428. http://dl.acm.org/citation.cfm?id=1315451.1315488

Franz M, Kistler T (1998) Splitting data objects to increase cache utilization. Department of Information and Computer Science University of California, Tech. rep

Rubin S, Bodík R, Chilimbi T (2002) An efficient profile-analysis framework for data-layout optimizations. SIGPLAN Not 37:140–153. doi:10.1145/565816.503287

Chang SK (2003) Data structures and algorithms, vol 13 of series on software engineering and knowledge engineering. World Scientific, Singapore

Kelefouras V, Kritikakou A, Goutis C (2015) A methodology for speeding up loop kernels by exploiting the software information and the memory architecture. Comput Lang Syst Struct 41:21–41. http://dblp.uni-trier.de/db/journals/cl/cl41.html

Whaley RC, Petitet A, Dongarra JJ (2001) Automated Empirical Optimization of Software and the ATLAS Project. Parallel Comput 27(1–2):3–35

Kelefouras V, Kritikakou A, Mporas I, Vasileios K (2016) A high-performance matrix-matrix multiplication methodology for CPU and gpu architectures. J Supercomput 72(3):804–844. doi:10.1007/s11227-015-1613-7

Kelefouras VI, Kritikakou A, Papadima E, Goutis CE (2015) A methodology for speeding up matrix vector multiplication for single/multi-core architectures. J Supercomput 71(7):2644–2667. http://dblp.uni-trier.de/db/journals/tjs/tjs71.html

O. S. University, Polybench/c benchmark suite (2012). http://web.cs.ucla.edu/~pouchet/software/polybench/

Kelefouras VI, Kritikakou A, Goutis C (2014) A methodology for speeding up edge and line detection algorithms focusing on memory architecture utilization. Supercomput Springer. doi:10.1007/s11227-013-1049-x

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kelefouras, V. A methodology pruning the search space of six compiler transformations by addressing them together as one problem and by exploiting the hardware architecture details. Computing 99, 865–888 (2017). https://doi.org/10.1007/s00607-016-0535-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00607-016-0535-4

Keywords

- Loop unroll

- Loop tiling

- Scalar replacement

- Register allocation

- Data reuse

- Cache

- Loop transformations

- Iterative compilation