Abstract

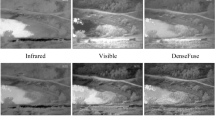

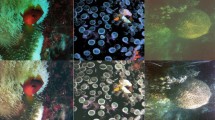

Infrared images are rich in energy information, which directly reflects the differences between objects and scenes. Visible images are rich in color information, which directly reflects the details of objects and scenes. To make full use of infrared energy information when transforming infrared images into visible images, this paper proposes a method called infrared-energy-to-color (IETC), based on Cycle-Consistent Generative Adversarial Networks (CycleGAN). We compare the proposed method with several state-of-the-art image transformation methods; the qualitative results show that the proposed model can generate visible images of ocean objects and scenes. In the quantitative evaluation, the proposed method also performs better than the others. In addition, experiments with classic object detection algorithms show that the proposed IETC method achieves more robust results.

Acknowledgements

This work was supported by the China Postdoctoral Science Foundation (2017M620884, 2019T120127).

About this article

Cite this article

Chen, C., Chao, X. Conversion of infrared ocean target images to visible images driven by energy information. Multimedia Systems 29, 2887–2898 (2023). https://doi.org/10.1007/s00530-021-00879-2

DOI: https://doi.org/10.1007/s00530-021-00879-2