Abstract

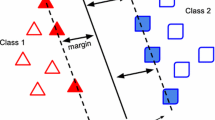

Hyperparameter optimization is vital for improving the prediction accuracy of support vector regression (SVR), as it is for all machine learning algorithms. This study introduces a new hybrid optimization algorithm, PSOGS, which combines two strong and widely used algorithms: particle swarm optimization (PSO) and grid search (GS). The hybrid algorithm was tested on five benchmark datasets. The speed and prediction accuracy of PSOGS-optimized SVR models (PSOGS-SVR) were compared with those of SVR models optimized by its constituent algorithms (PSO-SVR and GS-SVR) and by another hybrid optimization algorithm, PSOGSA, which combines PSO and the gravitational search algorithm (GSA). Prediction accuracy was evaluated in terms of root mean square error (RMSE) and mean absolute percentage error (MAPE). To ensure reliability, the results were obtained by performing 10-fold cross-validation over 30 runs. The results showed that PSOGS-SVR achieves prediction accuracy comparable to GS-SVR while running much faster, and that it gives better results in less execution time than PSO-SVR. PSOGS-SVR also outperforms PSOGSA-SVR in both prediction accuracy and execution time. This study therefore demonstrates that PSOGS is a fast, stable, efficient, and reliable algorithm for optimizing the hyperparameters of SVR.
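The abstract does not spell out how PSOGS couples its two constituent searches. Purely as an illustration, the sketch below assumes one plausible coupling: a global-best PSO explores a log2-scaled (C, gamma) box, and a small grid search then refines the region around the best particle. The function names (`pso`, `grid_refine`, `pso_then_grid`, `toy_cv_error`), the PSO coefficients (w = 0.7, c1 = c2 = 1.5), the refinement width and the toy objective are all hypothetical and are not the authors' PSOGS. In a real experiment the objective would return the 10-fold cross-validated RMSE of an SVR trained with C = 2**p[0] and gamma = 2**p[1] (with epsilon fixed or added as a third dimension); a quadratic stand-in is used here so the sketch runs with the standard library alone.

```python
import itertools
import random


def pso(objective, bounds, n_particles=20, n_iters=50, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise `objective` over a box with a basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random start positions inside the box; velocities start at zero.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(n_iters):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive (personal best) + social (global best) terms.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move the particle and clamp it back into the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def grid_refine(objective, center, bounds, half_width=0.5, steps=11):
    """Exhaustive grid search over a small box centred on `center`."""
    axes = []
    for (lo, hi), c in zip(bounds, center):
        a, b = max(lo, c - half_width), min(hi, c + half_width)
        axes.append([a + k * (b - a) / (steps - 1) for k in range(steps)])
    best, best_val = list(center), objective(center)
    for point in itertools.product(*axes):
        v = objective(list(point))
        if v < best_val:
            best, best_val = list(point), v
    return best, best_val


def pso_then_grid(objective, bounds, half_width=0.5, steps=11, **pso_kwargs):
    """Hypothetical hybrid: coarse PSO, then a fine grid around the PSO optimum."""
    coarse, _ = pso(objective, bounds, **pso_kwargs)
    return grid_refine(objective, coarse, bounds, half_width, steps)


# Hypothetical stand-in for a cross-validated RMSE surface over (log2 C, log2 gamma);
# a real run would train and score an SVR here instead.
def toy_cv_error(p):
    return (p[0] - 3.0) ** 2 + (p[1] + 5.0) ** 2 + 0.1


best, val = pso_then_grid(toy_cv_error, bounds=[(-5, 15), (-15, 3)])
print(best, val)
```

In this toy setting the hybrid spends roughly 1,100 objective evaluations (1,020 for PSO and 122 for the local grid), whereas a full-range grid at the same 0.1 spacing over the 20 × 18 box would need 201 × 181 = 36,381 evaluations. That gap is the kind of saving a PSO-then-grid design aims for when each evaluation is an expensive cross-validated SVR fit.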


Data Availability

The datasets analyzed during the current study are available in the UCI Machine Learning Repository, https://archive.ics.uci.edu.

Notes

MathWorks Inc. (2022), MATLAB Statistics and Machine Learning Toolbox User’s Guide, https://www.mathworks.com/help/stats/.

Mirjalili, S., Hybrid Particle Swarm Optimization and Gravitational Search Algorithm, https://www.mathworks.com/matlabcentral/fileexchange/35939-hybrid-particle-swarm-optimization-and-gravitational-search-algorithm-psogsa.

UCI, Machine Learning Repository, https://archive.ics.uci.edu/, 2022.


Funding

No funding was received for conducting this study.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Açıkkar, M., Altunkol, Y. A novel hybrid PSO- and GS-based hyperparameter optimization algorithm for support vector regression. Neural Comput & Applic 35, 19961–19977 (2023). https://doi.org/10.1007/s00521-023-08805-5


DOI: https://doi.org/10.1007/s00521-023-08805-5