Abstract

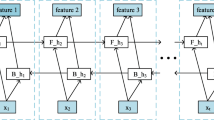

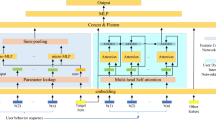

Efficiently extracting user interests from behavior sequences is key to improving the click-through rate (CTR), and learning sophisticated feature interactions is equally critical to maximizing CTR. However, most existing interest-extraction methods suffer from sequential dependence, which results in low training efficiency. Meanwhile, when exploring high-order feature interactions, existing methods fail to exploit information from all layers of the model. In this study, we propose an interest evolution network based on a transformer and a gated residual (TGRIEN). First, a transformer network supervised by an auxiliary loss function extracts users' interests from behavior sequences in parallel, which improves training efficiency. Second, a minimal gated unit with an attention forget gate detects the interests related to the target ad and captures how users' interests evolve. A gating mechanism is also employed in the residual module to construct a skip gated residual network, which captures richer and more effective feature interaction information. We evaluate TGRIEN on two real-world datasets. Experimental results demonstrate that our model significantly outperforms state-of-the-art baselines in both prediction performance and training efficiency.
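The abstract names a minimal gated unit (MGU) with an attention forget gate but does not give its equations. The following is a minimal sketch of one plausible reading, not the authors' implementation: it takes the standard MGU update and assumes the forget gate is scaled by a scalar attention score `a` in [0, 1] that measures how relevant the current behavior is to the target ad. The function names, parameter shapes, and the scaling rule itself are illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    """Return W @ v + b, where W is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def init_params(hidden, inputs, seed=0):
    """Hypothetical helper: small random weights, zero biases."""
    rng = random.Random(seed)

    def mat():
        return [[rng.uniform(-0.5, 0.5) for _ in range(hidden + inputs)]
                for _ in range(hidden)]

    return mat(), [0.0] * hidden, mat(), [0.0] * hidden


def mgu_attention_step(h_prev, x, a, params):
    """One MGU step with the forget gate scaled by attention score a."""
    W_f, b_f, W_h, b_h = params
    # Standard MGU forget gate computed from [h_prev, x].
    f = [sigmoid(z) for z in affine(W_f, h_prev + x, b_f)]
    # Assumption: attention rescales the gate, so irrelevant behaviors
    # (a close to 0) barely change the hidden state.
    f = [a * fi for fi in f]
    # Candidate state uses the gated previous state, as in the MGU.
    gated_h = [fi * hi for fi, hi in zip(f, h_prev)]
    cand = [math.tanh(z) for z in affine(W_h, gated_h + x, b_h)]
    # Interpolate between the previous state and the candidate.
    return [(1 - fi) * hi + fi * ci for fi, hi, ci in zip(f, h_prev, cand)]
```

Under this assumption, a behavior with attention score 0 leaves the interest state unchanged, while a score of 1 recovers the plain MGU update.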

Data availability

The data used in this article are available in the online supplementary material. Supplementary materials are available at http://jmcauley.ucsd.edu/data/amazon/ and https://grouplens.org/datasets/movielens/.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant 72062003.

Funding

This work was supported by the National Natural Science Foundation of China (Grant 72062003, Chaoyong Qin).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Qin, C., Xie, J., Jiang, Q. et al. A novel interest evolution network based on transformer and a gated residual for CTR prediction in display advertising. Neural Comput & Applic 35, 24665–24680 (2023). https://doi.org/10.1007/s00521-023-08349-8

DOI: https://doi.org/10.1007/s00521-023-08349-8