Abstract

The application of Deep Neural Networks (DNNs) to a broad variety of tasks demands methods for coping with the complex and opaque nature of these architectures. When a gold standard is available, performance assessment treats the DNN as a black box and computes standard metrics by comparing the predictions with the ground truth. A deeper understanding of performance requires going beyond such evaluation metrics to diagnose the model behavior and the prediction errors. This goal can be pursued in two complementary ways. On the one hand, model interpretation techniques “open the box” and assess the relationship between the input, the inner layers and the output, so as to identify the architecture modules most likely to cause the performance loss. On the other hand, black-box error diagnosis techniques study the correlation between the model response and some properties of the input not used for training, so as to identify the features of the inputs that make the model fail. Both approaches give hints on how to improve the architecture and/or the training process. This paper focuses on the application of DNNs to computer vision (CV) tasks and presents a survey of the tools that support the black-box performance diagnosis paradigm. It illustrates the features and gaps of the current proposals, discusses the relevant research directions and provides a brief overview of the diagnosis tools in sectors other than CV.
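As a minimal sketch of the black-box diagnosis idea described above, the following Python snippet breaks down classification accuracy by the value of an input property that was not used for training. The function name `accuracy_by_property`, the `lighting` property and the toy data are hypothetical and serve only as illustration; they are not taken from any of the surveyed tools.

```python
from collections import defaultdict


def accuracy_by_property(predictions, ground_truth, properties):
    """Return the overall accuracy and the accuracy per property value.

    The model is treated as a black box: only its predictions, the ground
    truth and one extra annotation per input sample are needed.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for pred, gt, prop in zip(predictions, ground_truth, properties):
        totals[prop] += 1
        hits[prop] += int(pred == gt)
    overall = sum(hits.values()) / sum(totals.values())
    per_value = {value: hits[value] / totals[value] for value in totals}
    return overall, per_value


# Toy data (hypothetical): class predictions, ground truth labels, and a
# "lighting" annotation that the model never saw during training.
predictions = ["cat", "dog", "cat", "dog", "cat", "dog"]
ground_truth = ["cat", "dog", "dog", "dog", "dog", "cat"]
lighting = ["day", "day", "night", "day", "night", "night"]

overall, per_value = accuracy_by_property(predictions, ground_truth, lighting)
print(overall)    # 0.5
print(per_value)  # {'day': 1.0, 'night': 0.0}
```

In this toy case the aggregate accuracy of 0.5 hides the fact that every error falls on night-time inputs, which is the kind of hint that a per-property breakdown is meant to surface.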

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Notes

The link to the code repository is navigable in the online version of the paper.

Abbreviations

- AD:

-

Action detection

- AI:

-

Artificial intelligence

- AP:

-

Average precision

- AUC:

-

Area under the curve

- CAM:

-

Class Activation Map

- CL:

-

Classification

- CV:

-

Computer vision

- DNN:

-

Deep Neural Network

- ET:

-

Error type

- FN:

-

False negative

- FP:

-

False positive

- GT:

-

Ground truth

- IoU:

-

Intersection over union

- IS:

-

Instance segmentation

- MAE:

-

Mean absolute error

- mAP:

-

Mean average precision

- ME:

-

Mean error

- ML:

-

Machine learning

- MSE:

-

Mean squared error

- NAB:

-

Numenta anomaly benchmark

- NLP:

-

Natural language processing

- OD:

-

Object detection

- OT:

-

Object tracking

- PE:

-

Pose estimation

- PR:

-

Precision–recall

- RMSE:

-

Root mean squared error

- ROC:

-

Receiver operating characteristic

- RS:

-

Recommender systems

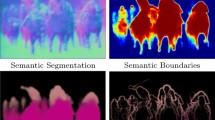

- SS:

-

Semantic segmentation

- TN:

-

True negative

- TP:

-

True positive

- TS:

-

Time series

- VRD:

-

Video relation detection

Acknowledgements

This work is partially supported by the project “PRECEPT - A novel decentralized edge-enabled PREsCriptivE and ProacTive framework for increased energy efficiency and well-being in residential buildings” funded by the EU H2020 Programme, Grant Agreement No. 958284.

Ethics declarations

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

About this article

Cite this article

Fraternali, P., Milani, F., Torres, R.N. et al. Black-box error diagnosis in Deep Neural Networks for computer vision: a survey of tools. Neural Comput & Applic 35, 3041–3062 (2023). https://doi.org/10.1007/s00521-022-08100-9

DOI: https://doi.org/10.1007/s00521-022-08100-9