Abstract

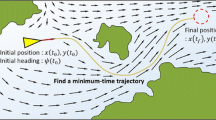

Path tracking and obstacle avoidance in a complex ocean environment are the basis of autonomous ocean vehicle voyages. This paper proposes a hybrid guidance and control method for sea-surface path tracking of the autonomous surface underwater vehicle, based on the carrot-chasing (CC) algorithm and deep reinforcement learning (DRL). First, the CC algorithm provides the reference heading angle; the DRL algorithm then combines this angle with information from the vehicle-borne sensors for decision and control. The vehicle learns its tracking capability by interacting with the surrounding environment, without prior knowledge, under a Markov decision process model comprising states, actions, and reward functions. Simulation experiments are carried out in high-fidelity sea surface environments with wind, wave, and current disturbances. The results show that the proposed method converges effectively and achieves high tracking accuracy and flexible obstacle avoidance, while avoiding the calculation of complex parameters.
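As an illustration of the guidance step, the following is a minimal sketch of how a carrot-chasing reference heading could be computed for a single straight path segment. It is not the authors' implementation: the function names, the `lookahead` parameter, the clamping of the carrot point to the segment end, and the wrapped heading error are all assumptions made for this example.

```python
import math


def carrot_chasing_heading(pos, wp_start, wp_end, lookahead):
    """Return a reference heading (rad) toward a 'carrot' point.

    The carrot is placed `lookahead` metres ahead of the vehicle's
    projection onto the segment wp_start -> wp_end (assumed straight).
    """
    px, py = pos
    x1, y1 = wp_start
    x2, y2 = wp_end
    dx, dy = x2 - x1, y2 - y1
    seg_len = math.hypot(dx, dy)
    if seg_len == 0.0:
        raise ValueError("path segment has zero length")
    ux, uy = dx / seg_len, dy / seg_len  # unit vector along the path

    # Along-track distance of the vehicle's projection onto the path.
    along = (px - x1) * ux + (py - y1) * uy

    # Assumption: clamp the carrot so it never passes the end waypoint.
    carrot_along = min(along + lookahead, seg_len)
    cx = x1 + carrot_along * ux
    cy = y1 + carrot_along * uy

    # Heading from the vehicle to the carrot point.
    return math.atan2(cy - py, cx - px)


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def heading_error(reference, current):
    """Signed heading error, e.g. as one component of a DRL state."""
    return wrap_angle(reference - current)


if __name__ == "__main__":
    psi_ref = carrot_chasing_heading((0.0, 1.0), (0.0, 0.0), (10.0, 0.0), 1.0)
    print(psi_ref, heading_error(psi_ref, 0.0))
```

In a hybrid scheme of the kind the abstract describes, a quantity such as the heading error would be one input among the sensor readings passed to the learned policy; the exact state definition used in the paper is not given in this section.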

Data availability

All data included in this study are available from the corresponding author upon reasonable request.

Acknowledgements

This work was partially supported by the Fundamental Research Funds for the Central Universities (Grant No. 201962010) and the National Natural Science Foundation of China (Grant No. 51909252).

Author information

Contributions

DS was involved in original draft preparation and hardware resources. WG was involved in conceptualization, methodology, software, and writing and editing. PY was involved in conceptualization, original draft preparation, and revision. WZ was involved in dynamic modeling and methodology. XQ was involved in revision and writing and editing.

Ethics declarations

Conflict of interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

About this article

Cite this article

Song, D., Gan, W., Yao, P. et al. Surface path tracking method of autonomous surface underwater vehicle based on deep reinforcement learning. Neural Comput & Applic 35, 6225–6245 (2023). https://doi.org/10.1007/s00521-022-08009-3

DOI: https://doi.org/10.1007/s00521-022-08009-3