Abstract

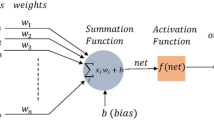

The multilayer perceptron (MLP), a type of feed-forward neural network, is widely used for a variety of artificial intelligence problems. Backpropagation is the most common learning method for MLPs. However, classical gradient-based backpropagation has several drawbacks, such as entrapment in local minima, slow convergence, and sensitivity to initialization. To eliminate or reduce these drawbacks, many studies in the literature use metaheuristic optimization methods instead of classical methods, and such methods continue to be developed. One of them is the improved grey wolf optimizer (IMP-GWO), which was proposed to overcome the weaknesses of the grey wolf optimizer (GWO): a lack of search agent diversity, premature convergence, and an imbalance between exploitation and exploration. In this study, a new hybrid machine learning method, IMP-GWO-MLP, was designed for the first time by combining IMP-GWO and MLP. IMP-GWO was used to determine the weight and bias values, which is the most challenging part of the MLP training phase. The proposed IMP-GWO-MLP was applied to 20 datasets: three function-approximation problems, eight regression problems, and nine classification problems. The results were compared with those of the gradient descent-based MLP and of MLPs trained with methods proposed in the literature, namely the commonly used GWO, particle swarm optimization, the whale optimization algorithm, the ant lion optimizer, and a genetic algorithm. The experimental results show that the proposed method outperforms these state-of-the-art methods. In addition, the proposed method is expected to model real-world problems with high success.
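The core idea described above is that each search agent's position encodes every weight and bias of the MLP, and the optimizer lowers the training error by moving agents rather than by following gradients. The Python sketch below illustrates this under explicit assumptions: a hypothetical 2-4-1 sigmoid network, mean squared error (MSE) as the fitness, search bounds of [-10, 10], and XOR as a toy dataset. The "improved" step is modelled on the dimension learning-based hunting (DLH) strategy described for the improved GWO by Nadimi-Shahraki et al. (2021) and may differ from it in details; it is not the authors' MATLAB implementation, and all function names are illustrative.

```python
import math
import random

# Hypothetical 2-4-1 network with sigmoid units; the paper's per-dataset
# architectures are NOT reproduced here.
N_IN, N_HID, N_OUT = 2, 4, 1
DIM = N_HID * N_IN + N_HID + N_OUT * N_HID + N_OUT  # weights + biases = 17


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, vec):
    """Decode a flat position vector laid out as [W1 | b1 | W2 | b2] and run the MLP."""
    w1, rest = vec[:N_HID * N_IN], vec[N_HID * N_IN:]
    b1, rest = rest[:N_HID], rest[N_HID:]
    w2, b2 = rest[:N_OUT * N_HID], rest[N_OUT * N_HID:]
    h = [sigmoid(sum(w1[j * N_IN + i] * x[i] for i in range(N_IN)) + b1[j])
         for j in range(N_HID)]
    return [sigmoid(sum(w2[o * N_HID + j] * h[j] for j in range(N_HID)) + b2[o])
            for o in range(N_OUT)]


def mse(vec, data):
    """Fitness of one search agent: mean squared error over (inputs, targets) pairs."""
    err = sum((p - t) ** 2 for x, y in data for p, t in zip(forward(x, vec), y))
    return err / len(data)


def clip(v, lb, ub):
    return min(ub, max(lb, v))


def gwo_candidate(w, leaders, a, rng, lb, ub):
    """Standard GWO move: average of the steps guided by alpha, beta and delta."""
    new = []
    for d in range(len(w)):
        total = 0.0
        for leader in leaders:
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            total += leader[d] - A * abs(C * leader[d] - w[d])
        new.append(clip(total / 3.0, lb, ub))
    return new


def train_improved_gwo(data, n_agents=30, iters=150, lb=-10.0, ub=10.0, seed=1):
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(DIM)] for _ in range(n_agents)]
    fit = [mse(w, data) for w in pop]
    history = []  # best MSE at the start of each iteration, plus the final value
    for t in range(iters):
        order = sorted(range(n_agents), key=fit.__getitem__)
        leaders = [pop[i] for i in order[:3]]  # alpha, beta, delta
        history.append(fit[order[0]])
        a = 2.0 - 2.0 * t / iters  # decreases linearly from 2 toward 0
        new_pop, new_fit = [], []
        for i, w in enumerate(pop):
            x_gwo = gwo_candidate(w, leaders, a, rng, lb, ub)
            # DLH-style step: neighbours lie within the distance moved by the GWO step.
            radius = math.dist(w, x_gwo)
            neigh = [p for p in pop if math.dist(w, p) <= radius]  # always contains w
            x_dlh = [clip(w[d] + rng.random() * (rng.choice(neigh)[d] - rng.choice(pop)[d]),
                          lb, ub) for d in range(DIM)]
            f_gwo, f_dlh = mse(x_gwo, data), mse(x_dlh, data)
            cand, f_cand = (x_gwo, f_gwo) if f_gwo < f_dlh else (x_dlh, f_dlh)
            keep = f_cand < fit[i]  # greedy: accept the candidate only if it improves
            new_pop.append(cand if keep else w)
            new_fit.append(f_cand if keep else fit[i])
        pop, fit = new_pop, new_fit
    best = min(range(n_agents), key=fit.__getitem__)
    history.append(fit[best])
    return pop[best], fit[best], history


if __name__ == "__main__":
    xor = [((0, 0), (0,)), ((0, 1), (1,)), ((1, 0), (1,)), ((1, 1), (0,))]
    vec, err, hist = train_improved_gwo(xor)
    print(f"best training MSE after {len(hist) - 1} iterations: {err:.4f}")
```

Because a candidate replaces an agent only when it lowers that agent's MSE, the best fitness in this sketch never increases from one iteration to the next; the price is two fitness evaluations per agent per iteration instead of one.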

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Replication of results

The results presented in this paper were generated with MATLAB code developed by the authors. Additional information and code will be made available upon reasonable request to the corresponding author.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Altay, O., Varol Altay, E. A novel hybrid multilayer perceptron neural network with improved grey wolf optimizer. Neural Comput & Applic 35, 529–556 (2023). https://doi.org/10.1007/s00521-022-07775-4
