Abstract

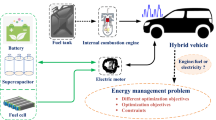

In this paper, a deep Q-learning (DQL)-based energy management strategy (EMS) is designed for an electric vehicle. First, the energy management problem is reformulated, taking the system dynamics into account, so that DQL can be applied. Then, to minimize electricity consumption and maximize battery lifetime, the DQL-based EMS is designed to split the power demand properly into two parts: one supplied by the battery and the other by the supercapacitor. In addition, a hyperparameter tuning method, Bayesian optimization (BO), is introduced to optimize the hyperparameter configuration of the DQL-based EMS. Simulations are conducted to validate the improvements brought by BO and the convergence of the DQL algorithm with the tuned hyperparameters. Simulations are also carried out on both the training and the testing datasets to validate the optimality and adaptability of the DQL-based EMS; the developed EMS outperforms a previously published rule-based EMS in almost all cases.
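The battery/supercapacitor power split described above can be illustrated with a minimal sketch. It rests on several loud assumptions. It uses tabular Q-learning instead of a deep Q-network: a DQN would typically replace the table `q` with a neural network and add experience replay and a target network. The discretization (`SOC_BINS`, `DEMANDS`, `SPLITS`), the supercapacitor energy `SC_ENERGY`, the loss weight `W_SC`, the randomly drawn demand, and the quadratic reward are all hypothetical stand-ins. They are not the paper's system model, cost function, or drive cycles. The state is the supercapacitor state of charge (SOC) together with the current power demand. Each action chooses the share of the demand supplied by the battery, and the supercapacitor supplies the remainder.

```python
import random

# All values below are hypothetical, chosen only for illustration.
SOC_BINS = 11                         # supercapacitor SOC levels 0.0, 0.1, ..., 1.0
DEMANDS = [-10.0, 0.0, 10.0, 20.0]    # power demand in kW (negative = regenerative braking)
SPLITS = [0.0, 0.25, 0.5, 0.75, 1.0]  # share of the demand supplied by the battery
SC_ENERGY = 100.0                     # usable supercapacitor energy in kJ
DT = 1.0                              # control time step in s
W_SC = 0.5                            # relative weight of supercapacitor losses


def soc_index(soc):
    """Map a continuous SOC in [0, 1] to a table row."""
    return round(soc * (SOC_BINS - 1))


def step(soc, demand, split):
    """Apply one power split and return (next_soc, reward)."""
    p_bat = split * demand
    p_sc = demand - p_bat
    next_soc = soc - p_sc * DT / SC_ENERGY
    if not 0.0 <= next_soc <= 1.0:
        # The supercapacitor saturates; the battery covers the shortfall or surplus.
        clipped = min(max(next_soc, 0.0), 1.0)
        p_sc_feasible = (soc - clipped) * SC_ENERGY / DT
        p_bat += p_sc - p_sc_feasible
        p_sc = p_sc_feasible
        next_soc = clipped
    # Quadratic penalty as a crude proxy for battery stress and resistive losses.
    reward = -(p_bat ** 2 + W_SC * p_sc ** 2)
    return next_soc, reward


def best_action(q_row):
    """Index of the action with the largest Q-value in one table cell."""
    return max(range(len(SPLITS)), key=lambda i: q_row[i])


def train(episodes=1000, steps=50, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[soc_bin][demand_index][action_index], initialized to zero
    q = [[[0.0] * len(SPLITS) for _ in DEMANDS] for _ in range(SOC_BINS)]
    for _ in range(episodes):
        soc = 0.5
        d = rng.randrange(len(DEMANDS))
        for _ in range(steps):
            s = soc_index(soc)
            # Epsilon-greedy exploration
            if rng.random() < epsilon:
                a = rng.randrange(len(SPLITS))
            else:
                a = best_action(q[s][d])
            soc, r = step(soc, DEMANDS[d], SPLITS[a])
            d_next = rng.randrange(len(DEMANDS))  # i.i.d. demand in place of a drive cycle
            s_next = soc_index(soc)
            # Q-learning update toward the target r + gamma * max_a' Q(s', a')
            target = r + gamma * max(q[s_next][d_next])
            q[s][d][a] += alpha * (target - q[s][d][a])
            d = d_next
    return q


def greedy_split(q, soc, demand_index):
    """Battery share chosen by the learned greedy policy."""
    return SPLITS[best_action(q[soc_index(soc)][demand_index])]
```

For example, `q = train()` followed by `greedy_split(q, 0.5, 3)` returns the learned battery share for a 20 kW demand at 50% supercapacitor SOC. The learning rate `alpha`, discount factor `gamma`, and exploration rate `epsilon` are the kind of hyperparameters that a tuner such as BO would search over, so this sketch exposes them as arguments of `train`.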



Acknowledgements

This research was supported by the National Science and Technology Support Program under grant No 2014BAG06B02, Fundamental Research Funds for the Central Universities under grant No 2014HGCH0003 and the National Natural Science Foundation of China under Grant 61771178.


Ethics declarations

Conflict of interest

The authors declare that they have no competing interests in the present work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Kong, H., Yan, J., Wang, H. et al. Energy management strategy for electric vehicles based on deep Q-learning using Bayesian optimization. Neural Comput & Applic 32, 14431–14445 (2020). https://doi.org/10.1007/s00521-019-04556-4


DOI: https://doi.org/10.1007/s00521-019-04556-4