Abstract

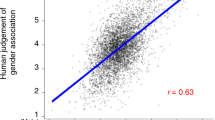

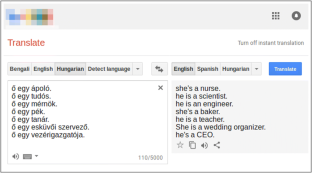

Recently there has been a growing concern in academia, industrial research laboratories and the mainstream commercial media about the phenomenon dubbed machine bias, where trained statistical models, unbeknownst to their creators, grow to reflect controversial societal asymmetries such as gender or racial bias. A significant number of Artificial Intelligence tools have recently been suggested to be harmfully biased against some minority, with reports of racist criminal behavior predictors, Apple’s iPhone X failing to differentiate between two distinct Asian people, and the now infamous case of Google Photos mistakenly classifying black people as gorillas. Although a systematic study of such biases can be difficult, we believe that automated translation tools can be exploited through gender-neutral languages to yield a window into the phenomenon of gender bias in AI. In this paper, we start with a comprehensive list of job positions from the U.S. Bureau of Labor Statistics (BLS) and use it to build sentences in constructions like “He/She is an Engineer” (where “Engineer” is replaced by the job position of interest) in 12 different gender-neutral languages such as Hungarian, Chinese, Yoruba, and several others. We translate these sentences into English using the Google Translate API and collect statistics about the frequency of female, male and gender-neutral pronouns in the translated output. We then show that Google Translate exhibits a strong tendency toward male defaults, in particular for fields typically associated with unbalanced gender distribution or stereotypes, such as STEM (Science, Technology, Engineering and Mathematics) jobs. We also ran these statistics against BLS data on the frequency of female participation in each job position, showing that Google Translate fails to reproduce a real-world distribution of female workers.
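
The counting step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ code: the `translate` argument is a hypothetical stand-in for the Google Translate API call, the job titles are assumed to be already rendered in the source language, and the pronoun word lists (including the rule that an output such as “He/she is …” counts as gender neutral) are our own assumptions rather than the paper’s exact classification scheme.

```python
import re
from collections import Counter
from typing import Callable, Iterable

# Assumed English pronoun lists used to label each translated sentence.
MALE = {"he", "him", "his"}
FEMALE = {"she", "her", "hers"}
NEUTRAL = {"they", "them", "their", "it"}


def build_sentences(template: str, jobs: Iterable[str]) -> list[str]:
    """Fill a source-language template such as 'ő egy {job}' with each job title."""
    return [template.format(job=job) for job in jobs]


def classify_pronoun(translation: str) -> str:
    """Label an English translation as 'male', 'female', 'neutral' or 'unknown'."""
    tokens = set(re.findall(r"[a-z]+", translation.lower()))
    has_male = bool(tokens & MALE)
    has_female = bool(tokens & FEMALE)
    if has_male and has_female:
        return "neutral"  # e.g. "He/she is an engineer" keeps both readings
    if has_male:
        return "male"
    if has_female:
        return "female"
    if tokens & NEUTRAL:
        return "neutral"
    return "unknown"  # no recognizable pronoun in the output


def tally(translations: Iterable[str]) -> Counter:
    """Count how often each pronoun label occurs."""
    return Counter(classify_pronoun(t) for t in translations)


def run_language(template: str, jobs: Iterable[str],
                 translate: Callable[[str], str]) -> Counter:
    """Translate every templated sentence with a caller-supplied function and tally the labels."""
    return tally(translate(s) for s in build_sentences(template, jobs))
```

For example, `classify_pronoun("She is a nurse.")` returns `"female"`, and `run_language("ő egy {job}", hungarian_jobs, translate)` would tally one language, where `hungarian_jobs` and `translate` are hypothetical inputs supplied by the caller. Keeping the translation call behind a plain function argument makes the counting logic testable without network access.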
In summary, we provide experimental evidence that, even if one does not expect a 50:50 pronominal gender distribution in principle, Google Translate yields male defaults much more frequently than would be expected from demographic data alone. We believe that our study can shed further light on the phenomenon of machine bias, and we hope it will ignite a debate about the need to augment current statistical translation tools with debiasing techniques, which can already be found in the scientific literature.
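
The comparison against demographic data can be pictured as a per-occupation gap between the observed share of female pronouns and the BLS female participation rate for that occupation. The sketch below is an illustration under that assumption, not the statistical analysis reported in the paper; the function names are hypothetical, and any job labels and numbers fed to it in the usage line are toy inputs, not BLS figures or measured results.

```python
from statistics import mean


def female_share(counts: dict[str, int]) -> float:
    """Fraction of labeled translations that used a female pronoun (0.0 if empty).

    The denominator includes male, neutral and unknown labels as well.
    """
    total = sum(counts.values())
    return counts.get("female", 0) / total if total else 0.0


def participation_gaps(pronoun_counts: dict[str, dict[str, int]],
                       bls_female_share: dict[str, float]) -> dict[str, float]:
    """Observed female-pronoun share minus BLS female participation, per occupation.

    Negative values mean female pronouns appear less often than the
    workforce statistics alone would suggest. Occupations missing from
    the participation table are skipped.
    """
    return {
        job: female_share(counts) - bls_female_share[job]
        for job, counts in pronoun_counts.items()
        if job in bls_female_share
    }


def mean_gap(gaps: dict[str, float]) -> float:
    """Average gap across occupations; a clearly negative value points to male defaults beyond demographics."""
    return mean(list(gaps.values())) if gaps else 0.0
```

With toy inputs such as `{"job_a": {"male": 3, "female": 1}}` and a participation rate of `0.5` for `job_a`, `participation_gaps` reports a gap of `-0.25`, i.e. female pronouns appear half as often as the toy workforce share would predict.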

Acknowledgements

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001 and the Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq).

Ethics declarations

Conflict of Interest

All authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Prates, M.O.R., Avelar, P.H. & Lamb, L.C. Assessing gender bias in machine translation: a case study with Google Translate. Neural Comput & Applic 32, 6363–6381 (2020). https://doi.org/10.1007/s00521-019-04144-6

DOI: https://doi.org/10.1007/s00521-019-04144-6