Abstract

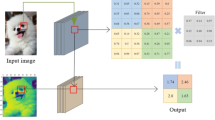

Convolutional networks (ConvNets) have been shown to perform better as their depth increases. Deep networks, however, are still imperfect: they suffer from vanishing or exploding gradients, and some weights learn nothing during training. To avoid these problems, can we keep the network shallow and simply make it wide enough to achieve similar or better performance? To answer this question, we empirically investigate the architectures of popular ConvNet models and widen the network at a fixed depth. Following this method, we carefully design a shallow and wide ConvNet that uses the fractional max-pooling operation and has a reasonable number of parameters. With this approach, we achieve a 6.43% test error on the CIFAR-10 classification dataset. The network also achieves the best performance among the related methods compared on the benchmark datasets MNIST (0.25% test error) and CIFAR-100 (25.79% test error).
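For readers unfamiliar with fractional max-pooling, the following is a minimal sketch of the random, disjoint-region variant described by Graham (2014), not the configuration used in this article. The function names `fmp_boundaries` and `fractional_max_pool`, the 6 × 6 input, and the 6 → 4 reduction (a pooling ratio of 1.5) are all illustrative assumptions. The idea is to split each axis into regions of width 1 or 2 in random order, so that the output size can be any value between half the input size and the input size.

```python
import random


def fmp_boundaries(n_in, n_out, rng):
    """Return boundaries 0 = a_0 < ... < a_{n_out} = n_in with steps of 1 or 2.

    Illustrative helper (hypothetical name): the steps are shuffled at random,
    which corresponds to the "random" region choice, not the pseudorandom one.
    """
    if not (n_out <= n_in <= 2 * n_out):
        raise ValueError("need n_out <= n_in <= 2 * n_out")
    n_twos = n_in - n_out                      # steps of size 2 needed
    steps = [2] * n_twos + [1] * (n_out - n_twos)
    rng.shuffle(steps)
    bounds = [0]
    for step in steps:
        bounds.append(bounds[-1] + step)
    return bounds


def fractional_max_pool(image, n_out, rng):
    """Max-pool a 2D list down to n_out x n_out using disjoint random regions."""
    rows = fmp_boundaries(len(image), n_out, rng)
    cols = fmp_boundaries(len(image[0]), n_out, rng)
    return [
        [
            max(
                image[r][c]
                for r in range(rows[i], rows[i + 1])
                for c in range(cols[j], cols[j + 1])
            )
            for j in range(n_out)
        ]
        for i in range(n_out)
    ]


# Assumed toy input: a 6 x 6 grid pooled to 4 x 4 (ratio 6 / 4 = 1.5).
rng = random.Random(0)
image = [[r * 6 + c for c in range(6)] for r in range(6)]
pooled = fractional_max_pool(image, 4, rng)
```

Because the regions are redrawn on every call, repeated passes over the same image see slightly different pooled outputs. A deep-learning framework's built-in fractional pooling layer would normally be used in place of this pure-Python version.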

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Yue, K., Xu, F. & Yu, J. Shallow and wide fractional max-pooling network for image classification. Neural Comput & Applic 31, 409–419 (2019). https://doi.org/10.1007/s00521-017-3073-x

DOI: https://doi.org/10.1007/s00521-017-3073-x