Abstract

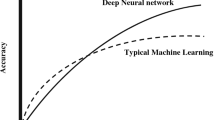

Training artificial neural networks is considered one of the most challenging machine learning problems, mainly because the search space contains a large number of solutions and changes from one dataset to another. Conventional training techniques mostly suffer from local optima stagnation and degraded convergence, which makes them impractical for datasets with many features. The literature shows that stochastic population-based optimization techniques suit this problem better and are reliable alternatives because of their high local optima avoidance and flexibility. For the first time, this work proposes a new learning mechanism for radial basis function networks based on the biogeography-based optimizer, one of the most well-regarded optimizers in the literature. To demonstrate the efficacy of the proposed methodology, it is applied to 12 well-known datasets and compared with 11 current training algorithms, including gradient-based and stochastic approaches. The paper also varies the number of neurons to investigate the performance of the algorithms on radial basis function networks with different numbers of parameters. A statistical test is conducted to judge the significance of the results. The results show that the biogeography-based optimizer trainer substantially outperforms the current training algorithms on all datasets in terms of classification accuracy, speed of convergence, and avoidance of entrapment in local optima. In addition, the comparison of trainers on radial basis function networks with different numbers of neurons reveals that the biogeography-based optimizer trainer is able to train radial basis function networks with different numbers of structural parameters effectively.
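To make the idea of a population-based trainer concrete, the following is a minimal sketch and not the implementation evaluated in the paper. It assumes a Gaussian radial basis function network with a single output, a flat parameter vector laid out as centers, widths, output weights, and one bias, and mean squared error as the fitness. The optimizer is a simplified biogeography-based loop with a linear migration model, a fixed uniform mutation rate, and elitism. The names `rbf_forward`, `mse`, and `bbo_train`, the search range [-1, 1], and all default settings are hypothetical choices made for illustration.

```python
import math
import random


def rbf_forward(params, x, n_hidden):
    """Output of a single-output Gaussian RBF network for one input x.

    Assumed layout of params: n_hidden centers (each len(x) values),
    then n_hidden widths, then n_hidden output weights, then one bias.
    """
    d = len(x)
    centers = [params[i * d:(i + 1) * d] for i in range(n_hidden)]
    widths = params[n_hidden * d:n_hidden * d + n_hidden]
    weights = params[n_hidden * d + n_hidden:n_hidden * d + 2 * n_hidden]
    out = params[-1]  # bias
    for c, s, w in zip(centers, widths, weights):
        dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        sigma = abs(s) + 1e-6  # keep the width strictly positive
        out += w * math.exp(-dist2 / (2.0 * sigma ** 2))
    return out


def mse(params, X, y, n_hidden):
    """Mean squared error of the network over a dataset (lower is better)."""
    return sum((rbf_forward(params, x, n_hidden) - t) ** 2
               for x, t in zip(X, y)) / len(X)


def bbo_train(X, y, n_hidden=4, pop_size=20, generations=100,
              p_mutate=0.05, n_elite=2, seed=0):
    """Simplified BBO: each habitat is one full set of network parameters."""
    rng = random.Random(seed)
    dim = n_hidden * len(X[0]) + 2 * n_hidden + 1
    pop = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(pop_size)]
    history = []  # best error at the start of each generation
    for _ in range(generations):
        pop.sort(key=lambda h: mse(h, X, y, n_hidden))  # best habitat first
        history.append(mse(pop[0], X, y, n_hidden))
        # Linear migration model: good habitats emigrate more, immigrate less.
        emigration = [(pop_size - r) / (pop_size + 1) for r in range(pop_size)]
        immigration = [1.0 - e for e in emigration]
        new_pop = [h[:] for h in pop[:n_elite]]  # elites survive unchanged
        for r in range(n_elite, pop_size):
            child = pop[r][:]
            for j in range(dim):
                if rng.random() < immigration[r]:
                    # Roulette-wheel choice of a donor habitat by emigration rate.
                    donor = rng.choices(range(pop_size), weights=emigration)[0]
                    child[j] = pop[donor][j]
                if rng.random() < p_mutate:
                    child[j] = rng.uniform(-1, 1)  # uniform random mutation
            new_pop.append(child)
        pop = new_pop
    best = min(pop, key=lambda h: mse(h, X, y, n_hidden))
    history.append(mse(best, X, y, n_hidden))
    return best, history


if __name__ == "__main__":
    # Toy XOR problem, used only to exercise the sketch.
    X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
    y = [0.0, 1.0, 1.0, 0.0]
    best, history = bbo_train(X, y)
    print("initial best MSE:", history[0])
    print("final best MSE:", history[-1])
```

Because the elite habitats are copied unchanged into the next generation, the best error recorded in `history` can never increase. The original biogeography-based optimizer ties mutation to species-count probabilities; the fixed mutation rate here is a deliberate simplification.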

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

About this article

Cite this article

Aljarah, I., Faris, H., Mirjalili, S. et al. Training radial basis function networks using biogeography-based optimizer. Neural Comput & Applic 29, 529–553 (2018). https://doi.org/10.1007/s00521-016-2559-2

DOI: https://doi.org/10.1007/s00521-016-2559-2