Abstract

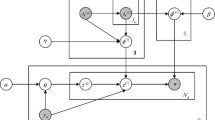

Recently, several statistical topic modeling approaches based on latent Dirichlet allocation (LDA) have been applied to supervised document classification, where the model generation procedure incorporates prior knowledge to improve classification performance. However, these customizations of topic modeling are limited by the cumbersome derivation of a specific inference algorithm for each modification. In this paper, we propose Neural Labeled LDA (NL-LDA), a new supervised topic modeling approach for document classification that builds on the variational autoencoder (VAE) framework and uses a specially designed generative network to incorporate prior information. The proposed model supports semi-supervised learning based on the manifold assumption and the low-density assumption, and it uses the same concise inference method for both semi-supervised learning and prediction. Quantitative experimental results show that our model performs strongly on supervised document classification relative to the compared approaches, including traditional statistical and neural topic models. In particular, the proposed model supports both single-label and multi-label document classification. NL-LDA also performs well on semi-supervised classification, especially when only a small amount of labeled data is available. Further comparisons with related work indicate that our model is competitive with state-of-the-art topic modeling approaches on semi-supervised classification.

Notes

sklearn.datasets.fetch_20newsgroups.

tensorflow.keras.datasets.imdb.

In the implementation, we replace the diagonal covariance with its logarithm (the log diagonal covariance) for computational convenience.
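The log-covariance parameterization mentioned in this note can be illustrated with a minimal sketch of a generic Gaussian VAE latent layer. This is not the authors' code: the function names, the example values, and the standard normal prior N(0, I) are assumptions made for illustration, and the prior used in NL-LDA may differ. The point is that an encoder can output any real number as a log-variance, so no positivity constraint is needed, and the KL term can be written directly in terms of that value.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Reparameterized draw: z = mu + sigma * eps, with sigma = exp(0.5 * log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ).

    The N(0, I) prior is an assumption of this sketch.
    """
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


rng = random.Random(0)       # fixed seed so the sketch is repeatable
mu = [0.0, 1.0]              # hypothetical encoder mean
log_var = [0.0, -2.0]        # hypothetical encoder log-variance (unconstrained)
z = sample_latent(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
```

Because the variance is recovered as `exp(log_var)`, it is always positive, which is the usual reason for predicting the logarithm rather than the covariance itself.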

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant No. 61772352, and by the Science and Technology Planning Project of Sichuan Province under Grant Nos. 2019YFG0400, 2018GZDZX0031, 2018GZDZX0004, 2017GZDZX0003, 2018JY0182, and 19ZDYF1286.

Author information

Contributions

Wei Wang was involved in conceptualization and writing the original draft. Wei Wang and Bing Guo were involved in methodology and investigation. Wei Wang, Bing Guo, Yaosen Chen and Xinhua Suo were involved in formal analysis. Han Yang and Wei Wang developed the software. Bing Guo, Yan Shen and Han Yang were involved in review and editing. Bing Guo and Yan Shen were involved in supervision and funding acquisition. Han Yang, Xinhua Suo and Yaosen Chen were involved in validation. Han Yang, Wei Wang and Yaosen Chen were involved in dataset preparation. Yaosen Chen was involved in visualization.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest or competing interests related to this work.

Availability of data and material

The datasets used during the current study are publicly available.

Code availability

The source code used in the current study is available from the first author or corresponding author on reasonable request.

About this article

Cite this article

Wang, W., Guo, B., Shen, Y. et al. Neural labeled LDA: a topic model for semi-supervised document classification. Soft Comput 25, 14561–14571 (2021). https://doi.org/10.1007/s00500-021-06310-2

DOI: https://doi.org/10.1007/s00500-021-06310-2