Abstract

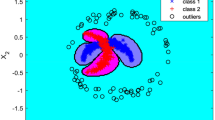

Multi-class supervised novelty detection (multi-class SND) is used to find a few anomalies among many unknown samples when the normal samples follow a mixture of distributions. It requires solving a quadratic program (QP) that is larger than the one in the one-class support vector machine: in multi-class SND, each sample corresponds to \( n_{c} \) variables in the QP, where \( n_{c} \) is the number of normal classes. Solving multi-class SND directly is therefore time-consuming. Fortunately, the solution of multi-class SND is determined only by the few samples with nonzero Lagrange multipliers. Exploiting this sparsity, we learn multi-class SND on a small subset instead of the whole training set. The subset consists of the samples likely to have nonzero Lagrange multipliers; these samples lie near the boundaries of the distributions and can be identified from the distribution of their nearest neighbours. Our method is evaluated on two toy data sets and three hyperspectral remote sensing data sets. The experimental results show that performance when learning on the retained subset is almost the same as when learning on the whole training set, while the training time drops to less than one tenth of that on the whole training set.
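
The abstract does not spell out the exact selection rule, so the following is only a minimal sketch of the general idea under stated assumptions, not the authors' method. It assumes one plausible neighbour-distribution criterion: average the unit vectors from a sample to its k nearest neighbours within the same class. Interior samples have neighbours on all sides, so the average is short, while boundary samples have neighbours mostly on one side, so the average is long. The names `boundary_scores` and `select_subset`, the neighbourhood size `k`, the retention ratio `keep_ratio` and the toy data are all hypothetical. The QP size is counted as (number of samples) × \( n_{c} \), following the statement that each sample contributes \( n_{c} \) variables; the multi-class SND solver itself is not shown.

```python
import numpy as np


def boundary_scores(X, k=10):
    """Hypothetical boundary score for each row of X, in [0, 1].

    Assumed criterion (illustrative only): the length of the mean of the
    unit vectors pointing from a sample to its k nearest neighbours.
    Near 0 means neighbours surround the sample; near 1 means they lie
    on one side, which suggests the sample is near the boundary.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    k = min(k, n - 1)
    # Brute-force pairwise squared Euclidean distances (O(n^2) memory).
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(d2, np.inf)  # a sample is not its own neighbour
    nn = np.argsort(d2, axis=1)[:, :k]  # indices of the k nearest neighbours
    diffs = X[nn] - X[:, None, :]  # shape (n, k, d)
    norms = np.linalg.norm(diffs, axis=2, keepdims=True)
    units = diffs / np.maximum(norms, 1e-12)  # unit direction to each neighbour
    return np.linalg.norm(units.mean(axis=1), axis=1)


def select_subset(X, y, k=10, keep_ratio=0.1):
    """Hypothetical helper: keep the most boundary-like fraction of each normal class."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    keep = []
    for c in np.unique(y):
        idx = np.flatnonzero(y == c)
        scores = boundary_scores(X[idx], k=k)  # neighbours searched within class c only
        m = max(1, int(round(keep_ratio * idx.size)))
        keep.append(idx[np.argsort(-scores)[:m]])
    return np.sort(np.concatenate(keep))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy data (not from the paper): two Gaussian normal classes, 1000 samples each.
    X = np.vstack([rng.normal(0.0, 1.0, (1000, 2)), rng.normal(6.0, 1.0, (1000, 2))])
    y = np.repeat([0, 1], 1000)
    n_c = np.unique(y).size
    keep = select_subset(X, y, k=10, keep_ratio=0.1)
    print("QP variables, full set:", X.shape[0] * n_c)  # 2000 * 2 = 4000
    print("QP variables, subset:", keep.size * n_c)  # 200 * 2 = 400
```

Scoring each class separately reflects the assumption that the normal data form a mixture with one component per class, so "boundary" is judged relative to each class's own distribution. Because the QP has \( n_{c} \) variables per retained sample, keeping 10% of the samples shrinks the QP by the same factor; how much accuracy survives depends on whether the retained samples actually cover the true support vectors.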

Funding

This study was funded by the National Natural Science Foundation of China (No. 61602221).

Ethics declarations

Conflict of interest

Weiping Shi declares that he has no conflict of interest. Shengwen Yu declares that he has no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Shi, W., Yu, S. Fast supervised novelty detection and its application in remote sensing. Soft Comput 23, 11839–11850 (2019). https://doi.org/10.1007/s00500-018-03740-3

DOI: https://doi.org/10.1007/s00500-018-03740-3