Abstract

Model order reduction (MOR) techniques are often used to reduce the order of spatially discretized (stochastic) partial differential equations and hence reduce computational complexity. A particular class of MOR techniques is balancing related methods which rely on simultaneously diagonalizing the system Gramians. This has been extensively studied for deterministic linear systems. The balancing procedure has already been extended to bilinear equations, an important subclass of nonlinear systems. The choice of Gramians in Al-Baiyat and Bettayeb (In: Proceedings of the 32nd IEEE conference on decision and control, 1993) is the most frequently used approach. A balancing related MOR scheme for bilinear systems called singular perturbation approximation (SPA) has been described that relies on this choice of Gramians. However, no error bound for this method could be proved. In this paper, we extend SPA to stochastic systems with bilinear drift and linear diffusion term. However, we propose a slightly modified reduced order model in comparison to previous work and choose a different reachability Gramian. Based on this new approach, an \(L^2\)-error bound is proved for SPA which is the main result of this paper. This bound is new even for deterministic bilinear systems.

1 Introduction

Many phenomena in real life can be described by partial differential equations (PDEs). For an accurate mathematical modeling of these real-world applications, it is often required to take random effects into account. Uncertainties in a PDE model can, for example, be represented by an additional noise term leading to stochastic PDEs (SPDEs) [11, 15, 28, 29].

It is often necessary to numerically approximate time-dependent SPDEs since analytic solutions do not exist in general. Discretizing in space can be considered as a first step. This can, for example, be done by spectral Galerkin [17, 19, 20] or finite element methods [2, 21, 22]. This usually leads to large-scale SDEs. Solving such complex SDE systems causes large computational cost. In this context, model order reduction (MOR) is used to save computational time by replacing high-dimensional systems by systems of low order in which the main information of the original system should be captured.

1.1 Literature review

Balancing related MOR schemes were developed for deterministic linear systems first. Famous representatives of this class of methods are balanced truncation (BT) [3, 26, 27] and singular perturbation approximation (SPA) [14, 23].

BT was extended in [5, 8] and SPA was generalized in [33] to stochastic linear systems. With this first extension, however, no \(L^2\)-error bound can be achieved [6, 12]. Therefore, an alternative approach based on a different reachability Gramian was studied for stochastic linear systems leading to an \(L^2\)-error bound for BT [12] and for SPA [32].

BT [1, 5] and SPA [18] were also generalized to bilinear systems, which we refer to as the standard approach for these systems. Although bilinear terms are very weak nonlinearities, they can be seen as a bridge between linear and nonlinear systems. This is because many nonlinear systems can be represented by bilinear systems using a so-called Carleman linearization. Applications of these equations can be found in various fields [10, 25, 34]. A drawback of the standard approach for bilinear systems is that no \(L^2\)-error bound could be shown so far. A first error bound for the standard ansatz was recently proved in [4], where an output error bound in \(L^\infty \) was formulated for infinite dimensional bilinear systems. Based on the alternative choice of Gramians in [12], a new type of BT for bilinear systems was considered [31] providing an \(L^2\)-error bound under the assumption of a possibly small bound on the controls.

A more general setting extending both the stochastic linear and the deterministic bilinear case is investigated in [30]. There, BT was studied and an \(L^2\)-error bound was proved overcoming the restriction of bounded controls in [31]. In this paper, we consider SPA for the same setting as in [30] in order to generalize the work in [18]. Moreover, we modify the reduced order model (ROM) in comparison to [18] and show an \(L^2\)-error bound which closes the gap in the theory in this context.

For further extensions of balancing related MOR techniques to other nonlinear systems, we refer to [7, 35].

1.2 Outline of the paper

This work on SPA for stochastic bilinear systems, see (1), can be interpreted as a generalization of the deterministic bilinear case [18]. This extension builds a bridge between stochastic linear systems and stochastic nonlinear systems such that SPA can possibly be used for many more stochastic equations and applications.

In Sect. 2, the procedure of SPA is described and the ROM is stated. With this, we provide an alternative to [30], where BT was studied for the same kind of systems. We also extend the work of [18] combined with a modification of the ROM and the choice of a new Gramian, compare with (3). Based on this, we obtain an error bound in Sect. 3 that was not available even for the deterministic bilinear case. Its proof requires new techniques that cannot be found in the literature so far and this is the main result of this paper. The efficiency of the error bound is shown in Sect. 5. There, the proposed version of SPA is compared with the one in [18] and with BT [30].

2 Setting and ROM

Let every stochastic process appearing in this paper be defined on a filtered probability space \(\left( \varOmega , {\mathcal {F}}, \left( {\mathcal {F}}_t\right) _{t\ge 0}, \mathbb P\right) \). Suppose that \(M=\left( M_1, \ldots , M_{v}\right) ^T\) is an \(\left( {\mathcal {F}}_t\right) _{t\ge 0}\)-adapted and \({\mathbb {R}}^{v}\)-valued mean zero Lévy process with \({\mathbb {E}} \left\| M(t)\right\| ^2_2={\mathbb {E}}\left[ M^T(t)M(t)\right] <\infty \) for all \(t\ge 0\). Moreover, we assume that for all \(t, h\ge 0\) the random variable \(M\left( t+h\right) -M\left( t\right) \) is independent of \({\mathcal {F}}_t\).

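We consider a large-scale stochastic control system with bilinear drift that can be interpreted as a spatially discretized SPDE. We investigate the system

\[\mathrm{d}x(t)=\Big [Ax(t)+Bu(t)+\sum _{k=1}^m N_k x(t) u_k(t)\Big ]\mathrm{d}t+\sum _{i=1}^v H_i x(t-)\,\mathrm{d}M_i(t),\quad x(0)=x_0, \tag{1a}\]

\[y(t)=Cx(t),\quad t\ge 0. \tag{1b}\]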

We assume that \(A, N_k, H_i\in {\mathbb {R}}^{n\times n}\) (\(k\in \left\{ 1, \ldots , m\right\} \) and \(i\in \left\{ 1, \ldots , v\right\} \)), \(B\in {\mathbb {R}}^{n\times m}\) and \(C\in {\mathbb {R}}^{p\times n}\). Moreover, we define \(x(t-):=\lim _{s\uparrow t} x(s)\). The control \(u=\left( u_1, \ldots , u_{m}\right) ^T\) is assumed to be deterministic and square integrable, i.e.,

\[\int _0^T \left\| u(t)\right\| _2^2 \,\mathrm{d}t<\infty \]

for every \(T>0\). By [28, Theorem 4.44] there is a matrix \(K=\left( k_{ij}\right) _{i, j=1, \ldots , v}\) such that \({\mathbb {E}}[M(t)M^T(t)]=K t\). K is called covariance matrix of M.

In this paper, we study SPA to obtain a ROM. SPA is a balancing related method and relies on defining a reachability Gramian P and an observability Gramian Q. These two matrices are selected such that P characterizes the states that barely contribute to the dynamics in (1a) and Q identifies the less important states in (1b), see [30] for estimates on the reachability and observability energy functionals. The estimates in [30] are global, whereas the standard choice of Gramians leads to results being valid in a small neighborhood of zero only [5, 16].

In order to ensure the existence of these Gramians, throughout the paper it is assumed that

Here, \(\lambda \left( \cdot \right) \) denotes the spectrum of a matrix. The reachability Gramian P and the observability Gramian Q are, according to [30], defined as the solutions to

where the existence of a positive definite solution to (3) goes back to [12, 32] and is ensured if (2) holds.

We approximate the large-scale system (1) by a system which has a much smaller state dimension \(r\ll n\). This ROM is supposed to be chosen such that the corresponding output \(y_r\) is close to the original one, i.e., \(y_r\approx y\) in some metric. In order to be able to remove the unimportant states in both (1a) and (1b) simultaneously, the first step of SPA is a state space transformation

\[(A, B, C, H_i, N_k)\mapsto (SAS^{-1}, SB, CS^{-1}, SH_iS^{-1}, SN_kS^{-1}),\]

where \(S=\varSigma ^{-\tfrac{1}{2}} X^T L_Q^T \) and \(S^{-1}=L_PY\varSigma ^{-\tfrac{1}{2}}\). The ingredients of the balancing transformation are computed by the Cholesky factorizations \(P=L_PL_P^T\), \(Q=L_QL_Q^T\), and the singular value decomposition \(X\varSigma Y^T=L_Q^TL_P\). This transformation does not change the output y of the system, but it guarantees that the new Gramians are diagonal and equal, i.e., \(S P S^T=S^{-T}Q S^{-1}=\varSigma ={\text {diag}}(\sigma _1,\ldots , \sigma _n)\) with \(\sigma _1\ge \ldots \ge \sigma _n\) being the Hankel singular values (HSVs) of the system.
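The construction of \(S\) and \(S^{-1}\) from the Cholesky factors and the singular value decomposition can be sketched numerically as follows. This is only an illustration: the function name `balancing_transformation` and the random test matrices are assumptions of this sketch, and the matrices `P` and `Q` here are arbitrary symmetric positive definite matrices rather than Gramians of a particular system.

```python
import numpy as np

def balancing_transformation(P, Q):
    """Return S, S^{-1} and the HSVs for symmetric positive definite P and Q."""
    L_P = np.linalg.cholesky(P)                  # P = L_P L_P^T
    L_Q = np.linalg.cholesky(Q)                  # Q = L_Q L_Q^T
    X, sigma, Yt = np.linalg.svd(L_Q.T @ L_P)    # X diag(sigma) Y^T = L_Q^T L_P
    scale = np.diag(sigma ** -0.5)               # Sigma^{-1/2}
    S = scale @ X.T @ L_Q.T
    S_inv = L_P @ Yt.T @ scale
    return S, S_inv, sigma

# Hypothetical data: random symmetric positive definite stand-ins for P and Q.
rng = np.random.default_rng(0)
n = 5
G1 = rng.standard_normal((n, n))
G2 = rng.standard_normal((n, n))
P = G1 @ G1.T + n * np.eye(n)
Q = G2 @ G2.T + n * np.eye(n)

S, S_inv, sigma = balancing_transformation(P, Q)
```

In this sketch, `S @ P @ S.T` and `S_inv.T @ Q @ S_inv` both reproduce `np.diag(sigma)` up to round-off, and `sigma` is returned in decreasing order by `np.linalg.svd`.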

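We partition the balanced coefficients of (1) as follows:

\[SAS^{-1}=\left[ {\begin{matrix}A_{11}&{}A_{12}\\ A_{21}&{}A_{22}\end{matrix}}\right] ,\quad SB=\left[ {\begin{matrix}B_1\\ B_2\end{matrix}}\right] ,\quad CS^{-1}=\left[ {\begin{matrix}C_1&{}C_2\end{matrix}}\right] ,\quad SH_iS^{-1}=\left[ {\begin{matrix}H_{i, 11}&{}H_{i, 12}\\ H_{i, 21}&{}H_{i, 22}\end{matrix}}\right] ,\quad SN_kS^{-1}=\left[ {\begin{matrix}N_{k, 11}&{}N_{k, 12}\\ N_{k, 21}&{}N_{k, 22}\end{matrix}}\right] , \tag{5}\]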

where \(A_{11}, N_{k, 11}, H_{i, 11}\in {\mathbb {R}}^{r\times r}\) (\(k\in \left\{ 1, \ldots , m\right\} \) and \(i\in \left\{ 1, \ldots , v\right\} \)), \(B_1\in {\mathbb {R}}^{r\times m}\) and \(C_1\in {\mathbb {R}}^{p\times r}\) etc. Furthermore, we partition the state variable \({\tilde{x}}\) of the balanced system and the diagonal matrix of HSVs

\[{\tilde{x}}=\left[ {\begin{matrix}x_1\\ x_2\end{matrix}}\right] ,\quad \varSigma =\left[ {\begin{matrix}\varSigma _1&{}\\ &{}\varSigma _2\end{matrix}}\right] , \tag{6}\]

where \(x_1\) takes values in \({\mathbb {R}}^r\) (\(x_2\) accordingly), \(\varSigma _1\) is the diagonal matrix of large HSVs and \(\varSigma _2\) contains the small ones.

Remark 1

The balancing procedure requires the computation of the Gramians from (3) and (4). Practically, one always computes the solution of the equation in (4). The reason why an inequality is considered is that the proof of the error bound in Theorem 3 does not need an equality in (4). However, it is essential that we consider an inequality in (3). In contrast to the equation that may not have a solution, the inequality always has a solution under the given assumptions, but some regularization is needed to enforce uniqueness. In particular, one solves an optimization problem like, e.g., minimize \({\text {tr}}(P)\) subject to (3). The reason why the trace is minimized is that one wants to achieve small HSVs, because this ensures a small error according to Theorem 3. We refer to Sect. 5 for more details on the computation of P.
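For small dimensions, the equality version of the observability equation can be solved by vectorization. The sketch below assumes that (4), taken with equality, has the generalized Lyapunov form \(A^TQ+QA+\sum _{k} N_k^TQN_k+\sum _{i,j} H_i^TQH_j k_{ij}=-C^TC\) known from the cited literature; this form, the function name `observability_gramian` and the test data are assumptions of this illustration. The computation of \(P\) from an inequality requires an optimization (LMI) solver and is not covered here.

```python
import numpy as np

def observability_gramian(A, N, H, K, C):
    """Solve A^T Q + Q A + sum_k N_k^T Q N_k + sum_{i,j} H_i^T Q H_j k_ij = -C^T C.

    The equation form is an assumption of this sketch (see the lead-in).
    Uses the column-major identity vec(X Q Y) = (Y^T kron X) vec(Q).
    """
    n = A.shape[0]
    I = np.eye(n)
    op = np.kron(I, A.T) + np.kron(A.T, I)
    for Nk in N:
        op += np.kron(Nk.T, Nk.T)
    for i, Hi in enumerate(H):
        for j, Hj in enumerate(H):
            op += K[i, j] * np.kron(Hj.T, Hi.T)
    rhs = -(C.T @ C).reshape(-1, order="F")
    Q = np.linalg.solve(op, rhs).reshape((n, n), order="F")
    return 0.5 * (Q + Q.T)  # remove round-off asymmetry

# Hypothetical small test system with weak bilinear and diffusion terms.
rng = np.random.default_rng(0)
n = 3
A = -np.diag([1.0, 2.0, 3.0])
N = [0.1 * rng.standard_normal((n, n))]
H = [0.1 * rng.standard_normal((n, n)) for _ in range(2)]
K = np.array([[1.0, 0.5], [0.5, 1.0]])
C = np.ones((1, n))

Q = observability_gramian(A, N, H, K, C)
```

The dense Kronecker system has dimension \(n^2\), so this approach is only meant for checking small examples, not for large-scale computations.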

Based on the balanced full model (1) with matrices as in (5), the ROM is obtained by eliminating the state variables \(x_2\) corresponding to the small HSVs. In SPA, this is done by setting \(\mathrm{d}x_2(t)=0\) and, furthermore, neglecting the diffusion and bilinear terms in the equation related to \(x_2\). Note that the condition \(\mathrm{d}x_2(t)=0\) is almost surely false. However, we enforce it since it leads to a ROM with remarkable properties. After setting \(\mathrm{d}x_2(t)=0\), dropping the diffusion term is no additional simplification, since by the considerations in [33, Section 2] it vanishes automatically in the resulting algebraic constraint. Setting the bilinear term to zero in this equation is needed so that the matrices of the ROM below do not depend on the control u, which would be undesirable. With this simplification, the algebraic constraint reads \(0=A_{21} x_1(t)+A_{22} x_2(t)+B_2 u(t)\), and one can solve it for \(x_2\). This leads to \(x_2(t)=-A_{22}^{-1}(A_{21} x_1(t)+B_2 u(t))\). Inserting this expression into the equation for \(x_1\) and into the output equation, the reduced system is

with matrices defined by

where \({\bar{x}}(0)=0\) and the time dependence in (7a) is omitted to shorten the notation. This straightforward ansatz is based on observations from the deterministic case (\(N_k=H_i=0\)), where \(x_2\) represents the fast variables, i.e., \(\dot{x}_2(t) \approx 0\) after a short time, see [23].
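The substitution \(x_2=-A_{22}^{-1}(A_{21} x_1+B_2 u)\) can be carried out blockwise in a few lines. The sketch below is obtained purely by inserting this expression into the \(x_1\) equation and the output equation; the function name `spa_reduced_matrices`, the dictionary keys and the random test matrices are assumptions of this illustration, and the test system is not balanced, so it only illustrates the algebra.

```python
import numpy as np

def spa_reduced_matrices(A, B, C, N, H, r):
    """Insert x2 = -A22^{-1}(A21 x1 + B2 u) into the x1 and output equations."""
    A12, A21, A22 = A[:r, r:], A[r:, :r], A[r:, r:]
    B1, B2 = B[:r], B[r:]
    C1, C2 = C[:, :r], C[:, r:]
    G_x = np.linalg.solve(A22, A21)  # A22^{-1} A21
    G_u = np.linalg.solve(A22, B2)   # A22^{-1} B2
    return {
        "A": A[:r, :r] - A12 @ G_x,  # linear drift in the reduced state
        "B": B1 - A12 @ G_u,         # linear input term
        "C": C1 - C2 @ G_x,          # output matrix
        "D": -C2 @ G_u,              # direct feedthrough of u in the output
        "N": [Nk[:r, :r] - Nk[:r, r:] @ G_x for Nk in N],  # bilinear terms
        "E": [-Nk[:r, r:] @ G_u for Nk in N],              # terms quadratic in u
        "H": [Hi[:r, :r] - Hi[:r, r:] @ G_x for Hi in H],  # state diffusion terms
        "F": [-Hi[:r, r:] @ G_u for Hi in H],              # control-driven diffusion
    }

# Hypothetical test system (not balanced; it only illustrates the algebra).
rng = np.random.default_rng(1)
n, r, m, p, v = 4, 2, 2, 1, 1
A = -np.diag([1.0, 2.0, 3.0, 4.0]) + 0.1 * rng.standard_normal((n, n))
B = rng.standard_normal((n, m))
C = rng.standard_normal((p, n))
N = [0.1 * rng.standard_normal((n, n)) for _ in range(m)]
H = [0.1 * rng.standard_normal((n, n)) for _ in range(v)]

rom = spa_reduced_matrices(A, B, C, N, H, r)
```

With \(B_2=0\) the entries `"D"`, `"E"` and `"F"` vanish and `"B"` reduces to \(B_1\), which is the structure preserving variant. For the linear deterministic part, the steady-state gain \(-CA^{-1}B\) of the full model coincides with \({\bar{D}}-{\bar{C}}{\bar{A}}^{-1}{\bar{B}}\), a standard property of SPA that follows from the Schur complement formula.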

This ansatz might, however, be false for stochastic systems, no matter how small the HSVs corresponding to \(x_2\) are. Although the motivation rests on a possibly less convincing argument, it leads to a viable MOR method for which an error bound can be proved. An averaging principle would be a mathematically well-founded alternative to this naive approach. Averaging principles for stochastic systems have, for example, been investigated in [36, 37]. A further strategy to derive a ROM in this context can be found in [9].

Moreover, notice that system (7) is not a bilinear system anymore due to the quadratic term in the control u. This is an essential difference to the ROM proposed in [18]. One can think about a structure preserving version by setting \(B_2=0\) in (7). This would lead to a generalized variant of the ROMs considered in [18, 33]. This simplified method is not studied here because the error bound in Theorem 3 could not be achieved for it. We refer to a further discussion below Theorem 3 and to Sect. 5, where (7) is compared numerically with the version obtained by choosing \(B_2=0\).

Remark 2

Notice that if \(\sigma _r\ne \sigma _{r+1}\), then (2) implies

for \(l=1, 2\) due to considerations in [6]. This implies \(\lambda \left( A_{ll}\right) \subset {\mathbb {C}}_-\) and hence guarantees the existence of \(A^{-1}_{ll}\) for \(l=1, 2\).

3 \(L^2\)-error bound for SPA

The proof of the main result (Theorem 3) is divided into two parts. We first investigate the error that we encounter by removing the smallest HSV from the system in Sect. 3.1. In this reduction step, the structure changes from the full model (1) to the ROM (7). Therefore, when removing the other HSVs from the system, another case needs to be studied in Sect. 3.2. There, an error bound is established between two neighboring ROMs, i.e., the larger ROM has exactly one HSV more than the smaller one. The results of Sects. 3.1 and 3.2 are then combined in Sect. 3.3 in order to prove the general error bound.

For simplicity, let us from now on assume that system (1) is already balanced and has a zero initial condition (\(x_0=0\)). Thus, (3) and (4) become

i.e., \(P=Q=\varSigma ={\text {diag}}(\sigma _1, \ldots , \sigma _n)>0\).

3.1 Error bound of removing the smallest HSV

We consider the balanced system (1) with partitions as in (5) and (6). As mentioned before, the reduced state equation is obtained by approximating \(x_2\) through \({\bar{x}}_2:= - A_{22}^{-1}(A_{21} {\bar{x}} + B_2u)\) in the differential equation for \(x_1\) and within the output Eq. (1b). The ROM (7) can hence be rewritten as

where we define

We want to be able to subtract the ROM (10) from (1). Therefore, we add the following zero line to (10a)

with the compensation terms \(c_0(t):=\sum _{k=1}^m \left[ {\begin{matrix}{N}_{k, 21}&N_{k, 22} \end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}(t)} \\ {{\bar{x}}_2(t)}\end{matrix}}\right] u_k(t)\) and \(c_i(t):=\left[ {\begin{matrix}{H}_{i, 21}&{H}_{i, 22}\end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}(t)} \\ {{\bar{x}}_2(t)}\end{matrix}}\right] \) for \(i=1, \ldots , v\). Subtracting (10) from (1) together with (11) yields the following error system

where we introduce the variables \(\mathbf{x }_{-} =\left[ {\begin{matrix}{x}_1-{\bar{x}} \\ x_2-{\bar{x}}_2\end{matrix}}\right] \) and \(x_-=\left[ {\begin{matrix}{x}_1-{\bar{x}} \\ x_2\end{matrix}}\right] \). Moreover, adding (1a) and (10a) combined with (11) leads to

setting \(\mathbf{x }_{+}=\left[ {\begin{matrix}{x}_1+{\bar{x}} \\ x_2+{\bar{x}}_2\end{matrix}}\right] \) and \(x_+=\left[ {\begin{matrix}{x}_1+{\bar{x}} \\ x_2\end{matrix}}\right] \).

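Before we prove an error bound based on (12a) and (13), we need to introduce the vector \(u^0\) of control components with a nonzero \(N_k\). This is given by

\[u^0=(u^0_1, \ldots , u_m^0)^T,\quad u_k^0 \equiv {\left\{ \begin{array}{ll} 0 &{} \text {if }N_k = 0,\\ u_k &{} \text {else}. \end{array}\right. } \tag{14}\]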

The proof of the error bound when only one HSV is removed can be reduced to the task of finding suitable estimates for \({\mathbb {E}}[x_-^T(t) \varSigma x_-(t)]\) and \({\mathbb {E}}[x_+^T(t) \varSigma ^{-1} x_+(t)]\). In particular, the rough idea is to apply Ito’s lemma to \({\mathbb {E}}[x_-^T(t) \varSigma x_-(t)]\) and subsequently the lemma of Gronwall. Then, a first estimate for (12b) is obtained, i.e.,

where f depends on the control u, the state x, the compensation terms \(c_0, \ldots , c_v\) and \(\varSigma _2^{-1}\) assuming \(\varSigma _2= \sigma I\). Of course, the dependence of the above estimate on the state and the compensation terms is not desired. That is why another inequality is derived by using Ito’s and Gronwall’s lemma for \({\mathbb {E}}[x_+^T(t) \varSigma ^{-1} x_+(t)]\) which yields

Combining both inequalities, the result of the next theorem is obtained. A similar idea was also used to determine an error bound for BT [30]. However, the proof for SPA requires different techniques to find the estimates sketched above.

Theorem 1

Let y be the output of the full model (1) with \(x(0)=0\), \({\bar{y}}\) be the output of the ROM (7) with \({\bar{x}}(0)=0\) and \(\varSigma _2=\sigma I\), \(\sigma >0\), in (6). Then, the following holds:

Proof

In order to improve the readability of this paper, the proof is given in Sect. 4.1. \(\square \)

We proceed with the study of an error bound between two ROMs that are neighboring.

3.2 Error bound for neighboring ROMs

In this section, we investigate the output error between two ROMs, in which the larger ROM has exactly one more HSV than the smaller one. This concept of neighboring ROMs was first introduced in [32] but in the much simpler stochastic linear setting.

The reader might wonder why a second case is considered besides the one in Sect. 3.1 since one might just start with a full model that has the same structure as the ROM (7). The reason is that it is not clear how the Gramians need to be chosen for (7). In order to investigate the error between two ROMs by SPA, a finer partition than the one in (5) is required. We partition the matrices of the balanced full system (1) as follows:

The partitioned balanced solution to (1a) and the Gramians are then of the form

We introduce the ROM of truncating \(\varSigma _3\) first. According to the procedure described in Sect. 2, the reduced system is obtained by setting \(\mathrm{d}x_3\) equal to zero, neglecting the bilinear and the diffusion term in this equation. The solution \({\bar{x}}_3\) of the resulting algebraic constraint is an approximation for \(x_3\). One can solve for this approximating variable and obtains \({\bar{x}}_3=-A_{33}^{-1}(A_{31}x_1+A_{32}x_2+B_3u)\). Inserting this result for \(x_3\) in the equations for \(x_1\), \(x_2\) and into the output Eq. (1b) leads to

where \(\left[ {\begin{matrix}{x}_1(0) \\ x_2(0)\end{matrix}}\right] =\left[ {\begin{matrix}{0} \\ 0\end{matrix}}\right] \) and

We aim to determine the error between this ROM and the reduced system of neglecting \(\varSigma _2\) and \(\varSigma _3\). This is

where \({\bar{x}}_r(0)=0\),

and we define

In order to find a bound for the error between (17b) and (18b), state variables analogous to \(\mathbf{x }_-\) and \(\mathbf{x }_+\) in Sect. 3.1 are constructed in the following and corresponding equations are derived. For simplicity, we use a similar notation again and define

Now, we find the differential equations for \(\hat{\mathbf{x }}_-\) and \(\hat{\mathbf{x }}_+\). Using (19), we find that

Applying the first line of (20), we obtain the following equation

where \({\hat{c}}_0=\sum _{k=1}^m \left[ {\begin{matrix}{N}_{k, 21}&{N}_{k, 22}&{N}_{k, 23} \end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}_r} \\ h_1\\ h_2\end{matrix}}\right] u_k\) and \({\hat{c}}_i=\left[ {\begin{matrix}{H}_{i, 21}&{H}_{i, 22}&{H}_{i, 23} \end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}_r} \\ h_1\\ h_2\end{matrix}}\right] \) for \(i=1, \ldots , v\). We add the zero line (21) to the state Eq. (18a) and subtract the resulting system from (17). Hence, we obtain

where \({\hat{x}}_- = \left[ {\begin{matrix}{x}_1 - {\bar{x}}_r \\ x_2\end{matrix}}\right] \). One can see that the output of (22) is the output error that we aim to analyze. The sum of (17a) and (18a) together with (21) leads to

where \({\hat{x}}_+ = \left[ {\begin{matrix}{x}_1 + {\bar{x}}_r \\ x_2\end{matrix}}\right] \). We now state the output error between the systems (17) and (18) for the case that the ROMs are neighboring, i.e., the larger model has exactly one HSV more than the smaller one. Similarly to Theorem 1, the proof relies on finding suitable estimates for \({\mathbb {E}}\left[ {\hat{x}}_-^T(t) {{\hat{\varSigma }}}{\hat{x}}_-(t)\right] \) and \({\mathbb {E}}\left[ {\hat{x}}_+^T(t) {{\hat{\varSigma }}}^{-1} {\hat{x}}_+(t)\right] \), where \({\hat{\varSigma }}= \left[ {\begin{matrix}{\varSigma }_1&{} \\ &{} \varSigma _2\end{matrix}}\right] \) is a submatrix of \(\varSigma \) in (16).

Theorem 2

Let \({\bar{y}}\) be the output of the ROM (17), \({\bar{y}}_r\) be the output of the ROM (18) and \(\varSigma _2=\sigma I\), \(\sigma >0\), in (16). Then, it holds that

Proof

In order to improve the readability of this paper, the proof is presented later, see Sect. 4.2. \(\square \)

3.3 Main result

In the following, the main result of this paper is formulated. It is a consequence of Theorems 1 and 2.

Theorem 3

Let y be the output of the full model (1) with \(x(0)=0\) and \({\bar{y}}\) be the output of the ROM (7) with zero initial state. Then, for all \(T>0\), it holds that

where \(\sigma _{r+1}, \sigma _{r+2}, \ldots , \sigma _n\) are the diagonal entries of \(\varSigma _2\) and \(u^0=(u^0_1, \dots , u_m^0)^T\) is the control vector with components defined by \(u_k^0 \equiv {\left\{ \begin{array}{ll} 0 &{} \text {if }N_k = 0,\\ u_k &{} \text {else}. \end{array}\right. }\)

Proof

We apply the results in Theorems 1 and 2. We remove the HSVs step by step and exploit the triangle inequality in order to bound the error between the outputs y and \({\bar{y}}\). We introduce \({\bar{y}}_\ell \) as the output of ROM (7) with state space dimension \(\ell = r, r+1, \ldots , n-1\). Notice that \({\bar{y}}_r\) coincides with \({\bar{y}}\). Moreover, we set \({\bar{y}}_n := y\). We then have

In the reduction step from y to \({\bar{y}}_{n-1}\) only the smallest HSV \(\sigma _n\) is removed from the system. Hence, by Theorem 1, we have

The ROMs of the outputs \({\bar{y}}_{\ell }\) and \({\bar{y}}_{\ell -1}\) are neighboring according to Sect. 3.2, i.e., only the HSV \(\sigma _{\ell }\) is removed in the reduction step. By Theorem 2, we obtain

for \(\ell =r+1, \ldots , n-1\). This provides the claimed result. \(\square \)
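Written out, the telescoping argument of the proof takes the following form, where \(\Vert \cdot \Vert \) is a placeholder (an assumption of this sketch) for the output norm in which the error in Theorem 3 is measured:

\[\Vert y-{\bar{y}}\Vert =\Big \Vert \sum _{\ell =r+1}^{n} \left( {\bar{y}}_{\ell }-{\bar{y}}_{\ell -1}\right) \Big \Vert \le \Vert y-{\bar{y}}_{n-1}\Vert +\sum _{\ell =r+1}^{n-1} \Vert {\bar{y}}_{\ell }-{\bar{y}}_{\ell -1}\Vert ,\]

using \({\bar{y}}_n=y\) and \({\bar{y}}_r={\bar{y}}\). The first term on the right is the one controlled by Theorem 1, and each summand in the remaining sum is a neighboring pair covered by Theorem 2.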

The result in Theorem 3 tells us that the ROM (7) yields a very good approximation if the truncated HSVs (diagonal entries of \(\varSigma _2\)) are small and the vector \(u^0\) of control components with a nonzero \(N_k\) is not too large. The exponential in the error bound can be an indicator that SPA performs badly if \(u^0\) is very large.
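For sampled controls, the vector \(u^0\) is obtained by masking the components of \(u\) whose matrix \(N_k\) vanishes. The following is a minimal sketch; the function name `bilinear_control_part`, the array layout (one row per control component, one column per time sample) and the example data are assumptions of this illustration.

```python
import numpy as np

def bilinear_control_part(u, N):
    """Return u^0: rows of u with N_k = 0 are replaced by zeros."""
    active = np.array([np.any(Nk != 0) for Nk in N])  # True where N_k is nonzero
    return np.where(active[:, None], u, 0.0)

# Hypothetical example: m = 2 controls, N_1 = 0 and N_2 = I, three time samples.
N = [np.zeros((2, 2)), np.eye(2)]
u = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])
u0 = bilinear_control_part(u, N)
```

Here the first row of `u0` is zero because \(N_1=0\), while the second row equals that of `u`. Only the components retained in `u0` enter the exponential factor discussed above.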

We conclude this section by a discussion on the result in Theorem 3. It is important to notice that the dependence of the error bound on the system matrices and the covariance matrix of M is hidden in the HSVs. Those are given by \(\sigma _\ell = \sqrt{\lambda _\ell (P Q)}\). We can see from (3) and (4) that the Gramians depend on \((A, B, C, H_i, N_k, K)\) and hence \(\sigma _\ell = \sigma _\ell (A, B, C, H_i, N_k, K)\). Consequently, changing the system matrices or the covariance matrix will change the HSVs too. Now, if K is large, the terms related to \(H_i\) in (3) and (4) become more dominant which results in larger HSVs. According to Theorem 3 a worse approximation can then be expected. We also observe that the exponential term in Theorem 3 is related to the bilinearity in the drift. Setting \(N_k=0\) for all \(k=1, \ldots , m\) the exponential becomes a one. This results in the bound that is known from the stochastic linear case [32]. Choosing \(H_i=0\) for all \(i=1, \ldots , v\) yields a bound for the deterministic bilinear case. Notice that in this case, the state variables of the full and reduced system are not random anymore such that the expected value is redundant and can hence be omitted. Finally, considering \(H_i = N_k = 0\) leads to the bound obtained for the deterministic linear case [23].
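The formula \(\sigma _\ell = \sqrt{\lambda _\ell (P Q)}\) can be checked numerically against the singular values of \(L_Q^TL_P\) used in the balancing transformation. The sketch below uses arbitrary symmetric positive definite matrices in place of actual Gramians; the function name `hankel_singular_values` and the test data are assumptions of this illustration.

```python
import numpy as np

def hankel_singular_values(P, Q):
    """HSVs as square roots of the eigenvalues of P Q, in decreasing order."""
    eig = np.linalg.eigvals(P @ Q)
    # For symmetric positive definite P and Q, the eigenvalues of P Q are real
    # and positive; tiny imaginary parts caused by round-off are discarded.
    return np.sort(np.sqrt(eig.real))[::-1]

# Hypothetical symmetric positive definite stand-ins for the Gramians.
rng = np.random.default_rng(2)
n = 4
G1 = rng.standard_normal((n, n))
G2 = rng.standard_normal((n, n))
P = G1 @ G1.T + n * np.eye(n)
Q = G2 @ G2.T + n * np.eye(n)

hsv_eig = hankel_singular_values(P, Q)
hsv_svd = np.linalg.svd(np.linalg.cholesky(Q).T @ np.linalg.cholesky(P),
                        compute_uv=False)
```

Both routes give the same values up to round-off; the route via the Cholesky factors is the one used when the balancing transformation is needed as well.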

Since the ROM (7) has a different structure than (1), it is an important question why we do not use a structure preserving generalization of SPA considered in [18, 33]. This variant of SPA is obtained by setting \(B_2=0\) in (7). Conducting the proof of the error bound for this simplified method, one would obtain an additional term in the error bound that depends on \(\varSigma _1\), a possibly large matrix. This indicates that SPA with \(B_2=0\) probably performs worse than the version stated in (7). We refer to Sect. 5 where both versions of SPA are compared numerically.

4 Proofs of Theorems 1 and 2

In this section, we present the pending proofs of Theorems 1 and 2.

4.1 Proof of Theorem 1

We first derive a suitable upper bound for \({\mathbb {E}}[x_-^T(t) \varSigma x_-(t)]\) by applying Ito’s formula. Hence, Lemma 1 (‘Appendix’) and Eq. (12a) yield

We find an estimate for the terms related to \(N_k\), that is

where \(u^0\) is defined in (14). Moreover, adding a zero, we rewrite

With (25) and (26), (24) becomes

Taking the partitions of \(x_-\) and \(\varSigma \) into account, we see that \(x_-^T\varSigma \left[ {\begin{matrix}{0} \\ c_0\end{matrix}}\right] =x_2^T \varSigma _2 c_0\). Furthermore, the partitions of \(\mathbf{x }_{-}\) and \(H_i\) yield

since \(\left[ {\begin{matrix}{H}_{i, 21}&H_{i, 22}\end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}} \\ {{\bar{x}}_2}\end{matrix}}\right] =c_i\). Using the partition of A, it holds that

because \(\left[ {\begin{matrix}{A}_{21}&A_{22}\end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}} \\ {{\bar{x}}_2}\end{matrix}}\right] =-B_2u\). We insert (9) and (12b) into inequality (27) and exploit the relations in (28) and (29). Hence,

We define the function \(\alpha _-(t):={\mathbb {E}}\int _0^t 2 x_2^T \varSigma _2 c_0 + \sum _{i, j=1}^v \left( 2 \left[ {\begin{matrix}{H}_{i, 21}&H_{i, 22}\end{matrix}}\right] x - c_i\right) ^T \varSigma _2 c_j k_{ij} \mathrm{d}s + {\mathbb {E}} \int _0^t 2 {\bar{x}}_2^T\varSigma _2 (\left[ {\begin{matrix}{A}_{21}&A_{22}\end{matrix}}\right] x + B_2u) \mathrm{d}s\) and apply Lemma 3 (‘Appendix’) implying

Since \(\varSigma \) is positive definite, we obtain an upper bound for the output error by

Defining the term \(\alpha _+(t):={\mathbb {E}}\int _0^t 2 x_2^T \varSigma _2^{-1} c_0 + \sum _{i, j=1}^v \left( 2 \left[ {\begin{matrix}{H}_{i, 21}&H_{i, 22}\end{matrix}}\right] x - c_i\right) ^T \varSigma _2^{-1} c_j k_{ij} \mathrm{d}s + {\mathbb {E}} \int _0^t 2 {\bar{x}}_2^T\varSigma _2^{-1} (\left[ {\begin{matrix}{A}_{21}&A_{22}\end{matrix}}\right] x + B_2u) \mathrm{d}s\) and exploiting the assumption that \(\varSigma _2=\sigma I\), leads to

The remaining step is to find a bound for the right side of (30) that does not depend on \(\alpha _+\) anymore. For that reason, a bound for the expression \({\mathbb {E}}[x_+^T(t) \varSigma ^{-1} x_+(t)]\) is derived next using Ito’s lemma again. From (13) and Lemma 1 (‘Appendix’), we obtain

Analogously to (25), it holds that

Additionally, we rearrange the term related to A as follows

Moreover, we have

We plug in the above results into (31) which gives us

From inequality (8) and the Schur complement condition on definiteness, it follows that

We multiply (33) with \(\left[ {\begin{matrix}{\mathbf{x }_{+}} \\ 2u\end{matrix}}\right] ^T\) from the left and with \(\left[ {\begin{matrix}{\mathbf{x }_{+}}\\ 2u\end{matrix}}\right] \) from the right. Hence,

Applying this result to (32) yields

We first of all see that \(x_+^T\varSigma ^{-1} \left[ {\begin{matrix}{0} \\ c_0\end{matrix}}\right] =x_2^T\varSigma _2^{-1}c_0\) using the partitions of \(x_+\) and \(\varSigma \). With the partition of \(H_i\), we moreover have

In addition, it holds that

Plugging the above relations into (35) leads to

We add \(2{\mathbb {E}}\int _0^t \sum _{i, j=1}^v c_i^T\varSigma _2^{-1} c_j k_{ij} \mathrm{d}s\) to the right side of (36) and preserve the inequality since this term is nonnegative due to Lemma 2 (‘Appendix’). This results in

Gronwall’s inequality in Lemma 3 (‘Appendix’) yields

We find an estimate for the following expression:

Combining (37) with (38), we obtain

Comparing this result with (30) implies

4.2 Proof of Theorem 2

We make use of Eqs. (22a) and (23) in order to prove this bound. We set \({\hat{\varSigma }}= \left[ {\begin{matrix}{\varSigma }_1&{} \\ &{} \varSigma _2\end{matrix}}\right] \) as a submatrix of \(\varSigma \) in (16). Lemma 1 (‘Appendix’) now yields

We see that the right side of (41) contains the submatrices \({\hat{A}}, {\hat{B}}, {\hat{H}}, {\hat{N}}\) and \({\hat{\varSigma }}\). In order to be able to refer to the full matrix inequality (9), we find upper bounds for certain terms in the following involving the full matrices A, B, H, N and \(\varSigma \). With the same estimate as in (25) and the control vector \(u^0\) defined in (14), we have

Adding the term \(\sum _{k=1}^m \left( \left[ {\begin{matrix}{N}_{k, 31}&{N}_{k, 32}&{N}_{k, 33} \end{matrix}}\right] \hat{\mathbf{x }}_{-}(s)\right) ^T \varSigma _3 \left[ {\begin{matrix}{N}_{k, 31}&{N}_{k, 32}&{N}_{k, 33} \end{matrix}}\right] \hat{\mathbf{x }}_{-}(s)\) to the right side of this inequality results in

Moreover, it holds that

We derive \(\left[ {\begin{matrix}{A}_{31}&{A}_{32}&A_{33}\end{matrix}}\right] \left[ {\begin{matrix}{x}_{1}\\ x_{2}\\ {\bar{x}}_{3}\end{matrix}}\right] =-B_3u\) by the definition of \({\bar{x}}_3\). Moreover, it can be seen from the second line of (20) that \(\left[ {\begin{matrix}{A}_{31}&{A}_{32}&A_{33}\end{matrix}}\right] \hat{\mathbf{x }}_{-}=0\). Hence,

It remains to find a suitable upper bound related to the expression depending on \({\hat{H}}_i\). We first of all see that

The term \(\sum _{i, j=1}^v \left( \left[ {\begin{matrix}{H}_{i, 31}&{H}_{i, 32}&{H}_{i, 33} \end{matrix}}\right] \hat{\mathbf{x }}_{-}(s)\right) ^T \varSigma _3 \left[ {\begin{matrix}{H}_{j, 31}&{H}_{j, 32}&{H}_{j, 33} \end{matrix}}\right] \hat{\mathbf{x }}_{-}(s) k_{ij}\) is nonnegative by Lemma 2 (‘Appendix’). Adding this term to the right side of the above equation yields

Applying (42), (43) and (44) to (41), results in

Using that \({\hat{c}}_i=\left[ {\begin{matrix}{H}_{i, 21}&{H}_{i, 22}&{H}_{i, 23} \end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}_r} \\ h_1\\ h_2\end{matrix}}\right] \), we have

It can be seen further that

taking the first line of (20) into account. Inserting (46) and (47) into (45) and using the fact that \(2 {\hat{x}}_-^T{\hat{\varSigma }} \left[ {\begin{matrix}{0} \\ {\hat{c}}_0\end{matrix}}\right] = 2 x_2^T \varSigma _2 {\hat{c}}_0 \) leads to

where we set \({\hat{\alpha }}_-(t):={\mathbb {E}}\int _0^t 2 x_2^T \varSigma _2 {\hat{c}}_0 + \sum _{i, j=1}^v \left( 2 \left[ {\begin{matrix}{H}_{i, 21}&H_{i, 22}&H_{i, 23}\end{matrix}}\right] \left[ {\begin{matrix}{x}_1\\ x_2 \\ {\bar{x}}_3\end{matrix}}\right] - {\hat{c}}_i\right) ^T \varSigma _2 {\hat{c}}_j k_{ij} \mathrm{d}s +{\mathbb {E}}\int _0^t 2 h_1^T\varSigma _2 (\left[ {\begin{matrix}{A}_{21}&A_{22}&A_{23}\end{matrix}}\right] \left[ {\begin{matrix}{x}_1\\ x_2 \\ {\bar{x}}_3\end{matrix}}\right] + B_2u) \mathrm{d}s\). With (9) and (22b), we obtain

Applying Lemma 3 (‘Appendix’) to this inequality yields

Since the above left side of the inequality is positive, we obtain

We exploit that \(\varSigma _2=\sigma I\). Hence, we have

where we set \({\hat{\alpha }}_+(t):={\mathbb {E}}\int _0^t 2 x_2^T \varSigma _2^{-1} {\hat{c}}_0 + \sum _{i, j=1}^v \left( 2 \left[ {\begin{matrix}{H}_{i, 21}&H_{i, 22}&H_{i, 23}\end{matrix}}\right] \left[ {\begin{matrix}{x}_1\\ x_2 \\ {\bar{x}}_3\end{matrix}}\right] - {\hat{c}}_i\right) ^T \varSigma _2^{-1} {\hat{c}}_j k_{ij} \mathrm{d}s +{\mathbb {E}}\int _0^t 2 h_1^T\varSigma _2^{-1} (\left[ {\begin{matrix}{A}_{21}&A_{22}&A_{23}\end{matrix}}\right] \left[ {\begin{matrix}{x}_1\\ x_2 \\ {\bar{x}}_3\end{matrix}}\right] + B_2u) \mathrm{d}s\). In order to find a suitable bound for the right side of (49), Ito’s lemma is applied to \({\mathbb {E}}[{\hat{x}}_+^T(t) {\hat{\varSigma }}^{-1}{\hat{x}}_+(t)]\). Due to (23) and Lemma 1 (‘Appendix’), we obtain

Analogously to (42), it holds that

Furthermore, we see that

Since \(\left[ {\begin{matrix}{A}_{31}&{A}_{32}&A_{33}\end{matrix}}\right] \left[ {\begin{matrix}{x}_{1}\\ x_{2}\\ {\bar{x}}_{3}\end{matrix}}\right] = \left[ {\begin{matrix}{A}_{31}&{A}_{32}&A_{33}\end{matrix}}\right] \left[ {\begin{matrix}{{\bar{x}}_r}\\ h_1\\ h_2\end{matrix}}\right] =-B_3u\) by the definition of \({\bar{x}}_3\) and the second line of (20), we obtain \(\left[ {\begin{matrix}{A}_{31}&{A}_{32}&A_{33}\end{matrix}}\right] \hat{\mathbf{x }}_{+}=-2B_3 u\). Thus,

Finally, we see that

applying Lemma 2 (‘Appendix’). With (51), (52) and (53), inequality (50) becomes

Similarly to (34), we obtain

This leads to

In the following, (55) is expressed in terms of \(\varSigma _2\). We obtain \({\hat{x}}_+^T{\hat{\varSigma }}^{-1} \left[ {\begin{matrix}{0} \\ {\hat{c}}_0\end{matrix}}\right] =x_2^T\varSigma _2^{-1} {\hat{c}}_0\) by exploiting the partitions of \({\hat{x}}_+\) and \({\hat{\varSigma }}\). The terms depending on \({\hat{H}}_i\) become

adding \(2\sum _{i, j=1}^v {\hat{c}}_i^T\varSigma _2^{-1} {\hat{c}}_j k_{ij}\), which is nonnegative due to Lemma 2 (‘Appendix’). Furthermore, using the first line of (20), it holds that

We insert (56) and (57) into (55) and obtain

With Lemma 3 (‘Appendix’), analogously to (39), we find

5 Numerical experiments

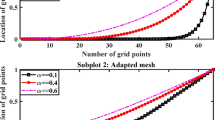

We conduct a numerical experiment to compare several MOR techniques and to assess the error bound in Theorem 3. We determine three different ROMs. The first is obtained by SPA as stated in (7); its output is denoted by \({\bar{y}}_{SPA}\). The second is a structure-preserving version of SPA that is obtained by setting \(B_2=0\) in (7), i.e., \(({\bar{B}}, {\bar{D}}, {\bar{E}}_k, {\bar{F}}_i)= (B_1, 0, 0, 0)\). This technique is denoted by SPA2 and its output by \({\bar{y}}_{SPA2}\). Notice that this method generalizes the one in [18]. The third is BT [30], another structure-preserving scheme, with output \({\bar{y}}_{BT}\).

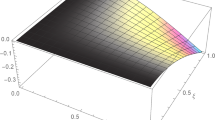

In particular, we apply the different MOR variants to a heat transfer problem that was proposed in [30]. We consider a heat equation on \([0, 1]^2\):

with Dirichlet and noisy Robin boundary conditions

where \(u\in L^2_T\) is a scalar deterministic input and w denotes a scalar standard Wiener process. We discretize the heat equation with a finite difference scheme on an equidistant \({\tilde{n}} \times {\tilde{n}}\)-mesh. This leads to an \(n={\tilde{n}}^2\)-dimensional stochastic bilinear system

where \(C= \frac{1}{n} [\begin{matrix}1&1&\dots&1\end{matrix}]\), i.e., the average temperature is considered. We refer to [5] or [12] for more details on the matrices A, B and N. There, a similar example was investigated for deterministic bilinear systems and linear stochastic systems, respectively.

According to (3) and (4), the associated Gramians are the solutions to

We multiply (60) with P from the left and the right. Applying the Schur complement condition on definiteness, (60) can then be equivalently written as the following linear matrix inequality:

see also [12, Remark III.2]. The matrix inequality (61) is now solved with the LMI toolbox YALMIP [24], minimizing \({\text {tr}}(P)\). LMI solvers are generally not suitable in a large-scale setting. Therefore, we choose \({\tilde{n}}=10\), implying \(n=100\).
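The step from (60) to (61) rests on the Schur complement criterion. As a minimal sketch, the following Python snippet checks the generic equivalence numerically: for a positive definite matrix \(R\), the inequality \(M + X R^{-1} X^T \le 0\) holds if and only if the block matrix \(\left[ {\begin{matrix}M&X\\ X^T&-R\end{matrix}}\right] \) is negative semidefinite. The matrices `M_neg`, `M_pos`, `X` and `R` are randomly generated stand-ins chosen for illustration; they are assumptions and not the matrices appearing in (60) or (61).

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 4, 2  # illustrative block sizes (assumption)


def max_eig(S):
    """Largest eigenvalue of the symmetric part of S."""
    return float(np.linalg.eigvalsh(0.5 * (S + S.T)).max())


X = rng.standard_normal((n, m))
G = rng.standard_normal((m, m))
R = G @ G.T + np.eye(m)  # positive definite by construction


def schur(M):
    """Schur complement expression M + X R^{-1} X^T."""
    return M + X @ np.linalg.solve(R, X.T)


def block(M):
    """Block matrix [[M, X], [X^T, -R]] of the equivalent LMI."""
    return np.block([[M, X], [X.T, -R]])


# Case 1: M chosen such that M + X R^{-1} X^T = -I (negative definite).
M_neg = -np.eye(n) - X @ np.linalg.solve(R, X.T)
# Case 2: M chosen such that M + X R^{-1} X^T = +I (not negative semidefinite).
M_pos = np.eye(n) - X @ np.linalg.solve(R, X.T)

for name, M in (("M_neg", M_neg), ("M_pos", M_pos)):
    print(name, "Schur term <= 0:", max_eig(schur(M)) <= 0.0,
          "| block matrix <= 0:", max_eig(block(M)) <= 0.0)
```

Both tests agree in each case, which is the property that allows a nonlinear matrix inequality to be handed to an LMI solver in linear form.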

As in [30], we set \(T=2\) and consider two different controls, \(u={\tilde{u}}\) and \(u={\hat{u}}\), where \({\tilde{u}}(t)=\cos (\pi t)\) and \({\hat{u}}(t)=\frac{1}{\sqrt{2}}\), \(t\in [0, T]\). We derive the ROMs using SPA, its modified structure-preserving version SPA2, and BT, all based on Q and P. We determine the reduced systems for \(r=3, 6, 9\). An error bound is available for SPA (Theorem 3) and for BT [30], but not for SPA2. The bound for BT and SPA is \({{\mathcal {E}}}{{\mathcal {B}}}_r:=2\left( \sum _{i=r+1}^{100}\sigma _i\right) \left\| u\right\| _{L^2_T}\exp \left( 0.5 \left\| u\right\| _{L^2_T}^2\right) \). Notice that \(u^0\equiv u\) in this example. The errors \(\sqrt{{\mathbb {E}}\left\| y-{\bar{y}}_{l}\right\| _{L^2_{T}}^2}\) for \(l=SPA, BT, SPA2\) are reported in Tables 1, 2 and 3.
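Both controls have unit norm, since \(\int _0^2 \cos ^2(\pi t)\, \mathrm{d}t = 1\) and \(\int _0^2 \frac{1}{2}\, \mathrm{d}t = 1\), so the bound reduces to \(2\sqrt{\mathrm {e}}\sum _{i=r+1}^{100}\sigma _i\) for either input. The following Python sketch evaluates the formula for \({{\mathcal {E}}}{{\mathcal {B}}}_r\) numerically. The values in `sigma` are hypothetical placeholders, since the actual \(\sigma _i\) of the example are not listed here; the helper names `l2_norm` and `error_bound` are likewise chosen for illustration only.

```python
import numpy as np


def l2_norm(u, T, num=20001):
    """Approximate ||u||_{L^2_T} = sqrt(int_0^T u(t)^2 dt) by the trapezoidal rule."""
    t = np.linspace(0.0, T, num)
    vals = u(t) ** 2
    dt = t[1] - t[0]
    integral = dt * (vals.sum() - 0.5 * (vals[0] + vals[-1]))
    return float(np.sqrt(integral))


def error_bound(sigma, r, u_norm):
    """EB_r = 2 * (sum_{i=r+1}^{n} sigma_i) * ||u|| * exp(0.5 * ||u||^2)."""
    tail = float(np.sum(sigma[r:]))  # sigma[r:] holds sigma_{r+1}, ..., sigma_n
    return 2.0 * tail * u_norm * np.exp(0.5 * u_norm ** 2)


T = 2.0
norm_tilde = l2_norm(lambda t: np.cos(np.pi * t), T)
norm_hat = l2_norm(lambda t: np.full_like(t, 1.0 / np.sqrt(2.0)), T)

# HYPOTHETICAL decaying values sigma_1 >= ... >= sigma_100, not taken from the paper.
sigma = 10.0 ** (-np.arange(1, 101) / 4.0)

bounds = {r: error_bound(sigma, r, norm_tilde) for r in (3, 6, 9)}
for r, eb in bounds.items():
    print(f"r = {r}: EB_r = {eb:.3e}")
```

Because the bound depends on the input only through \(\left\| u\right\| _{L^2_T}\), the same values are obtained for \({\tilde{u}}\) and \({\hat{u}}\).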

Tables 1 and 3 show that SPA performs clearly better than the structure-preserving variant SPA2. This indicates that it is worthwhile to allow a change of structure, since this can lead to better approximations. We can also see that the error bound for SPA is relatively tight. It is even tighter for BT, compare with Table 2. Since both methods share the same bound, this also means that BT performs worse than SPA. Consequently, SPA is the best choice for the example considered here.

6 Conclusions

In this paper, we investigated a large-scale stochastic bilinear system. In order to reduce the state space dimension, a model order reduction technique called singular perturbation approximation was extended to this setting. This method is based on Gramians proposed in [30] that characterize how much a state contributes to the system dynamics or to the output of a system. This choice of Gramians as well as the structure of the reduced system differs from that in [18]. With this modification, we provided a new \(L^2\)-error bound that can be used to identify the cases in which the reduced order model obtained by singular perturbation approximation delivers a good approximation to the original model. This error bound is new even for deterministic bilinear systems. Its quality was tested in a numerical experiment.

Notes

We assume that \(\left( {\mathcal {F}}_t\right) _{t\ge 0}\) is right-continuous and \({\mathcal {F}}_0\) contains all sets A with \({\mathbb {P}}(A)=0\).

References

Al-Baiyat SA, Bettayeb M (1993) A new model reduction scheme for k–power bilinear systems. In: Proceedings of the 32nd IEEE conference on decision and control, pp 22–27

Allen EJ, Novosel SJ, Zhang Z (1998) Finite element and difference approximation of some linear stochastic partial differential equations. Stochast Stochast Rep 64(1–2):117–142

Antoulas AC (2005) Approximation of large-scale dynamical systems. Advances in design and control 6. SIAM, Philadelphia

Becker S, Hartmann C (2019) Infinite-dimensional bilinear and stochastic balanced truncation with error bounds. Math Control Signals Syst 31(2):1–37

Benner P, Damm T (2011) Lyapunov equations, energy functionals, and model order reduction of bilinear and stochastic systems. SIAM J Control Optim 49(2):686–711

Benner P, Damm T, Rodriguez Cruz YR (2017) Dual pairs of generalized Lyapunov inequalities and balanced truncation of stochastic linear systems. IEEE Trans Autom Contr 62(2):782–791

Benner P, Goyal P (2017) Balanced truncation model order reduction for quadratic-bilinear control systems. Technical report. arXiv preprint arXiv:1705.00160

Benner P, Redmann M (2015) Model reduction for stochastic systems. Stoch PDE Anal Comp 3(3):291–338

Berglund N, Gentz B (2006) Noise-induced phenomena in slow-fast dynamical systems. A sample-paths approach. Springer, London

Bruni C, DiPillo G, Koch G (1971) On the mathematical models of bilinear systems. Automatica 2(1):11–26

Da Prato G, Zabczyk J (1992) Stochastic equations in infinite dimensions. Encyclopedia of mathematics and its applications, vol 44. Cambridge University Press, Cambridge

Damm T, Benner P (2014) Balanced truncation for stochastic linear systems with guaranteed error bound. In: Proceedings of MTNS–2014, Groningen, The Netherlands, pp 1492–1497

Emmrich E (1999) Discrete versions of Gronwall’s lemma and their application to the numerical analysis of parabolic problems. Preprint No. 637, TU Berlin

Fernando KV, Nicholson H (1982) Singular perturbational model reduction of balanced systems. IEEE Trans Automat Control 27:466–468

Gawarecki L, Mandrekar V (2011) Stochastic differential equations in infinite dimensions with applications to stochastic partial differential equations. Springer, Berlin

Gray WS, Mesko J (1998) Energy functions and algebraic gramians for bilinear systems. Proc Fourth IFAC Nonlinear Control Syst Des Symp 31(17):101–106

Grecksch W, Kloeden PE (1996) Time-discretised Galerkin approximations of parabolic stochastic PDEs. Bull Aust Math Soc 54(1):79–85

Hartmann C, Schäfer-Bung B, Thöns-Zueva A (2013) Balanced averaging of bilinear systems with applications to stochastic control. SIAM J Control Optim 51(3):2356–2378

Hausenblas E (2003) Approximation for semilinear stochastic evolution equations. Pot Anal 18(2):141–186

Jentzen A, Kloeden PE (2009) Overcoming the order barrier in the numerical approximation of stochastic partial differential equations with additive space-time noise. Proc R Soc A 2009(465):649–667

Kovács M, Larsson S, Saedpanah F (2010) Finite element approximation of the linear stochastic wave equation with additive noise. SIAM J Numer Anal 48(2):408–427

Kruse R (2014) Strong and weak approximation of semilinear stochastic evolution equations. Lecture notes in mathematics, vol 2093. Springer, Berlin

Liu Y, Anderson BDO (1989) Singular perturbation approximation of balanced systems. Int J Control 50(4):1379–1405

Löfberg J (2004) YALMIP: a toolbox for modeling and optimization in MATLAB. In: Proceedings of the CACSD conference, Taipei, Taiwan

Mohler RR (1973) Bilinear control processes. Academic Press, New York

Moore BC (1981) Principal component analysis in linear systems: controllability, observability, and model reduction. IEEE Trans Autom Control 26:17–32

Obinata G, Anderson BDO (2001) Model reduction for control system design. Communications and control engineering series. Springer, London

Peszat S, Zabczyk J (2007) Stochastic partial differential equations with Lévy noise. An evolution equation approach. Encyclopedia of mathematics and its applications, vol 113. Cambridge University Press, Cambridge

Prévôt C, Röckner M (2007) A concise course on stochastic partial differential equations. Lecture notes in mathematics, vol 1905. Springer, Berlin

Redmann M (2018) Energy estimates and model order reduction for stochastic bilinear systems. Int J Control. https://doi.org/10.1080/00207179.2018.1538568

Redmann M (2018) Type II balanced truncation for deterministic bilinear control systems. SIAM J Control Optim 56(4):2593–2612

Redmann M (2018) Type II singular perturbation approximation for linear systems with Lévy noise. SIAM J Control Optim 56(3):2120–2158

Redmann M, Benner P (2018) Singular perturbation approximation for linear systems with Lévy noise. Stochast Dyn 18(4):1850033

Rugh WJ (1981) Nonlinear system theory. The Johns Hopkins University Press, Baltimore

Scherpen JMA (1993) Balancing for nonlinear systems. Syst Control Lett 21:143–153

Thompson WF, Kuske RA, Monahan AH (2014) Stochastic averaging of dynamical systems with multiple time scales forced with \(\alpha \)-stable noise. SIAM Multisc Model Simul 13(4):1194–1223

Xu Y, Duan J, Xu W (2011) An averaging principle for stochastic dynamical systems with Lévy noise. Phys D Nonlinear Phen 240(17):1395–1401

Acknowledgements

The author would like to thank the anonymous reviewers for their helpful and constructive comments that greatly contributed to improving the final version of the paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

The original online version of this article was revised due to a retrospective Open Access order.

The author gratefully acknowledges the support from the DFG through the research unit FOR2402.

Supporting Lemmas

In this appendix, we state three important results and the corresponding references that we frequently use throughout this paper.

Lemma 1

Let \(a, b_1, \ldots , b_v\) be \({\mathbb {R}}^d\)-valued processes, where a is \(\left( {\mathcal {F}}_t\right) _{t\ge 0}\)-adapted and almost surely Lebesgue integrable and the functions \(b_i\) are integrable with respect to the mean zero square integrable Lévy process \(M=(M_1, \ldots , M_v)^T\) with covariance matrix \(K=\left( k_{ij}\right) _{i, j=1, \ldots , v}\). If the process x is given by

then, we have

Proof

We refer to [32, Lemma 5.2] for a proof of this lemma. \(\square \)

Lemma 2

Let \(A_1, \ldots , A_v\) be \(d_1\times d_2\) matrices and \(K=(k_{ij})_{i, j=1, \ldots , v}\) be a positive semidefinite matrix, then

is also positive semidefinite.

Proof

The proof can be found in [32, Proposition 5.3]. \(\square \)
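The displayed expression of Lemma 2 is not reproduced above. Assuming it is \(\sum _{i, j=1}^v A_i^T A_j k_{ij}\), which is the form in which the lemma is applied to the terms involving \(H_i\) in the proof, the statement can be checked numerically. The following Python sketch uses randomly generated matrices; the dimensions `v`, `d1`, `d2` and the random data are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
v, d1, d2 = 3, 4, 5  # illustrative sizes (assumption)

# Random d1 x d2 matrices A_1, ..., A_v.
A = [rng.standard_normal((d1, d2)) for _ in range(v)]

# Random positive semidefinite covariance-type matrix K = G G^T.
G = rng.standard_normal((v, v))
K = G @ G.T

# Assumed form of the matrix in Lemma 2: S = sum_{i,j} A_i^T A_j k_ij.
S = sum(K[i, j] * (A[i].T @ A[j]) for i in range(v) for j in range(v))

# S is symmetric; its smallest eigenvalue should be nonnegative up to rounding.
min_eig = float(np.linalg.eigvalsh(0.5 * (S + S.T)).min())
print("symmetric:", np.allclose(S, S.T), "| smallest eigenvalue >= -1e-10:", min_eig >= -1e-10)
```

The check reflects the factorization \(S = {\mathcal {A}}^T (K\otimes I_{d_1}) {\mathcal {A}}\), where \({\mathcal {A}}\) stacks \(A_1, \ldots , A_v\) vertically, so that \(S\) inherits positive semidefiniteness from \(K\).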

Lemma 3

(Gronwall lemma) Let \(T>0\), \(z, \alpha : [0, T]\rightarrow {\mathbb {R}}\) be measurable bounded functions and \(\beta : [0, T]\rightarrow {\mathbb {R}}\) be a nonnegative integrable function. If

then it holds that

for all \(t\in [0, T]\).

Proof

The result is shown as in [13, Proposition 2.1]. \(\square \)
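The displayed inequalities of Lemma 3 are not reproduced above. Assuming the lemma has the standard integral form of Gronwall's inequality, i.e., \(z(t)\le \alpha (t)+\int _0^t \beta (s) z(s)\, \mathrm{d}s\) implies \(z(t)\le \alpha (t)+\int _0^t \alpha (s)\beta (s)\exp \left( \int _s^t \beta (w)\, \mathrm{d}w\right) \mathrm{d}s\), the following Python sketch evaluates the right-hand side on a grid. The helper `gronwall_bound` and the test functions are hypothetical choices for illustration: with \(\alpha \equiv 1\) and \(\beta \equiv 0.5\), the function \(z(t)=\mathrm {e}^{0.5t}\) satisfies the hypothesis with equality, and the bound should reproduce it.

```python
import numpy as np


def gronwall_bound(alpha, beta, t_grid):
    """Evaluate alpha(t) + int_0^t alpha(s) beta(s) exp(int_s^t beta(w) dw) ds
    on t_grid with the trapezoidal rule (assumed standard Gronwall form)."""
    a = alpha(t_grid)
    b = beta(t_grid)
    dt = np.diff(t_grid)
    # Cumulative integral B(t_k) = int_0^{t_k} beta(w) dw.
    B = np.concatenate(([0.0], np.cumsum(0.5 * dt * (b[1:] + b[:-1]))))
    bound = np.empty_like(t_grid)
    for k in range(len(t_grid)):
        # Integrand in s on [0, t_k]; exp(B(t_k) - B(s)) = exp(int_s^{t_k} beta).
        f = a[: k + 1] * b[: k + 1] * np.exp(B[k] - B[: k + 1])
        integral = np.sum(0.5 * dt[:k] * (f[1:] + f[:-1]))
        bound[k] = a[k] + integral
    return bound


t = np.linspace(0.0, 2.0, 2001)
bound = gronwall_bound(lambda s: np.ones_like(s), lambda s: 0.5 * np.ones_like(s), t)
z = np.exp(0.5 * t)  # satisfies z(t) = 1 + int_0^t 0.5 z(s) ds

print("max |bound - z| =", float(np.max(np.abs(bound - z))))
```

An exponential factor of this kind is also visible in \({{\mathcal {E}}}{{\mathcal {B}}}_r\), which contains the term \(\exp \left( 0.5 \left\| u\right\| _{L^2_T}^2\right) \).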

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Redmann, M. A new type of singular perturbation approximation for stochastic bilinear systems. Math. Control Signals Syst. 32, 129–156 (2020). https://doi.org/10.1007/s00498-020-00257-9

DOI: https://doi.org/10.1007/s00498-020-00257-9