Abstract

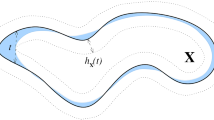

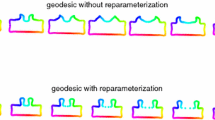

This paper describes the formulation and experimental testing of a method for estimating submanifold models of animal motion. It is assumed that the animal motion is supported on a configuration manifold, Q, and that this manifold is homeomorphic to a known smooth Riemannian manifold, S. The configuration submanifold is estimated by finding an unknown mapping, \(\gamma \), from S to Q. The overall problem is cast as a distribution-free learning problem over the manifold of measurements. This paper establishes sufficient conditions under which the rates of convergence in \(L^2_\mu (S)\) of approximations of \(\gamma \) match those known for classical distribution-free learning theory over Euclidean space. The paper concludes with a study and discussion of the performance of the proposed method using samples from recent reptile motion studies.


Abbreviations

- \(\beta \): The kernel hyperparameter
- \(\Gamma \): The set of admissible mappings from \(S \rightarrow Q\)
- \(\gamma \): A mapping function from \(S \rightarrow Q\)
- \(\gamma ^*\): The optimal mapping for \(E_\mu \)
- \(\gamma _\mu \): The regression function
- \(\gamma _{n,m}\): The optimal estimate obtained from \(E_m\)
- \(\zeta _c\): The coordinates measured in the camera frame
- \(\zeta _b\): The coordinates measured in the body frame
- \(\mu \): The joint measure on \({\mathbb {Z}}\)
- \(\mu _X\): The conditional measure on X
- \(\mu _S\): The marginal measure on S
- \(\Xi _n\): The representative points of the partition \(S_\ell \)
- \(\xi \): A center of the kernel functions in \(S\)
- \(\Pi _n^S\): The projection onto the n-dimensional space of approximants
- \(A^{r,2}\): The approximation space
- d: The dimension of X
- \({\mathbb {E}}\): The expectation operator
- \(E_\mu \): The expected risk
- \(E_m\): The empirical risk
- \({\mathcal {F}}_n\): A finite-dimensional family of functions
- \(H^S_n\): The space of piecewise constants on \(S\)
- \({\mathbb {H}}^S_n\): The reproducing kernel Hilbert space on \(S\)
- \({\mathfrak {K}}^{\mathcal {A}}\): A basis kernel function over a set \({\mathcal {A}}\)
- \(L^2_\mu \): The space of \(\mu \)-square integrable functions
- \(\ell \): The refinement depth
- m: The number of samples
- n: The dimension of the space of approximants
- \(O_b\): The origin of the body frame
- p: The number of frames per gait
- Q: The configuration submanifold
- q: An element of Q
- \(R_c^b\): The rotation matrix from the camera frame to the body frame
- S: The known smooth Riemannian manifold
- \(S_\ell \): The partition of S after \(\ell \) refinements
- \(s\): An element of S
- T: The period of one gait
- \(t_i\): A sample time
- \(t_0\): The start time of one gait
- \(W^{r,2}\): The Sobolev space
- X: The ambient space of outputs, \(X \approx {\mathbb {R}}^d\)
- x: An element of X
- \({\mathbb {Z}}\): The sample space, \(S \times X\)
- z: An element of \({\mathbb {Z}}\)

References

Bender MJ, McClelland M, Bledt G, Kurdila A, Furukawa T, Mueller R (2015) Trajectory estimation of bat flight using a multi-view camera system. In: AIAA modeling and simulation technologies conference, p 1806

Birn-Jeffery AV, Higham TE (2016) Geckos decouple fore- and hind limb kinematics in response to changes in incline. Frontiers Zool 13(1):1–13

Hudson PE, Corr SA, Wilson AM (2012) High speed galloping in the cheetah (Acinonyx jubatus) and the racing greyhound (Canis familiaris): spatio-temporal and kinetic characteristics. J Exp Biol 215(14):2425–2434

Ingersoll R, Haizmann L, Lentink D (2018) Biomechanics of hover performance in Neotropical hummingbirds versus bats. Sci Adv 4(9)

Porro LB, Collings AJ, Eberhard EA, Chadwick KP, Richards CT (2017) Inverse dynamic modelling of jumping in the red-legged running frog, Kassina maculata. J Exp Biol 220(10):1882–1893

Schubert T, Gkogkidis A, Ball T, Burgard W (2015) Automatic initialization for skeleton tracking in optical motion capture. In: 2015 IEEE international conference on robotics and automation (ICRA). IEEE, pp 734–739

Brossette S, Escande A, Kheddar A (2018) Multicontact postures computation on manifolds. IEEE Trans Robot 34(5):1252–1265

Ćesić J, Joukov V, Petrović I, Kulić D (2016) Full body human motion estimation on lie groups using 3D marker position measurements. In: IEEE-RAS international conference on humanoid robots, pp 826–833

Hatton RL, Choset H (2015) Nonconservativity and noncommutativity in locomotion. Eur Phys J Spec Top 224(17–18):3141–3174

Shammas EA, Choset H, Rizzi AA (2007) Geometric motion planning analysis for two classes of underactuated mechanical systems. Int J Robot Res 26(10):1043–1073

Bender M, Yang X, Chen H, Kurdila A, Müller R (2017) Gaussian process dynamic modeling of bat flapping flight. In: 2017 IEEE international conference on image processing (ICIP), pp 4542–4546

McInroe B, Astley HC, Gong C, Kawano SM, Schiebel PE, Rieser JM, Choset H, Blob RW, Goldman DI (2016) Tail use improves performance on soft substrates in models of early vertebrate land locomotors. Science 353(6295):154–158

Rieser JM, Gong C, Astley HC, Schiebel PE, Hatton RL, Choset H, Goldman DI (2019) Geometric phase and dimensionality reduction in locomoting living systems. arXiv preprint arXiv:1906.11374

Wang JM, Fleet DJ, Hertzmann A (2007) Gaussian process dynamical models for human motion. IEEE Trans Pattern Anal Mach Intell 30(2):283–298

Bayandor J, Bledt G, Dadashi S, Kurdila A, Murphy I, Lei Y (2013) Adaptive control for bioinspired flapping wing robots. In: 2013 American control conference. IEEE, pp 609–614

Horvat T, Melo K, Ijspeert AJ (2017) Spine controller for a sprawling posture robot. IEEE Robot Autom Lett 2(2):1195–1202

Karakasiliotis K, Thandiackal R, Melo K, Horvat T, Mahabadi NK, Tsitkov S, Cabelguen J-M, Ijspeert AJ (2016) From cineradiography to biorobots: an approach for designing robots to emulate and study animal locomotion. J R Soc Interface 13(119):20151089

Nyakatura JA, Melo K, Horvat T, Karakasiliotis K, Allen VR, Andikfar A, Andrada E, Arnold P, Lauströer J, Hutchinson JR et al (2019) Reverse-engineering the locomotion of a stem amniote. Nature 565(7739):351

Shi Q, Li C, Li K, Huang Q, Ishii H, Takanishi A, Fukuda T (2018) A modified robotic rat to study rat-like pitch and yaw movements. IEEE/ASME Trans Mech 23(5):2448–2458

Van Truong T, Le TQ, Byun D, Park HC, Kim M (2012) Flexible wing kinematics of a free-flying beetle (rhinoceros beetle Trypoxylus dichotomus). J Bionic Eng 9(2):177–184

Michael K (2012) Meet Boston Dynamics’ LS3: the latest robotic war machine

Li Y, Li B, Ruan J, Rong X (2011) Research of mammal bionic quadruped robots: a review. In: 2011 IEEE 5th international conference on robotics, automation and mechatronics (RAM). IEEE, pp 166–171

Seok S, Wang A, Chuah MY, Otten D, Lang J, Kim S (2013) Design principles for highly efficient quadrupeds and implementation on the MIT cheetah robot. In: 2013 IEEE international conference on robotics and automation. IEEE, pp 3307–3312

Semini C, Barasuol V, Goldsmith J, Frigerio M, Focchi M, Gao Y, Caldwell DG (2016) Design of the hydraulically actuated, torque-controlled quadruped robot HyQ2Max. IEEE/ASME Trans Mech 22(2):635–646

Raibert M (2008) BigDog, the rough-terrain quadruped robot. IFAC Proc Vol (IFAC-PapersOnline) 17(1 PART 1):6–9

Ugurlu B, Havoutis I, Semini C, Caldwell DG (2013) Dynamic trot-walking with the hydraulic quadruped robot-HYQ: Analytical trajectory generation and active compliance control. In: 2013 IEEE/RSJ international conference on intelligent robots and systems. IEEE, pp 6044–6051

Zhang G, Rong X, Hui C, Li Y, Li B (2016) Torso motion control and toe trajectory generation of a trotting quadruped robot based on virtual model control. Adv Robot 30(4):284–297

Collins SH, Ruina A (2005) A bipedal walking robot with efficient and human-like gait. In: Proceedings of the 2005 IEEE international conference on robotics and automation. IEEE, pp 1983–1988

Koolen T, Bertrand S, Thomas G, De Boer T, Wu T, Smith J, Englsberger J, Pratt J (2016) Design of a momentum-based control framework and application to the humanoid robot Atlas. Int J Humanoid Robot 13(01):1650007

Negrello F, Garabini M, Catalano MG, Kryczka P, Choi W, Caldwell DG, Bicchi A, Tsagarakis NG (2016) WALK-MAN humanoid lower body design optimization for enhanced physical performance. In: 2016 IEEE international conference on robotics and automation (ICRA). IEEE, pp 1817–1824

Ott C, Roa MA, Hirzinger G (2011) Posture and balance control for biped robots based on contact force optimization. In: 2011 11th IEEE-RAS international conference on humanoid robots. IEEE, pp 26–33

Radford NA, Strawser P, Hambuchen K, Mehling JS, Verdeyen WK, Stuart Donnan A, Holley J, Sanchez J, Nguyen V, Bridgwater L et al (2015) Valkyrie: NASA’s first bipedal humanoid robot. J Field Robot 32(3):397–419

Stephens BJ, Atkeson CG (2010) Dynamic balance force control for compliant humanoid robots. In: 2010 IEEE/RSJ international conference on intelligent robots and systems. IEEE, pp 1248–1255

Shabana A (2020) Dynamics of multibody systems. Cambridge University Press, Cambridge

Haug EJ (1989) Computer aided kinematics and dynamics of mechanical systems, vol 1. Allyn and Bacon, Boston

Nikravesh PE (1988) Computer-aided analysis of mechanical systems. Prentice-Hall, Inc., Hoboken

Murray RM, Sastry SS (1993) Steering nonholonomic control systems using sinusoids. Nonholonomic Motion Plan 38(5):23–51

Lynch KM, Park FC (2017) Modern robotics. Cambridge University Press, Cambridge

Bullo F, Lewis AD (2004) Geometric control of mechanical systems, vol 49. Texts in applied mathematics. Springer, New York

DeVore R, Kerkyacharian G, Picard D, Temlyakov V (2006) Approximation methods for supervised learning. Found Comput Math 6(1):3–58

DeVore R, Kerkyacharian G, Picard D, Temlyakov V (2004) Mathematical methods for supervised learning. IMI Preprints 22:1–51

Györfi L, Kohler M, Krzyzak A, Walk H (2006) A distribution-free theory of nonparametric regression. Springer, Berlin

Triebel H (1992) Theory of function spaces II. Springer, Basel

Hangelbroek T, Narcowich FJ, Ward JD (2012) Polyharmonic and related kernels on manifolds: interpolation and approximation. Found Comput Math 12(5):625–670

De Vito E, Mücke N, Rosasco L (2019) Reproducing kernel Hilbert spaces on manifolds: Sobolev and diffusion spaces. arXiv preprint arXiv:1905.10913

Saitoh S, Alpay D, Ball JA, Ohsawa T (2013) Reproducing kernels and their applications, vol 3. Springer, Berlin

Berlinet A, Thomas-Agnan C (2011) Reproducing kernel Hilbert spaces in probability and statistics. Springer, Berlin

Williams CKI, Rasmussen CE (2006) Gaussian processes for machine learning, vol 2. MIT Press, Cambridge

Wendland H (2004) Scattered data approximation, vol 17. Cambridge University Press, Cambridge

Hedrick TL (2008) Software techniques for two- and three-dimensional kinematic measurements of biological and biomimetic systems. Bioinspir Biomim 3(3):034001

Klus S, Schuster I, Muandet K (2020) Eigendecompositions of transfer operators in reproducing kernel Hilbert spaces. J Nonlinear Sci 30:283–315

Kurdila AJ, Bobade P (2018) Koopman theory and linear approximation spaces. arXiv preprint arXiv:1811.10809

Corazza S, Mündermann L, Chaudhari AM, Demattio T, Cobelli C, Andriacchi TP (2006) A markerless motion capture system to study musculoskeletal biomechanics: visual hull and simulated annealing approach. Ann Biomed Eng 34(6):1019–1029

Kreyszig E (1978) Introductory functional analysis with applications, vol 1. Wiley, New York

Adams RA, Fournier JJF (2003) Sobolev spaces. Elsevier, Amsterdam

Binev P, Cohen A, Dahmen W, DeVore R, Temlyakov V (2005) Universal algorithms for learning theory part I: piecewise constant functions. J Mach Learn Res 6:1297–1321


Appendices

A. Appendix

A.1 Lebesgue and Sobolev function spaces

The goal of this section is to provide a brief review of the Lebesgue spaces, denoted \(L^p_\mu (\Omega )\), and the Sobolev spaces, denoted \(W^{r,p}(\Omega )\), both defined over some domain \(\Omega \). To motivate these function spaces, we first introduce the space of continuous functions over the domain \(\Omega \), which we denote \(C(\Omega )\). The conventional norm \(\Vert \cdot \Vert _{C(\Omega )}\) on \(C(\Omega )\) is given by

\[ \Vert f\Vert _{C(\Omega )} := \sup _{x\in \Omega } |f(x)|. \]

With this norm, the space is complete. In a complete metric space, every Cauchy sequence, that is, every sequence whose elements get arbitrarily close to one another as the index tends to \(\infty \), converges to a limit in the space. Here the distances between elements, and hence convergence, are measured by the metric induced by the norm. The space \(C(\Omega )\) is a vector space under the following two pointwise operations:

\[ (f+g)(x) := f(x) + g(x), \qquad (\alpha f)(x) := \alpha f(x), \qquad x\in \Omega ,\ \alpha \in {\mathbb {R}}. \]

Given these two operations, \(C(\Omega )\) becomes a normed linear space. As mentioned in the paper, our expected risk is based on convergence in the \(2\)-norm, denoted \(\Vert \cdot \Vert _2\) and defined by

\[ \Vert f\Vert _2 := \left( \int _\Omega |f(x)|^2 \, d\mu (x) \right) ^{1/2}. \]

If we instead equip \(C(\Omega )\) with the \(2\)-norm, it is well known that the space is no longer complete. However, the space \(L^2_\mu (\Omega )\), which consists of the measurable functions f for which

\[ \int _\Omega |f(x)|^2 \, d\mu (x) < \infty , \]

is complete with the metric induced by the \(2\)-norm. We can generalize this notion to the so-called \(p\)-norms. For any real number \(1\le p<\infty \), the Lebesgue space \(L^p_\mu (\Omega )\) of \(\mu \)-\(p\)-integrable functions consists of the measurable functions f for which

\[ \Vert f\Vert _{L^p_\mu (\Omega )} := \left( \int _\Omega |f(x)|^p \, d\mu (x) \right) ^{1/p} < \infty . \]

For functions in \(L^p_\mu (\Omega )\), the norm gives a sense of the “size” of the function, and we can use topological notions in convergence proofs. Let \(\{A_k\}_{k=1}^n\) be disjoint measurable subsets of \(\Omega \) with \(\Omega = \cup _{k=1}^n A_k\), and let \(1_{A_k}\) denote the characteristic function of the set \(A_k\). The functions of the form

\[ f = \sum _{k=1}^n a_k 1_{A_k}, \qquad a_k\in {\mathbb {R}}, \]

form a finite-dimensional space of piecewise constants on this partition. For a finite measure \(\mu \), the union of these spaces over all finite measurable partitions of \(\Omega \) is dense in \(L^p_\mu (\Omega )\) for \(1\le p<\infty \).
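As a small numerical illustration of this density property, the sketch below takes \(\Omega = [0,1]\) with Lebesgue measure (an assumption made only for illustration) and computes the \(L^2\) error of the best piecewise-constant approximation of \(f(x) = x\) on n equal cells. On each cell the optimal constant is the cell average, and for this particular f the error is exactly \(1/(n\sqrt{12})\), so it tends to zero as the partition is refined. The function name `piecewise_constant_l2_error` is hypothetical.

```python
import math


def piecewise_constant_l2_error(f, n, quad_pts=1000):
    """L^2 error on [0, 1] (Lebesgue measure) between f and the piecewise
    constant sum_k a_k 1_{A_k} on n equal cells A_k, where a_k is the cell
    average of f (the L^2-optimal constant on that cell), approximated here
    by the mean of f over quad_pts midpoint-rule nodes in the cell."""
    h = 1.0 / n
    delta = h / quad_pts
    total = 0.0
    for k in range(n):
        nodes = [k * h + (i + 0.5) * delta for i in range(quad_pts)]
        vals = [f(x) for x in nodes]
        a_k = sum(vals) / quad_pts
        # Composite midpoint rule for the squared error on cell A_k.
        total += delta * sum((v - a_k) ** 2 for v in vals)
    return math.sqrt(total)


for n in (2, 4, 8, 16):
    err = piecewise_constant_l2_error(lambda x: x, n)
    print(n, err, 1.0 / (n * math.sqrt(12.0)))
```

Each doubling of n halves the error for this f; any function in \(L^2\) can be approximated in the same way, although the rate depends on its regularity.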

While the functions in these spaces exhibit these nice properties, we are often interested in the regularity of our functions. It is well known that the derivative of a function in \(L^p_\mu (\Omega )\) need not belong to \(L^p_\mu (\Omega )\). We would therefore like a space of functions whose derivatives still behave well. These are the Sobolev spaces \(W^{r,p}(\Omega )\), which consist of functions whose (weak) derivatives up to order r are in \(L^p_\mu (\Omega )\). There are many different ways to define Sobolev spaces over various domains. Conventionally, a norm for the Sobolev space \(W^{r,p}(\Omega )\) can be defined as

\[ \Vert f\Vert _{W^{r,p}(\Omega )} := \left( \sum _{|a|\le r} \Vert D^a f\Vert ^p_{L^p_\mu (\Omega )} \right) ^{1/p}, \]

where a is a multi-index, \(|a|\) is its order, and \(D^a\) is the corresponding (weak) partial derivative. For functions in \(W^{r,p}(\Omega )\), we retain most of the convenient properties of functions in \(L^p_\mu (\Omega )\). In fact, \(L^p_\mu (\Omega )\) can be thought of as the special case \(r = 0\) of the Sobolev space \(W^{r,p}(\Omega )\). As a final remark, many important details about these spaces are omitted here, since covering all the fundamentals would require a much longer discussion. A more detailed motivation can be found in elementary functional analysis texts such as [54], and details on Sobolev spaces specifically can be found in [55].
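As a worked example of this norm, take \(\Omega =(0,1)\), \(\mu \) the Lebesgue measure, \(r=1\), \(p=2\), and \(f(x)=\sin (\pi x)\); these choices are made only for illustration. The multi-indices with \(|a|\le 1\) in one dimension are \(a=0\) and \(a=1\), so the norm has one term for f and one for its derivative:

```latex
\begin{aligned}
\Vert f\Vert ^2_{W^{1,2}(0,1)} &= \Vert f\Vert ^2_{L^2(0,1)} + \Vert f'\Vert ^2_{L^2(0,1)}
  = \int _0^1 \sin ^2(\pi x)\,dx + \pi ^2\int _0^1 \cos ^2(\pi x)\,dx \\
&= \frac{1}{2} + \frac{\pi ^2}{2},
\qquad \text{so} \qquad
\Vert f\Vert _{W^{1,2}(0,1)} = \sqrt{\frac{1+\pi ^2}{2}} \approx 2.33 .
\end{aligned}
```

The \(r=0\) part alone gives \(\Vert f\Vert _{L^2(0,1)} = 1/\sqrt{2}\approx 0.71\); most of the Sobolev norm comes from the derivative term, which is how \(W^{r,p}\) measures regularity.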

A.2 Kernel method estimation

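This section outlines the minimization over \({\mathbb {H}}_n^S\) and solves for \(\gamma _{n,m}\) in terms of the kernel basis. For a particular output dimension j, any estimate in \({\mathbb {H}}_n^S\) can be represented as a linear combination of n radial basis functions with centers \(\xi _k\), that is, \( \gamma ^j_{n,m}(s) = \sum _{k=1}^n \alpha _k {\mathfrak {K}}^S_{\xi _k}(s)\). Given this fixed basis, Equation 9 can now be solved by determining the optimal coefficients \(\{\alpha _k^*\}_{k=1}^n\). Specifically, a minimizing \(\gamma ^j_{n,m}\) is found by computing the optimal coefficients \(\varvec{\alpha }^*\), where

\[ \varvec{\alpha }^* = \mathop {\mathrm {arg\,min}}_{\varvec{\alpha }\in {\mathbb {R}}^n} \frac{1}{m}\sum _{i=1}^m \left( q^j_i - \sum _{k=1}^n \alpha _k {\mathfrak {K}}^S_{\xi _k}(s_i)\right) ^2. \]

If we arrange the coefficients \(\alpha _k\) into an n-dimensional vector \(\varvec{\alpha } = [\alpha _1, \alpha _2, \dots , \alpha _n]^T\), collect the m measurement outputs of the \(j^{th}\) dimension into the vector \(\varvec{q}^j = [q^j_1, q^j_2, \dots , q^j_m]^T\), and denote by \(\varvec{K}\) the \(m \times n\) kernel matrix with entries

\[ \varvec{K}_{ik} := {\mathfrak {K}}^S_{\xi _k}(s_i), \qquad i=1,\ldots ,m, \quad k=1,\ldots ,n, \]

then \(\varvec{K}\) is a tall matrix: it has one row for each of the (many) samples m and one column for each of the n dimensions of the function space. With these arrays we can recast the minimization as finding the optimal vector \(\varvec{\alpha }\) in \({\mathbb {R}}^n\),

\[ \varvec{\alpha }^* = \mathop {\mathrm {arg\,min}}_{\varvec{\alpha }\in {\mathbb {R}}^n} \frac{1}{m}\Vert \varvec{q}^j - \varvec{K}\varvec{\alpha }\Vert ^2_2, \]

since \(X \approx {\mathbb {R}}^d\) is a Hilbert space, so its norm is induced by an inner product,

\[ \Vert v\Vert ^2_X = (v, v)_X \]

for any \(v \in X\). Our minimization can therefore be interpreted as a least-squares problem on an n-dimensional function space:

\[ \varvec{\alpha }^* = \mathop {\mathrm {arg\,min}}_{\varvec{\alpha }\in {\mathbb {R}}^n} \frac{1}{m}(\varvec{q}^j - \varvec{K}\varvec{\alpha })^T(\varvec{q}^j - \varvec{K}\varvec{\alpha }). \]

We can determine the optimal coefficients \(\varvec{\alpha }^*\) by setting the gradient of this quadratic with respect to \(\varvec{\alpha }\) equal to zero, which yields the normal equations \(\varvec{K}^T\varvec{K}\varvec{\alpha }^* = \varvec{K}^T\varvec{q}^j\). When \(\varvec{K}\) has full column rank, the optimal vector \(\varvec{\alpha }^*\) is, therefore,

\[ \varvec{\alpha }^* = (\varvec{K}^T\varvec{K})^{-1}\varvec{K}^T\varvec{q}^j. \]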

As mentioned before, matrix inversion can be computationally costly, and ill-conditioned matrices can lead to poor estimates. We remark that the optimal approximation from \(H^S_n\), built from piecewise constants, does not face these issues: as the proof of Theorem 1 shows, its coefficients decouple and each one is computed independently.
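The matrix formulation above can be sketched on synthetic data. The example assumes, purely for illustration, that S is the unit circle \(S^1\) embedded in \({\mathbb {R}}^2\), that the basis kernel is a Gaussian of the chordal distance with hyperparameter \(\beta \), and that the outputs are a noisy sine; all helper names are hypothetical. It assembles the tall \(m\times n\) matrix \(\varvec{K}\), solves the normal equations, and compares the result with NumPy's SVD-based `numpy.linalg.lstsq`, which avoids forming \(\varvec{K}^T\varvec{K}\) and is the safer choice when that matrix is ill-conditioned.

```python
import numpy as np


def embed_circle(theta):
    """Embed angles on S = S^1 (an illustrative assumption) into R^2."""
    return np.column_stack((np.cos(theta), np.sin(theta)))


def gaussian_kernel(s, xi, beta):
    """Hypothetical basis: K^S_xi(s) = exp(-beta * |s - xi|^2), using the
    chordal (ambient R^2) distance; returns the m x n matrix K_ik = K^S_{xi_k}(s_i)."""
    diff = embed_circle(s)[:, None, :] - embed_circle(xi)[None, :, :]
    return np.exp(-beta * (diff ** 2).sum(axis=-1))


def fit_normal_equations(K, q):
    """alpha* = (K^T K)^{-1} K^T q, via np.linalg.solve instead of an explicit inverse."""
    return np.linalg.solve(K.T @ K, K.T @ q)


def fit_lstsq(K, q):
    """Same least-squares problem solved by NumPy's SVD-based routine."""
    alpha, *_ = np.linalg.lstsq(K, q, rcond=None)
    return alpha


rng = np.random.default_rng(0)
m, n, beta = 400, 12, 4.0
s = rng.uniform(0.0, 2.0 * np.pi, size=m)              # inputs s_i in S
q = np.sin(s) + 0.05 * rng.standard_normal(m)          # synthetic outputs q_i^j
xi = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)  # kernel centers xi_k

K = gaussian_kernel(s, xi, beta)  # tall m x n kernel matrix
alpha_ne = fit_normal_equations(K, q)
alpha_ls = fit_lstsq(K, q)
print("cond(K^T K) =", np.linalg.cond(K.T @ K))
print("max |K alpha_ne - K alpha_ls| =", np.abs(K @ alpha_ne - K @ alpha_ls).max())
```

Decreasing \(\beta \) widens the kernels, makes the columns of \(\varvec{K}\) nearly collinear, and drives up the condition number of \(\varvec{K}^T\varvec{K}\); in that regime the normal-equation solution typically loses accuracy before the SVD-based one does.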

A.3 Proof of Theorem 1

Proof

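When we define \(\gamma ^j_{n,m}:=\sum _{k=1}^{n(\ell )}\alpha _k 1_{S_{\ell ,k}}(\cdot )\), where \(\alpha _k\) are the coefficients of the piecewise constants \(1_{S_{\ell ,k}}(\cdot )\), the empirical risk \(E^j_m(\gamma ^j_{n,m})\) has the explicit representation

\[ E^j_m(\gamma ^j_{n,m}) = \frac{1}{m}\sum _{i=1}^m \left( q^j_i - \sum _{k=1}^{n(\ell )}\alpha _k 1_{S_{\ell ,k}}(s_i)\right) ^2. \]

Since the sets \(S_{\ell ,k}\) are disjoint and cover S, each sample \(s_i\) lies in exactly one of them, so we can also write this sum as

\[ E^j_m(\gamma ^j_{n,m}) = \frac{1}{m}\sum _{i=1}^m \sum _{k=1}^{n(\ell )} \left( q^j_i - \alpha _k\right) ^2 1_{S_{\ell ,k}}(s_i), \]

and this summation can be reordered as

\[ E^j_m(\gamma ^j_{n,m}) = \sum _{k=1}^{n(\ell )} \underbrace{\frac{1}{m}\sum _{i=1}^m \left( q^j_i - \alpha _k\right) ^2 1_{S_{\ell ,k}}(s_i)}_{=:E^j_{m,n}(\alpha _k)}, \]

with each \(E_{m,n}^j(\alpha _k)\) depending on the single variable \(\alpha _k\). Setting the partial derivative \(D_{\alpha _k}(E^j_{m})=0\), we see that, for every cell \(S_{\ell ,k}\) that contains at least one sample, the optimal coefficient \(\alpha ^*_k\) is the average of the outputs in that cell,

\[ \alpha ^*_k = \frac{\sum _{i=1}^m q^j_i 1_{S_{\ell ,k}}(s_i)}{\sum _{i=1}^m 1_{S_{\ell ,k}}(s_i)}, \]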

which establishes the form of solution given in the claim. \(\square \)
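The closed-form solution above amounts to averaging the outputs that fall in each cell. The sketch below assumes, for illustration only, that S is the circle \(S^1\) parametrized by an angle, that \(S_\ell \) is a uniform partition into \(n(\ell ) = 2^\ell \) arcs, and that the outputs are noisy samples of \(\sin s\); the function names are hypothetical. Empty cells receive the coefficient 0, which is a convention, since any value minimizes the risk there.

```python
import math
import random


def cell_index(theta, level):
    """Index k of the arc S_{l,k} containing the angle theta, for a uniform
    partition of the circle into n(l) = 2**level arcs (illustrative assumption)."""
    n = 2 ** level
    k = int((theta % (2.0 * math.pi)) / (2.0 * math.pi) * n)
    return min(k, n - 1)  # guard against floating-point round-up at 2*pi


def fit_piecewise_constant(samples, level):
    """Minimize the empirical risk over piecewise constants on the partition:
    alpha_k is the mean of the outputs q_i whose inputs s_i lie in S_{l,k}.
    Empty cells get 0 by convention (any value minimizes the risk there)."""
    n = 2 ** level
    sums, counts = [0.0] * n, [0] * n
    for s, q in samples:
        k = cell_index(s, level)
        sums[k] += q
        counts[k] += 1
    return [sums[k] / counts[k] if counts[k] else 0.0 for k in range(n)]


def empirical_risk(alpha, samples, level):
    """(1/m) * sum_i (q_i - gamma(s_i))^2 for gamma = sum_k alpha_k 1_{S_{l,k}}."""
    return sum((q - alpha[cell_index(s, level)]) ** 2 for s, q in samples) / len(samples)


random.seed(1)
s_vals = [random.uniform(0.0, 2.0 * math.pi) for _ in range(500)]
samples = [(s, math.sin(s) + random.gauss(0.0, 0.1)) for s in s_vals]
level = 3
alpha = fit_piecewise_constant(samples, level)
print([round(a, 3) for a in alpha])
```

Perturbing the coefficient of any nonempty cell increases the empirical risk, which reflects the decoupling used in the proof: no linear system has to be assembled or inverted.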

A.4 Proof of Theorem 2

We now turn to the error bound stated in Theorem 2.

Proof

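From the triangle inequality,

\[ \Vert \gamma ^j_\mu - \gamma ^j_{n,m}\Vert _{L^2_\mu (S)} \le \Vert \gamma ^j_\mu - \Pi ^S_n\gamma ^j_\mu \Vert _{L^2_\mu (S)} + \Vert \Pi ^S_n\gamma ^j_\mu - \gamma ^j_{n,m}\Vert _{L^2_\mu (S)}, \]

we can bound the first term above by \(n^{-r}\) from the definition of the linear approximation space \(A^{r,2}(L^2_\mu (S))\). The bound in the theorem is proven if we can show that there is a constant \(C_2\) such that \(\Vert \Pi _n^S \gamma _\mu ^j - \gamma ^j_{n,m}\Vert _{L^2_\mu (S)}\le C_2 n(\ell )\log (n(\ell ))/m\). We establish this bound by extending to functions on the manifold S the proof in [56], which is given for functions defined on \({\mathbb {R}}^p\) for some \(p\ge 1\). The expression above for \(\gamma ^j_{n,m}\) can be written in the form \(\gamma ^j_{n,m}=\sum _{k=1}^{n(\ell )}\alpha ^j_{\ell ,k}1_{S_{\ell ,k}}\), and that for \(\Pi ^S_n\gamma ^j_\mu \) can be written as \(\Pi ^S_n\gamma ^j_{\mu }=\sum _{k=1}^{n(\ell )}{\hat{\alpha }}^j_{\ell ,k}1_{S_{\ell ,k}}\). Since the characteristic functions of the disjoint sets \(S_{\ell ,k}\) are orthogonal in \(L^2_\mu (S)\), we can write the error in terms of these expansions as

\[ \Vert \Pi ^S_n\gamma ^j_\mu - \gamma ^j_{n,m}\Vert ^2_{L^2_\mu (S)} = \sum _{k=1}^{n(\ell )}\left( \alpha ^j_{\ell ,k}-{\hat{\alpha }}^j_{\ell ,k}\right) ^2\mu _S(S_{\ell ,k}). \]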

Let \(\epsilon >0\) be an arbitrary but fixed number. We define the set of indices \({\mathcal {I}}(\epsilon )\) of the subsets \(S_{\ell ,k}\) that have, in a sense, small measure,

\[ {\mathcal {I}}(\epsilon ) := \left\{ k\in \{1,\ldots ,n(\ell )\} \ \Big| \ \mu _S(S_{\ell ,k}) \le \frac{\epsilon ^2}{8{\bar{X}}^2 n(\ell )}\right\} , \]

where \({\bar{X}} = \sup _{s\in S}\Vert \gamma (s)\Vert _X\). We define the complement \(\tilde{{\mathcal {I}}} (\epsilon ):=\{ k\in \{1,\ldots ,n(\ell )\} \ | \ k\not \in {\mathcal {I}}(\epsilon )\}\) and the associated sums \( S_{{\mathcal {I}}}:=\sum _{k\in {\mathcal {I}}}(\alpha _{\ell ,k}^j-{\hat{\alpha }}_{\ell ,k}^j)^2\mu _S(S_{\ell ,k}) \) and \( S_{\tilde{{\mathcal {I}}}}:=\sum _{k\in \tilde{{\mathcal {I}}}}(\alpha _{\ell ,k}^j-{\hat{\alpha }}_{\ell ,k}^j)^2\mu _S(S_{\ell ,k}) \). The bound in Equation 2 follows if we can demonstrate a concentration of measure inequality of the form

\[ \text {Prob}\left\{ z\in {\mathbb {Z}}^m \ \Big| \ \Vert \Pi ^S_n\gamma ^j_\mu - \gamma ^j_{n,m}\Vert _{L^2_\mu (S)} > \epsilon \right\} \le b\, e^{-cm\epsilon ^2/n(\ell )} \]

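for some constants b, c. See [40, 56] for a discussion of such concentration inequalities. That such a concentration inequality implies the bound in expectation in Equation 2 is proven in [56] on page 1311 for functions over Euclidean space. The argument proceeds in exactly the same way for the problem at hand by integrating the distribution function defined by Equation 15 over the manifold S. To establish the concentration inequality, we define two events,

\[ E_{{\mathcal {I}}+\tilde{{\mathcal {I}}}}(\epsilon ) := \left\{ z\in {\mathbb {Z}}^m \ | \ S_{{\mathcal {I}}}+S_{\tilde{{\mathcal {I}}}} > \epsilon ^2\right\} , \qquad E_{\tilde{{\mathcal {I}}}}(\epsilon ) := \left\{ z\in {\mathbb {Z}}^m \ | \ S_{\tilde{{\mathcal {I}}}} > \epsilon ^2/2\right\} . \]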

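We can compute directly from the definitions of the coefficients \(\alpha ^j_{\ell ,k},{\hat{\alpha }}^j_{\ell ,k}\), and using the compactness of \(\gamma (S)\subset X\), that \(S_{{\mathcal {I}}}\le \epsilon ^2/2\) for any \(\epsilon >0\). Since we always have

\[ S_{{\mathcal {I}}}+S_{\tilde{{\mathcal {I}}}} \le \frac{\epsilon ^2}{2} + S_{\tilde{{\mathcal {I}}}}, \]

we know that \(E_{{\mathcal {I}}+\tilde{{\mathcal {I}}}}(\epsilon )\subseteq E_{\tilde{{\mathcal {I}}}}(\epsilon )\) for any \(\epsilon >0\). If \(S_{\tilde{{\mathcal {I}}}}>\epsilon ^2/2\), then, since \(S_{\tilde{{\mathcal {I}}}}\) has at most \(n(\ell )\) terms, there is at least one \(\tilde{k}\in \tilde{{\mathcal {I}}}\) such that

\[ \left( \alpha ^j_{\ell ,\tilde{k}}-{\hat{\alpha }}^j_{\ell ,\tilde{k}}\right) ^2\mu _S(S_{\ell ,\tilde{k}}) > \frac{\epsilon ^2}{2n(\ell )}. \]

Defining, for each \(i\in \{1,\ldots , n(\ell )\}\), the term \(S_i:=(\alpha ^j_{\ell ,i}-{\hat{\alpha }}^j_{\ell ,i})^2\mu _S(S_{\ell ,i})\) and the event \( E_i(\epsilon ):=\{z\in {\mathbb {Z}}^m \ | \ S_i> \epsilon ^2/(2n(\ell ))\} \), we conclude

\[ E_{{\mathcal {I}}+\tilde{{\mathcal {I}}}}(\epsilon ) \subseteq E_{\tilde{{\mathcal {I}}}}(\epsilon ) \subseteq \bigcup _{i\in \tilde{{\mathcal {I}}}} E_i(\epsilon ). \]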

By the monotonicity and subadditivity of measures, we conclude that \(\text {Prob}\left( E_{{\mathcal {I}}+\tilde{{\mathcal {I}}}}(\epsilon ) \right) \le \sum _{i\in \tilde{{\mathcal {I}}}} \text {Prob}(E_i(\epsilon ))\). But we can show, again by a simple modification of the arguments on page 1310 of [56], that \(\text {Prob}(E_i(\epsilon ))\lesssim e^{-cm\epsilon ^2/n(\ell )}\). The analysis proceeds as in that reference, using Bernstein’s inequality for random variables defined over the probability space \((S,\Sigma _S, \mu _S)\) instead of over Euclidean space. \(\square \)
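The error expansion used in this proof can be checked numerically. The Monte Carlo sketch below assumes, only for illustration, that \(\mu _S\) is the uniform measure on the circle \(S^1\), that \(\gamma ^j_\mu (s) = \sin s\), that the outputs carry Gaussian noise, and that the partition consists of n equal arcs, so \(\mu _S(S_{\ell ,k}) = 1/n\). It evaluates \(\sum _k(\alpha ^j_{\ell ,k}-{\hat{\alpha }}^j_{\ell ,k})^2\mu _S(S_{\ell ,k})\), averaged over repeated draws of m samples, and shows it shrinking as m grows with n fixed; it does not estimate the constants b and c. All names are hypothetical.

```python
import math
import random


def projection_coeffs(n):
    """hat{alpha}_k: average of sin(s) over the arc [k h, (k+1) h), h = 2 pi / n,
    under the uniform measure, i.e. (cos(k h) - cos((k+1) h)) / h."""
    h = 2.0 * math.pi / n
    return [(math.cos(k * h) - math.cos((k + 1) * h)) / h for k in range(n)]


def empirical_coeffs(m, n, noise, rng):
    """alpha_k: average of the m noisy outputs sin(s_i) + noise whose inputs
    fall in arc k (0 for empty arcs)."""
    h = 2.0 * math.pi / n
    sums, counts = [0.0] * n, [0] * n
    for _ in range(m):
        s = rng.uniform(0.0, 2.0 * math.pi)
        k = min(int(s / h), n - 1)
        sums[k] += math.sin(s) + rng.gauss(0.0, noise)
        counts[k] += 1
    return [sums[k] / counts[k] if counts[k] else 0.0 for k in range(n)]


def squared_error(alpha, alpha_hat):
    """sum_k (alpha_k - hat{alpha}_k)^2 mu_S(S_k) with mu_S(S_k) = 1/n."""
    n = len(alpha)
    return sum((a - b) ** 2 for a, b in zip(alpha, alpha_hat)) / n


def mean_squared_error(m, n, trials=50, noise=0.1, seed=0):
    """Monte Carlo average of the squared error over `trials` sample sets."""
    rng = random.Random(seed)
    alpha_hat = projection_coeffs(n)
    total = sum(squared_error(empirical_coeffs(m, n, noise, rng), alpha_hat)
                for _ in range(trials))
    return total / trials


n = 16
errors = {m: mean_squared_error(m, n) for m in (100, 1000, 10000)}
for m, err in errors.items():
    print(f"m={m:6d}  mean squared error={err:.2e}  m*err/n={m * err / n:.4f}")
```

For large m the printed ratio m·err/n should level off, which is the \(n(\ell )/m\) behavior that the concentration argument quantifies.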


About this article

Cite this article

Powell, N., Kurdila, A.J. Distribution-free learning theory for approximating submanifolds from reptile motion capture data. Comput Mech 68, 337–356 (2021). https://doi.org/10.1007/s00466-021-02034-0
