Abstract

We consider a random graph \(\mathcal{G}(n,p)\) whose vertex set \(V,\) of cardinality \(n,\) has been randomly embedded in the unit square and whose edges, which occur independently with probability \(p,\) are given weight equal to the geometric distance between their end vertices. Then each pair \(\{u,v\}\) of vertices has a distance in the weighted graph, and a Euclidean distance. The stretch factor of the embedded graph is defined as the maximum ratio of these two distances, over all \(\{u,v\}\subseteq V.\) We give upper and lower bounds on the stretch factor (holding asymptotically almost surely), and show that for \(p\) not too close to 0 or 1, these bounds are the best possible in a certain sense. Our results imply that the stretch factor is bounded with probability tending to 1 if and only if \(n(1-p)\) tends to 0, answering a question of O’Rourke.


1 Introduction

Let \(G\) be a graph embedded in the plane. For every two points \(u\) and \(v,\) let \(d(u,v)\) denote their Euclidean distance. Make \(G\) weighted by putting weight \(d(u,v)\) on every edge \(uv.\) For two vertices \(u\) and \(v,\) let \(d_G(u,v)\) denote their shortest-path distance on (weighted) \(G.\) The stretch factor of \(G\) is defined as

\[ \max_{u,v} \frac{d_G(u,v)}{d(u,v)}, \]

where the maximum is taken over all pairs of distinct vertices \(u,v.\) If \(G\) is disconnected, then its stretch factor is undefined.
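To make the definition concrete, here is a minimal Python sketch (our own illustration, not from the article) that computes the stretch factor of a straight-line embedded graph by running Dijkstra's algorithm from every vertex. The function name `stretch_factor` and the input format (a list of points plus a list of index pairs) are assumptions; the code assumes the points are pairwise distinct and returns `None` for a disconnected graph, matching the convention above.

```python
import heapq
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def stretch_factor(points: Sequence[Point],
                   edges: Sequence[Tuple[int, int]]) -> Optional[float]:
    """Max of d_G(u, v) / d(u, v) over all pairs of distinct vertices.

    Each edge uv is weighted by the Euclidean distance d(u, v).
    Returns None if G is disconnected (stretch factor undefined).
    Assumes the points are pairwise distinct.
    """
    n = len(points)
    adj: List[List[Tuple[int, float]]] = [[] for _ in range(n)]
    for u, v in edges:
        w = math.dist(points[u], points[v])
        adj[u].append((v, w))
        adj[v].append((u, w))

    best = 1.0  # d_G >= d by the triangle inequality, so every ratio is >= 1
    for s in range(n):
        # Dijkstra from s on the weighted graph.
        dist = [math.inf] * n
        dist[s] = 0.0
        heap = [(0.0, s)]
        while heap:
            d, u = heapq.heappop(heap)
            if d > dist[u]:
                continue  # stale heap entry
            for v, w in adj[u]:
                if d + w < dist[v]:
                    dist[v] = d + w
                    heapq.heappush(heap, (dist[v], v))
        for t in range(s + 1, n):
            if math.isinf(dist[t]):
                return None  # disconnected
            best = max(best, dist[t] / math.dist(points[s], points[t]))
    return best
```

For the four corners of the unit square joined in a 4-cycle, the only nonadjacent pairs are the diagonals, whose ratio is \(2/\sqrt{2} = \sqrt{2}\); that is the value the function returns.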

The stretch factor (also known as the spanning ratio or the dilation) is a well-studied parameter in discrete geometry; see, for instance, the book [6] or the recent survey [2]. An important problem in this context is the following. Given \(n\) points in the plane, find a set of \(O(n)\) pairs of them such that, when you create a geometric graph by adding the segments joining the points in each pair, this geometric graph has bounded stretch factor. A possible approach is to choose a random set of pairs. Suppose that we randomly choose \(M\) distinct pairs from the set of all \(\binom{n}{2}\) pairs of points, and add the corresponding edges. One can then ask how large \(M\) should be to guarantee that the stretch factor is bounded with probability tending to 1. In this article, we show that if the initial points are chosen uniformly at random from the unit square, then we need almost all edges to guarantee a bounded stretch factor, and hence, this method is inefficient.

The setting is as follows. Select \(n\) points uniformly at random from the unit square, and then form a geometric graph \(G\) on these points by joining each pair independently with probability \(p,\) where \(p\) is in general a function of \(n.\) This is not a ‘random geometric graph’ in the sense of Penrose [7], because points are joined without regard to their geometric distance. However, one can call this a randomly embedded random graph, since one gets the same thing by starting from an Erdős-Rényi random graph with parameters \(n,p\) and embedding each of its vertices independently into a uniformly random point in the unit square. The stretch factor of \(G\) is a random variable, and we denote it by \(\mathcal{F}(n,p).\) We study the asymptotic behaviour of \(\mathcal{F}(n,p)\) when \(n\) is large, and give probabilistic lower and upper bounds for it. In the following, asymptotically almost surely means with probability \(1-o(1),\) where the asymptotics are with respect to \(n.\)
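The model is straightforward to sample. The following Python sketch (our own illustration; the function name and the use of Python's `random` module are assumptions) places \(n\) points uniformly in the unit square and then flips an independent \(p\)-coin for every pair without looking at the positions, so the edge set is exactly that of an Erdős-Rényi graph \(G(n,p)\) whose vertices sit at random points.

```python
import random
from typing import List, Tuple


def randomly_embedded_random_graph(
        n: int, p: float, seed: int = 0
) -> Tuple[List[Tuple[float, float]], List[Tuple[int, int]]]:
    """Sample n uniform points in [0, 1)^2 and join each pair independently
    with probability p, ignoring the positions when choosing edges."""
    rng = random.Random(seed)
    points = [(rng.random(), rng.random()) for _ in range(n)]
    edges = [(u, v)
             for u in range(n)
             for v in range(u + 1, n)
             if rng.random() < p]
    return points, edges
```

Passing the output to any all-pairs shortest-path routine and taking the largest ratio of graph distance to Euclidean distance yields one sample of \(\mathcal{F}(n,p).\)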

In the open problem session of CCCG 2009 [3], O’Rourke asked the following question: for what range of \(p\) is \(\mathcal{F }(n,p)\) bounded asymptotically almost surely? As a conclusion of our bounds, we answer this question as follows. Let \(\lambda > 1\) be any fixed constant, and note that \(\omega (1)\) denotes a function that tends to infinity as \(n\) grows. If \(n(1-p) = \omega (1),\) then asymptotically almost surely \(\mathcal{F }(n,p) > \lambda .\) If \(n(1-p) = \Theta (1),\) then \(\mathcal{F }(n,p) > \lambda \) with probability \(\Omega (1).\) Finally, if \(n(1-p) = o(1),\) then asymptotically almost surely \(\mathcal{F }(n,p) < \lambda .\)

Our main lower bound is the following theorem.

Theorem 1

Let \(w(n) = \omega (1).\) Then asymptotically almost surely

Let \(\lambda \) be fixed. This theorem implies that if \(n(1-p) = \omega (1),\) then asymptotically almost surely \(\mathcal{F }(n,p) > \lambda .\) This strengthens the result of the first author [5], who proved the same thing for \(p < 1 - \Omega (1).\)

Let \(\mathrm{CON}\) denote the event ‘\(G\) is connected’. Recall that if the graph is disconnected, then its stretch factor is undefined. For any \(p<1,\) this happens with a positive probability, and hence, \(\mathbf{E } [ \mathcal{F }(n,p)]\) is undefined. It is then natural to bound \(\mathbf{E } [ \mathcal{F }(n,p) | \mathrm{CON}]\) instead.

Our main upper bound is the following theorem.

Theorem 2

Let \(p^2n \ge 33 \log n\) and let \(w(n)=\omega (1).\) Then, asymptotically almost surely we have

If \(p^2n \ge 113 \log n,\) then

When \(n(1-p) = o(1),\) this theorem implies that for any fixed \(\varepsilon >0,\) we have \(\mathbf{E}\left[\mathcal{F}(n,p) \mid \mathrm{CON}\right] \le 1+\varepsilon ,\) and asymptotically almost surely \(\mathcal{F}(n,p) \le 1 + \varepsilon .\)

The more interesting case is when \(n (1-p) = \Theta (1).\) In this regime Theorem 2 states that \(\mathbf{E }\left[{ \mathcal{F }(n,p)|\,\mathrm{CON}}\right] = O(1).\) So, one may wonder if there is a constant \(\lambda \) such that asymptotically almost surely \(\mathcal{F }(n,p) < \lambda .\) However, Lemma 8 (which is the main lemma in the proof of Theorem 1) implies that this is not the case: for any fixed \(\lambda ,\) with probability \(\Omega (1)\) we have \(\mathrm{CON}\) and \(\mathcal{F }(n,p) > \lambda .\) In other words, the random variable \(\mathcal{F }(n,p)\) is not concentrated. In this case, one might expect that the distribution of \(\mathcal{F }(n,p)\) tends to some nontrivial limit if \(n(1-p)\) is constant.

Lemma 8 actually implies that for a wide range of \(p,\) the first conclusion of Theorem 2 is tight, in the sense that \(w(n)\) cannot be replaced with a constant. Namely, the following is true.

Theorem 3

Assume that \(p=\Omega (1)\) and \(n(1-p)=\Omega (1).\) There is no absolute constant \(C\) for which asymptotically almost surely

There is a nontrivial gap between our lower and upper bounds when \(p=o(1).\) It remains open to determine which of the two bounds is closer to the correct answer in this regime.

The following notation will be used in the rest of the article. For a point \(Q\) and nonnegative real \(R,\) \(C(Q, R)\) denotes the intersection of the disc with centre \(Q\) and radius \(R\) and the unit square, and \(F\) simply denotes \(\mathcal{F }(n,p).\) We often identify each vertex with the point it has been embedded into. All logarithms are in natural base.

2 The Lower Bound

In this section, we prove Theorems 1 and 3. First, we need an easy geometric result.

Proposition 4

Let \(Q\) be a point in the unit square. If \(0 \le R \le 1/2,\) then \(C(Q,R)\) has area at least \(\pi R ^2 / 4.\) If \(0 \le R \le \sqrt{2},\) then \(C(Q,R)\) has area at least \(\pi R ^2 / 32.\)

Proof

By symmetry, we may assume that \(Q\) lies in the upper left quarter of the unit square. If \(0 \le R \le 1/2,\) then the bottom right quarter of the disc with centre \(Q\) and radius \(R\) lies completely inside the unit square, and hence, the intersection area is at least \(\pi R^2 / 4 \ge \pi R^2 / 32.\) If \(1/2 < R \le \sqrt{2},\) then \(C(Q,R)\) contains \(C(Q,1/2),\) so its area is at least

\[ \frac{\pi (1/2)^2}{4} = \frac{\pi}{16} \ge \frac{\pi R^2}{32}, \]

since \(R^2 \le 2.\)\(\square \)
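Proposition 4 can be sanity-checked numerically. The sketch below (an illustration we add, not part of the proof; the function name `area_C` and the grid size are our own choices) approximates the area of \(C(Q,R)\) by the midpoint rule on a uniform grid, so estimates carry a discretization error of roughly the grid spacing times the arc length. A corner of the square is the worst case for the first bound, where the intersection is exactly a quarter disc, so the estimate there can sit marginally on either side of \(\pi R^2/4.\)

```python
import math


def area_C(qx: float, qy: float, r: float, grid: int = 200) -> float:
    """Midpoint-rule estimate of the area of C(Q, R): the disc of radius r
    centred at Q = (qx, qy), intersected with the unit square."""
    h = 1.0 / grid
    inside = 0
    for i in range(grid):
        x = (i + 0.5) * h
        for j in range(grid):
            y = (j + 0.5) * h
            if (x - qx) ** 2 + (y - qy) ** 2 <= r * r:
                inside += 1
    return inside * h * h


# Compare with the bounds of Proposition 4 at the corner Q = (0, 0).
for r in (0.1, 0.25, 0.5, 1.0, math.sqrt(2)):
    bound = math.pi * r * r / (4 if r <= 0.5 else 32)
    print(f"R = {r:.3f}: area ~ {area_C(0.0, 0.0, r):.4f}, bound {bound:.4f}")
```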

Let \(c\) be such that \(1/ 51 < c < 1/ 16\pi \) and \(cn\) is an even integer. Notice that here, as in the rest of the article, we always mean \(1/(ab)\) when we write \(1/ab.\)

Lemma 5

Choose \(cn\) points independently and uniformly at random from the unit square. Build a graph \(H\) on these vertices, by joining two vertices if their distance is at most \(2 / \sqrt{n}.\) With probability at least \(1 - O(1/n),\) \(H\) has at least \(cn / 2\) isolated vertices.

Proof

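Let \(X\) be the number of edges of \(H.\) Then we have \(X = \sum _{i<j} X_{i,j},\) where \(X_{i,j}\) is the indicator variable for the distance between vertices \(i\) and \(j\) being at most \(2 / \sqrt{n}.\) Note that if vertex \(i\) has been embedded in point \(p_i,\) then \(X_{i,j} = 1\) if and only if vertex \(j\) is embedded in \(C(p_i, 2/\sqrt{n}).\) So by Proposition 4 (which applies since \(2/\sqrt{n} \le 1/2\) for \(n \ge 16\)) we have

\[ \frac{\pi}{n} \le \mathbf{E}[X_{i,j}] \le \frac{4\pi}{n}. \]

Let \(q = \mathbf E [X_{i,j}],\) \(q_2 = 4 \pi / n,\) and \(M = \binom{cn}{2}.\) Thus,

\[ \frac{\pi M}{n} \le \mathbf{E}[X] = Mq \le M q_2 \le \frac{(cn)^2}{2}\cdot\frac{4\pi}{n} = 2\pi c^2 n. \]

We claim that \(\mathbf{Var}[X] = O(n).\) Note that if \(\{i,j\}\) and \(\{k,l\}\) are two disjoint sets of vertices, then \(X_{i,j}\) and \(X_{k,l}\) are independent, so

\[ \mathbf{E}[X_{i,j}X_{k,l}] = q^2, \]

and the number of such pairs of pairs equals \(\binom{cn}{2}\binom{cn-2}{2} \le M^2.\) Otherwise, let \(j = l.\) Then for \(X_{i,j}=X_{k,j}=1\) to happen, both vertices \(i\) and \(k\) should be embedded at distance at most \(2 / \sqrt{n}\) from where vertex \(j\) has been embedded, the probability of which is not more than \(q_2 ^ 2.\) Hence in this case

\[ \mathbf{E}[X_{i,j}X_{k,j}] \le q_2^2, \]

and the number of such pairs of pairs is not more than \((cn)^3.\) Consequently,

\[ \mathbf{E}[X^2] = \mathbf{E}[X] + \sum_{\{i,j\} \ne \{k,l\}} \mathbf{E}[X_{i,j}X_{k,l}] \le \mathbf{E}[X] + M^2 q^2 + (cn)^3 q_2^2, \]

and so

\[ \mathbf{Var}[X] = \mathbf{E}[X^2] - \mathbf{E}[X]^2 \le \mathbf{E}[X] + (cn)^3 q_2^2 \le 2\pi c^2 n + 16\pi^2 c^3 n = O(n). \]

By Chebyshev’s inequality, since \(\mathbf{E}[X] \ge \pi M/n = \Theta(n),\)

\[ \mathbf{Pr}\left[X \ge 2\mathbf{E}[X]\right] \le \mathbf{Pr}\left[|X - \mathbf{E}[X]| \ge \mathbf{E}[X]\right] \le \frac{\mathbf{Var}[X]}{\mathbf{E}[X]^2} = O\left(\frac{1}{n}\right). \]

Thus, with probability \(1 - O(1/n),\) \(H\) has at most \(2 \mathbf{E}[X] \le 4 c^2 \pi n\) edges. If this is the case then, since each edge covers at most two vertices, it has at least

\[ cn - 2\cdot 4c^2\pi n = cn(1 - 8\pi c) \ge \frac{cn}{2} \qquad (\text{as } c < 1/16\pi) \]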

isolated vertices, and this completes the proof.\(\square \)
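A quick Monte Carlo sketch of Lemma 5 (our own illustration; the values \(n = 10000,\) \(c = 0.0197\) and the seed are arbitrary choices, with \(c\) picked inside the interval \((1/51, 1/16\pi)\)). It builds the auxiliary graph \(H\) by brute force over all pairs, which is adequate at this scale, and counts isolated vertices; the lemma predicts at least \(cn/2\) of them with probability \(1 - O(1/n).\)

```python
import math
import random
from typing import Tuple


def lemma5_isolated(n: int, c: float, seed: int = 1) -> Tuple[int, int, int]:
    """Embed round(c*n) uniform points, join pairs at distance <= 2/sqrt(n),
    and return (number of points, number of edges, number of isolated vertices)."""
    rng = random.Random(seed)
    m = round(c * n)
    pts = [(rng.random(), rng.random()) for _ in range(m)]
    r = 2 / math.sqrt(n)
    degree = [0] * m
    n_edges = 0
    for i in range(m):
        for j in range(i + 1, m):
            if math.dist(pts[i], pts[j]) <= r:
                degree[i] += 1
                degree[j] += 1
                n_edges += 1
    isolated = sum(1 for d in degree if d == 0)
    return m, n_edges, isolated


m, n_edges, isolated = lemma5_isolated(10_000, 0.0197)
print(m, n_edges, isolated)  # Lemma 5 predicts isolated >= m / 2
```

The count of isolated vertices is always at least \(m - 2\cdot(\text{number of edges}),\) which is the counting step used at the end of the proof.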

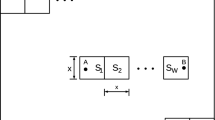

Now, back to the main problem. Assume that the \(n\) vertices are embedded one by one, and the edges are exposed at the end. Consider the moment when exactly \(cn\) vertices have been embedded. Build an auxiliary graph on these vertices, by joining two vertices if their distance is at most \(2 / \sqrt{n}.\) By Lemma 5, with probability \(1-O(1/n),\) this graph has at least \(cn/2\) isolated vertices. We condition on the embedding of the first \(cn\) vertices such that this event holds. Let \(A\) be a set of \(cn/2\) isolated vertices in this graph. The vertices in \(A\) are called the primary vertices, the vertices that are one of the first \(cn\) vertices but not in \(A\) are called far vertices, and the vertices that have not been embedded yet are called the secondary vertices.

Let \(m = cn/2\) be the number of primary vertices, and \(n^{\prime } = n(1-c)\) be the number of secondary vertices. A primary disc is a set of the form \(C(v, 1/\sqrt{n}),\) where \(v\in A\); the vertex \(v\) is called the centre of the primary disc. So, we have \(m\) primary discs, say \(\mathcal{R }_1, \mathcal{R }_2, \dots , \mathcal{R }_m.\) Let \(\mathcal{W }\) be the set of points of the unit square that are not contained in any primary disc. Notice that by the definition of \(A,\) the primary discs are disjoint, and no far vertex is contained in any primary disc.

Consider the following process for embedding the secondary vertices and exposing all of the edges of \(G\):

(1) Note that \(\left\{ \mathcal{W },\mathcal R _1,\mathcal R _2,\dots ,\mathcal R _m \right\} \) is a partition of the unit square. In the first phase, to each secondary vertex, independently, we randomly assign an element of the partition, with probability proportional to the area of that element. Thus, for each primary disc, it is known how many secondary vertices it contains, but their exact positions are not known.

(2) In the second phase, for each secondary vertex, we choose a uniformly random point in the corresponding element, and place the vertex at that point.

(3) In the third phase, for every pair of vertices, we add an edge independently with probability \(p.\)

Clearly, this process generates randomly embedded random graphs with the same distribution as before; however, it makes the analysis easier.
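The three phases can be mirrored in code. The Python sketch below is a hypothetical illustration, not the authors' construction: it omits the far vertices for brevity, takes the primary centres as given (assumed to be more than twice the radius apart, so the discs are disjoint), and realizes "probability proportional to area" in phase (1) by labelling a uniform point of the square with the element containing it. Phase (2) then draws a fresh uniform point of that element by rejection sampling. The names `element_index` and `three_phase` are our own.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def element_index(pt: Point, centres: Sequence[Point], radius: float) -> int:
    """Return k >= 1 if pt lies in primary disc R_k, and 0 if it lies in W."""
    for k, (cx, cy) in enumerate(centres, start=1):
        if (pt[0] - cx) ** 2 + (pt[1] - cy) ** 2 <= radius ** 2:
            return k
    return 0


def three_phase(centres: Sequence[Point], radius: float, n_secondary: int,
                p: float, seed: int = 0):
    rng = random.Random(seed)
    # Phase (1): each secondary vertex gets an element of the partition with
    # probability proportional to its area (the element hit by a uniform point).
    labels = [element_index((rng.random(), rng.random()), centres, radius)
              for _ in range(n_secondary)]
    # Phase (2): place each vertex uniformly inside its element by rejection.
    positions: List[Point] = []
    for k in labels:
        while True:
            pt = (rng.random(), rng.random())
            if element_index(pt, centres, radius) == k:
                positions.append(pt)
                break
    # Phase (3): every pair of vertices (centres first) is an edge w.p. p.
    vertices = list(centres) + positions
    edges = [(u, v) for u in range(len(vertices))
             for v in range(u + 1, len(vertices)) if rng.random() < p]
    return labels, vertices, edges
```

Because a uniform point of the square, conditioned on the element it falls in, is uniform on that element, mixing over the phase (1) labels gives back independent uniform positions, which is the equivalence the text relies on.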

Lemma 6

With probability \(1-\exp \left( -\Omega (n) \right),\) after the first phase, there exist at least \(e^{-8} m\) primary discs containing exactly two vertices: one primary (the centre) and one secondary.

To prove this lemma, we will use the following large deviation inequality, which is Corollary 2.27 in [4].

Proposition 7

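([4]) Let \(Z_1,Z_2,\dots ,Z_{n}\) be a sequence of independent random variables, and suppose that the function \(f\) satisfies

\[ |f(x_1,x_2,\dots,x_n) - f(y_1,y_2,\dots,y_n)| \le c_0 \]

whenever the vectors \((x_1,x_2,\dots ,x_{n})\) and \((y_1,y_2,\dots ,y_{n})\) differ only in one of the coordinates. Then, for every \(t \ge 0,\) the random variable \(X = f(Z_1,Z_2,\dots,Z_n)\) satisfies

\[ \mathbf{Pr}\left[X \ge \mathbf{E}[X] + t\right] \le \exp\left(-\frac{2t^2}{n c_0^2}\right) \quad\text{and}\quad \mathbf{Pr}\left[X \le \mathbf{E}[X] - t\right] \le \exp\left(-\frac{2t^2}{n c_0^2}\right). \]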

Proof of Lemma 6.

Recall that we have \(n^{\prime }\) secondary vertices, and to each of them, independently, we randomly assign an element of the partition \(\left\{ \mathcal W ,\mathcal R _1,\mathcal R _2,\dots ,\mathcal R _m \right\} ,\) with probability proportional to the area of that element. By Proposition 4, the area of each primary disc is between \(\pi / 4n\) and \(\pi / n\); so for every \(1\le i \le m,\) the probability that \(\mathcal{R }_i\) contains exactly one secondary vertex is at least

\[ n'\cdot\frac{\pi}{4n}\left(1-\frac{\pi}{n}\right)^{n'-1} \ge \frac{n}{2}\cdot\frac{\pi}{4n}\cdot e^{-2\pi} = \frac{\pi e^{-2\pi}}{8}, \]

as \(n^{\prime } \ge n/2\) and \((1-\pi/n)^{n'-1} \ge (1-\pi/n)^{n} \ge e^{-2\pi}\) for \(n \ge 7\) (using \(1-x \ge e^{-2x}\) for \(0 \le x \le 1/2\)). Let \(p_1 = \pi \exp (-2 \pi ) / 8.\)

Let \(X\) be the number of primary discs that contain exactly one secondary vertex. Since every primary disc contains exactly one primary vertex (its centre), to prove the lemma we need to show that we have \(X \ge e^{-8}m\) with probability \(1-\exp \left( -\Omega (n) \right).\) The calculation above shows that \(\mathbf{E }\left[{X}\right] \ge m p_1.\) Since \(p_1 > 2 e^{-8},\) we have \(\mathbf{E}[X] - e^{-8}m > e^{-8}m,\) so showing that

\[ \mathbf{Pr}\left[X \le \mathbf{E}[X] - e^{-8}m\right] = \exp(-\Omega(n)) \]

completes the proof of the lemma.

To every secondary vertex \(v,\) we assign a variable \(Z_v,\) which equals \(k\) if \(v\) is assigned to \(\mathcal{R }_k\) in the first phase, and equals \(0\) if \(v\) is assigned to \(\mathcal{W }.\) Since the assignment in the first phase is done independently, the random variables \(\left\{ Z_v : v\mathrm{\ secondary }\right\} \) are independent. Moreover, changing the value of \(Z_v\) for a single vertex will change \(X\) by at most 2. Hence, by Proposition 7 applied to the \(n'\) variables \(Z_v\) with \(t = e^{-8}m,\) we have

\[ \mathbf{Pr}\left[X \le \mathbf{E}[X] - e^{-8}m\right] \le \exp\left(-\frac{2(e^{-8}m)^2}{4n'}\right) = \exp\left(-\frac{e^{-16}c^2n^2}{8n'}\right) = \exp(-\Omega(n)). \]

Therefore, with probability \(1-\exp \left( -\Omega (n) \right),\) there exist at least \(e^{-8} m\) primary discs containing exactly two vertices.\(\square \)
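The two numerical facts used in this proof, \(p_1 > 2e^{-8}\) and the lower bound on the probability that a fixed primary disc receives exactly one secondary vertex, are easy to check in floating point. The sketch below is our own check; the helper name `exactly_one_lower_bound` and the sample values of \(n\) and \(c\) are arbitrary.

```python
import math

# p_1 as defined in the proof of Lemma 6.
p1 = math.pi * math.exp(-2 * math.pi) / 8
print(f"p_1 = {p1:.4e}, 2e^-8 = {2 * math.exp(-8):.4e}")


def exactly_one_lower_bound(n: int, c: float) -> float:
    """n' * (pi / 4n) * (1 - pi / n)^(n' - 1) with n' = n(1 - c) secondary vertices."""
    n_sec = n * (1 - c)
    return n_sec * (math.pi / (4 * n)) * (1 - math.pi / n) ** (n_sec - 1)


for n in (100, 10_000, 1_000_000):
    print(n, exactly_one_lower_bound(n, 0.0197) >= p1)
```

The bound is far above \(p_1\) in practice; the proof only needs some positive constant exceeding \(2e^{-8}.\)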

A primary disc \(\mathcal{R }\) containing exactly two vertices \(u\) and \(v\) is called nice if the distance between \(u\) and \(v\) is less than \(1 / \lambda \sqrt{n},\) and \(u\) and \(v\) are not adjacent in \(G.\) We claim that the existence of a nice primary disc \(\mathcal{R }\) implies that the stretch factor of \(G\) is larger than \(\lambda .\) To see this, assume without loss of generality that \(u\) is the centre of \(\mathcal{R }.\) The (weighted) distance between \(u\) and \(v\) in \(G\) is at least \(1 / \sqrt{n}\): since \(u\) and \(v\) are nonadjacent and \(\mathcal{R }\) contains no other vertex, any \((u,v)\)-path in \(G\) must leave \(\mathcal{R }\) at the very first step, and every vertex outside \(\mathcal{R }\) is at distance at least \(1/\sqrt{n}\) from \(u.\) However, the Euclidean distance between \(u\) and \(v\) is less than \(1 / \lambda \sqrt{n},\) and we have

\[ \frac{d_G(u,v)}{d(u,v)} > \frac{1/\sqrt{n}}{1/\lambda\sqrt{n}} = \lambda. \]

Theorem 1 follows immediately from the following lemma.

Lemma 8

For any positive \(\lambda \) we have

Proof

Consider a primary disc \(\mathcal{R }\) such that after the first phase, it has been determined that \(\mathcal{R }\) contains exactly two vertices, \(u\) and \(v,\) where \(u\) is the centre of \(\mathcal{R }.\) Then for \(\mathcal{R }\) to be nice, in the second phase \(v\) should be placed in \(C(u, 1 / \lambda \sqrt{n}),\) and in the third phase \(u\) and \(v\) should become nonadjacent in \(G.\) For \(\lambda \ge 1,\) the probability of the former is at least

\[ \frac{\operatorname{area}\big(C(u, 1/\lambda\sqrt{n})\big)}{\operatorname{area}\big(C(u, 1/\sqrt{n})\big)} \ge \lambda^{-2}, \]

even if the disc of radius \(1/\sqrt{n}\) centred at \(u\) is not wholly contained in the unit square, because the homothety with centre \(u\) and ratio \(1/\lambda\) maps \(C(u,1/\sqrt{n})\) into \(C(u,1/\lambda\sqrt{n})\) (the unit square is convex and contains \(u\)) and scales areas by \(\lambda^{-2}.\) The probability of the latter is \(1-p.\) These two events are independent, so the probability that \(\mathcal{R }\) is not nice is at most \(1-(1-p) \lambda ^{-2}.\)
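A Monte Carlo sketch of the two probabilities in this paragraph, in the idealized case where the primary disc lies entirely inside the unit square, so that the first probability equals \(\lambda^{-2}\) exactly. We rescale lengths so that the disc radius \(1/\sqrt{n}\) becomes 1; the function name `nice_probability`, the trial count and the seeds are our own choices.

```python
import math
import random


def nice_probability(lam: float, p: float, trials: int = 100_000,
                     seed: int = 2) -> float:
    """Monte Carlo estimate of Pr[a two-vertex primary disc is nice],
    assuming the disc lies wholly inside the unit square.

    Lengths are rescaled so the disc radius 1/sqrt(n) becomes 1:
    v is uniform in the unit disc around u = (0, 0), and the disc is nice
    if |uv| < 1/lam and the edge uv is absent (probability 1 - p).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        while True:  # rejection sampling of a uniform point in the unit disc
            x, y = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
            if x * x + y * y <= 1.0:
                break
        close = math.hypot(x, y) < 1.0 / lam
        non_adjacent = rng.random() >= p
        if close and non_adjacent:
            hits += 1
    return hits / trials


print(nice_probability(2.0, 0.5), (1 - 0.5) / 2.0 ** 2)
```

With \(\lambda = 2\) and \(p = 1/2\) the estimate should be close to \((1-p)\lambda^{-2} = 1/8.\)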

By Lemma 6, once the first phase finishes, with probability \(1 - \exp (-\Omega (n))\) there exists a set \(\mathcal{B }\) of at least \(e^{-8}m\) primary discs such that each primary disc in \(\mathcal{B }\) contains exactly two vertices. We condition on this event in the following. The crucial observation is that the events happening during the second and third phases inside each primary disc in \(\mathcal{B }\) are independent of the others. In particular, the events

\[ \{\mathcal{R} \text{ is nice}\}, \qquad \mathcal{R} \in \mathcal{B}, \]

are mutually independent; hence, the probability that none of the primary discs in \(\mathcal{B }\) becomes nice during the second and third phases is at most

\[ \left(1-(1-p)\lambda^{-2}\right)^{e^{-8}m} \le \exp\left(-\frac{e^{-8}m(1-p)}{\lambda^{2}}\right), \]
so that

Theorem 3 follows from Lemma 8 by putting \(\lambda = C^{\prime } \sqrt{n(1-p)}\) for a suitable constant \(C^{\prime },\) noting that \(p = \Omega (1)\) and \(n(1-p) = \Omega (1).\)

3 The Upper Bound

In this section, we prove Theorem 2. We will use the following version of the Chernoff bound. This is Theorem 2.1 in [4].

Proposition 9

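([4]) Let \(X = X_1 + \dots + X_m,\) where the \(X_i\) are independent identically distributed indicator random variables. Then for any \( \varepsilon \ge 0,\)

\[ \mathbf{Pr}\left[X \le (1-\varepsilon)\,\mathbf{E}[X]\right] \le \exp\left(-\frac{\varepsilon^2\,\mathbf{E}[X]}{2}\right). \]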

Lemma 10

For any positive \(\lambda ,\)

Proof

Say a pair \((u,v)\) of vertices is bad if \(d_G(u,v) > (2\lambda +1) d(u,v).\) Let \(u\) and \(v\) be arbitrary vertices. First, we show that with probability at least \(1 - \exp (-p^2n / 16),\) \(u\) and \(v\) have at least \(p^2 n / 4\) common neighbours. The expected number of common neighbours of \(u\) and \(v\) is \(p^2 (n-2) > p^2 n /2,\) and since the edges appear independently, by Proposition 9 (with \(\varepsilon = 1/2\)), the probability that \(u\) and \(v\) have fewer than \(p^2 n / 4\) common neighbours is less than \(\exp (-p^2 n / 16).\) In the following, we condition on the event that \(u\) and \(v\) have at least \(p^2 n /4\) common neighbours.
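The common-neighbour bound can be compared with the exact binomial tail: each of the other \(n-2\) vertices is a common neighbour of \(u\) and \(v\) independently with probability \(p^2,\) so the count is Binomial\((n-2, p^2).\) The sketch below (our own check, standard library only; the function name and the \((n,p)\) values are arbitrary) computes the probability of having fewer than \(p^2n/4\) common neighbours exactly and compares it with \(\exp(-p^2n/16).\)

```python
import math


def fewer_common_neighbours(n: int, p: float) -> float:
    """Exact Pr[Bin(n - 2, p^2) < p^2 n / 4]."""
    q = p * p
    k_max = math.ceil(q * n / 4) - 1  # largest integer k with k < p^2 n / 4
    return sum(math.comb(n - 2, k) * q ** k * (1 - q) ** (n - 2 - k)
               for k in range(k_max + 1))


for n, p in [(20, 0.5), (200, 0.5), (400, 0.25), (1000, 0.25)]:
    print(n, p, fewer_common_neighbours(n, p), math.exp(-p * p * n / 16))
```

The values of \(p\) are chosen so that \(p^2 n/4\) is computed without rounding error, which keeps `k_max` exact.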

Now, consider the random embedding of the graph. For any \(t\ge 0,\) if \(u\) and \(v\) are adjacent, or if \(d(u,v) \ge t\) and \(u\) and \(v\) have a common neighbour \(w\) with \(d(u,w) \le \lambda t,\) then the pair is not bad: in the second case

\[ d_G(u,v) \le d(u,w) + d(w,v) \le 2d(u,w) + d(u,v) \le 2\lambda t + d(u,v) \le (2\lambda+1)\,d(u,v). \]

To give an upper bound for the probability that the pair is bad, we bound the probability that \(u\) and \(v\) are nonadjacent, \(i h \le d(u,v) \le (i+1) h,\) and they have no common neighbour \(w\) with \(d(u,w) \le \lambda ih,\) and sum over \(i.\)

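Let us condition on the embedding of vertex \(u,\) denote by \(a(u,s)\) the area of the set of points in the unit square at distance at most \(s\) from \(u,\) and let \(q=1-p.\) Then, since \(u\) and \(v\) have at least \(p^2n/4\) common neighbours and these common neighbours are embedded independently, for any \(h>0\)

\[ \mathbf{Pr}[(u,v) \text{ is bad}] \le \sum_{i \ge 0} q\,\big(a(u,(i+1)h) - a(u,ih)\big)\big(1 - a(u,\lambda i h)\big)^{p^2n/4}. \]

Note that

\[ a(u,(i+1)h) - a(u,ih) \le \pi\big((i+1)^2 - i^2\big)h^2 = \pi(2i+1)h^2, \]

and also for \(ih > \sqrt{2} / \lambda \) the summand is zero, because then \(a(u,\lambda ih) = 1.\) Hence, letting \(t = ih\) and taking the limit \(h \to 0,\)

\[ \mathbf{Pr}[(u,v) \text{ is bad}] \le \int_0^{\sqrt{2}/\lambda} 2\pi q t \big(1 - a(u,\lambda t)\big)^{p^2n/4}\,dt. \]

By Proposition 4, \(a(u,\lambda t) \ge \pi (\lambda t)^2/32\) for \(0 \le t \le \sqrt{2}/\lambda.\) Hence, using \(1-x \le e^{-x},\)

\[ \mathbf{Pr}[(u,v) \text{ is bad}] \le \int_0^{\infty} 2\pi q t \exp\left(-\frac{\pi p^2 n \lambda^2 t^2}{128}\right) dt = \frac{128\,q}{p^2 n \lambda^2}. \]

Removing the conditioning on the number of common neighbours adds at most \(\exp(-p^2n/16)\) to this bound. So by the union bound

\[ \mathbf{Pr}[F > 2\lambda + 1] \le \binom{n}{2}\left(e^{-p^2n/16} + \frac{128(1-p)}{p^2 n \lambda^2}\right) \le n^2 e^{-p^2 n/16} + \frac{64\,n(1-p)}{p^2\lambda^2}. \]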
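The key integral in this proof is Gaussian-type, with closed form \(\int_0^\infty 2\pi q t\, e^{-\alpha t^2}\,dt = \pi q/\alpha.\) Assuming the exponent takes the form \(\alpha = \pi p^2 n \lambda^2/128\) (our reading of the computation, obtained from Proposition 4, the inequality \(1-x \le e^{-x}\), and at least \(p^2n/4\) common neighbours), this equals \(128q/(p^2n\lambda^2).\) The sketch below checks that closed form against a midpoint-rule quadrature; the function names and parameter values are our own.

```python
import math


def integral_numeric(q: float, p: float, n: int, lam: float,
                     steps: int = 100_000) -> float:
    """Midpoint rule for int_0^inf 2*pi*q*t*exp(-alpha*t^2) dt with
    alpha = pi * p^2 * n * lam^2 / 128, truncated where the integrand is negligible."""
    alpha = math.pi * p * p * n * lam * lam / 128
    upper = 10 / math.sqrt(alpha)  # beyond this the integrand is below exp(-100)
    h = upper / steps
    return h * sum(2 * math.pi * q * t * math.exp(-alpha * t * t)
                   for t in ((i + 0.5) * h for i in range(steps)))


def integral_closed_form(q: float, p: float, n: int, lam: float) -> float:
    """The value pi * q / alpha = 128 q / (p^2 n lam^2)."""
    return 128 * q / (p * p * n * lam * lam)


print(integral_numeric(0.1, 0.5, 1000, 3.0), integral_closed_form(0.1, 0.5, 1000, 3.0))
```

Multiplying a per-pair bound of this form by the at most \(n^2/2\) pairs turns \(128q/(p^2n\lambda^2)\) into \(64nq/(p^2\lambda^2).\)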

We are now ready to prove Theorem 2.

Proof of Theorem 2

We need to show that if \(p^2n \ge 33 \log n\) and \(w(n)=\omega (1),\) then asymptotically almost surely we have

and that if \(p^2n \ge 113 \log n,\) then

For the first part, let

By Lemma 10,

Thus, asymptotically almost surely

For the second part, let

Since \(F\) is nonnegative, we have

Clearly,

Assuming the graph is connected, for any pair \(\{u,v\}\) of vertices there is a path having at most \(n-1\) edges joining them, so \(d_{G}(u,v) < n \sqrt{2}.\) Hence, if \(F > s,\) then there exists a pair \(\{u,v\}\) of nonadjacent vertices with \(d(u,v) < n\sqrt{2} / s.\) Let \(u\) be a fixed vertex. Let \(A_u\) be the event that there exists a vertex, not adjacent to \(u,\) at distance less than \(n\sqrt{2} / s\) from \(u.\) By the union bound, \(\mathbf{Pr}[A_u] < 2 \pi n^3 (1-p) / s^2.\) The probability that there exists a vertex \(u\) for which \(A_u\) happens is by the union bound less than \(2 \pi n^4 (1-p) / s^2.\) Therefore,

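We will now bound the second term on the right-hand side of (1). Notice that for any fixed embedding of the vertices, the event ‘\(F > \lambda \)’ is a decreasing property (with respect to the edges in the graph), and the event ‘\(G\) is connected’ is an increasing one. Hence, by the correlation inequalities (see, e.g., Theorem 6.3.3 in [1]), and since the probability of connectivity does not depend on the embedding, we have

\[ \mathbf{Pr}[F > \lambda \wedge \mathrm{CON}] \le \mathbf{Pr}[F > \lambda]\,\mathbf{Pr}[\mathrm{CON}], \quad\text{that is,}\quad \mathbf{Pr}[F > \lambda \mid \mathrm{CON}] \le \mathbf{Pr}[F > \lambda]. \]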

Let \(\lambda = (s-1) / 2.\) Then by Lemma 10

Therefore, since \(p^2n > 113 \log n,\)

Consequently,

References

[1] Alon, N., Spencer, J.H.: The Probabilistic Method, 3rd edn. Wiley-Interscience Series in Discrete Mathematics and Optimization. Wiley, Hoboken (2008)

[2] Bose, P., Smid, M.: On plane geometric spanners: a survey and open problems. Submitted (2009)

[3] Demaine, E.D., O’Rourke, J.: Open problems from CCCG 2009. In: Proceedings of the 22nd Canadian Conference on Computational Geometry (CCCG 2010), pp. 83–86 (2010). http://cccg.ca/proceedings/2010/paper24.pdf

[4] Janson, S., Łuczak, T., Ruciński, A.: Random Graphs. Wiley-Interscience, New York (2000)

[5] Mehrabian, A.: A randomly embedded random graph is not a spanner. In: Proceedings of the 23rd Canadian Conference on Computational Geometry (CCCG 2011), pp. 373–374 (2011). http://www.cccg.ca/proceedings/2011/papers/paper2.pdf

[6] Narasimhan, G., Smid, M.: Geometric Spanner Networks. Cambridge University Press, Cambridge (2007)

[7] Penrose, M.: Random Geometric Graphs. Oxford University Press, Oxford (2003)

Acknowledgments

Nick Wormald is supported by the Canada Research Chairs Program and NSERC.


Cite this article

Mehrabian, A., Wormald, N. On the Stretch Factor of Randomly Embedded Random Graphs. Discrete Comput Geom 49, 647–658 (2013). https://doi.org/10.1007/s00454-012-9482-9
