Abstract

Error-correcting codes are efficient methods for handling noisy communication channels in the context of technological networks. However, such elaborate methods differ a lot from the unsophisticated way biological entities are supposed to communicate. Yet, it has been recently shown by Feinerman et al. (PODC 2014) that complex coordination tasks such as rumor spreading and majority consensus can plausibly be achieved in biological systems subject to noisy communication channels, where every message transferred through a channel remains intact with small probability \(\frac{1}{2}+\epsilon \), without using coding techniques. This result is a considerable step towards a better understanding of the way biological entities may cooperate. It has nevertheless been established only in the case of 2-valued opinions: rumor spreading aims at broadcasting a single-bit opinion to all nodes, and majority consensus aims at leading all nodes to adopt the single-bit opinion that was initially present in the system with (relative) majority. In this paper, we extend this previous work to k-valued opinions, for any constant \(k\ge 2\). Our extension requires to address a series of important issues, some conceptual, others technical. We had to entirely revisit the notion of noise, for handling channels carrying k-valued messages. In fact, we precisely characterize the type of noise patterns for which plurality consensus is solvable. Also, a key result employed in the bivalued case by Feinerman et al. is an estimate of the probability of observing the most frequent opinion from observing the mode of a small sample. We generalize this result to the multivalued case by providing a new analytical proof for the bivalued case that is amenable to be extended, by induction, and that is of independent interest.

Similar content being viewed by others

1 Introduction

1.1 Context and objective

To guarantee reliable communication over a network in the presence of noise is the main goal of Network Information Theory [23]. Thanks to the achievements of this theory, the impact of noise can often be drastically reduced to almost zero by employing error-correcting codes, which are practical methods whenever dealing with artificial entities. However, as observed in [26], the situation is radically different for scenarios in which the computational entities are biological. Indeed, from a biological perspective, a computational process can be considered “simple” only if it consists of very basic primitive operations, and is extremely lightweight. As a consequence, it is unlikely that biological entities are employing techniques like error-correcting codes to reduce the impact of noise in communications between them. Yet, biological signals are subject to noise, when generated, transmitted, and received. This rises the intriguing question of how entities in biological ensembles can cooperate in presence of noisy communications, but in absence of mechanisms such as error-correcting codes.

An important step toward understanding communications in biological ensembles has been achieved recently in [26], which showed how it is possible to cope with noisy communications in absence of coding mechanisms for solving complex tasks such as rumor-spreading and majority consensus. Such a result provides highly valuable hints on how complex tasks can be achieved in frameworks such as the immune system, bacteria populations, or super-organisms of social insects, despite the presence of noisy communications.

In the case of rumor-spreading, [26] assumes that a source-node initially handles a bit, set to some binary value, called the correct opinion. This opinion has to be transmitted to all nodes, in a noisy environment, modeled as a complete network with unreliable links. More precisely, messages are transmitted in the network according to the classical uniform push model [19, 32, 38] where, at each round, every node can send one binary opinion to a neighbor chosen uniformly and independently at random and, before reaching the receiver, that opinion is flipped with probability at most \(\frac{1}{2}-\epsilon \) with \(\epsilon >0\). It is proved that, even in this very noisy setting, the rumor-spreading problem can be solved quite efficiently. Specifically, [26] provides an algorithm that solves the noisy rumor-spreading problem in \(O(\frac{1}{\epsilon ^{2}}\log n)\) communication rounds, with high probabilityFootnote 1 (w.h.p.) in n-node networks, using \(O(\log \log n+\log (1/\epsilon ))\) bits of memory per nodeFootnote 2. Again, this algorithm exchanges solely opinions between nodes.

In the case of majority consensus, [26] assumes that some nodes are supporting opinion 0, some nodes are supporting opinion 1, and some other nodes are supporting no opinion. The objective is that all nodes eventually support the initially most frequent opinion (0 or 1). More precisely, let A be the set of nodes with opinion, and let \(b\in \{0,1\}\) be the majority opinion in A. The majority bias of A is defined as \(\frac{1}{2}(|A_{b}|-|A_{\bar{b}}|)/|A|\) where \(A_{i}\) is the set of nodes with opinion \(i\in \{0,1\}\). In the very same noisy communication model as above, [26] provides an algorithm that solves the noisy majority consensus problem for \(|A|=\Omega (\frac{1}{\epsilon ^{2}}\log n)\) with majority-bias \(\Omega (\sqrt{\log n/|A|})\). The algorithm runs in \(O(\frac{1}{\epsilon ^{2}}\log n)\) rounds, w.h.p., in n-node networks, using \(O(\log \log n+\log (1/\epsilon ))\) bits of memory per node. As for the case of rumor spreading, the algorithm exchanges solely opinions between nodes. In fact, the latter algorithm for majority consensus is used as a subroutine for solving the rumor-spreading problem. Note that the majority consensus algorithm of [26] requires that the nodes are initially aware of the size of A.

According to [26], both algorithms are optimal, since both rumor-spreading and majority consensus require \(\Omega (\frac{1}{\epsilon ^{2}}\log n)\) rounds w.h.p. in n-node networks.

Our objective is to extend the work of [26] to the natural case of an arbitrary number of opinions, to go beyond a proof of concept. The problem that results from this extension is an instance of the plurality consensus problem in the presence of noise, i.e., the problem of making the system converging to the initially most frequent opinion (i.e., the plurality opinion). Indeed, the plurality consensus problem naturally arises in several biological settings, typically for choosing between different directions for a flock of birds [11], different speeds for a school of fish [42], or different nesting sites for ants [27]. The computation of the most frequent value has also been observed in biological cells [15].

1.2 Our contribution

1.2.1 Our results

We generalize the results in [26] to the setting in which an arbitrary large number k of opinions is present in the system. In the context of rumor spreading, the correct opinion is a value \(i\in \{1,\ldots ,k\}\), for any constant \(k\ge 2\). Initially, one node supports this opinion i, and the other nodes have no opinions. The nodes must exchange opinions so that, eventually, all nodes support the correct opinion i. In the context of (relative) majority consensus, also known as plurality consensus, each node u initially supports one opinion \(i_{u}\in \{1,\ldots ,k\}\), or has no opinion. The objective is that all nodes eventually adopt the plurality opinion (i.e., the opinion initially held by more nodes than any other, but not necessarily by an overall majority of nodes). As in [26], we restrict ourselves to “natural” algorithms [16], that is, algorithms in which nodes only exchange opinions in a straightforward manner (i.e., they do not use the opinions to encode, e.g., part of their internal state). For both problems, the difficulty comes from the fact that every opinion can be modified during its traversal of any link, and switched at random to any other opinion. In short, we prove that there are algorithms solving the noisy rumor spreading problem and the noisy plurality consensus problem for multiple opinions, with the same performances and probabilistic guarantees as the algorithms for binary opinions in [26].

1.2.2 The technical challenges

Generalizing noisy rumor spreading and noisy majority consensus to more than just two opinions requires to address a series of issues, some conceptual, others technical.

Conceptually, one needs first to redefine the notion of noise. In the case of binary opinions, the noise can just flip an opinion to its complement. In the case of multiple opinions, an opinion i subject to a modification is switched to another opinion \(i'\), but there are many ways of picking \(i'\). For instance, \(i'\) can be picked uniformly at random (u.a.r.) among all opinions. Or, \(i'\) could be picked as one of the “close opinions”, say, either \(i+1\) or \(i-1\) modulo k. Or, \(i'\) could be “reset” to, say, \(i=1\). In fact, there are very many alternatives, and not all enable rumor spreading and plurality consensus to be solved. One of our contributions is to characterize noise matrices\(P=(p_{i,j})\), where \(p_{i,j}\) is the probability that opinion i is switched to opinion j, for which these two problems are efficiently solvable. Similar issues arise for, e.g., redefining the majority bias into a plurality bias.

The technical difficulties are manifold. A key ingredient of the analysis in [26] is a fine estimate of how nodes can mitigate the impact of noise by observing the opinions of many other nodes, and then considering the mode of such sample. Their proof relies on the fact that for the binary opinion case, given a sample of size \(\gamma \), the number of 1s and 0s in the sample sum up to \(\gamma \). Even for the ternary opinion case, the additional degree of freedom in the sample radically changes the nature of the problem, and the impact of noise is statistically far more difficult to handle.

Also, to address the multivalued case, we had to cope with the fact that, in the uniform push model, the messages received by nodes at every round are correlated. To see why, consider an instance of the system in which a certain opinion b is held by one node only, and there is no noise at all. In one round, only one other node can receive b. It follows that if a certain node u has received b, no other nodes have received it. Thus, the messages each node receives are not independent. In [25] (conference version of [26]), probability concentration results are claimed for random variables (r.v.) that depend on such messages, using Chernoff bounds. However, Chernoff bounds have been proved to hold only for random variables whose stochastic dependence belongs to a very limited class (see for example [22]). In [26], it is pointed out that the binary random variables on which the Chernoff bound is applied satisfy the property of being negatively 1-correlated (see Section 1.7 in [26] for a formal definition). In our analysis, we show instead how to obtain concentration of probability in this dependent setting by leveraging Poisson approximation techniques. Our approach has the following advantage: instead of showing that the Chernoff bound can be directly applied to the specific involved random variables, we show that the execution of the given protocol, on the uniform push model, can be tightly approximated with the execution of the same protocol on a another suitable communication model, that is not affected by the stochastic correlation that affects the uniform push model.

1.3 Other related work

By extending the work of [26], we contribute to the theoretical understanding of how communications and interactions function in biological distributed systems, from an algorithmic perspective [2, 3, 6,7,8, 13, 14, 34]. We refer the reader to [26] for a discussion on the computational aspects of biological distributed systems, an overview of the rumor spreading problem in distributed computing, as well as its biological significance in the presence of noise. In this section, we mainly discuss the previous technical contributions from the literature related to the novelty of our work, that is the extension to the case of several different opinions.

We remark that, in the following, we say that a protocol solves a problem within a given time if a correct solution is achieved with high probability within said time. In the context of population protocol, the problem of achieving majority consensus in the binary case has been solved by employing a simple protocol called undecided-state dynamic [7]. In the uniform push model, the binary majority consensus problem can be solved very efficiently as a consequence of a more general result about computing the median of the initial opinions [20]. Still in the uniform push model, the undecided state dynamic has been analyzed in the case of an arbitrarily large number of opinions, which may even be a function of the number of agents in the system [9]. A similar result has been obtained for another elementary protocol, so-called 3-majority dynamics, in which, at each round, each node samples the opinion of three random nodes, and adopts the most frequent opinion among these three [10]. The 3-majority dynamics has also been shown to be fault-tolerant against an adversary that can change up to \(O(\sqrt{n})\) agents at each round [10, 20]. Other work has analyzed the undecided-state dynamics in asynchronous models with a constant number of opinions [21, 31, 37], and the h-majority dynamics (or slight variations of it) on different graph classes in the uniform push model [1, 18]. The analysis of the undecided-state dynamics in [9] has been followed by a series of work which have used it to design optimal plurality consensus algorithms in the uniform pull model [29, 30].

A general result by Kempe et al. [33] shows how to compute a large class of functions in the uniform push model. However, their protocol requires the nodes to send slightly more complex messages than their sole current opinion, and its effectiveness heavily relies on a potential function argument that does not hold in the presence of noise.

To the best of our knowledge, we are the first considering the plurality consensus problem in the presence of noise.

2 Model and formal statement of our results

In this section we formally define the communication model, the main definitions, the investigated problems and our contribution to them.

As discussed in Sect. 1.1, intuitively we look for protocols that are simple enough to be plausible communication strategies for primitive biological system. We believe that the computational investigation regarding biologically-feasible protocols is still too premature for a reasonable attempt to provide a general formal definition of what constitutes a biologically feasible computation. Hence, in the following we restrict our attention solely on the biological significance of the rumor-spreading and plurality consensus problems and the corresponding protocols that we consider.

Regarding the problems of multivalued rumor spreading and plurality consensus, while for practical reasons many experiments on collective behavior have been designed to investigate the binary-decision setting, the considered natural phenomena usually involve a decision among a large number of different options [17]: famous examples in the literature include cockroaches aggregating in a common site [5], and the house-hunting process of ant colonies when seeking a new site to relocate their nest [28] or of honeybee swarms when a portion of a strong colony branches from it in order to start a new one [40, 41]. Therefore, it is natural to ask what trade-offs and constraints are required by the extension of the results in [26] to the multivalued case.

Regarding the solution we consider, as illustrated in Sects. 2.3 and 3.1, we consider a natural generalization of the protocol given in [26], which is essentially an elementary combination of sampling and majority operations. These elementary operations have extensively been observed in the aforementioned experimental settings [17].

2.1 Communication model and definition of the problems

The communication model we consider is essentially the uniform push model [19, 32, 38], where in each (synchronous) round each agent can send (push) a message to another agent chosen uniformly at random. This occurs without having the sender or the receiver learning about each other’s identity. Note that it may happen that several agents push a message to the same node u at the same round. In the latter case we assume that the nodes receive them in a random order; we discuss this assumption in detail in Sect. 2.1.1.

We study the problems of rumor-spreading and plurality consensus. In both cases, we assume that nodes can support opinions represented by an integer in \([k]=\{1,\dots ,k\}\). Additionally, there may be undecided nodes that do not support any opinion, which represents nodes that are not actively aware that the system has started to solve the problem; thus, undecided nodes are not allowed to send any message before receiving any of them.

-

In rumor spreading, initially, one node, called the source, has an opinion \(m\in \{1,\dots ,k\}\), called the correct opinion. All the other nodes have no opinion. The objective is to design a protocol insuring that, after a certain number of communication rounds, every node has the correct opinion m.

-

In plurality consensus, initially, for every \(i\in \{1,\dots ,k\}\), a set \(A_{i}\) of nodes have opinion i. The sets \(A_{i}\), \(i=1,\dots ,k\), are pairwise disjoint, and their union does not need to cover all nodes, i.e., there may be some undecided nodes with no opinion initially. The objective is to design a protocol insuring that, after a certain number of communication rounds, every node has the plurality opinion, that is, the opinion m with relative majority in the initial setting (i.e., \(|A_{m}|>|A_{j}|\) for any \(j\ne m\)).

Observe that the rumor-spreading problem is a special case of the plurality consensus problem with \(|A_{m}|=1\) and \(|A_{j}|=0\) for any \(j\ne m\).

Following the guidelines of [26], we work under two constraints:

-

1.

We restrict ourselves to protocols in which each node can only transmit opinions, i.e., every message is an integer in \(\{1,\dots ,k\}\).

-

2.

Transmissions are subject to noise, that is, for every round, and for every node u, if an opinion \(i\in \{1,\dots ,k\}\) is transmitted to node u during that round, then node u will receive message \(j\in \{1,\dots ,k\}\) with probability \(p_{i,j}\ge 0\), where \(\sum _{j=1}^{k}p_{i,j}=1\).

The noisy push model is the uniform push model together with the previous two constraints. The probabilities \(\{p_{i,j}\}_{i,j\in [k]}\) can be seen as a transition matrix, called the noise matrix, and denoted by \(P=(p_{i,j})_{i,j\in [k]}\). The noise matrix in [26] is simply

2.1.1 The reception of simultaneous messages

In the uniform push model, it may happen that several agents push a message to the same node u at the same round. In such cases, the model should specify whether the node receives all such messages, only one of them or neither of them. Which choice is better depends on the biological setting that is being modeled: if the communication between the agents of the system is an auditory or tactile signal, it could be more realistic to assume that simultaneous messages to the same node would “collide”, and the node would not be able to grasp any of them. If, on the other hand, the messages represent visual or chemical signals (see e.g., [10, 11, 27, 42]), then it may be unrealistic to assume that nodes cannot receive more than one of such messages at the same round and besides, by a standard balls-into-bins argument (e.g., by applying Lemma 3), it follows that in the uniform push model at each round no node receives more than \({\mathcal O}(\log n)\) messages w.h.p. In this work we thus consider the model in which all messages are received, also because such assumption allows us to obtain simpler proofs than the other variants. We finally note that our protocol does not strictly need such assumption, since it only requires the nodes to collect a small random sample of the received messages. However, since we look at the latter feature as a consequence of active choices of the nodes rather than some inherent property of the environment, we avoid to weaken the model to the point that it matches the requirements of the protocol.

2.1.2 On the role of synchronicity in the result

An important aspect of many natural biological computations is their tolerance with respect to a high level of asynchrony. Following [26], in this work we tackle the noisy rumor-spreading and plurality consensus problems by assuming that agents are provided a shared clock, which they can employ to synchronize their behavior across different phases of a protocol. In [26, Section 3], it is shown how substantially relax the previous assumption by assuming, instead, that a source agent can broadcast a starting signal to the rest of the system to initiate the execution of the protocol. That is, a simple rumor-spreading procedure is employed to awake the agents which join the system asynchronously, and it is shown that with high probability the level of asynchrony (i.e. the largest difference among the agents’ estimates of the time since the start of the execution of the protocol), is logarithmically bounded with high probability. It thus follows that their results can be generalized to the setting in which source agents initiate the execution of the protocol by waking up the rest of the system, with only a logarithmic overhead factor in the running time. We defer the reader to [26, Section 3] for formal details regarding this synchronization procedure. Our generalization of the results in [26] is independent from any aspect which concerns the aforementioned procedure. Hence, their relaxation holds for our results as well, with the same \(\log n\) additional factor in the running time. It is an open problem to obtain a simple procedure to wake up the system while incurring a smaller overhead than the logarithmic one given by [26]. Finally, we remark that, more generally, the research on solving fundamental coordination problems such as plurality consensus in fully-asynchronous communication models such as population protocols is an active research area [4, 24]. We believe that obtaining analogous results to those provided here in a noisy version of population protocols is an interesting direction for future research.

2.2 Plurality bias, and majority preservation

When time proceeds, our protocols will result in the proportion of nodes with a given opinion to evolve. Note that there might be nodes who do not support any opinion at time t. As mentioned in the previous section, we call such nodes undecided. We denote by \(a^{(t)}\) the fraction of nodes supporting any opinion at time t and we call the nodes contributing to \(a^{(t)}\)opinionated. Consequently, the fraction of undecided nodes at time t is \(1-a^{(t)}\). Let \(c_{i}^{(t)}\) be the fraction of opinionated nodes in the system that support opinion \(i\in [k]\) at the beginning of round t, so that \(\sum _{i\in [k]} c_{i}^{(t)} = a^{(t)}\). Let \(\hat{c}_{i}^{(t)}\) be the fraction of opinionated nodes which receive at least one message at time \(t-1\) and support opinion \(i\in [k]\) at the beginning of round t. We write \( \mathbf {c}^{(t)}=(c_{1}^{(t)},\ldots ,c_{k}^{(t)})\) to denote the opinion distribution of the opinions at time t. Similarly, let \(\hat{\mathbf {c}}^{(t)}=(\hat{c}_{1}^{(t)},\ldots ,\hat{c}_{k}^{(t)})\). In particular, if every node would simply switch to the last opinion it received, then

That is,

where P is the noise matrix. In particular, in the absence of noise, we have \(P=I\) (the identity matrix), and if every node would simply copy the opinion that it just received, we had \( \mathbb {E}[\hat{\mathbf {c}}^{(t+1)}\mid \mathbf {c}^{(t)}]=\mathbf {c}^{(t)}\). So, given the opinion distribution at round t, from the definition of the model it follows that the messages each node receives at round \(t+1\) can equivalently be seen as being sent from a system without noise, but whose opinion distribution at round t is \(\mathbf {c}^{(t)}\cdot P\).

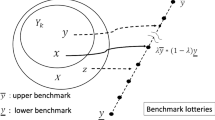

Recall that m denotes the initially correct opinion, that is, the source’s opinion in the rumor-spreading problem, and the initial plurality opinion in the plurality consensus problem. The following definition naturally extends the concept of majority bias in [26] to plurality bias.

Definition 1

Let\(\delta >0\). Anopinion distribution\(\mathbf {c}\)is said to be\(\delta \)-biased toward opinion mif\( c_{m}-c_{i}\ge \delta \)for all\(i\ne m\).

In [26], each binary opinion that is transmitted between two nodes is flipped with probability at most \(\frac{1}{2}-\epsilon \), withFootnote 3\(\epsilon =n^{-\frac{1}{4}+\eta }\) for an arbitrarily small \(\eta >0\). Thus, the noise is parametrized by \(\epsilon \). The smaller \(\epsilon \), the more noisy are the communications. We generalize the role of this parameter with the following definition.

Definition 2

Let\(\epsilon =\epsilon (n)\)and\(\delta =\delta (n)\)be positive. A noise matrixPis said to be\((\epsilon , \delta )\)-majority-preserving (\((\epsilon , \delta )\)-m.p.) with respect to opinion mif, for every opinion distribution\(\mathbf {c}\)that is\(\delta \)-biased toward opinion m, we have\( \left( \mathbf {c}\cdot P\right) _{m}-\left( \mathbf {c}\cdot P\right) {}_{i}>\epsilon \,\delta \)for all\(i\ne m\).

In the rumor-spreading problem, as well as in the plurality consensus problem, when we say that a noise matrix is \((\epsilon , \delta )\)-m.p., we implicitly mean that it is \((\epsilon , \delta )\)-m.p. with respect to the initially correct opinion. Because of the space constraints, we defer the discussion on the class of \((\epsilon , \delta )\)-m.p. noise matrices in Sect. 4 (including its tightness w.r.t. Theorems 1 and 2).

2.3 Our formal results

We show that a natural generalization of the protocol in [26] solves the rumor spreading problem and the plurality consensus problem for an arbitrary number of opinions k. More precisely, using the protocol which we describe in Sect. 3.1, we can establish the following two results, whose proof can be found in Sect. 3.

Theorem 1

Assume that the noise matrix P is \((\epsilon , \delta )\)-m.p. with \(\epsilon =\Omega (n^{-\frac{1}{4}+\eta })\) for an arbitrarily small constant \(\eta >0\) and  . The noisy rumor-spreading problem with k opinions can be solved in \(O(\frac{\log n}{\epsilon ^{2}})\) communication rounds, w.h.p., by a protocol using \(O(\log \log n+\log \frac{1}{\epsilon })\) bits of memory at each node.

. The noisy rumor-spreading problem with k opinions can be solved in \(O(\frac{\log n}{\epsilon ^{2}})\) communication rounds, w.h.p., by a protocol using \(O(\log \log n+\log \frac{1}{\epsilon })\) bits of memory at each node.

Theorem 2

Let S with \(|S|=\Omega (\frac{1}{\epsilon ^{2}}\log n)\) be an initial set of nodes with opinions in [k], the rest of the nodes having no opinions. Assume that the noise matrix P is \((\epsilon , \delta )\)-m.p. for some \(\epsilon >0\), and that S is \(\Omega (\sqrt{\log n/|S|})\)-majority-biased. The noisy plurality consensus problem with k opinions can be solved in \(O(\frac{\log n}{\epsilon ^{2}})\) communication rounds, w.h.p., by a protocol using \(O(\log \log n+\log \frac{1}{\epsilon })\) bits of memory at each node.

For \(k=2\), we get the theorems in [26] from the above two theorems. Indeed, the simple 2-dimensional noise matrix of Eq. (1) is \(\epsilon \)-majority-biased. Note that, as in [26], the plurality consensus algorithm requires the nodes to known the size |S| of the set S of opinionated nodes.

3 The analysis

In this section we prove Theorems 1 and 2 by generalizing the analysis of Stage 1 given in [26] and by providing a new analysis of Stage 2. Note that the proof techniques required for the generalization to arbitrary k significantly depart from those in [26] for the case \(k=2\). In particular, our approach provides a general framework to rigorously deal with many kind of stochastic dependences among messages in the uniform push model (Fig. 1).

3.1 Definition of the protocol

We describe a rumor spreading protocol performing in two stages. Each stage is decomposed into a number of phases, each one decomposed into a number of rounds. During each phase of the two stages, the nodes apply the simple rules given below.

3.1.1 The rule during each phase of Stage 1

Nodes that already support some opinion at the beginning of the phase push their opinion at each round of the phase. Nodes that do not support any opinion at the beginning of the phase but receive at least one opinion during the phase start supporting an opinion at the end of the phase, chosen u.a.r. (counting multiplicities) from the received opinions.Footnote 4 In other words, each node tries to acquire an opinion during each phase of Stage 1, and, as it eventually receives some opinions, it starts supporting one of them (chosen u.a.r.) from the beginning of the next phase. In particular, opinionated nodes never change their opinion during the entire stage.

More formally, let \(\phi ,\beta \), and s be three constants satisfying \(\phi>\beta > s\). The rounds of Stage 1 are grouped in \(T+2\) phases with \( T=\lfloor \log (n/(2s/\epsilon ^{2} \log n))/\log (\beta /\epsilon ^{2}+1)\rfloor \). Phase 0 takes \(s/\epsilon ^{2} \log n\) rounds, phase \(T+1\) takes \(\phi /\epsilon ^{2} \log n\) rounds, and each phase \(j\) with \(1\le j\le T\) takes \(\beta /\epsilon ^{2}\) rounds. We denote with \(\tau _{j}\) the end of the last round of phase \(j\).

Let \(t_{u}\) be the first time in which u receives any opinion since the beginning of the protocol (with \(t_u=0\) for the source). Let \(j_{u}\) be the phase of \(t_{u}\), and let \(\text{ val }(u)\) be an opinion chosen u.a.r. by u among those that it receives during phase \(j_u\).Footnote 5 During the first stage of the protocol each node applies the following rule.

3.1.2 The rule during each phase of Stage 2

During each phase of Stage 2, every node pushes its opinion at each round of the phase. At the end of the phase, each node that received “enough” opinions takes a random \(\hbox {sample}^{4}\) of them, and starts supporting the most frequent opinion in that sample (breaking ties u.a.r.).

More formally, the rounds of stage 2 are divided in \(T^{\prime }+1\) phases with \(T^{\prime }=\lceil \log (\sqrt{n/\log n}) \rceil \). Each phase j, \(0\le j\le T^{\prime }-1\), has length \(2\ell \) with \(\ell = \lceil c/\epsilon ^{2} \rceil \) for some large-enough constant \(c>0\), and phase \(T^{\prime }\) has length \(2\ell '\) with \(\ell '=O(\epsilon ^{-2}\log n)\). For any finite multiset A of elements in \(\{1,\ldots ,k\}\), and any \(i\in \{1,\ldots ,k\}\), let \(\text{ occ }(i,A)\) be the number of occurrences of i in A, and let \( \mathrm {mode}(A)= \{ i \in \{1,\dots ,k\} \mid \text{ occ }(i,A) \ge \text{ occ }(j,A) \; \text{ for } \text{ every } j \in \{1,\ldots ,k\}\}. \) We then define \(\mathrm {maj}(A)\) as the most frequent value in A (breaking ties u.a.r.), i.e., \(\mathrm {maj}(A)\) is the r.v. on \(\{1,\ldots ,k\}\) such that \( \Pr ( \mathrm {maj}(A) = i ) ={\mathbb {1}_{\left\{ i\in \mathrm {mode}(A)\right\} }}/{\left| \mathrm {mode}(A)\right| }. \) Let \(R_j(u)\) be the multiset of messages received by node u during phase j. During the second stage of the protocol each node applies the following rule.

Let us remark that the reason we require the use of sampling in the previous rule is that at a given round a node may receive much more messages than 2L. Thus, if the nodes were to collect all the messages they receive, some of them would need much more memory than our protocol does. Finally, observe that overall both stages 1 and 2 take \(O(\frac{1}{\epsilon ^{2}}\log n)\) rounds.

3.2 Pushing colored balls-into-bins

Before delving into the analysis of the protocol, we provide a framework to rigorously deal with the stochastic dependence that arises between messages in the uniform push model. Let process \(\mathbf {O}\) be the process that results from the execution of the protocol of Sect. 3.1 in the uniform push model. In order to apply concentration of probability results that requires the involved random variables to be independent, we view the messages as balls, and the nodes as bins, and employ Poisson approximation techniques. More specifically, during each phase j of the protocol, let \(M_{j} \) be the set of messages that are sent to random nodes, and \(N_{j} \) be the set of messages sent after the noise has acted on them. (In other words, \(N_{j} = \bigcup _{u}R_j(u)\)). We prove that, at the end of phase j, we can equivalently assume that all the messages \(M_{j}\) have been sent to the nodes according to the following process.

Definition 3

The balls-into-bins process \(\mathbf {B}\) associated to phase j is the two-step process in which the nodes represent bins and all messages sent in the phase represent colored balls, with each color corresponding to some opinion. Initially, balls are colored according to \(M_{j} \). At the first step, each ball of color \(i\in \{1,\ldots ,k\}\) is re-colored with color \(j \in \{1,\ldots ,k\}\) with probability \( p_{i,j}\), independently of the other balls. At the second step all balls are thrown into the bins u.a.r. as in a balls-into-bins experiment.

Claim 1

Given the opinion distribution and the number of active nodes at the beginning of phase j, the probability distribution of the opinion distribution and the number of active nodes at the end of phase j in process \(\mathbf {O}\) is the same as if the messages were sent according to process \(\mathbf {B}\).

It is not hard to see that Claim 1 holds in the case of a single round. For more than one round, it is crucial to observe that the way each node u acts in the protocol depends only on the received messages \(R_j(u)\), regardless of the order in which these messages are received. As an example, consider the opinion distribution in which one node has opinion 1, one other node has opinion 2, and all other nodes have opinion 3. Suppose that each node pushes its opinion for two consecutive rounds. Since, at each round, exactly one opinion 1 and exactly one opinion 2 are pushed, no node can receive two 1s during the first round and then two 2s during the second round, i.e., no node can possibly receive the sequence of messages “1,1,2,2” in this exact order. Instead, in process \(\mathbf {B}\) such a sequence is possible.

Proof of Claim 1

In both process \(\mathbf {B}\) and process \(\mathbf {O}\), at each round, the noise acts independently on each ball/message of a given color/opinion, according to the same probability distribution for that color/opinion. Then, in both processes, each ball/message is sent to some bin/node chosen u.a.r. and independently of the other balls/messages. Indeed, we can couple process \(\mathbf {B}\) and process \(\mathbf {O}\) by requiring that:

-

1.

each ball/message is changed by the noise to the same color/value, and

-

2.

each ball/message ends up in the same bin/node.

Thus, the joint probability distribution of the sets \({\left\{ R_j(u)\right\} }_{u\in [n]}\) in process \(\mathbf {O}\) is the same as the one given by process \(\mathbf {B}\).

Observe also that, from the definition of the protocol (see the rule of Stage 1 and Stage 2 in Sect. 3.1), it follows that each node’s action depends only on the set \(R_j(u)\) of received messages at the end of each phase j, and does not depend on any further information such as the actual order in which the messages are received during the phase.

Summing up the two previous observations, we get that if, at the end of each phase j, we generate the \(R_j(u)\)s according to process \(\mathbf {B}\), and we let the protocol execute according to them, then we indeed get the same stochastic process as process \(\mathbf {O}\). \(\square \)

Now, one key ingredient in our proof is to approximate process \(\mathbf {B}\) using the following process \(\mathbf {P}\).

Definition 4

Given \(N_{j}\), process \(\mathbf {P}\) associated to phase j is the one-shot process in which each node receives a number of opinions i that is a random variable with distribution Poisson\((h_{i}/n)\), where \(h_{i}\) is the number of messages in \(N_{j}\) carrying opinion i, and each Poisson random variable is independent of the others.

Now we provide some results from the theory of Poisson approximation for balls-into-bins experiments that are used in Sect. 3.2. For a nice introduction to the topic, we refer to [36].

Lemma 1

Let \(\left\{ X_{j}\right\} _{j\in \left[ \tilde{n}\right] }\) be independent r.v. such that \(X_{j}\sim \hbox {Poisson}(\lambda _{j})\). The vector \((X_{1},\ldots ,X_{\tilde{n}})\) conditional on \(\sum _{j}X=\tilde{m}\) follows a multinomial distribution with \(\tilde{m}\) trials and probabilities \(\left( \frac{\lambda _{1}}{\sum _{j}\lambda _{j}},\ldots ,\frac{\lambda _{\tilde{n}}}{\sum _{j}\lambda _{j}}\right) \).

Lemma 2

Consider a balls-into-bins experiment in which \(h\) colored balls are thrown in n bins, where \(h_{i}\) balls have color i with \(i\in \left\{ 1,\ldots ,k\right\} \) and \(\sum _{i}h_{i}=h\). Let \(\left\{ X_{u,i}\right\} _{\begin{array}{c} u\in \left\{ 1,\ldots ,n\right\} , i\in \left\{ 1,\ldots ,k\right\} \end{array} }\) be the number of i-colored balls that end up in bin u, let \(f(x_{1,1},\ldots ,x_{n,1},x_{n,2},\ldots ,x_{n,k},z_{1},\ldots ,z_{n})\) be a non-negative function with positive integer arguments \(x_{1,1},\ldots ,x_{n,1},\!\)\(x_{n,2},\ldots ,x_{n,k},\!\)\(z_{1},\ldots ,z_{n}\), let \(\left\{ Y_{u,i}\right\} _{\begin{array}{c} u\in \left\{ 1,\ldots ,n\right\} , i\in \left\{ 1,\ldots ,k\right\} \end{array} }\) be independent r.v. such that \(Y_{u,i}\sim \)Poisson\((h_{i}/n)\) and let \(Z_{1},\ldots ,Z_{n}\) be integer valued r.v. independent from the \(X_{u,i}\)s and \(Y_{u,i}\)s. Then

Proof

To simplify notation, let \(\bar{Z}=(Z_{1},\ldots ,Z_{n})\), \(\bar{X}=(X_{1,1},\ldots ,X_{n,1},X_{n,2},\ldots ,X_{n,n})\), \(\bar{Y}=(Y_{1,1},\ldots ,Y_{n,1},Y_{n,2},\ldots ,Y_{n,n})\), \(\bar{Y}_{\sum }=(\sum _{u=1}^{n}Y_{u,1},\ldots ,\sum _{u=1}^{n}Y_{u,k})\), \(\lambda _{i}=h_{i}/n\), \(\bar{\lambda }=(\lambda _{1},\ldots ,\lambda _{k})\) and finally \(\bar{x}=(x_{1},\ldots ,x_{k})\) for any \(x_{1},\ldots ,x_{k}\). Observe that, while \(X_{u,i}\) and \(X_{v,i}\) are clearly dependent, \(X_{u,i}\) and \(X_{v,j}\) with \(i\ne j\) are stochastically independent (even if \(u=v\)). Indeed, the distribution of the r.v. \(\left\{ X_{u,i}\right\} _{u\in \left\{ 1,\ldots ,n\right\} }\) for each fixed i is multinomial with \(\lambda _{i}\) trials and uniform distribution on the bins. Thus, from Lemma 1 we have that \(\left\{ X_{u,i}\right\} _{u\in \left\{ 1,\ldots ,n\right\} }\)are distributed as \(\left\{ Y_{u,i}\right\} _{u\in \left\{ 1,\ldots ,n\right\} }\) conditional on \(\sum _{u=1}^{n}Y_{u,i}=\lambda _{i}\), that is

Therefore, we have

where, in the last inequality, we use that, by Stirling’s approximation, \(a!\le e\sqrt{a}(\frac{a}{e})^{a}\) for any \(a>0\). \(\square \)

From Lemmas 1 and 2, we get the following general result which says that if a generic event \(\mathcal {E} \) holds w.h.p in process \(\mathbf {P}\), it also holds w.h.p. in process \(\mathbf {O}\).

Lemma 3

Given the opinion distribution and the number of active nodes at the beginning of a fixed phase j, let \({\mathcal {E} }\) be an event that, at the end of that phase, holds with probability at least \(1-n^{-b}\) in process \(\mathbf {P}\), for some \(b> ( k \log h ) / ( 2 \log n ) \) with \(h = \sum _i h_i\).Footnote 6 Then, at the end of phase j, \({\mathcal {E} }\) holds w.h.p. also in process \(\mathbf {O}\).

Proof

Thanks to Claim 1, it suffices to prove that, at the end of phase j, \({\mathcal {E} }\) holds w.h.p. in process \(\mathbf {B}\).

Let \(\bar{\mathcal {E} }\) be the complementary event of \(\mathcal {E} \). Let \( h= |M_{j} | \) be the number of balls that are thrown in process \(\mathbf {B}\) associated to phase j, where \(h_{i}\) balls have color i with \(i\in \left\{ 1,\ldots ,k\right\} \) and \(\sum _{i}h_{i}=h\). Let \(\left\{ X_{u,i}\right\} _{\begin{array}{c} u\in \left\{ 1,\ldots ,n\right\} , i\in \left\{ 1,\ldots ,k\right\} \end{array} }\) be the number of i-colored balls that end up in bin u, let \(\left\{ Y_{u,i}\right\} _{\begin{array}{c} u\in \left\{ 1,\ldots ,n\right\} , i\in \left\{ 1,\ldots ,k\right\} \end{array} }\) be the independent r.v. of process \(\mathbf {P}\) such that \(Y_{u,i}\sim \)Poisson\((h_{i}/n)\) and let \(Z_{1},\ldots ,Z_{n}\) be integer valued r.v. independent from the \(X_{u,i}\)s and \(Y_{u,i}\)s.

Fix any realization of \(N_{j}\), i.e. any re-coloring of the balls in the first step of process \(\mathbf {B}\). By choosing f in Lemma 2 as the binary r.v. indicating whether event \(\bar{\mathcal {E} }\) has occurred, where \(\bar{\mathcal {E} }\) is a function of the r.v. \(X_{1,1},\ldots ,X_{n,1},\)\(X_{n,2},\ldots ,X_{n,k},\)\(Z_{1},...,Z_{n}\), we get

Thus, from Eq. (3), the Inequality of arithmetic and geometric means and the hypotheses on the probability of \(\mathcal {E} \), we get

Finally, let \(\mathcal {N}\) be the set of all possible realizations of \(N_{j}\). By the law of total probability over \(\mathcal {N}\), we get that

where in the last line we used the hypotheses on the probability of \(\bar{\mathcal {E} }\). \(\square \)

We now analyze the two stages of our protocol, starting with Stage 1. Note that, in the following two sections, the statements about the evolution of the process refer to process \(\mathbf {O}\).

3.3 Stage 1

The rule of Stage 1 is aimed at guaranteeing that, w.h.p., the system reaches a target opinion distribution from which the rumor-spreading problem becomes an instance of the plurality consensus problem. More precisely, we have the following.

Lemma 4

Stage 1 takes \(O(\frac{1}{\epsilon ^{2}}\log n)\) rounds, after which w.h.p. all nodes are active and \(\mathbf {c}^{(\tau _{T+1})}\) is \(\delta \)-biased toward the correct opinion, with \(\delta =\Omega (\sqrt{\log n/n})\).

Proof

The fact that an undecided node becomes opinionated during a phase only depends on whether it gets a message during that phase, regardless of the value of such messages. Hence, the proof that, w.h.p., \(a^{(\tau _{T+1})}=1\) is reduced to the analysis of the rule of Stage 1 as an information spreading process. First, by carefully exploiting the Chernoff bound and Lemma 3, we can establish Claims 2 and 3 below: \(\square \)

Claim 2

W.h.p., at the end of phase 0, we have \( s/\epsilon ^{2} \log n/3n \le a^{(\tau _{0})}\le s/\epsilon ^{2} \log n/n \).

Claim 3

W.h.p., at the end of phase \(j\), \(1\le j\le T\), we have

Proof of Claims 2 and 3

The probability that, in the process \(\mathbf {O}\), an undecided node becomes opinionated at the end of phase j is \(1-(1-\frac{1}{n})^{h}\) where \(h\) is the number of messages sent during that phase. In process \(\mathbf {P}\), this probability is \(1-e^{-\frac{h}{n}}\). By using that \(e^{\frac{x}{1+x}}\le 1+x\le e^{x}\) for \(\left| x\right| <1\) we see that \(1-e^{-\frac{h}{n}}\le 1 -( 1-{1}/{n})^{h}\le 1-e^{-\frac{h}{n-1}}\). Thus, we can prove Claims 2 and 3 for process \(\mathbf {P}\) by repeating essentially the same calculations as in the proofs of Claims 2.2 and 2.4 in [26]. Since the Poisson distributions in process \(\mathbf {P}\) are independent, we can apply the Chernoff bound as claimed in [26]. Finally, we can prove that the statements hold also for process \(\mathbf {O}\) thanks to Lemma 2. \(\square \)

From the previous two claims, and by the definition of T we get the following.

Lemma 5

W.h.p., at the end of phase T, we have \(a^{(\tau _{T+1})}=\Omega ((\beta /\epsilon ^{2}+1)^{T}a^{(\tau _{0})})=\Omega (\epsilon ^{2})\).

Finally, from Lemma 5, an application of the Chernoff bound gives us the following.

Lemma 6

W.h.p., at the end of Stage 1, all nodes are opinionated.

As for the fact that, w.h.p., \(\mathbf {c}^{(\tau +1)}\) is a \(\delta \)-biased opinion distribution with \(\delta =\Omega (\sqrt{\log n/n})\), we can prove the following.

Lemma 7

W.h.p., at the end of each phase \(j\) of Stage 1, we have an \((\epsilon /2)^{j}\)-biased opinion distribution.

Proof

We prove the lemma by induction on the phase number. The case \(j=1\) is a direct application of Lemma 16 to \(c_{m}^{(\tau _{1})}-c_{i}^{(\tau _{1})}\) (\(i\ne m\)), where the number of opinionated nodes is given by Claim 2, and, where the independence of the r.v. follows from the fact that each node that becomes opinionated in the first phase has necessarily received the messages from the source-node. Now, suppose that the lemma holds up to phase \(j-1\le T\). Let  be the set of nodes that become opinionated during phase \(j\). Recall the definition of \(M_{ j} \) and \(N_{ j} \) from Sect. 3.2, and observe that \(\left| M_{ j} \right| = \left| N_{ j} \right| = \left( \tau _{j}- \tau _{j-1}\right) n\cdot a^{(\tau _{j-1})}\), and that the number of times opinion i occurs in \(M_{j}\) is \(\left| M_{j}\right| c_{i}^{(\tau _{j-1})}\). Let us identify each message in \(M_{j}\) with a distinct number in \(1,\ldots ,\left| M_{j}\right| \), and let \(\left\{ X_{w}(i)\right\} _{w\in \left\{ 1,\ldots ,\left| M_{j}\right| \right\} }\) be the binary r.v. such that \( X_{w}(i)=1\) if and only if w is i after the action of the noise. The frequency of opinion i in \(N_{j}\) is \(\frac{1}{\left| N_{j}\right| }\sum _{w=1}^{\left| N_{j}\right| }X_{w}(i)\).

be the set of nodes that become opinionated during phase \(j\). Recall the definition of \(M_{ j} \) and \(N_{ j} \) from Sect. 3.2, and observe that \(\left| M_{ j} \right| = \left| N_{ j} \right| = \left( \tau _{j}- \tau _{j-1}\right) n\cdot a^{(\tau _{j-1})}\), and that the number of times opinion i occurs in \(M_{j}\) is \(\left| M_{j}\right| c_{i}^{(\tau _{j-1})}\). Let us identify each message in \(M_{j}\) with a distinct number in \(1,\ldots ,\left| M_{j}\right| \), and let \(\left\{ X_{w}(i)\right\} _{w\in \left\{ 1,\ldots ,\left| M_{j}\right| \right\} }\) be the binary r.v. such that \( X_{w}(i)=1\) if and only if w is i after the action of the noise. The frequency of opinion i in \(N_{j}\) is \(\frac{1}{\left| N_{j}\right| }\sum _{w=1}^{\left| N_{j}\right| }X_{w}(i)\).

Thanks to Lemma 3, it suffices to prove the lemma for process \(\mathbf {P}\). By definition, in process \(\mathbf {P}\), for each i, the number of messages with opinion i that each node receives conditional on \(N_{ j} \) follows a Poisson \((\frac{1}{n}\sum _{w=1}^{\left| N_{j} \right| }X_{w}\left( i)\right) \) distribution. Each node u that becomes opinionated during phase \(j\) gets at least one message during the phase. Thus, from Lemma 1, the probability that u gets opinion i conditional on \(N_{ j} \) is

Since opinionated nodes never change opinion during Stage 1, the bias of \(\mathbf {c}^{(\tau _{j})}\) is at least the minimum between the bias of \(\mathbf {c}^{(\tau _{j-1})}\) and the bias among the newly opinionated nodes in \(S_{j}\). Hence, we can apply the Chernoff bound to the nodes in \(S_{j}\) to prove that the bias at the end of phase \(j\) is, w.h.p.Footnote 7,

where \(\tilde{\delta }_{j}=O(\sqrt{\log n/|S_{j}|})\).

Moreover, note that

Furthermore, (conditional on \(\mathbf {c}^{(\tau _{j-1})}\) and \(a^{(\tau _{j-1})}\)) the r.v. \(\left\{ X_{w} (i) \right\} _{w \in \left\{ 1,\ldots ,\left| N_{j}\right| \right\} }\) are independent. Thus, for each \(i\ne m\), from Claim 3, and by applying the Chernoff bound on \(\sum _{w=1}^{\left| N_{j}\right| }X_{w}(m)\), and on \(\sum _{w=1}^{\left| N_{j}\right| }X_{w}(i)\), we get that w.h.p.

where \(\delta _{j}=O(\sqrt{\log n/|N_{j}|})\).

From Claims 2 and 3, it follows that \(\tilde{\delta }_{j},\delta _{j}\le \frac{1}{4}\) w.h.p. Thus by putting together Eqs. (4) and (5) via the chain rule, we get that, w.h.p.,

\(\square \)

Lemma 7 implies that, w.h.p., we get a bias \(\epsilon ^{T+2}=\Omega (\sqrt{\log n/n})\) at the end of Stage 1, which completes the proof of Lemma 4. \(\square \)

3.4 Stage 2

As proved in the previous section, w.h.p., all nodes are opinionated at the end of Stage 1, and the final opinion distribution is \(\Omega (\sqrt{\log n/n})\)-biased. Now, we have that the rumor-spreading problem is reduced to an instance of the plurality consensus problem. The purpose of Stage 2 is to progressively amplify the initial bias until all nodes support the plurality opinion, i.e., the opinion originally held by the source node.

During the first \(T^{\prime }\) phases, it is not hard to see that, by taking \(c\) large enough, a fraction arbitrarily close to 1 of the nodes receives at least \(\ell \) messages, w.h.p. Each node u in such fraction changes its opinion at the end of the phase. With a slight abuse of notation, let \({\text {maj}}_{\ell }(u) = {\text {maj}}{(\mathcal {S}(u))}\) be u’s new opinion based on the \(\ell = |\mathcal {S}(u)|\) randomly sampled received messages. We show that, w.h.p., these new opinions increase the bias of the opinion distribution toward the plurality opinion by a constant factor \(>1\).

For the sake of simplicity, we assume that \(\ell \) is odd (see Appendix B for details on how to remove this assumption).

Proposition 1

Suppose that, at the beginning of phase j of Stage 2 with \(0\le j\le T^{\prime }-1\), the opinion distribution is \(\delta \)-biased toward m. In process \(\mathbf {P}\), if a node u changes its opinion at the end of the phase, then, for any \(i\ne m\), we have

where

First, we prove Eq. (6) for \(k=2\). We then obtain the general case by induction. The proof for \(k=2\) is based on a known relation between the cumulative distribution function of the binomial distribution, and the cumulative distribution function of the beta distribution. This relation is given by the following lemma.

Lemma 8

Given \(p\in (0,1)\) and \(0\le j\le \ell \) it holds

Proof

By integrating by parts, for \(j<\ell -1\) we have

where, in the last equality, we used the identity

Note that when \(j=\ell -1\), Eq. (7) becomes

Hence, we can unroll the recurrence given by Eq. (7) to obtain

concluding the proof. \(\square \)

Lemma 8 allows us to express the survival function of a binomial sample as an integral. Thanks to it, we can prove Proposition 1 when \(k=2\).

Lemma 9

Let \(\mathbf {c}=(c_{1},c_{2})\) be a \(\delta \)-biased opinion distribution during Stage 2. In process \(\mathbf {P}\), for any node u, we have \( \Pr \left( {\text {maj}}_{\ell }(u)=m\right) -\Pr \left( {\text {maj}}_{\ell }(u)=3-m\right) \ge \sqrt{{2\ell } / {\pi }} \cdot g\left( \delta ,\ell \right) . \)

Proof

Without loss of generality, let \(m=1\). Let \(X_{1}^{(\ell )}\) be a r.v. with distribution \(Bin(\ell ,p_{1})\), and let \(X_{2}^{(\ell )}=\ell -X_{1}^{(\ell )}\). By using Lemma 8, we get

By setting \(t=z-\frac{1}{2}\), and rewriting \(p_{1} = \frac{p_{1}-p_{2}}{2} + \frac{1}{2}\) and \(p_{2}=\frac{p_{2}-p_{1}}{2}+\frac{1}{2}\) we obtain

For any \(t\in (-\frac{y}{2}, \frac{y}{2})\subseteq (-\frac{p_{1}-p_{2}}{2}, \frac{p_{1}-p_{2}}{2})\), it holds

Thus, for any \(y\in (-p_{1}+p_{2},p_{1}-p_{2})\) we have

The r.h.s. of Eq. (8) is maximized w.r.t. \(y\in (-p_{1}+p_{2},p_{1}-p_{2})\) when

Hence, for \(p_{1}-p_{2}<\frac{1}{\sqrt{\ell }}\), we get

For \(p_{1}-p_{2}\ge \frac{1}{\sqrt{\ell }}\) we get

By using the fact that g is a non-decreasing function w.r.t. its first argument, we obtain

Finally, by using the bounds \({2r \atopwithdelims ()r} \ge \frac{2^{2r}}{\sqrt{\pi r}} e^{\frac{1}{9r}}\) (see Lemma 13), and \(e^{x}\ge 1-x\) together with the identityFootnote 8

we get

concluding the proof. \(\square \)

Next we show how to lower bound the above difference with a much simpler expression.

Lemma 10

In process \(\mathbf {P}\), during Stage 2, for any node u, \(\Pr ({\text {maj}}_{\ell }(u)=m)-\Pr ({\text {maj}}_{\ell }(u)=3-m)\) is at least \( \Pr (X_{1}^{(\ell )}>X_{2}^{(\ell )},...,X_{k}^{(\ell )}) -\Pr (X_{i}^{(\ell )}>X_{1}^{(\ell )}, ..., X_{i-1}^{(\ell )}, X_{i+1}^{(\ell )},...,X_{k}^{(\ell )}), \) where \(\bar{X}^{(\ell )}=(X_{1}^{(\ell )},...,X_{k}^{(\ell )})\) follows a multinomial distribution with \(\ell \) trials and probability distribution \(\mathbf {c}\cdot P\).

Proof

Without loss of generality, let \(m=1\). Let \(\mathbf {x}=(x_{1},...,x_{k})\) denote a generic vector with positive integer entries such that \(\sum _{j=1}^{k}x_{j}=\ell \), let \(W(\mathbf {x})\) be the set of the greatest entries of \(\mathbf {x}\), and, for \(j\in \left\{ 1,i\right\} \), let

-

\(A_{j}^{(!)} = \left\{ \mathbf {x} \,|\, W(\mathbf {x})=\{ j\} \right\} \),

-

\(A_{j}^{(=)}= \left\{ \mathbf {x} \,|\,1,i\in W(\mathbf {x}) \right\} \),

-

\(A_{1}^{(\ne )}= \left\{ \mathbf {x} \,|\, 1\in W(\mathbf {x}) \wedge i\not \in W(\mathbf {x})\wedge \left| W(\mathbf {x})\right| >1\right\} \) and

-

\(A_{i}^{(\ne )}= \left\{ \mathbf {x} \,|\, i\in W(\mathbf {x})\wedge 1 \not \in W(\mathbf {x}) \wedge \left| W(\mathbf {x})\right| >1\right\} \).

It holds

Let

be the vector function that swaps the entries \(x_{1}\) and \(x_{i}\) in \(\mathbf {x}\). \(\sigma \) is clearly a bijection between the sets \(A_{1}^{(!)}\),\(A_{1}^{(=)}\),\(A_{1}^{(\ne )}\) and \(A_{i}^{(!)}\), \(A_{i}^{(=)}\), \(A_{i}^{(\ne )}\), respectively, namely

where  denotes a bijection.

denotes a bijection.

Moreover, for all \(\mathbf {x}\in A_{j}^{(=)}\), it holds

Therefore

Furthermore, for all \(\mathbf {x}\in A_{1}^{(\ne )}\), we have

where \(\sigma (\mathbf {x})\in A_{i}^{(\ne )}\). From Eq. (11) we thus have that

From Eq. (9), (10) and (12) we finally get

concluding the proof of Lemma 10. \(\square \)

Intuitively, Lemma 10 says that the set of events in which a tie occurs among the most frequent opinions in the node’s sample of observed messages does not favor the probability that the node picks the wrong opinion. Thus, by avoiding considering those events, we get a lower bound on \(\Pr ({\text {maj}}_{\ell }(u)=1)-\Pr ({\text {maj}}_{\ell }(u)=i)\).

Thanks to Lemma 10, the proof of Eq. (6) reduces to proving the following.

Lemma 11

For any fixed k, and with \(\bar{X}\) defined as in Lemma 10, we have

Proof

We prove Eq. (13) by induction. Lemma 9 provides us with the base case for \(k=2\). Let us assume that, for \(k\le \kappa \), Eq. (13) holds. For \(k=\kappa +1\), by using the law of total probability, we have

Now, \(\arg \max _j \{ X_{j}^{\left( \ell \right) } \} = X_{i}^{\left( \ell \right) }\) and \(X_{\kappa +1}^{\left( \ell \right) }\le \left\lfloor \frac{\ell }{\kappa +1}\right\rfloor \) together imply \(X_{i}^{\left( \ell \right) }>X_{\kappa +1}^{\left( \ell \right) }\). Thus, in the r.h.s. of Eq. (14), we have

and

Moreover, \(X^{(\ell )}\) follows a multinomial distribution with parameters \(\mathbf {p}\) and \(\ell \). Thus \(X_{k}^{(\ell )}=h\) implies that the remaining entries \(X_{1}^{(\ell )},\ldots ,X_{k-1}^{(\ell )}\) follow a multinomial distribution with \(l-h\) trials, and distribution \((\frac{p_{1}}{1-p_{k}}, \ldots , \frac{p_{k-1}}{1-p_{k}})\). Let \(Y^{(\ell -h)} =(Y_{1}^{(\ell -h)}, \ldots , Y_{k-1}^{(\ell -h)})\) be the distribution of \(X_{1}^{(\ell )},\ldots ,X_{k-1}^{(\ell )}\) conditional on \(X_{k}^{(\ell )}=h\). From Eq. (14) we get

Now, using the inductive hypothesis on the r.h.s. of Eq. (15) we get

where, in the last inequality, we used the fact that g is a non-increasing function w.r.t. the second argument (see Lemma 15).

It remains to show that

Let \(W_{\kappa +1}^{(\ell )}\) be a r.v. with probability distribution \(Bin(\ell ,\frac{1}{\kappa +1})\). Since \(X_{\kappa +1}^{(\ell )}\sim Bin(\ell ,p_{\kappa +1})\) with \(p_{\kappa +1}\le \frac{1}{\kappa +1}\), a standard coupling argument (see for example [22, Exercise 1.1.]), enables to show that

Hence, we can apply the central limit theorem (Lemma 14) on \(W_{\kappa +1}^{(\ell )}\), and get that, for any \(\tilde{\epsilon }\le \frac{2-\sqrt{3}}{4}\), there exists some fixed constant \(\ell _{0}\) such that, for \(\ell \ge \ell _{0}\), we have

By using Eq. (16), for \(\ell \ge \ell _{0}\) we finally get that

concluding the proof that

\(\square \)

By using Proposition 1, we can then prove Lemma 12.

Lemma 12

W.h.p., at the end of Stage 2, all nodes support the initial plurality opinion.

Proof

Let \(\delta =\Omega (\sqrt{\log n/n})\) be the bias of the opinion distribution at the beginning of a generic phase \(j<T^{\prime }\) of Stage 2. Thanks to Proposition 1, by choosing the constant \(c\) of the phase length large enough, in process \(\mathbf {P}\) we get that \( \Pr \left( {\text {maj}}_{\ell }(u)=m\right) -\Pr \left( {\text {maj}}_{\ell }(u)=i\right) \ge \alpha \delta \) for some constant \(\alpha >1\) (provided that \(\delta \le 1/2\)). Hence, by applying Lemma 16 in Appendix A with \(\theta =\frac{\alpha }{4}\delta \), we get \( \Pr ( c_{m}^{(\tau _{j})} -c_{i}^{(\tau _{j})} \le {\alpha \delta }/{2}) \le \exp ( -{(\alpha \delta )^{2}n}/{16}) \le n^{-\tilde{\alpha }} \) for some constant \(\tilde{\alpha }\) that is large enough to apply Lemma 2. Therefore, until \(\delta \ge 1/2\), in process \(\mathbf {P}\) we have that \( c_{m}^{\left( \tau _{j}\right) } -c_{i}^{\left( \tau _{j}\right) } \ge {\alpha }\delta /{2} \) holds w.h.p. From the previous equation it follows that, after \(T^{\prime }\) phases, the protocol has reached an opinion distribution with a bias greater than 1 / 2. Thus, by a direct application of Lemma 16 and Lemma 2 to \(c_{m}^{(\tau _{T^{\prime }})} -c_{i}^{(\tau _{T^{\prime }})}\), we get that, w.h.p., \(c_{m}^{(\tau _{T^{\prime }})} -c_{i}^{(\tau _{T^{\prime }})}=1\), concluding the proof. \(\square \)

Finally, the time efficiency claimed in Theorems 1 and 2 directly follows from Lemma 12, while the required memory follows from the fact that in each phase each node needs only to count how many times it has received each opinion, i.e. to count up to at most \(O(\frac{1}{\epsilon ^2}\log n)\) w.h.p.

4 On the notion of \((\epsilon , \delta )\)-majority-preserving matrix

In this section we discuss the notion of \((\epsilon , \delta )\)-m.p. noise matrix introduced by Definition 2. Let us consider Eq. (2). The matrix P represents the “perturbation” introduced by the noise, and so \((\mathbf {c}\cdot P)_{m}-(\mathbf {c}\cdot P)_{i}\) measures how much information the system is losing about the correct opinion m, in a single communication round. An \((\epsilon , \delta )\)-m.p. noise matrix is a noise matrix that preserves at least an \(\epsilon \) fraction of bias, provided the initial bias is at least \(\delta \). The \((\epsilon , \delta )\)-m.p. property essentially characterizes the amount of noise beyond which some coordination problems cannot be solved without further hypotheses on the nodes’ knowledge of the matrix P. To see why this is the case, consider an \((\epsilon , \delta )\)-m.p. noise matrix for which there is a \(\delta \)-biased opinion distribution \(\tilde{\mathbf {c}}\) such that \(\left( {\tilde{\mathbf {c}}}\cdot P\right) _{m}-({\tilde{\mathbf {c}}}\cdot P)_{i}<0\) for some opinion i. Given opinion distribution \(\tilde{\mathbf {c}}\), from each node’s perspective, opinion m does not appear to be the most frequent opinion. Indeed, the messages that are received are more likely to be i than m. Thus, plurality consensus cannot be solved from opinion distribution \(\tilde{\mathbf {c}}\).

Observe that verifying whether a given matrix P is \((\epsilon , \delta )\)-m.p. with respect to opinion m consists in checking whether for each \(i\ne m\) the value of the following linear program is at least \(\epsilon \delta \):

We now provide some negative and positive examples of \((\epsilon , \delta )\)-m.p. noise matrices. First, we note that a natural matrix property such as being diagonally dominant does not imply that the matrix is \((\epsilon , \delta )\)-m.p. For example, by multiplying the following diagonally dominant matrix by the \(\delta \)-biased opinion distribution \(\mathbf {c}= (1/2 + \delta , 1/2 - \delta , 0)^{\intercal }\), we see that it does not even preserve the majority opinion at all when \({\epsilon , \delta } < 1/6\):

On the other hand, the following natural generalization of the noise matrix in [26] (see Eq. (1)), is \((\epsilon , \delta )\)-m.p. for every \(\delta >0\) with respect to any opinion:

More generally, let P be a noise matrix such that

for some positive numbers p, \(q_u\) and \(q_l\). Since

By defining \(\epsilon = (p-q_u)/2\), we get that the last line in Eq. (18) is greater than \(\epsilon \delta \) iff \((p-q_u) \delta /2\ge (q_u - q_l)\), which gives a sufficient condition for any matrix of the form given in Eq. (17) for being \((\epsilon , \delta )\)-m.p.

5 Conclusion

In this paper, we solved the general version of rumor spreading and plurality consensus in biological systems. That is, we have solved these problems for an arbitrarily large number k of opinions. We are not aware of realistic biological contexts in which the number of opinions might be a function of the number n of individuals. Nevertheless, it could be interesting, at least from a conceptual point to view, to address rumor spreading and plurality consensus in a scenario in which the number of opinions varies with n. This appears to be a technically challenging problem. Indeed, extending the results in the extended abstract of [26] from 2 opinions to any constant number k of opinions already required to use complex tools. Yet, several of these tools do not apply if k depends on n. This is typically the case of Proposition 1. We let as an open problem the design of stochastic tools enabling to handle the scenario where \(k=k(n)\).

Notes

A series of events \(\mathcal {E}_{n}\), \(n\ge 1\), hold w.h.p. if \(\Pr (\mathcal {E}_{n})\ge 1-O(1/n^{c})\) for some \(c>0\).

We remark that, while it would be more appropriate to measure the space complexity by the number of states here (in accordance with other work which is concerned with minimizing it, such as population protocols [7] or cellular automata [35]), we make use of the memory bits for consistency with the main related work [26].

For a discussion on what happens for other values of \(\epsilon \), see “Appendix C”.

Note that, in the protocol considered in [26], the choice of each node’s new opinion in both stages is based on the first messages received. In [26], in order to relax the synchronicity assumption that nodes share a common clock, they adopt the same sample-based variant of the rule that we adopt here.

Note that, in order to sample u.a.r. one of them, u does not need to collect all the opinions it receives. A natural sampling strategy such as reservoir sampling can be used.

Note that, if \(N_{j}\) is not yet fixed, the parameters \(h_{i}\) of process \(\mathbf {P}\) associated to phase j are random variables. However, if the opinion distribution and the number of active nodes at the beginning of phase j are given, then \(h = \sum _i h_i = |N_{j} | = |M_{j} |\) is fixed.

We remark that Eq. (4) concerns the value of \(\Pr ( c_{m}^{\left( \tau _{j}\right) } -c_{i}^{\left( \tau _{j}\right) } | N_{ j})\), which is a random variable.

Recall that we are assuming that \(\ell \) is odd.

References

Abdullah, M.A., Draief, M.: Global majority consensus by local majority polling on graphs of a given degree sequence. Discrete Appl. Math. 180, 1–10 (2015)

Afek, Y., Alon, N., Barad, O., Barkai, N., Bar-Joseph, Z., Hornstein, E.: A biological solution to a fundamental distributed computing problem. Science 331(6014), 183–185 (2011)

Afek, Y., Alon, N., Bar-Joseph, Z., Cornejo, A., Haeupler, B., Kuhn, F.: Beeping a maximal independent set. Distrib. Comput. 26(4), 195–208 (2013)

Alistarh, D., Aspnes, J., Gelashvili, R.: Space-optimal majority in population protocols. In: Proceedings of the 19th Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 2221–2239 (2018)

Ame, J.-M., Rivault, C., Deneubourg, J.-L.: Cockroach aggregation based on strain odour recognition. Anim. Behav. 68(4), 793–801 (2004)

Angluin, D., Aspnes, J., Eisenstat, D., Ruppert, E.: The computational power of population protocols. Distrib. Comput. 20(4), 279–304 (2007)

Angluin, D., Aspnes, J., Eisenstat, D.: A simple population protocol for fast robust approximate majority. Distrib. Comput. 21(2), 87–102 (2008)

Aspnes, J., Ruppert, E.: An introduction to population protocols. In: Middleware for Network Eccentric and Mobile Applications. Springer, pp. 97–120 (2009)

Becchetti, L., Clementi, A., Natale, E., Pasquale, F., Silvestri, R.: Plurality consensus in the gossip model. In: Proceedings of the 26th Annual ACM-SIAM Symposium on Discrete Algorithms, SIAM, pp. 371–390 (2015)

Becchetti, L., Clementi, A., Natale, E., Pasquale, F., Silvestri, R., Trevisan, L.: Simple dynamics for plurality consensus. Distrib. Comput. 30(4), 1–14 (2016)

Ben-Shahar, O., Dolev, S., Dolgin, A., Segal, M.: Direction election in flocking swarms. In: Proceedings of the 6th International Workshop on Foundations of Mobile Computing, ACM, pp. 73–80 (2010)

Berenbrink, P., Friedetzky, T., Kling, P., Mallmann-Trenn, F., Wastell, C.: Plurality consensus in arbitrary graphs: lessons learned from load balancing. In: Proceedings of the 24th Annual European Symposium on Algorithms, vol. 57, p. 10:1–10:18 (2016)

Boczkowski, L., Korman, A., Natale, E.: Limits for Rumor Spreading in Stochastic Populations. In: Proceedings of the 9th Innovations in Theoretical Computer Science Conference, vol. 94, pp. 49:1–49:21 (2018)

Boczkowski, L., Korman, A., Natale, E.: Minimizing message size in stochastic communication patterns: fast self-stabilizing protocols with 3 bits. In: Proceedings of the 28th Annual ACM-SIAM Symposium on Discrete Algorithms, SIAM, pp. 2540–2559 (2017)

Cardelli, L., Csikász-Nagy, A.: The cell cycle switch computes approximate majority. Sci. Rep. 2, 656–656 (2011)

Chazelle, B.: Natural algorithms. In: Proceedings of the 20th Annual ACM-SIAM Symposium on Discrete Algorithms, SIAM, pp. 422–431 (2009)

Conradt, L., Roper, T.J.: Group decision-making in animals. Nature 421(6919), 155–158 (2003)

Cooper, C., Elsässer, R., Radzik, T.: The power of two choices in distributed voting. In: Automata, Languages, and Programming, vol. 8573 of Lecture Notes in Computer Science. Springer, pp. 435–446 (2014)

Demers, A., Greene, D., Hauser, C., Irish, W., Larson, J., Shenker, S., Sturgis, H., Swinehart, D., Terry, D.: Epidemic algorithms for replicated database maintenance. In: Proceedings of the 6th Annual ACM Symposium on Principles of Distributed Computing, ACM, pp. 1–12 (1987)

Doerr, B., Goldberg, L.A., Minder, L., Sauerwald, T., Scheideler, C.: Stabilizing consensus with the power of two choices. In: Proceedings of the 23th Annual ACM Symposium on Parallelism in Algorithms and Architectures, ACM, pp. 149–158 (2011)

Draief, M., Vojnovic, M.: Convergence speed of binary interval consensus. SIAM J. Control Optim. 50(3), 1087–1109 (2012)

Dubhashi, D.P., Panconesi, A.: Concentration of Measure for the Analysis of Randomized Algorithms. Cambridge University Press, Cambridge (2009)

El Gamal, A., Kim, Y.-H.: Network Information Theory. Cambridge University Press, Cambridge (2011)

Elsässer, R., Friedetzky, T., Kaaser, D., Mallmann-Trenn, F., Trinker, H.: Brief announcement: rapid asynchronous plurality consensus. In: Proceedings of the 37th ACM Symposium on Principles of Distributed Computing, ACM, pp. 363–365 (2017)

Feinerman, O., Haeupler, B., Korman, A.: Breathe before speaking: Efficient information dissemination despite noisy, limited and anonymous communication. In: Proceedings of the 34th ACM Symposium on Principles of Distributed Computing, ACM, pp. 114–123. Extended abstract of [27] (2014)

Feinerman, O., Haeupler, B., Korman, A.: Breathe before speaking: efficient information dissemination despite noisy, limited and anonymous communication. Distrib. Comput. 30(5), 1–17 (2015)

Franks, N.R., Pratt, S.C., Mallon, E.B., Britton, N.F., Sumpter, D.J.: Information flow, opinion polling and collective intelligence in house-hunting social insects. Philos. Trans. R. Soc. Lond. B Biol. Sci. 357(1427), 1567–1583 (2002)

Franks, N.R., Dornhaus, A., Fitzsimmons, J.P., Stevens, M.: Speed versus accuracy in collective decision making. Proc. Biol. Sci. 270(1532), 2457–2463 (2003)

Ghaffari, M., Parter, M.: A polylogarithmic gossip algorithm for plurality consensus. In: Proceedings of the 36th ACM Symposium on Principles of Distributed Computing, ACM, pp. 117–126 (2016)

Giakkoupis, G., Berenbrink, P., Friedetzky, T., Kling, P.: Efficient Plurality Consensus, or: the benefits of cleaning up from time to time. In: Proceedings of the 43rd International Colloquium on Automata, Languages, and Programming vol. 55, p. 136:1–136:14 (2016)

Jung, K., Kim, B.Y., Vojnović, M.: Distributed ranking in networks with limited memory and communication. In: Proceedings of the 2012 IEEE International Symposium on Information Theory, IEEE, pp. 980–984 (2012)

Karp, R., Schindelhauer, C., Shenker, S., Vocking, B.: Randomized rumor spreading. In: Proceedings of the 41st Annual Symposium on Foundations of Computer Science, IEEE, pp. 565–574 (2000)

Kempe, D., Dobra, A., Gehrke, J.: Gossip-based computation of aggregate information. In: Proceedings of the 44st Annual Symposium on Foundations of Computer Science, IEEE, pp. 482–491 (2003)

Korman, A., Greenwald, E., Feinerman, O.: Confidence sharing: an economic strategy for efficient information flows in animal groups. PLoS Comput. Biol. 10(10), e1003862–e1003862 (2014)

Land, M., Belew, R.: No perfect two-state cellular automata for density classification exists. Phys. Rev. Lett. 74(25), 5148–5150 (1995)

Mitzenmacher, M., Upfal, E.: Probability and Computing: Randomized Algorithms and Probabilistic Analysis. Cambridge University Press, Cambridge (2005)

Perron, E., Vasudevan, D., Vojnovic, M.: Using three states for binary consensus on complete graphs. In: Proceedings of 28th IEEE INFOCOM (2009)

Pittel, B.: On spreading a rumor. SIAM J. Appl. Math. 47(1), 213–223 (1987)

Robbins, H.: A remark on Stirling’s formula. Am. Math. Mon. 62, 26–29 (1955)

Seeley, T.D., Buhrman, S.C.: Group decision making in swarms of honey bees. Behav. Ecol. Sociobiol. 45(1), 19–31 (1999)

Seeley, T.D., Visscher, P.K.: Quorum sensing during nest-site selection by honeybee swarms. Behav. Ecol. Sociobiol. 56(6), 594–601 (2004)

Sumpter, D.J., Krause, J., James, R., Couzin, I.D., Ward, A.J.: Consensus decision making by fish. Curr. Biol. 18(22), 1773–1777 (2008)

Acknowledgements

Open access funding provided by Max Planck Society. We thank the anonymous reviewers of an earlier version of this work for their constructive criticisms and comments, which were of great help in improving the results and their presentation.

Author information

Authors and Affiliations

Corresponding author

Additional information

An extended abstract of this work appeared in Proceedings of the 2016 ACM Symposium on Principles of Distributed Computing (PODC’16).

P. Fraigniaud: Additional support from the ANR project DISPLEXITY and from the INRIA project GANG.

E. Natale: This work has been partly done while the author was a fellow of the Simons Institute for the Theory of Computing.

Appendices

APPENDIX

A technical tools

Lemma 13

For any integer \(r\ge 1\) it holds

Proof

By using Stirling’s approximation [39]

we have

The proof of the upper bound is analogous (swap \(e^{\frac{1}{12r+1}}\) and \(e^{\frac{1}{12r}}\) in the first inequality). \(\square \)

Lemma 14

Let \(X_{1},...,X_{n}\) be a random sample from a Bernoulli(p) distribution with \(p\in (0,1)\) constant, and let \(Z\sim N(0,1)\). It holds

Lemma 15

The function

with \(x\in \left[ 0,1\right] \) and \(y\in \left[ 1,+\infty \right) \) is non-decreasing w.r.t. x and non-increasing w.r.t. y.

Proof

To show that g(x, y) is non-decreasing w.r.t. x, observe that

for \(x< y^{ - \frac{1}{2}} < 1\), and

for \(x < y^{ - \frac{1}{2}}\).

To show that g(x, y) is non-increasing w.r.t. y, observe that this is true for \(x < y^{ - \frac{1}{2}}\). For \(x \ge y^{ - \frac{1}{2}}\), since

we have

concluding the proof. \(\square \)

Lemma 16

Let \(\left\{ X_{t}\right\} {}_{t\in [n]}\) be n i.i.d. random variables such that

with \(p+r+q=1\). It holds

Proof

Let us define the r.v.

We can apply the Chernoff-Hoeffding bound to \(Y_{t}\) (see Theorem 1.1 in [22]), obtaining

for any \(\theta \in (0,1)\). Substituting Eq. (19) we have

concluding the proof. \(\square \)

B. Removing the parity assumption on \(\ell \)

The next lemma shows that, for \(k=2\), the increment of bias at the end of each phase of Stage 2 in the process \(\mathbf {P}\) is non-decreasing in the value of \(\ell \), regardless of its parity. In particular, since Proposition 1 is proven by induction, and since the value of \(\ell \) affects only the base case, the next lemma implies also the same kind of monotonicity for general k.

Lemma 17

Let \(k=2\), \(a=1\), let \(\ell \) be odd, and let \((\mathbf {c}\cdot P)_{1}\ge (\mathbf {c}\cdot P)_{2}\). The rule of Stage 2 of the protocol is such that

Proof

To simplify notation, let \(p_{1}=(\mathbf {c}\cdot P)_{1}\) and \(p_{2}=(\mathbf {c}\cdot P)_{2}\). By definition, we have

where \(X_{1}^{(\ell )}\), \(X_{1}^{(\ell +1)}\) and \(X_{1}^{(\ell +2)}\) are binomial r.v. with probability \(p_{1}\) and number of trials \(\ell ,\)\(\ell +1\), and \(\ell +2\), respectively. We can view \(X_{1}^{(\ell )}\), \(X_{1}^{(\ell +1)}\), and \(X_{1}^{(\ell +2)}\) as the sum of \(\ell \), \(\ell +1\) and \(\ell +2\)\(Bernoulli(p_{1})\) r.v., respectively. In particular, let Y and \(Y^{'}\) be independent r.v. with distribution \(Bernoulli(p_{1})\). We can couple \(X_{1}^{(\ell )}\), \(X_{1}^{(\ell +1)}\) and \(X_{1}^{(\ell +2)}\) as follows:

and

Since \(\ell \) is odd, observe that if \(X_{1}^{(\ell )}>\left\lceil \frac{\ell }{2}\right\rceil \), then \({\text {maj}}_{\ell }(u)=1\) regardless of the value of Y, and similarly if \(X_{1}^{(\ell )}<\left\lceil \frac{\ell }{2}\right\rceil \) then \({\text {maj}}_{\ell }(u)=2\). Thus we have

As for the last two terms in the previous equation, we have that

and

Moreover, by a direct calculation one can verify that

From Eqs. (22), (23) and (24) it follows that

By plugging Eq. (25) in Eq. (21) we get

The proof that

is analogous, proving the first part of Eq. (20).

As for the second part, observe that if \(X_{1}^{(\ell +1)}>\frac{\ell +1}{2}\), then \({\text {maj}}_{\ell +2}(u)=1\) regardless of the value of \(Y^{\prime }\), and similarly if \(X_{1}^{(\ell +1)}<\frac{\ell +1}{2}\) then \({\text {maj}}_{\ell +2}(u)=2\). Observe also that

Because of the previous observations and the hypothesis that \(p_{1}\ge \frac{1}{2}\), we have that

The proof of

is the same up to the inequality in (26), whose direction is reversed because \(p_{2}\le \frac{1}{2}\). \(\square \)

C. Rumor spreading with \(\epsilon =\Theta (n^{-\frac{1}{4}-\eta })\)

In [26] it is shown that at the end of Stage 1 the bias toward the correct opinion is at least \(\epsilon ^{T+2}/2\) and, at the beginning of Stage 2, they assume a bias toward the correct opinion of \(\Omega (\sqrt{\log n/n})\). In this section, we show that, when \(\epsilon =\Theta (n^{-\frac{1}{4}-\eta })\) for some \(\eta \in (0, 1/4)\), the protocol considered by [26] and us cannot solve the rumor-spreading and the plurality consensus problem in time \(\Theta (\log n/\epsilon ^{2})\).

First, observe that when \(\epsilon =\Theta (\sqrt{\log n/n})\) the length of the first phase of Stage 1 is \(\Theta \left( \log n/\epsilon ^{2}\right) =\Omega (n\log n)\), which implies that, w.h.p., each node gets at least one message from the source during the first phase. Thus, thanks to our analysis of Stage 2 we have that when \(\epsilon =\Theta (\sqrt{\log n/n})\) the protocol effectively solves the rumor-spreading problem, w.h.p., in time \(\Theta (\log n/\epsilon ^{2})\).

In general, for \(\epsilon < n^{-1/2-\eta }\) for some constant \(\eta >0\), if we adopt the second stage right from the beginning (which means that the source node sends \(\epsilon ^{-2}\) messages), we get that, w.h.p., all nodes receive at least \(\log n /( \epsilon ^2 n )\) messages. Thus, by a direct application of Lemma 16, after the first phase we get an \(\sqrt{ \log n / n}\)-biased opinion distribution, w.h.p., and Stage 2 correctly solves the problem according to Theorem 2.

However, when \(\epsilon =\Theta (n^{-\frac{1}{4}-\eta })\) for some \(\eta >0\), from Claim 2 and Lemma 7 we have that, after phase 0 in opinion distribution \(\mathbf {c}\), at most \({\mathcal O}\left( \log n/\epsilon ^{2}\right) ={\mathcal O}(n^{\frac{1}{2}+2\eta }\log n)\) nodes are opinionated, and \(\mathbf {c}\) is \(\frac{\epsilon }{2}\)-biased. Each node that gets opinionated in phase 1 receives a message pushed from some node of \(\mathbf {c}\), and, because of the noise, the value of this message is distributed according to \(\mathbf {c}^{(\tau _{0})}\cdot P\). It follows that \(\mathbf {c}\) is an \(\epsilon ^{2}/2\)-biased opinion distribution with \(\epsilon ^{2}=n^{-\frac{1}{2}-2\eta }\) which is much smaller than the \(\Omega (\sqrt{\log n/n})\) bound required for the second stage.

We believe that no minor modification of the protocol proposed here can correctly solve the noisy rumor-spreading problem when \(\epsilon =\Theta (n^{-\frac{1}{4}-\eta })\) in time \({\mathcal O}\left( \log n/\epsilon ^2 \right) \).

Rights and permissions