Abstract

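We study the critical probability for the metastable phase transition of the two-dimensional anisotropic bootstrap percolation model with (1, 2)-neighbourhood and threshold \(r = 3\). The first order asymptotics for the critical probability were recently determined by the first and second authors. Here we determine the following sharp second and third order asymptotics:

$$\begin{aligned} p_c\big ( [L]^2,{\mathcal {N}}_{\scriptscriptstyle (1,2)},3 \big ) = \frac{(\log \log L)^2}{12 \log L} - \frac{\log \log L \, \log \log \log L}{3 \log L} + \frac{\big ( \log \frac{9}{2} + 1 \pm o(1) \big ) \log \log L}{6 \log L}. \end{aligned}$$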

We note that the second and third order terms are so large that the first order asymptotics fail to approximate \(p_c\) even for lattices of size well beyond \(10^{10^{1000}}\).

1 Introduction

1.1 Motivation and statement of the main result

Bootstrap percolation is a general name for the dynamics of monotone, two-state cellular automata on a graph G. Bootstrap percolation models with different rules and on different graphs have since their invention by Chalupa et al. [20] been applied in various contexts and the mathematical properties of bootstrap percolation are an active area of research at the intersection between probability theory and combinatorics. See for instance [1, 2, 4, 5, 8, 23, 33] and the references therein.

Motivated by applications to statistical (solid-state) physics such as the Glauber dynamics of the Ising model [26, 36] and kinetically constrained spin models [17], the underlying graph is often taken to be a d-dimensional lattice, and the initial state is usually chosen randomly.

Although some progress has recently been made in the study of very general cellular automata on lattices [12, 14, 25], attention so far has mainly focused on obtaining a very precise understanding of the metastable transition for specific simple models [4, 8, 9, 18, 23, 30, 33].

In this paper we will provide the most detailed description so far for such a model; namely, the so-called anisotropic bootstrap percolation model, defined as follows. First, given a finite set \({\mathcal {N}}\subset {\mathbb {Z}}^d {\setminus } \{0\}\) (the neighbourhood) and an integer r (the threshold), define the bootstrap operator

$$\begin{aligned} {\mathcal {B}}({\mathcal {S}}) := {\mathcal {S}}\cup \big \{ v \in {\mathbb {Z}}^d \,:\, |(v + {\mathcal {N}}) \cap {\mathcal {S}}| \geqslant r \big \} \end{aligned}$$ (1.1)

for every set \({\mathcal {S}}\subset {\mathbb {Z}}^d\). That is, viewing \({\mathcal {S}}\) as the set of “infected” sites, every site v that has at least r infected “neighbours” in \(v+{\mathcal {N}}\) becomes infected by the application of \({\mathcal {B}}\). For \(t \in {\mathbb {N}}\) let \({\mathcal {B}}^{(t)} ({\mathcal {S}}) = {\mathcal {B}}({\mathcal {B}}^{(t-1)}({\mathcal {S}}))\), where \({\mathcal {B}}^{(0)}({\mathcal {S}}) = {\mathcal {S}}\), and let \(\langle {\mathcal {S}}\rangle = \lim _{t \rightarrow \infty } {\mathcal {B}}^{(t)}({\mathcal {S}})\) denote the set of eventually infected sites. For each \(p \in (0,1)\), let \({\mathbb {P}}_p\) denote the probability measure under which the elements of the initial set \({\mathcal {S}}\subset {\mathbb {Z}}^d\) are chosen independently at random with probability p, and for each set \(\Lambda \subset {\mathbb {Z}}^d\), define the critical probability on \(\Lambda \) to be

$$\begin{aligned} p_c(\Lambda ,{\mathcal {N}},r) := \inf \Big \{ p \,:\, {\mathbb {P}}_p\big ( \Lambda \subset \langle {\mathcal {S}}\cap \Lambda \rangle \big ) \geqslant 1/2 \Big \}. \end{aligned}$$ (1.2)

If \(\Lambda \subset \langle {\mathcal {S}}\cap \Lambda \rangle \) then we say that \({\mathcal {S}}\) percolates on \(\Lambda \). We remark that since we will usually expect the probability of percolation to undergo a sharp transition around \(p_c\), the choice of the constant 1 / 2 in the definition (1.2) is not significant.

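The anisotropic bootstrap percolation model is a specific two-dimensional process in the family described above. To be precise, set \(d = 2\) and

$$\begin{aligned} {\mathcal {N}}_{\scriptscriptstyle (1,2)} := \big \{ (-2,0), (-1,0), (1,0), (2,0), (0,-1), (0,1) \big \}, \end{aligned}$$

or graphically,

$$\begin{aligned} \begin{array}{ccccc} & & \bullet & & \\ \bullet & \bullet & 0 & \bullet & \bullet \\ & & \bullet & & \end{array} \end{aligned}$$

and set \(r = 3\). The set \({\mathcal {N}}_{\scriptscriptstyle (1,2)}\) is sometimes called the “(1, 2)-neighbourhood” of the origin. See Fig. 1 for an illustration of the behaviour of the anisotropic model.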

Fig. 1 On the left: a final configuration of the anisotropic model on \([40]^2\). Note that not all stable shapes are rectangles. On the right: a final configuration on \([200]^2\) with \(p=0.085\), where the color of each site represents the time it became infected. Blue sites became infected first, red sites last (color figure online)
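The definition of \(\langle {\mathcal {S}}\rangle \) can be made concrete with a small simulation. The following is a minimal sketch, not the code used for Fig. 1: the function name `closure`, the list `N12` and the convention that sites outside a finite box stay healthy are all assumptions of this illustration.

```python
from itertools import product

# The (1,2)-neighbourhood: two sites to the left and right, one above and below.
N12 = [(-2, 0), (-1, 0), (1, 0), (2, 0), (0, -1), (0, 1)]

def closure(infected, neighbourhood, r, width, height):
    """Return the set of eventually infected sites in [0,width) x [0,height).

    Sites outside the box are treated as permanently healthy (an assumption
    of this sketch). Sites are infected one at a time rather than in
    synchronous rounds; by monotonicity the final set is the same.
    """
    infected = set(infected)
    changed = True
    while changed:
        changed = False
        for v in product(range(width), range(height)):
            if v in infected:
                continue
            hits = sum((v[0] + dx, v[1] + dy) in infected
                       for dx, dy in neighbourhood)
            if hits >= r:
                infected.add(v)
                changed = True
    return infected

# Two fully infected columns plus a few helping sites, threshold r = 3.
two_columns = {(x, y) for x in (0, 1) for y in range(6)}
full = closure(two_columns | {(3, 0), (5, 2)}, N12, 3, 6, 6)
stuck = closure(two_columns | {(3, 0)}, N12, 3, 6, 6)
print(len(full), len(stuck))
```

In this toy example the first configuration fills the whole \(6 \times 6\) box (36 sites), while the second stops after four columns (24 sites) because the last two columns contain no infected site.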

The main result of this paper is the following theorem:

Theorem 1.1

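The critical probability of the anisotropic bootstrap percolation model satisfies

$$\begin{aligned} p_c\big ( [L]^2,{\mathcal {N}}_{\scriptscriptstyle (1,2)},3 \big ) = \frac{(\log \log L)^2}{12 \log L} - \frac{\log \log L \, \log \log \log L}{3 \log L} + \frac{\big ( \log \frac{9}{2} + 1 \pm o(1) \big ) \log \log L}{6 \log L}. \end{aligned}$$ (1.3)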

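To put this theorem in context, let us recall some of the previous results obtained for bootstrap processes in two dimensions. The archetypal example of a bootstrap percolation model is the “two-neighbour model”, that is, the process with neighbourhood

$$\begin{aligned} {\mathcal {N}}= \big \{ (-1,0), (1,0), (0,-1), (0,1) \big \} \end{aligned}$$

and \(r = 2\). The strongest known bounds are due to Gravner, Holroyd, and Morris [28, 30, 37], who, building on work of Aizenman and Lebowitz [4] and Holroyd [33], proved that

$$\begin{aligned} p_c\big ( [L]^2,{\mathcal {N}},2 \big ) = \frac{\pi ^2}{18 \log L} - \Theta \bigg ( \frac{1}{(\log L)^{3/2}} \bigg ). \end{aligned}$$ (1.4)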

The anisotropic model was first studied by Gravner and Griffeath [27] in 1996. In 2007, the second and third authors [41] determined the correct order of magnitude of \(p_c\). More recently, the first and second authors [23] proved that the anisotropic model exhibits a sharp threshold by determining the first term in (1.3).

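The “Duarte model” is another anisotropic model that has been studied extensively [13, 22, 38]. The Duarte model has neighbourhood

$$\begin{aligned} {\mathcal {N}}= \big \{ (-1,0), (0,-1), (0,1) \big \} \end{aligned}$$

and \(r = 2\). The sharpest known bounds here are due to Bollobás et al. [13]:

$$\begin{aligned} p_c\big ( {\mathbb {Z}}_L^2,{\mathcal {N}},2 \big ) = \bigg ( \frac{1}{8} + o(1) \bigg ) \frac{(\log \log L)^2}{\log L}. \end{aligned}$$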

Although the Duarte model has the same first order asymptotics for \(p_c\) as the anisotropic model (up to the constant), the behaviour is very different. In particular, the Duarte model has a “drift” to the right: clusters grow only vertically and to the right. This asymmetry has severe consequences for the analysis of the model (especially for the shape of critical droplets).

The “r-neighbour model” in d dimensions generalises the standard (two-neighbour) model described above. In this model, a vertex of \({\mathbb {Z}}^d\) is infected by the process as soon as it acquires at least r already-infected nearest neighbours. Building on work of Aizenman and Lebowitz [4], Schonmann [40], Cerf and Cirillo [18], Cerf and Manzo [19], Holroyd [33] and Balogh et al. [9, 10], the following sharp threshold result for all non-trivial pairs (d, r) was obtained by Balogh et al. [8]: for every \(d \geqslant r \geqslant 2\), there exists an (explicit) constant \(\lambda (d,r) > 0\) such that

$$\begin{aligned} p_c\big ( [L]^d,r \big ) = \bigg ( \frac{\lambda (d,r) + o(1)}{\log _{\scriptscriptstyle (r-1)} L} \bigg )^{d-r+1}. \end{aligned}$$

(Here, and throughout the paper, \(\log _{\scriptscriptstyle (k)}\) denotes a k-times iterated logarithm.)

Finally, we remark that much weaker bounds (differing by a large constant factor) have recently been obtained for an extremely general class of two-dimensional models by Bollobás et al. [12], see Sect. 1.3, below. Moreover, stronger bounds (differing by a factor of \(1 + o(1)\)) were proved for a certain subclass of these models (including the two-neighbour model, but not the anisotropic model) by Duminil-Copin and Holroyd [25].

Although various other specific models have been studied (see e.g. [15, 16, 34]), in each case the authors fell very far short of determining the second term.

1.2 The bootstrap percolation paradox

In [33] Holroyd for the first time determined sharp first order bounds on \(p_c\) for the standard model, and observed that they were very far removed from numerical estimates: \(\pi ^2/18 \approx 0.55\), while the same constant was numerically determined to be \(0.245 \pm 0.015\) on the basis of simulations of lattices up to \(L = 28800\) [3]. This phenomenon became known in the literature as the bootstrap percolation paradox, see e.g. [2, 21, 28, 31].

An attempt to explain this phenomenon goes as follows: if the convergence of \(p_c\) to its first-order asymptotic value is extremely slow, while for any fixed L the transition around \(p_c\) is very sharp, then it may appear that \(p_c\) converges to a fixed value long before it actually does.

This indeed appears to be the case. The first rigorisation of the “extremely slow convergence” part of this argument appears in [28], for a model related to bootstrap percolation. Theorem 1.1 gives another unambiguous illustration of extremely slow convergence for a bootstrap percolation model: the second term in (1.3) is actually larger than the first while

$$\begin{aligned} 4 \log \log \log L > \log \log L, \end{aligned}$$

which holds for all L in the range \(66< L < 10^{2390}\). Moreover, the second term does not become negligible (smaller than \(1\%\) of the first term, say) until \(L > 10^{10^{1403}}\). On relatively small lattices, even the third term makes a significant contribution to \(p_c\): it is larger than the first term when \(L < 10^{60}\) and larger than the second term when \(L < 10^{13}\).
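The ranges quoted above can be checked numerically. The sketch below assumes that the first two terms of (1.3) are \(\frac{(\log \log L)^2}{12 \log L}\) and \(-\frac{\log \log L \, \log \log \log L}{3 \log L}\), as the heuristic with \(C_1 = \frac{1}{12}\) in Sect. 1.4 suggests, so that with \(u = \log \log L\) the second term exceeds the first exactly when \(4 \log u > u\). The helper `bisect` and all variable names are introduced here for illustration only.

```python
import math

def bisect(g, lo, hi, iters=200):
    """Root of g on [lo, hi]; assumes g(lo) and g(hi) have opposite signs."""
    sign_lo = g(lo) > 0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (g(mid) > 0) == sign_lo:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# With u = log log L, |second term| > first term  iff  4 log u > u.
crossing = lambda u: 4 * math.log(u) - u
u_low = bisect(crossing, 1.1, 2.0)
u_high = bisect(crossing, 5.0, 20.0)

L_low = math.exp(math.exp(u_low))            # lower end of the range of L
log10_L_high = math.exp(u_high) / math.log(10)   # log_10 of the upper end

# |second term| = 1% of first term  iff  4 log u = 0.01 u.
one_percent = lambda u: 4 * math.log(u) - 0.01 * u
u_neg = bisect(one_percent, 1000.0, 10000.0)
# log_10(log_10 L) for that L, computed without forming L itself.
log10_log10_L_neg = u_neg / math.log(10) - math.log10(math.log(10))

print(L_low, log10_L_high, log10_log10_L_neg)
```

Under these assumptions the three printed numbers should be compared with 66, 2390 and 1403 in the text.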

The “sharp transition” part of the argument has also been made rigorous: for the standard model, an application of the Friedgut–Kalai sharp-threshold theorem [7] tells us that the “\(\varepsilon \)-window of the transition” is

So the \(\varepsilon \)-window is much smaller than the second order asymptotics in (1.4).

For the anisotropic model a similar analysis [11] yields that the \(\varepsilon \)-window satisfies

which is again much smaller than the second and third order asymptotics in Theorem 1.1. So our analysis supports the above explanation of the bootstrap percolation paradox.

1.3 Universality

Recently, a very general family of bootstrap-type processes was introduced and studied by Bollobás et al. [14]. To define this family, let \({\mathcal {U}}= \{X_1,\ldots ,X_m\}\) be a finite collection of finite subsets of \({\mathbb {Z}}^d {\setminus } \{0\}\), and define the corresponding bootstrap operator by setting

$$\begin{aligned} {\mathcal {B}}({\mathcal {S}}) := {\mathcal {S}}\cup \big \{ v \in {\mathbb {Z}}^d \,:\, v + X \subset {\mathcal {S}}\text { for some } X \in {\mathcal {U}}\big \} \end{aligned}$$

for every set \({\mathcal {S}}\subset {\mathbb {Z}}^d\). It is not hard to see that all of the bootstrap processes described above can be encoded by such an ‘update family’ \({\mathcal {U}}\), and in fact this definition is substantially more general. The key discovery of [14] was that in two dimensions the class of such monotone cellular automata can be elegantly partitioned into three classes, each with completely different behaviour. More precisely, for every two-dimensional update family \({\mathcal {U}}\), one of the following holds:

- \({\mathcal {U}}\) is “supercritical” and has polynomial critical probability.

- \({\mathcal {U}}\) is “critical” and has poly-logarithmic critical probability.

- \({\mathcal {U}}\) is “subcritical” and has critical probability bounded away from zero.

We emphasise that the first two statements were proved in [14], but the third was proved slightly later, by Balister et al. [6]. Note that the critical class includes the two-neighbour, anisotropic and Duarte models (as well as many others, of course). For this class a much more precise result was recently obtained by Bollobás et al. [12]. In order to state this result, let us first (informally) define a two-dimensional update family to be “balanced” if its growth is asymptotically two-dimensional (like that of the two-neighbour model), and “unbalanced” if its growth is asymptotically one-dimensional (like that of the anisotropic and Duarte models). The following theorem was proved in [12].

Theorem 1.2

Let \({\mathcal {U}}\) be a critical two-dimensional bootstrap percolation update family. There exists \(\alpha = \alpha ({\mathcal {U}}) \in {\mathbb {N}}\) such that the following holds:

(a) If \({\mathcal {U}}\) is balanced, then

$$\begin{aligned} p_c\big ( {\mathbb {Z}}_L^2,{\mathcal {U}}\big ) = \Theta \bigg ( \frac{1}{(\log L)^{1/\alpha }} \bigg ). \end{aligned}$$

(b) If \({\mathcal {U}}\) is unbalanced, then

$$\begin{aligned} p_c\big ( {\mathbb {Z}}_L^2,{\mathcal {U}}\big ) = \Theta \bigg ( \frac{(\log \log L)^2}{(\log L)^{1/\alpha }} \bigg ). \end{aligned}$$

Theorem 1.2 thus justifies our view of the anisotropic model as a canonical example of an unbalanced model.

1.4 Internally filling a critical droplet

As usual in (critical) bootstrap percolation, the key step in the proof of Theorem 1.1 will be to obtain very precise bounds on the probability that a “critical droplet” R is internally filled (IF), i.e., that \(R \subset \langle {\mathcal {S}}\cap R \rangle \). We will prove the following bounds:

Theorem 1.3

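Let \(p > 0\) and \(x,y \in {\mathbb {N}}\) be such that \(1/p^2 \leqslant x \leqslant 1/p^5\) and \(\frac{1}{3p} \log \frac{1}{p} \leqslant y \leqslant \frac{1}{p} \log \frac{1}{p}\), and let R be an \(x \times y\) rectangle. Then

$$\begin{aligned} {\mathbb {P}}_p\big ( R \text { is IF} \big ) = \exp \bigg ( - \frac{1}{6p} \Big ( \log \frac{1}{p} \Big )^2 + \frac{1}{3p} \log \frac{8}{3\mathrm {e}} \, \log \frac{1}{p} + o\Big ( \frac{1}{p} \log \frac{1}{p} \Big ) \bigg ). \end{aligned}$$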

The alert reader may have noticed the following surprising fact: we obtain the first three terms of \(p_c( [L]^2, {\mathcal {N}}_{\scriptscriptstyle (1,2)}, 3 )\) in Theorem 1.1, despite only determining the first two terms of \(\log {\mathbb {P}}_p( R\) is IF) in Theorem 1.3. We will show how to formally deduce Theorem 1.1 from Theorem 1.3 in Sect. 7, but let us begin by giving a brief outline of the argument.

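To slightly simplify the calculations, let us write

$$\begin{aligned} C_1 := \frac{1}{12} \qquad \text {and} \qquad C_2 := \frac{1}{6} \log \frac{8}{3\mathrm {e}}. \end{aligned}$$

We claim (and will later prove) that \(p_c = p_c( [L]^2, {\mathcal {N}}_{\scriptscriptstyle (1,2)}, 3 )\) is essentially equal to the value of p for which the expected number of internally filled critical droplets in \([L]^2\) is equal to 1 (the idea being that a critical droplet with size as in Theorem 1.3 will keep growing indefinitely with probability very close to one). We therefore have

$$\begin{aligned} \log L = \frac{C_1}{p_c} \Big ( \log \frac{1}{p_c} \Big )^2 - \frac{C_2}{p_c} \log \frac{1}{p_c} + o\Big ( \frac{1}{p_c} \log \frac{1}{p_c} \Big ), \end{aligned}$$

and hence

$$\begin{aligned} p_c = \frac{1}{\log L} \bigg ( C_1 \Big ( \log \frac{1}{p_c} \Big )^2 - C_2 \log \frac{1}{p_c} + o\Big ( \log \frac{1}{p_c} \Big ) \bigg ). \end{aligned}$$

Iterating the right-hand side gives

$$\begin{aligned} p_c = \frac{C_1}{\log L} \Big ( \log \log L - 2 \log \log \frac{1}{p_c} - \log C_1 \Big )^2 - \frac{C_2 \log \log L}{\log L} + o\Big ( \frac{\log \log L}{\log L} \Big ). \end{aligned}$$

Upon using the approximation \(\log \log (1/p_c) \approx \log \log \log L\) and multiplying out, this reduces to

$$\begin{aligned} p_c = \frac{(\log \log L)^2}{12 \log L} - \frac{\log \log L \, \log \log \log L}{3 \log L} + \frac{\big ( \log \frac{9}{2} + 1 \pm o(1) \big ) \log \log L}{6 \log L}, \end{aligned}$$

where we used that \(-2 C_1 \log C_1 - C_2 = \frac{1}{6} \big ( \log \frac{9}{2} + 1 \big )\). This is what we hope to prove. Thus we obtain three terms for the price of two.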
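The iteration described above can also be carried out numerically. The sketch below assumes the heuristic relation \(p \log L = C_1 (\log \frac{1}{p})^2 - C_2 \log \frac{1}{p}\) with \(C_1 = \frac{1}{12}\) and \(C_2 = \frac{1}{6} \log \frac{8}{3\mathrm {e}}\), drops all error terms, and compares its fixed point with the one-, two- and three-term expansions. It says nothing about the true \(p_c\) on small lattices; the function names are hypothetical.

```python
import math

C1 = 1 / 12
C2 = math.log(8 / (3 * math.e)) / 6      # slightly negative, about -0.0032

def solve_pc(log_L, iters=200):
    """Fixed point of p = (C1 log^2(1/p) - C2 log(1/p)) / log L by iteration.

    Takes log L (natural logarithm) as input so that astronomically large
    lattices can be handled. Convergence is only expected for large log L.
    """
    p = C1 * math.log(log_L) ** 2 / log_L        # start from the first-order term
    for _ in range(iters):
        ell = math.log(1 / p)
        p = (C1 * ell ** 2 - C2 * ell) / log_L
    return p

def expansions(log_L):
    """One-, two- and three-term approximations in the form of Theorem 1.1."""
    u = math.log(log_L)                          # u = log log L
    t1 = u ** 2 / 12
    t2 = -u * math.log(u) / 3
    t3 = (math.log(4.5) + 1) * u / 6
    return t1 / log_L, (t1 + t2) / log_L, (t1 + t2 + t3) / log_L

log_L = 1e6                                      # i.e. L = exp(10^6)
p_star = solve_pc(log_L)
one, two, three = expansions(log_L)
print(p_star, one, two, three)
```

Even at \(\log L = 10^6\) the three-term expansion is still visibly different from the fixed point, which is in line with the slow convergence discussed in Sect. 1.2.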

1.5 A generalisation of the anisotropic model

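One natural way to generalise the anisotropic model is to consider, for each \(b > a \geqslant 1\), the neighbourhood

$$\begin{aligned} {\mathcal {N}}_{\scriptscriptstyle (a,b)} := \big ( \{0\} \times [-a,a] \, \cup \, [-b,b] \times \{0\} \big ) {\setminus } \{(0,0)\}. \end{aligned}$$

It follows from Theorem 1.2 that

$$\begin{aligned} p_c\big ( [L]^2,{\mathcal {N}}_{\scriptscriptstyle (a,b)},r \big ) = \Theta \bigg ( \frac{(\log \log L)^2}{(\log L)^{1/\alpha }} \bigg ), \end{aligned}$$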

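where \(\alpha = r - b\), for each \(b +1 \leqslant r \leqslant a + b\). The arguments developed in [23] can be applied to prove that the leading order behaviour of \(p_c\) for the (1, b)-model is

$$\begin{aligned} p_c\big ( [L]^2,{\mathcal {N}}_{\scriptscriptstyle (1,b)},b+1 \big ) = \bigg ( \frac{(b-1)^2}{4(b+1)} + o(1) \bigg ) \frac{(\log \log L)^2}{\log L}. \end{aligned}$$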

Combining the techniques of [23] with those introduced in this paper, it is possible to prove the following stronger bounds:

Theorem 1.4

Given \(b \geqslant 2\), set

Then

Note that in the case \(b = 2\) this reduces to Theorem 1.1. We remark that Theorem 1.4 follows from a corresponding generalisation of Theorem 1.3, with the constants \(\frac{1}{6}\) and \(\frac{1}{3} \log \frac{8}{3\mathrm {e}}\) replaced by

respectively.

We will not prove Theorem 1.4, since the proof is conceptually the same as that in the case \(b = 2\), but requires several straightforward but lengthy calculations that might obscure the key ideas of the proof. It is, however, not too hard to see where the numerical factors come from:

In the (1, b)-model a droplet keeps growing horizontally unless the \(b+1\) consecutive columns to its left and/or right contain no infected site, and it grows vertically as long as there are b sites in a “growth configuration” somewhere above and/or below. There are

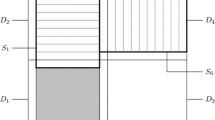

such configurations. Indeed, there are \({2b \atopwithdelims ()b}\) different ways of finding b infected sites inside \({\mathcal {N}}_{\scriptscriptstyle (1,b)} {\setminus } (\{(0,-1) ,(0,1)\})\). Of these, \(\sum _{i=2}^b {2b-i \atopwithdelims ()b} +1 = \frac{2b-1}{b+1} {2b-2 \atopwithdelims ()b} +1\) are right-shifts of another configuration (e.g. for \({\mathcal {N}}_{\scriptscriptstyle (1,2)}\) the choices \(\bullet \circ 0 \bullet \circ \) and \(\circ \bullet 0 \circ \bullet \) count as a single growth configuration), so their contribution must be subtracted. If (0, 1) is occupied, there are \({2b \atopwithdelims ()b-1}\) ways of placing the other \(b-1\) sites in \({\mathcal {N}}_{\scriptscriptstyle (1,b)} {\setminus } (\{(0,-1) ,(0,1)\})\). None of these are shift invariant, but some of them cannot grow to fill the entire row. Indeed, when \(b \geqslant 3\), configurations where \((-b,0), (0,1),\) and (b, 0) are infected do not cause the row to fill up. Therefore, we must subtract \({2b-2 \atopwithdelims ()b-3}\). This explains (1.6). See Fig. 2 for growth configurations of the case \(b = 2\). Finally, it takes \(b-1\) more infected sites for a rectangle to grow a row than it does to grow a column, which explains the remaining factors \(b-1\) in (1.5).
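The count described in this paragraph is easy to tabulate. The sketch below simply adds and subtracts the four binomial contributions in the order in which the text lists them; the function name `growth_configurations` is hypothetical, and we do not claim that (1.6) is written in this exact form.

```python
from math import comb

def growth_configurations(b):
    """Number of growth configurations for the (1, b)-model, following the text.

    horizontal: b infected sites among the 2b horizontal neighbours
    shifts:     those that are right-shifts of another configuration
    with_top:   (0, 1) occupied plus b - 1 horizontal neighbours
    blocked:    (-b, 0), (0, 1), (b, 0) infected, which cannot fill the row
    """
    horizontal = comb(2 * b, b)
    shifts = sum(comb(2 * b - i, b) for i in range(2, b + 1)) + 1
    with_top = comb(2 * b, b - 1)
    blocked = comb(2 * b - 2, b - 3) if b >= 3 else 0   # empty case for b = 2
    return horizontal - shifts + with_top - blocked

for b in range(2, 6):
    print(b, growth_configurations(b))
```

For \(b = 2\) this gives the 8 configurations of the anisotropic model. The identity \(\sum _{i=2}^b {2b-i \atopwithdelims ()b} = \frac{2b-1}{b+1} {2b-2 \atopwithdelims ()b}\) used in the text can be checked in the same way.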

1.6 Comparison with simulations

One might be tempted to hope that the third-order approximation of \(p_c\) in Theorem 1.1 is reasonably good already for lattices that a computer might be able to handle. Simulations indicate that this is not the case. Indeed, for lattices with \(L \leqslant 10{,}000\), the third-order approximation is even farther from the simulated values than the first-order approximation (and recall that the second-order approximation is negative here). We believe that this should not be surprising, because it is not at all obvious that the fourth order term should be significantly smaller: careful inspection of our proof suggests that the \(o(\frac{1}{p} \log \frac{1}{p})\) term in Theorem 1.3 is at most \(O(\frac{1}{p} \log \log \frac{1}{p})\). Although we do not prove this, we have no reason to believe that a correction term of that order does not exist. Even if we suppose that the third order correction in Theorem 1.3 can be sharply bounded by \(C_3 /p\), say, so that we would have the bound

for critical droplets instead, then a computation like the one in Sect. 1.4 above suggests that this would yield

so the fourth, fifth, and sixth order terms of \(p_c\) would also be comparable to the first for moderately sized lattices. Moreover, because of the extremely slow decay of these correction terms (e.g. \((\log \log 10^{10})^2 \approx 10\)), it might be too optimistic to expect that one would be able to determine \(C_3\) by fitting to the simulated values of \(p_c\), if indeed \(C_3\) exists.

1.7 Comparison with the two-neighbor model

Comparing Theorem 1.1 with the analogous result for the two-neighbor model, (1.4), it may seem remarkable how much sharper the former is than the latter. We believe the following heuristic discussion goes some way towards explaining this difference.

Both approximations of \(p_c\) are proved using essentially the same critical droplet heuristic described above. Once a critical droplet has formed, the entire lattice will easily fill up. But filling a droplet-sized area is exponentially unlikely: it is essentially a large deviations event. The theory of large deviations tells us that if a rare event occurs, it will occur in the most probable way that it can. For filling a droplet, this means that one should find an optimal “growth trajectory”: a sequence of dimensions from which a very small infected area (a “seed”) steadily grows to fill up the entire droplet. For the anisotropic model, in [23], the first and second authors determined this trajectory to be close to \(x = \frac{\mathrm {e}^{3py}}{3p}\), where x and y denote the horizontal and vertical dimensions of the seed as it grows. This approximation was enough to yield the first term of \(p_c\). In the current paper we establish tighter bounds on the optimal trajectory around \(x = \frac{\mathrm {e}^{3py}}{3p}\), allowing us to give the sharper estimate for the probability of filling a droplet in Theorem 1.3. As we showed in Sect. 1.4 above, this correction is enough to obtain the first three terms of \(p_c\) for the anisotropic model.

For the two-neighbor model, however, finding this optimal growth trajectory is not at all the challenge: by symmetry it is trivially \(x=y\). The correction to \(p_c\) that Gravner, Holroyd, and Morris determined in [28, 30, 37], is instead due to the much smaller entropic effect of random fluctuations around this trajectory (see also the introduction of [29] for a more detailed explanation of this effect). We believe that such fluctuations also influence \(p_c\) for the anisotropic model, but that their effect will be much smaller than the improvements that can still be made in controlling the precise shape of the optimal growth trajectory.

1.8 About the proofs

The proof of Theorem 1.1 uses a rigorisation of the iterative determination of \(p_c\) in Sect. 1.4 above, combined with Theorem 1.3 and the classical argument of Aizenman and Lebowitz [4].

The lower bound in Theorem 1.3 is a refinement of the computation in [23].

Most of the work of this paper goes into the proof of the upper bound of Theorem 1.3. Like many recent entries in the bootstrap percolation literature, our proof centers around the “hierarchies” argument of Holroyd [33]. In particular, we sharpen the argument of [23] by incorporating the idea of “good” and “bad” hierarchies from [30], and by using very precise bounds on horizontal and vertical growth of infected rectangular regions.

The main new contributions of this paper (besides the iterative determination of \(p_c\)) can be found in Sects. 3 and 6.

In Sect. 3, we introduce the notion of spanning time (Definition 3.3), which characterises to a large extent the structure of configurations of vertical growth. We show that if the spanning time is 0, then such structures have a simple description in terms of paths of infected sites, whereas if the spanning time is not 0, then this description can still be given in terms of paths, but these paths now also involve more complex arrangements of infected sites. We call such arrangements infectors (Definition 3.7), and show that they are sufficiently rare that their contribution does not dominate the probability of vertical growth.

In Sect. 6 we generalise the variational principle of Holroyd [33] to a more general class of growth trajectories. This part of the proof is intended to be more widely applicable than the current anisotropic case, and is set up to allow for precise estimates.

1.9 Notation and definitions

A rectangle \([a,b] \times [c,d]\) is the set of sites in \({\mathbb {Z}}^2\) contained in the Euclidean rectangle \([a,b] \times [c,d]\). For a finite set \({\mathcal {Q}}\subset {\mathbb {Z}}^2\), we denote its dimensions by \(({\mathbf {x}}({\mathcal {Q}}), {\mathbf {y}}({\mathcal {Q}}))\), where \({\mathbf {x}}({\mathcal {Q}}) = \max \{a_1 - b_1 +1 \, : \, \{(a_1,a_2), (b_1, b_2)\} \in {\mathcal {Q}}\times {\mathcal {Q}}\}\), and similarly, \({\mathbf {y}}({\mathcal {Q}}) = \max \{a_2 - b_2 +1\, : \, \{(a_1,a_2), (b_1, b_2)\} \in {\mathcal {Q}}\times {\mathcal {Q}}\}\). So in particular, a rectangle \(R = [a,b] \times [c,d]\) has dimensions \(({\mathbf {x}}(R), {\mathbf {y}}(R)) = (|[a,b]\, \cap \, {\mathbb {Z}}|, |[c,d] \,\cap \,{\mathbb {Z}}|)\). Oftentimes, the quantities that we calculate will only depend on the size of R, and be invariant with respect to the position of R. In such cases, when there is no possible confusion, we will write R with \({\mathbf {x}}(R)=x\) and \({\mathbf {y}}(R)=y\) as \([x] \times [y]\). A row of R is a set \(\{(m,n) \in R \,:\, n=n_0\}\) for some fixed \(n_0\). A column is similarly defined as a set \(\{(m,n) \in R \,:\, m =m_0\}\). We sometimes write \([a,b] \times \{c\}\) for the row \(\{ (m,c) \in {\mathbb {Z}}^2 \, :\, m \in [a,b] \cap {\mathbb {Z}}\}\), and use similar notation for columns.

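We say that a rectangle \(R = [a,b] \times [c,d]\) is horizontally traversable (hor-trav) by a configuration \({\mathcal {S}}\) if

$$\begin{aligned} R \subset \big \langle (R \cap {\mathcal {S}}) \cup ([a-2, a-1] \times [c,d]) \big \rangle . \end{aligned}$$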

That is, R is horizontally traversable if the rectangle becomes infected when the two columns to its left are completely infected. Under \({\mathbb {P}}_p\), this event is equiprobable to the event that \(R \subset \langle (R \cap {\mathcal {S}}) \cup ([b+1, b+2] \times [c,d])\rangle \), and more importantly, it is equivalent to the event that R does not contain three or more consecutive columns without any infected sites and the rightmost column contains an infected site.
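The column characterisation makes the probability of horizontal traversability easy to compute exactly. The sketch below assumes only that the columns of R are independently non-empty with probability \(u = 1-(1-p)^y\), and tracks the length of the current run of empty columns; the function names are hypothetical. A brute-force enumeration over all patterns of empty and non-empty columns is included as a cross-check.

```python
from itertools import product

def hor_trav_probability(x, u):
    """P(no three consecutive empty columns and the last column is non-empty)
    for x independent columns, each non-empty with probability u."""
    q = 1.0 - u
    # state[k] = probability that the current run of empty columns has length k
    state = [1.0, 0.0, 0.0]       # the two infected columns on the left: run 0
    for _ in range(x):
        state = [u * sum(state), q * state[0], q * state[1]]
    return state[0]               # the last column must be non-empty

def hor_trav_brute_force(x, u):
    """Same probability by summing over all 2^x column patterns."""
    q = 1.0 - u
    total = 0.0
    for cols in product((0, 1), repeat=x):   # 1 = column contains an infected site
        no_gap = all(any(cols[i:i + 3]) for i in range(x - 2))
        if cols[-1] == 1 and no_gap:
            k = sum(cols)
            total += u ** k * q ** (x - k)
    return total

p, y, x = 0.05, 10, 8
u = 1 - (1 - p) ** y
print(hor_trav_probability(x, u), hor_trav_brute_force(x, u))
```

For \(x = 4\), for instance, the event is that the last column is non-empty and the first three are not all empty, so the probability is \(u(1-(1-u)^3)\).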

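We say that R is up-traversable (up-trav) by \({\mathcal {S}}\) if

$$\begin{aligned} R \subset \big \langle (R \cap {\mathcal {S}}) \cup ([a,b] \times \{c-1\}) \big \rangle . \end{aligned}$$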

That is, R becomes entirely infected when all sites in the row directly below R are infected. Similarly, we say that R is down-traversable by \({\mathcal {S}}\) if \(R \subset \langle (R \cap {\mathcal {S}}) \cup ([a,b] \times \{d+1\})\rangle \). Again, under \({\mathbb {P}}_p\) up and down traversability are equiprobable, so we will only discuss up-traversability. If \({\mathcal {S}}\) is a random site percolation, then we simply say that R is horizontally- or up- or down-traversable.

Given rectangles \(R \subset R'\) we write \(\left\{ R \Rightarrow R' \right\} \) for the event that the dynamics restricted to \(R'\) eventually infect all sites of \(R'\) if all sites in R are infected, i.e., for the event that \(R' = \langle ({\mathcal {S}}\cap R') \cup R\rangle \).

We will frequently make use of two standard correlation inequalities: The first is the Fortuin–Kasteleyn–Ginibre inequality (FKG-inequality), which states that for increasing events A and B, \({\mathbb {P}}_p(A \cap B) \geqslant {\mathbb {P}}_p(A) {\mathbb {P}}_p(B)\). The second is the van den Berg–Kesten inequality (BK-inequality), which states that for increasing events A and B, \({\mathbb {P}}_p(A \circ B) \leqslant {\mathbb {P}}_p(A) {\mathbb {P}}_p(B)\), where \(A \circ B\) means that A and B occur disjointly (see [32, Chapter 2] for a more in-depth discussion).

1.10 The structure of this paper

In Sect. 2 we state two key bounds, Lemmas 2.2 and 2.3, giving primarily lower bounds on the probabilities of horizontal and vertical growth of an infected rectangular region, and we use them to prove the lower bound of Theorem 1.3. In Sect. 3 we prove a complementary upper bound on the vertical growth of infected rectangles, Lemma 3.1. In Sect. 4 we prove Lemma 4.1, which combines the upper bounds on horizontal and vertical growth from Lemmas 2.2 and 3.1. This lemma is crucial for the upper bound of Theorem 1.3. We prove the upper bound of Theorem 1.3 in Sect. 5, subject to a variational principle, Lemma 5.9, that we prove in Sect. 6. Finally, in Sect. 7 we use Theorem 1.3 to prove Theorem 1.1.

2 The lower bound of Theorem 1.3

Recall that \(C_1 = \frac{1}{12}\) and \(C_2 = \frac{1}{6} \log \frac{8}{3 \mathrm {e}}\).

Proposition 2.1

Let \(p>0\) and \(\frac{1}{p^2} \leqslant x \leqslant \frac{1}{p^5}\) and \(\frac{1}{3p} \log \frac{1}{p} \leqslant y \leqslant \frac{1}{p^5}\). Then

Note that the upper bound on y is different from the bound in Theorem 1.3.

For the proof it suffices to show that there exists a subset of configurations that has the desired probability. We choose a subset of configurations that follow a typical “growth trajectory”: configurations that contain a small area that is locally densely infected (a seed). We bound the probability that such a seed will grow a bit (which is likely), and then a lot more (which is exponentially unlikely), until the infected region reaches a size where the growth is again very likely, because the boundary of the infected region is large and the dynamics depend only on the existence of infected sites on the boundary, not on their number.

To prove this proposition we will need bounds on the probability that a rectangle becomes infected in the presence of a large infected cluster on its boundary. We state two lemmas that achieve this, which are improvements upon [23, Lemmas 2.1 and 2.2].

Lemma 2.2

For any rectangle \([x] \times [y]\),

where \(f(p,y) \,{:=}\, -\log ( \alpha (1-(1-p)^y))\) and where \(\alpha (u)\) is the positive root of the polynomial

$$\begin{aligned} X^3 - u X^2 - u(1-u) X - u(1-u)^2. \end{aligned}$$ (2.1)

Moreover, f(p, y) satisfies the following bounds:

(a) when \(p\rightarrow 0\) and \(py\rightarrow \infty \),

$$\begin{aligned} f(p,y)=\mathrm {e}^{-3py}+\Theta (\mathrm {e}^{-4py}), \end{aligned}$$

(b) when \(y \geqslant \frac{2}{p} \log \log \frac{1}{p}\),

$$\begin{aligned} f(p,y)=\mathrm {e}^{-3py}\left( 1+\Theta \left( \log ^{-2} (1/p)\right) \right) , \end{aligned}$$

(c) when \(p \rightarrow 0\), \(y \rightarrow \infty \), and \((1-p)^y \rightarrow 1\),

$$\begin{aligned} f(p,y) \geqslant \tfrac{1}{2} p y - 3 p^2 y^2. \end{aligned}$$

Proof

From [23, Lemma 2.1] we know that

When u is close to 1, \(X = \mathrm {e}^{-(1-u)^3}\) is an approximate solution for the positive root, since

So, as \(p \rightarrow 0\) and \(py \rightarrow \infty \),

This establishes (a), and (b) follows immediately.

To prove (c), recall Rouché’s Theorem (see e.g. [39, Theorem 10.43]), which states that if two functions g(z) and h(z) are holomorphic on a bounded region \(U \subset {\mathbb {C}}\) with continuous boundary \(\partial U\) and satisfy \(|g(z) - h(z)| < |g(z)|\) for all \(z \in \partial U\), then g and h have an equal number of roots on U. Applying Rouché’s Theorem with \(h(z) = a_0 + a_1 z + a_2 z^2 + a_3 z^3\) and \(g(z) = a_0\), it follows that the moduli of the roots of h(z) are all bounded from below by \(|a_0| /(|a_0| + \max \{|a_1|, |a_2|, |a_3|\})\). Applying this bound to (2.1) we find that when \(u > 0\) is sufficiently small,

where the second inequality is due to a series expansion around \(u=0\). (We remark that an explicit computation gives \(\alpha (u) \geqslant u -3u^2\) for all \(u >0\), but without relying on a computer this may take several pages to verify.) Since we assumed \((1-p)^y \rightarrow 1\) we thus have

where we used \(\frac{1}{2} py \leqslant 1-(1-p)^y \leqslant py\) for p sufficiently small. \(\square \)
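The asymptotics in part (a) can be sanity-checked numerically. The sketch below assumes that the polynomial defining \(\alpha (u)\) is \(X^3 - uX^2 - u(1-u)X - u(1-u)^2\), which is the characteristic polynomial one obtains from the rule that growth stops at three consecutive empty columns; this is our assumption and is not quoted from [23]. The root is found by bisection on (0, 1), and the function names are hypothetical.

```python
import math

def alpha(u, iters=200):
    """Positive root of X^3 - u X^2 - u(1-u) X - u(1-u)^2, for 0 < u < 1."""
    q = 1.0 - u
    poly = lambda X: X ** 3 - u * X ** 2 - u * q * X - u * q ** 2
    lo, hi = 0.0, 1.0             # poly(0) = -u q^2 < 0 and poly(1) = q^3 > 0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if poly(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def f(p, y):
    """f(p, y) = -log alpha(1 - (1-p)^y), as in Lemma 2.2."""
    return -math.log(alpha(1.0 - (1.0 - p) ** y))

p, y = 0.001, 3000                # so that p * y = 3
leading = math.exp(-3 * p * y)
ratio = f(p, y) / leading
correction = (f(p, y) - leading) / math.exp(-4 * p * y)
print(ratio, correction)
```

With these parameters the ratio is close to 1 and the rescaled correction is of order 1, as part (a) predicts under the stated assumption.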

Lemma 2.3

(a) If \(p^2x\) is sufficiently small, then we have, for any rectangle \([x] \times [y]\),

$$\begin{aligned} {\mathbb {P}}_p\left( [x] \times [y] \text { is up-trav} \right) \; \geqslant \; \exp \Big ( y \log (8p^2x)\big (1+O(p^2x + p)\big ) \Big ). \end{aligned}$$

(b) As long as \( \frac{8 p^2 x}{5} \leqslant 1\) we have

$$\begin{aligned} {\mathbb {P}}_p([x] \times [y] \text { is up-trav}) \; \geqslant \; \left( \frac{8 p^2 x}{5 \mathrm {e}} \right) ^y. \end{aligned}$$

Proof

We say that a rectangle R is North-traversable (N-trav) if the intersection of every row with R contains a site (a, b) such that \(((a,b) + {\mathcal {N}}_{\scriptscriptstyle (1,2)}){\setminus } \{(a, b-1)\}\) contains at least two infected sites. Observe that North-traversability implies up-traversability, so

We can similarly define South-traversability by requiring that the intersection of every row with R contains a site (a, b) such that \(((a,b) + {\mathcal {N}}_{\scriptscriptstyle (1,2)}){\setminus } \{(a, b+1)\}\) contains at least two infected sites. South-traversability implies down-traversability. Again, from a probabilistic point of view North- and South-traversability are equivalent, so we will henceforth only discuss North-traversability.

If \([x] \times [y]\) is North-traversable then for each of the y rows there must exist an infected pair of sites u and v and a site z in the row such that \(u,v \in z+ {\mathcal {N}}_{\scriptscriptstyle (1,2)}\). By the FKG inequality we thus have the lower bound

For the proof of (a) we apply Janson’s inequality [35]. The expected number of infected pairs immediately above an infected rectangle of width x is at least \(\mu = (8x - 16)p^2\). To see this, consider that up to translations there are 8 possible pairs of infected sites above the rectangle that can infect the whole row, see Fig. 2 above. The variance is \(\Delta = O(p^3x) \ll \mu \), so the probability that some pair is infected is at least

using the inequality \(1 - \mathrm {e}^{-x} \geqslant x - x^2\) for \(x \geqslant 0\).

For the proof of (b) we use a cruder approximation: For \((a,b) \in [x] \times [y]\) let \(A_{(a,b)}\) be the event that (a, b) is the leftmost site of an infected pair as in Fig. 2. These pairs all have width at most 5, so the probability that a row of length x does not have an infected pair can be bounded from above by

when \( \frac{8 p^2 x}{5} \leqslant 1\). The claim follows. \(\square \)
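The count of 8 infected pairs used in the proof can be confirmed by exhaustive search. The sketch below places two infected sites in the two rows above a fully infected row, runs the \(r = 3\) dynamics with the (1, 2)-neighbourhood in a small box, and collects, up to horizontal translation, the pairs that cause the row directly above to fill. The box width and the function names are choices made for this illustration.

```python
from itertools import combinations

N12 = [(-2, 0), (-1, 0), (1, 0), (2, 0), (0, -1), (0, 1)]

def fills_row(pair, width):
    """True if a fully infected row 0 plus the two sites in `pair` eventually
    infect every site of row 1. The box consists of rows 0..2 and columns
    0..width-1; sites outside the box are treated as healthy."""
    infected = {(x, 0) for x in range(width)} | set(pair)
    changed = True
    while changed:
        changed = False
        for x in range(width):
            for y in (1, 2):
                if (x, y) in infected:
                    continue
                hits = sum((x + dx, y + dy) in infected for dx, dy in N12)
                if hits >= 3:
                    infected.add((x, y))
                    changed = True
    return all((x, 1) in infected for x in range(width))

def growth_pairs(width=9):
    """Pairs of sites in rows 1 and 2 that fill row 1, modulo translation."""
    sites = [(x, y) for x in range(width) for y in (1, 2)]
    shapes = set()
    for pair in combinations(sites, 2):
        if fills_row(pair, width):
            x0 = min(x for x, _ in pair)
            shapes.add(frozenset((x - x0, y) for x, y in pair))
    return shapes

for shape in sorted(sorted(s) for s in growth_pairs()):
    print(shape)
```

Four of the resulting shapes lie entirely in the row directly above the rectangle (at horizontal distance 1, 2, 3 or 4), and four use one site in the second row.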

Proof of Proposition 2.1

We start by constructing a seed. Let \(r\, {:=} \,\lfloor \frac{2}{p} \log \log \frac{1}{p} \rfloor \) and infect sites (1, 2i) and \((2,2i+1)\) for \(2i\le r\). The probability that a rectangle \([2] \times [r]\) is a seed is \(p^r\). Note that the infected sites internally fill \([2]\times [r]\).

The growth of the seed to a rectangle of arbitrary size can be divided into three stages:

Stage 1. By Lemma 2.2(a) the probability of finding a seed of size r that will grow to size \(\left[ \mathrm {e}^{3 rp}/(3p)\right] \times [r]\) is about the same as the probability of just finding the seed, i.e.,

Stage 2. Next we bound the probability that the infected rectangle grows to size

that is, we want to bound

where \(m\,{:=} \,\frac{1}{3p} \log \frac{1}{p}\). This is the bottleneck for the growth dynamics. We bound (2.3) by considering the growth in many small steps. In each such step, the rectangle will either infect an entire row above or below it, or it will infect an entire row to the left or right of it (with the help of infected sites on the boundary of the rectangle). Because vertical growth is less probable than horizontal growth, we will consider sequences where the rectangle grows by one vertical step, from height \(\ell \) to \(\ell +1\), followed by horizontal growth that infects many columns successively, with the rectangle growing from width \(x_\ell \) to \(x_{\ell +1}\) where \( x_\ell : = \frac{\mathrm {e}^{3 \ell p}}{3p}\). That this choice is close to optimal can be seen in Sect. 6 below, where a variational principle for the upper bound of Theorem 1.3 is derived.
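As a sanity check on this choice of widths, the following minimal sketch evaluates \(x_\ell \) and m numerically. It ignores integer rounding, and the function name `growth_schedule` and the sample value of p are illustrative assumptions. It confirms that \(x_m = \frac{1}{3p^2}\), which is the width of the rectangle at the start of Stage 3, and that each vertical step multiplies the width by \(\mathrm {e}^{3p}\).

```python
import math


def growth_schedule(p):
    """Return m = log(1/p) / (3p) and the width function x_ell = e^{3 ell p} / (3p)."""
    m = math.log(1 / p) / (3 * p)

    def x(ell):
        return math.exp(3 * ell * p) / (3 * p)

    return m, x


p = 1e-3  # sample value, chosen only for illustration
m, x = growth_schedule(p)

# Width reached at height m, compared with 1 / (3 p^2).
print(x(m), 1 / (3 * p ** 2))
# Ratio of consecutive widths, compared with e^{3p}.
print(x(5) / x(4), math.exp(3 * p))
```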

Having divided the growth into steps, we can bound (2.3) from below using the FKG-inequality:

We bound these three products separately.

It follows from Lemma 2.2(a) that the horizontal growth from width \(x_\ell \) to \(x_{\ell +1}\) occurs with probability approximately \(1/\mathrm {e}\), i.e.,

Therefore,

When \(\ell \leqslant m-r\), then \(p^2 x_\ell \leqslant \log ^{-2} \frac{1}{p}\), so we can apply Lemma 2.3(a) to bound

Therefore we can bound the second product in (2.4) from below by

Using Lemma 2.3(b) we can similarly bound the third product from below by

Multiplying the bounds (2.6), (2.7), and (2.8), and using that \(m = \frac{1}{3p} \log \frac{1}{p}\), we get

Stage 3. The infected region can grow from \([\frac{1}{3p^2}] \times [m]\) to arbitrary size with good probability. Indeed, we claim that

This bound is proved in [23, proof of Proposition 2.4]. We do not repeat the proof here, but let us indicate how this bound is established: Consider the case where the cluster first grows horizontally to width \(1/p^2\). By Lemma 2.2(b) we have

Now consider the case where it grows vertically, this time to height 3m. This also occurs with probability at least \(\mathrm {e}^{-O(1/p)}\). As the infected region gets larger, the probability that it keeps growing converges to 1. The result is that (2.10) holds for any rectangle R that is large enough, as long as the dimensions of R are sufficiently balanced (which is guaranteed by the assumptions on x and y).

Now, by the FKG-inequality, we can multiply the bounds from the three stages (i.e., (2.2), (2.9), and (2.10)) to complete the proof of Proposition 2.1.\(\square \)

3 An upper bound on the probability of up-traversability

The following bound is crucial for the proof of the upper bound of Theorem 1.3. Recall from (1.1) the definition of the bootstrap operator \({\mathcal {B}}\), and recall that \({\mathcal {B}}^{(t)}({\mathcal {S}})\) is the t-th iterate of \({\mathcal {B}}\) with initial set \({\mathcal {S}}\), and that \(\langle {\mathcal {S}}\rangle = \lim _{t \rightarrow \infty } {\mathcal {B}}^{(t)}({\mathcal {S}})\). Recall that a rectangle \(R = [1,x] \times [1,y]\) is said to be up-traversable by a set \({\mathcal {S}}\) if \(R \subset \langle ({\mathcal {S}}\cap R) \cup ([1,x] \times \{0\}) \rangle \), and that we write \({\mathbb {P}}_p\) to indicate that the elements of \({\mathcal {S}}\) are chosen independently at random with probability p.
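The definitions recalled above can be made concrete with a small simulation. The sketch below is illustrative only: it assumes the neighbourhood \({\mathcal {N}}_{\scriptscriptstyle (1,2)} = \{(\pm 1,0), (\pm 2,0), (0,\pm 1)\}\) and threshold 3 from the introduction, and the function names and the example configuration are hypothetical.

```python
from itertools import product

# Assumed from the paper's introduction: the (1,2)-neighbourhood, threshold r = 3.
NEIGHBOURHOOD = [(-2, 0), (-1, 0), (1, 0), (2, 0), (0, 1), (0, -1)]
THRESHOLD = 3


def closure(initial):
    """Return <S>: iterate the bootstrap operator until no new site is infected."""
    infected = set(initial)
    if not infected:
        return infected
    xs = [a for a, _ in infected]
    ys = [b for _, b in infected]
    # With this neighbourhood and threshold, a site outside the bounding box of
    # the initial set never has three infected neighbours, so we only scan the box.
    box = list(product(range(min(xs), max(xs) + 1), range(min(ys), max(ys) + 1)))
    changed = True
    while changed:
        new = {
            (a, b)
            for (a, b) in box
            if (a, b) not in infected
            and sum((a + da, b + db) in infected for da, db in NEIGHBOURHOOD) >= THRESHOLD
        }
        infected |= new
        changed = bool(new)
    return infected


def is_up_traversable(x, y, sites):
    """Check R = [1,x] x [1,y] against <(S ∩ R) ∪ ([1,x] x {0})>."""
    rect = set(product(range(1, x + 1), range(1, y + 1)))
    bottom = {(a, 0) for a in range(1, x + 1)}
    return rect <= closure((set(sites) & rect) | bottom)


example = {(1, 1), (2, 1), (3, 2), (4, 2)}  # one pair of neighbours in each row
print(is_up_traversable(5, 2, example))
print(is_up_traversable(5, 2, {(1, 1), (2, 1)}))
```

In the first configuration each row contains two adjacent infected sites, which together with the infected row below is enough to fill the row; in the second, nothing helps the top row.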

Lemma 3.1

Let \(1 \leqslant k \ll p^{-2/5}\) and let R be a rectangle with dimensions (x, y) such that \(y < x\). Then, for p sufficiently small,

We will apply this lemma with \(\frac{1}{p} \ll y \ll \frac{1}{p} \log ^6 \frac{1}{p} \leqslant x\) and \(k = \log ^2 \frac{1}{p}\). Note that in this case the upper bound given by the lemma is not much larger than the lower bound given by Lemma 2.3. In particular, for these choices of x, y and k, the bound given by the lemma is of the form \(\big ( ( 8 + o(1)) p^2 x \big )^y\).

We begin the proof of Lemma 3.1 with the following simple but important definition: let us say that a pair of sites \({\mathcal {P}}\) is a spanning pair for the row \([a,b] \times \{c\}\) if

That is, \({\mathcal {P}}\) is a spanning pair for \([a,b] \times \{c\}\) if the row becomes infected when \({\mathcal {P}}\) and the row below it are infected. Note that for each spanning pair \({\mathcal {P}}= \{u,v\}\) there exists \(z \in [a,b] \times \{c\}\) such that \(u,v \in z + ({\mathcal {N}}_{\scriptscriptstyle (1,2)}{\setminus } \{(0,-1)\})\), and thus that any spanning pair is a translate of one of the eight pairs on the right-hand side of Fig. 2.
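The count of eight translation classes can be checked by direct enumeration. The sketch below assumes the neighbourhood \({\mathcal {N}}_{\scriptscriptstyle (1,2)} = \{(\pm 1,0), (\pm 2,0), (0,\pm 1)\}\) from the introduction; the helper `translation_class` is a hypothetical name. It lists all pairs \(\{u,v\} \subset z + ({\mathcal {N}}_{\scriptscriptstyle (1,2)}{\setminus } \{(0,-1)\})\) and records the difference \(v - u\), taking u to be the lower (and, within a row, the leftmost) site.

```python
from itertools import combinations

# Assumed from the paper's introduction: the (1,2)-neighbourhood.
NEIGHBOURHOOD = [(-2, 0), (-1, 0), (1, 0), (2, 0), (0, 1), (0, -1)]
# Neighbours of z that can help infect z once the row below z is infected.
UPPER = [d for d in NEIGHBOURHOOD if d != (0, -1)]


def translation_class(u, v):
    """Return v - u, where u is the lower site (leftmost if both share a row)."""
    u, v = sorted((u, v), key=lambda s: (s[1], s[0]))
    return (v[0] - u[0], v[1] - u[1])


# Taking z = (0, 0) loses no generality, since we only record differences.
classes = {translation_class(u, v) for u, v in combinations(UPPER, 2)}
print(len(classes), sorted(classes))
```

The ten pairs in \(z + ({\mathcal {N}}_{\scriptscriptstyle (1,2)}{\setminus } \{(0,-1)\})\) collapse to eight classes because the differences (1, 0) and (3, 0) each arise from two different pairs.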

Lemma 3.2

Let R be a rectangle such that R has \({\mathbf {x}}(R) \geqslant 2\) and \({\mathbf {y}}(R) \geqslant 1\), and let \({\mathcal {S}}\subset R\). Then R is up-traversable by \({\mathcal {S}}\) if and only if \(\langle {\mathcal {S}}\rangle \) contains a spanning pair for every row of R.

Proof

Suppose that \(R = [a,b] \times [c,d]\) with \(b-a \geqslant 1\) and \(d-c \geqslant 0\). It is easy to see that if \(\langle {\mathcal {S}}\rangle \) contains a spanning pair for every row of R, then R is up-traversable by \({\mathcal {S}}\): if \(\langle {\mathcal {S}}\rangle \) contains a spanning pair for the bottom row of R, then the whole row becomes infected, i.e., \([a,b] \times \{c\} \subset \langle {\mathcal {S}}\cup ([a,b] \times \{c-1\})\rangle \). Given that the bottom row is infected, the row above the bottom row must also become infected, since \(\langle {\mathcal {S}}\rangle \) also contains a spanning pair for it, i.e., \([a,b] \times \{c+1\} \subset \langle {\mathcal {S}}\cup ([a,b] \times \{c\})\rangle \). This argument can be repeated for all rows.

It will therefore suffice to prove that the converse holds. To do that, let \(j \in [c,d]\) be the smallest j such that \(\langle {\mathcal {S}}\rangle \) does not contain a spanning pair for the row \([a,b] \times \{j\}\). We claim that the set

is empty. Indeed, suppose that for some \(t \geqslant 1\) there exists a site v such that

Then there must be a pair of already-infected sites in \({\mathcal {N}}_{\scriptscriptstyle (1,2)}(v) \cap ([a,b] \times [j,j+1])\) at time \(t-1\). But this pair lies in \(\langle {\mathcal {S}}\rangle \), and thus is a spanning pair for the row \([a,b] \times \{j\}\), a contradiction. Now, since \(\langle {\mathcal {S}}\rangle \) does not contain a spanning pair for \([a,b] \times \{j\}\), this implies that \(R \nsubseteq \langle {\mathcal {S}}\cup ([a,b] \times \{c-1\}) \rangle \), as required.\(\square \)

We now make another important definition.

Definition 3.3

For each rectangle R such that \({\mathbf {x}}(R) \geqslant 2\) and \({\mathbf {y}}(R) \geqslant 1\), and each set \({\mathcal {S}}\subset R\) such that R is up-traversable by \({\mathcal {S}}\), let \({\mathcal {A}}({\mathcal {S}}) \subset {\mathcal {S}}\) be a minimum-size subset such that R is up-traversable by \({\mathcal {A}}({\mathcal {S}})\). (If more than one such subset exists, then choose one according to some arbitrary rule.) Define the spanning time

In words, the spanning time \(\tau \) is the first time t such that \({\mathcal {B}}^{(t)}({\mathcal {A}}({\mathcal {S}}))\) spans all rows of R. Since R is up-traversable by \({\mathcal {A}}({\mathcal {S}})\), it follows by Lemma 3.2 that \(\tau \) must be finite. However, we emphasise that it is possible that \(\tau > 0\), see Fig. 3 for some examples.

Fig. 3 Five configurations (the red and black sites) that do not have a spanning pair for the row above the dark grey row at time \(t=0\), but that create a spanning pair (the red and blue sites) at some time \(t >0\) by iteration with \({\mathcal {B}}\). The light grey sites indicate which sites must become infected to create the spanning pair. Note that in each case these sets have minimal cardinality (i.e., if we remove any black site, then iteration of \({\mathcal {B}}\) will not create the spanning pair) (color figure online)

The central idea in the proof of Lemma 3.1 is to consider the cases \(\tau = 0\) and \(\tau > 0\) separately. When \(\tau = 0\), the structure is significantly simpler than when \(\tau > 0\), which allows for a very sharp estimate. When \(\tau > 0\) more complex structures are possible, but more infected sites are required, and this allows us to use a less precise analysis.

3.1 The case \(\tau = 0\)

Given a rectangle R, let \({\mathcal {F}}_0(R)\) and \({\mathcal {F}}_+(R)\) denote the families of all minimal sets \({\mathcal {A}}\subset R\) such that R is up-traversable by \({\mathcal {A}}\) and \(\tau (R,{\mathcal {A}}) = 0\) and \(\tau (R,{\mathcal {A}})>0\), respectively. Let us write \({\mathcal {U}}_0(R)\) and \({\mathcal {U}}_+(R)\) for the upsets generated by \({\mathcal {F}}_0(R)\) and \({\mathcal {F}}_+(R)\), respectively, i.e., the collections of subsets of R that contain a set \({\mathcal {A}}\in {\mathcal {F}}_0(R)\) or \({\mathcal {A}}\in {\mathcal {F}}_+(R)\), respectively.

The following lemma gives a precise estimate of the probability that a rectangle is up-traversable and \(\tau =0\).

Lemma 3.4

Let R be a rectangle with dimensions (x, y), and let \(p \in (0,1)\). Then

We will prove Lemma 3.4 using the first moment method. To be precise, we will show that the expected number of members of \({\mathcal {F}}_0(R)\) that are contained in \({\mathcal {S}}\) is at most the right-hand side of (3.2). This will follow easily from the following lemma.

Lemma 3.5

Let R be a rectangle with dimensions (x, y), and let \(p \in (0,1)\). Then

To count the sets in \({\mathcal {F}}_0(R)\), we will need to understand their structure. We will show that each set \({\mathcal {A}}\in {\mathcal {F}}_0(R)\) can be partitioned into “paths” as follows:

Lemma 3.6

Let R be a rectangle with dimensions (x, y), and let \({\mathcal {A}}\in {\mathcal {F}}_0(R)\). Then there exists a partition \({\mathcal {A}}= A_1 \cup \cdots \cup A_r\), where \(r = |{\mathcal {A}}| - y\), with the following property: For each \(j \in [r]\), there exists an ordering \((u_1,\ldots ,u_{|A_j|})\) of the elements of \(A_j\) such that

for each \(2 \leqslant i < |A_j|\), and

See Fig. 4 for an illustration.

Proof

Since \({\mathcal {A}}\) is a minimal subset of R such that R is up-traversable by \({\mathcal {A}}\), and \(\tau (R,{\mathcal {A}}) = 0\), it follows from Definition 3.3 that \({\mathcal {A}}\) contains a spanning pair for each row of R, and hence (by minimality of \({\mathcal {A}}\)) it follows that \({\mathcal {A}}\) consists exactly of a union of spanning pairs (one pair for each row) and no other sites. Let these pairs be \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_y\), and define a graph on [y] by placing an edge between i and j if \({\mathcal {P}}_i \cap {\mathcal {P}}_j\) is non-empty. The sets \(A_1,\ldots ,A_r\) are simply (the elements of \({\mathcal {A}}\) corresponding to) the components of this graph.

Let the components of the graph be \(C_1,\ldots ,C_r\), and note first that each component is a path, since a spanning pair for row \([a,b] \times \{c\}\) is contained in \([a,b] \times [c,c+1]\). Moreover, it follows immediately from this simple fact that if \({\mathcal {P}}_i \cap {\mathcal {P}}_j\) is non-empty then \({\mathcal {P}}_i\) and \({\mathcal {P}}_j\) must be spanning pairs for adjacent rows (say, \([a,b] \times \{c\}\) and \([a,b] \times \{c+1\}\)), and that their common element must lie in \([a,b] \times \{c+1\}\).

Now, consider a component \(C_\ell = \{ i_1,\ldots , i_s \}\), set \(A_\ell = \bigcup _{j = 1}^s {\mathcal {P}}_{i_j}\), and note that \(|A_\ell | = s + 1\). Let \(A_\ell = \{u_1,\ldots ,u_{s+1}\}\), and assume (without loss of generality) that \({\mathcal {P}}_{i_j} = \{u_j,u_{j+1}\}\) for each \(j \in \{1,\dots , s\}\). It now follows from the comments above, and the definition of a spanning pair in (3.1), that

for each \(2 \leqslant i \leqslant s\), and that

as claimed. Finally, note that \(|{\mathcal {A}}| = y + r\), since \(|A_\ell | = |C_\ell | + 1\) for each \(\ell \in \{1,\dots , r\}\).

\(\square \)
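The graph construction in the proof above can be sketched in a few lines. The code below groups spanning pairs that share a site and checks the identity \(|{\mathcal {A}}| = y + r\) on a small example. The function name and the three example pairs are hypothetical, chosen only for illustration; minimality of the resulting set is not checked.

```python
def path_components(pairs):
    """Group spanning pairs that share a site; return the site sets A_1, ..., A_r."""
    groups = []
    for pair in pairs:
        merged = set(pair)
        # Merge every existing group that shares a site with this pair.
        touching = [g for g in groups if g & merged]
        for g in touching:
            groups.remove(g)
            merged |= g
        groups.append(merged)
    return groups


# One illustrative spanning pair per row, for rows 1, 2 and 3.
pairs = [
    ((1, 1), (3, 2)),    # row 1: difference (2, 1)
    ((3, 2), (4, 2)),    # row 2: difference (1, 0), shares (3, 2) with row 1
    ((10, 3), (12, 3)),  # row 3: difference (2, 0), disjoint from the others
]

components = path_components(pairs)
sites = set().union(*components)
y, r = len(pairs), len(components)
print(r, len(sites), y + r)
```

Here the pairs for rows 1 and 2 share the site (3, 2), which lies in the upper of the two rows, in line with the observation in the proof.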

Proof of Lemma 3.5

To count the sets \({\mathcal {A}}\in {\mathcal {F}}_0(R)\), let us first fix \(|{\mathcal {A}}|\), and the sizes of the sets \(A_1,\ldots ,A_r\) given by Lemma 3.6. Recall that \(r = |{\mathcal {A}}| - y\) and that \({\mathcal {A}}= A_1 \cup \cdots \cup A_r\) is a partition, and note that \(|A_j| \geqslant 2\) for each \(j \in \{1,\dots , r\}\), since \(A_j\) is a union of spanning pairs. It follows that there are exactly

ways to choose the sequence \((|A_1|,\ldots ,|A_r|)\), where we order the sets \(A_j\) so that if \(i < j\) then the top row of \(A_i\) is no higher than the bottom row of \(A_j\). (Note that this is possible because each \(A_i\) is a union of spanning pairs for some set of consecutive rows of R.) Now, we claim that there are at most

ways of choosing the elements of \(A_j\), given \(A_{j-1}\) and \(|A_j|\). Indeed, given \(A_{j-1}\) we can deduce which is the bottom row of \(A_j\), and we have at most x choices for the left-most element \(u_1\) of \(A_j\) in that row. If \(|A_j| = 2\) then (given \(u_1\)) there are exactly 8 choices for the other element \(u_2\), since \(u_2 - u_1 \in \big \{ (4,0), (3,0), (2,0), (1,0), (\pm 2,1), (\pm 1,1) \big \}\). On the other hand, if \(|A_j| \geqslant 3\), then there are at most \(4^{|A_j| - 2} \cdot 12 \leqslant 8^{|A_j| - 1}\) choices for the remaining elements of \(A_j\) (given \(u_1\)), by Lemma 3.6, as required.

Now, multiplying together the (conditional) number of choices for each set \(A_j\), it follows that

as claimed, since \(\sum _{j = 1}^r (|A_j| - 1) = y\). \(\square \)
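Two arithmetic ingredients of this count can be checked by brute force. The sketch below is illustrative and the function name is hypothetical. It enumerates the size sequences \((|A_1|,\ldots ,|A_r|)\) with \(|A_j| \geqslant 2\) and \(\sum _j (|A_j| - 1) = y\), compares their number with the standard count \(\binom{y-1}{r-1}\) of compositions of y into r positive parts, and checks the inequality \(4^{n-2} \cdot 12 \leqslant 8^{n-1}\) for \(n \geqslant 3\).

```python
from itertools import product
from math import comb


def size_sequences(y, r):
    """All (|A_1|, ..., |A_r|) with each |A_j| >= 2 and sum(|A_j| - 1) == y."""
    return [
        sizes
        for sizes in product(range(2, y + 2), repeat=r)
        if sum(n - 1 for n in sizes) == y
    ]


# The sequences are compositions of y into r positive parts, shifted by one.
for y in range(1, 7):
    for r in range(1, y + 1):
        assert len(size_sequences(y, r)) == comb(y - 1, r - 1)

# 12 * 4^(n-2) <= 8^(n-1) is equivalent to 3 <= 2^(n-1), which holds for n >= 3.
assert all(12 * 4 ** (n - 2) <= 8 ** (n - 1) for n in range(3, 40))

print(size_sequences(3, 2))
```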

Lemma 3.4 now follows by Markov’s inequality:

Proof of Lemma 3.4

Define a random variable X to be the number of sets \({\mathcal {A}}\in {\mathcal {F}}_0(R)\) that are entirely infected at time zero, i.e., that are contained in our p-random set \({\mathcal {S}}\). By Markov’s inequality and Lemma 3.5, we have

as required. \(\square \)

3.2 The case \(\tau > 0\)

In this section we analyse the event \({\mathcal {S}}\cap R \in {\mathcal {U}}_+(R)\). If R is up-traversable by \({\mathcal {S}}\), then let \({\mathcal {A}}\) again denote a subset of \({\mathcal {S}}\) of minimal cardinality such that R is up-traversable by \({\mathcal {A}}\). By Lemma 3.2 above we know that if R is up-traversable by \({\mathcal {A}}\), then there must exist a time t at which there is a spanning pair in \({\mathcal {B}}^{(t)}({\mathcal {A}})\) for each row in R. The following definition isolates the sites that are responsible for the creation of such spanning pairs.

Definition 3.7

Given \({\mathcal {S}}\) and a row \(\ell \), we say that \({\mathcal {M}}\subset {\mathcal {S}}\) is an infector of the row \(\ell \) if

- there exists a \(t \geqslant 0\) such that \({\mathcal {B}}^{(t)}({\mathcal {M}})\) contains a spanning pair for the row \(\ell \), and

- there does not exist a proper subset \({\mathcal {M}}' \subset {\mathcal {M}}\) such that there exists a \(t' \geqslant 0\) such that \({\mathcal {B}}^{(t')}({\mathcal {M}}')\) contains a spanning pair for the row \(\ell \).

We call the bottom-most left-most site in \({\mathcal {M}}\) the root of \({\mathcal {M}}\). Given \({\mathcal {S}}\) we write \({\mathbb {M}}({\mathcal {S}}, R)\) for the set of all infectors contained in \({\mathcal {S}}\) for a row of R.

Note that spanning pairs are infectors, but that many other configurations are possible: see Fig. 3 for a few examples.

Lemma 3.8

(A property of the union of infectors) Suppose \(R = [1,x] \times [1,y]\) is up-traversable by \({\mathcal {S}}\) and that \({\mathcal {A}}\) is a subset of \({\mathcal {S}}\) of minimal cardinality with the same property. For each \(\ell \in \{1,\dots ,y\}\) there exists an infector \({\mathcal {M}}_\ell \) of row \(\ell \) in \({\mathcal {S}}\) such that

Proof

Let \({\mathcal {A}}'\) be a subset of \({\mathcal {S}}\) such that R is up-traversable by \({\mathcal {A}}'\) and such that \({\mathcal {A}}'\) is a set with minimal cardinality for this property. By Lemma 3.2, the event that R is up-traversable by \({\mathcal {A}}'\) is equivalent to the event that there exists a spanning pair for each row of R after some finite number of iterations of \({\mathcal {A}}'\) by the bootstrap operator \({\mathcal {B}}\). This means that for each row \({\mathcal {A}}'\) contains at least one infector. Note that it is a priori possible that the infectors in \({\mathbb {M}}({\mathcal {A}}', R)\) overlap partially or that an infector for some row \(\ell \) is contained in an infector for a row \(\ell ' \ne \ell \). Write \(({\mathcal {M}}^{(i)})_{i=1}^{|{\mathbb {M}}({\mathcal {A}}',R)|}\) for some (arbitrary) ordered list of the infectors, and, for \(1 \leqslant s \leqslant |{\mathbb {M}}({\mathcal {A}}', R)|\) write

for the union of the sites of all the infectors except those of \({\mathcal {M}}^{(s)}\). Now suppose that there exist \(1 \leqslant s < t \leqslant |{\mathbb {M}}({\mathcal {A}}', R)|\) such that \({\mathcal {M}}^{(s)}, {\mathcal {M}}^{(t)}\) are both infectors of the same row \(\ell \) and suppose that \({\mathcal {M}}^{(s)} {\setminus } {\mathbb {M}}^\flat (s) \ne \varnothing \) and \({\mathcal {M}}^{(t)} {\setminus } {\mathbb {M}}^\flat (t) \ne \varnothing \). Then, since \({\mathcal {M}}^{(s)}\) is an infector for row \(\ell \) and the sites in \({\mathcal {M}}^{(t)} {\setminus } {\mathbb {M}}^\flat (t)\) are not needed to create a spanning pair for any other row, R is also up-traversable by the set \({\mathcal {A}}' {\setminus } ({\mathcal {M}}^{(t)} {\setminus } {\mathbb {M}}^\flat (t))\), whose cardinality is strictly smaller than that of \({\mathcal {A}}'\). This gives a contradiction. Hence, for each row \(\ell \) there can exist at most one infector \({\mathcal {M}}^{(s)}\) with the property that \({\mathcal {M}}^{(s)} {\setminus } {\mathbb {M}}^\flat (s) \ne \varnothing \). Taking their union we obtain \({\mathcal {A}}\) (i.e., \({\mathcal {A}}= {\mathcal {A}}'\)). \(\square \)

Recall that for any set \({\mathcal {Q}}\subset {\mathbb {Z}}^2\) we write \({\mathbf {x}}({\mathcal {Q}})\) and \({\mathbf {y}}({\mathcal {Q}})\) for the horizontal and vertical dimensions of that set. We split the event \(\{{\mathcal {S}}\cap R \in {\mathcal {U}}_+(R)\}\) according to whether there exists an infector \({\mathcal {M}}_\ell \) with \({\mathbf {x}}({\mathcal {M}}_\ell ) \geqslant 6k^2\) or not.

Lemma 3.9

(Wide infectors) Let \(R = [1,x] \times [1,k]\) with \(k \geqslant 3\) such that \( k \ll p^{-1}\), and let x be such that \( k^5 \ll x \leqslant p^{-2}\). Then

Proof

Write \({\mathcal {M}}_{j}\) for the first infector such that \({\mathbf {x}}({\mathcal {M}}_{j}) \geqslant 6 k^2\). Since \({\mathcal {M}}_{j} \subset [1,x] \times [1,k]\), \({\mathbf {y}}({\mathcal {M}}_{j}) \leqslant k\). Moreover, \({\mathcal {M}}_{j}\) is the minimal set responsible for the creation of the spanning pair in row j, so it must be the case that \({\mathcal {M}}_{j}\) does not have a gap of more than three consecutive columns. There are at most xk possible positions for the root of the infector. We thus bound (3.3) for the range of x and our choice of k from above by

\(\square \)

Lemma 3.10

(Small infectors) Every infector that intersects precisely one row is a single spanning pair. Up to translations, there are precisely two infectors that intersect precisely two rows and are not a single spanning pair. Both of these infectors have cardinality 4, and they span both rows that they intersect.

Proof

Let \({\mathcal {M}}_j\) be the infector for some row j. Write v for an element of the spanning pair for row j that becomes infected due to the bootstrap dynamics on \({\mathcal {M}}_j\). (It is easy to see that only one element of a spanning pair can arise after time \(t=0\), but we do not use this fact.) Suppose t is the first time such that \({\mathcal {B}}^{(t)}({\mathcal {M}}_j)\) contains a spanning pair. Because \({\mathcal {M}}_j\) is not a spanning pair, \(t \geqslant 1\). Since v becomes infected at time t, it must be the case that \(|{\mathcal {N}}_{\scriptscriptstyle (1,2)}(v) \cap {\mathcal {B}}^{(t-1)} ({\mathcal {M}}_j)| \geqslant 3\). Any configuration of three sites in \({\mathcal {N}}_{\scriptscriptstyle (1,2)}(v)\) contains a spanning pair for the row that v is in, so v cannot be in row j. By the definition of spanning pairs, (3.1), a site can either span the row that it is in, or the row below it, so v is in row \(j+1\). We conclude that there are no infectors that are not a spanning pair that intersect precisely one row.

By the same argument, if \(t \geqslant 2\), then \({\mathcal {M}}_j\) must contain a site in row \(j+2\), so only infectors that intersect two rows can have \(t=1\).

One can easily verify that the only infectors with \(t =1\) that intersect two rows are translations of the configurations \(\{(0,0), (0,1), (3,1), (4,1)\}\) and \(\{(0,0), (0,1), (-\,3,1), (-\,4,1)\}\) (see the configuration in the bottom-left corner of Fig. 3). These infectors both have cardinality 4, and span both rows they intersect. \(\square \)
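The two configurations named in the proof can be checked mechanically. The sketch below is illustrative: it assumes the neighbourhood \({\mathcal {N}}_{\scriptscriptstyle (1,2)} = \{(\pm 1,0), (\pm 2,0), (0,\pm 1)\}\) and threshold 3 from the introduction, treats the rows as long enough to contain every candidate site z, and uses hypothetical function names. It confirms that neither configuration contains a spanning pair for row 0 at time 0, that one application of \({\mathcal {B}}\) infects exactly one new site and creates such a pair, and that row 1 is spanned already at time 0.

```python
# Assumed from the paper's introduction: the (1,2)-neighbourhood, threshold r = 3.
NEIGHBOURHOOD = [(-2, 0), (-1, 0), (1, 0), (2, 0), (0, 1), (0, -1)]
UPPER = [d for d in NEIGHBOURHOOD if d != (0, -1)]
THRESHOLD = 3


def bootstrap_step(infected):
    """Apply the bootstrap operator once (synchronous update)."""
    candidates = {(a + da, b + db) for (a, b) in infected for da, db in NEIGHBOURHOOD}
    new = {
        (a, b)
        for (a, b) in candidates
        if (a, b) not in infected
        and sum((a + da, b + db) in infected for da, db in NEIGHBOURHOOD) >= THRESHOLD
    }
    return infected | new


def has_spanning_pair(infected, row):
    """Is there a site z in `row` with two infected sites in z + (N minus (0,-1))?"""
    xs = [a for a, _ in infected]
    for zx in range(min(xs) - 2, max(xs) + 3):
        hits = sum((zx + da, row + db) in infected for da, db in UPPER)
        if hits >= 2:
            return True
    return False


right = {(0, 0), (0, 1), (3, 1), (4, 1)}
left = {(0, 0), (0, 1), (-3, 1), (-4, 1)}

for config in (right, left):
    after = bootstrap_step(config)
    print(sorted(after - config), has_spanning_pair(config, 0), has_spanning_pair(after, 0))
```

In the first configuration the new site is (2, 1), which forms a spanning pair for row 0 with (0, 0); the second configuration is its mirror image.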

To analyse \({\mathbb {P}}_p({\mathcal {S}}\cap R \in {\mathcal {U}}_+(R))\) we again divide \({\mathcal {A}}\) into the maximal number of disjoint, “causally independent” pieces, to which we may apply the BK-inequality. We have seen that when \(\tau =0\) these pieces can be described as paths. When \(\tau >0\) this is still the case, but now the path structure can be found at the level of the infectors. We partition \({\mathcal {A}}\) as follows: let r be the largest integer such that there exist sets \(B_1, \dots , B_r\) that partition \({\mathcal {A}}\) (i.e., \(B_i \cap B_j = \varnothing \) for all \(i \ne j\) and \({\mathcal {A}}= \bigcup _{i=1}^r B_i\)) and such that there exist r pairs of integers \(\{(a_i, b_i)\}_{i=1}^r\) such that

- \(1 = a_1 \leqslant b_1 \leqslant a_2 \leqslant b_2 \leqslant \cdots \leqslant a_r \leqslant b_r =k\), and

- the event

$$\begin{aligned} \{[1,x] \times [a_1, b_1] \text { is up-trav by }B_1\} \circ \cdots \circ \{[1,x] \times [a_r, b_r] \text { is up-trav by } B_r\} \end{aligned}$$

occurs.

Lemma 3.11

(Path structure of \(B_1, \dots , B_r\)) Let \(R = [1,x] \times [1,y]\) and suppose that R is up-traversable by \({\mathcal {S}}\). Let \({\mathcal {A}}\) be the subset of \({\mathcal {S}}\) with minimal cardinality such that R is up-traversable by \({\mathcal {A}}\). Let \(B_1, \dots , B_r\) be the division of \({\mathcal {A}}\) into disjointly occurring pieces described above. Then the following hold:

(a) For any row \(\ell \in \{1, \dots , y\}\) there exists a unique \(i \in \{1,\dots , r\}\) such that \({\mathcal {M}}_\ell \subseteq B_i\).

(b) If \(B_i\) spans rows \(\ell , \dots , \ell +m\), then \(B_i = \cup _{j=\ell }^{\ell +m} {\mathcal {M}}_j\).

(c) If \({\mathcal {M}}_j \subseteq B_i\) and \(j < b_i\), then at least one of the following holds: \({\mathcal {M}}_j = B_i\); or there exists a \(j' < j\) such that \({\mathcal {M}}_j \subset {\mathcal {M}}_{j'} \subseteq B_i\); or \({\mathcal {M}}_j \cap {\mathcal {M}}_{j+1} \ne \varnothing \).

(d) If \({\mathcal {M}}_j \subseteq B_i\) and \(j = b_i\), then at least one of the following holds: \({\mathcal {M}}_j = B_i\); or there exists a \(j' < j\) such that \({\mathcal {M}}_j \subset {\mathcal {M}}_{j'} \subseteq B_i\); or \({\mathcal {M}}_{j-1} \cap {\mathcal {M}}_{j} \ne \varnothing \).

Proof

(a) By construction, \({\mathcal {A}}= \cup _{i=1}^r B_i\), and \(B_i \circ B_j\) occurs if \(i \ne j\). By Lemma 3.8, \({\mathcal {A}}= \cup _{\ell =1}^k {\mathcal {M}}_\ell \). Suppose that there exists an \(\ell \) such that \({\mathcal {M}}_\ell \cap B_i\ne \varnothing \) and \({\mathcal {M}}_\ell \cap B_j\ne \varnothing \) for some \(i\ne j\). Without loss of generality, we can further assume that \(a_i\leqslant \ell \leqslant b_i\). Since \({\mathcal {M}}_\ell \) is the minimal set to create a spanning pair for row \(\ell \), and since \({\mathcal {M}}_\ell \cap B_i\) is a strict subset of \({\mathcal {M}}_\ell \) (because the latter intersects \(B_j\), which is disjoint from \(B_i\) by assumption), we deduce that \(\langle {\mathcal {M}}_\ell \cap B_i\rangle \) cannot contain a spanning pair for row \(\ell \). By Lemma 3.2, this means that \([1,x]\times [a_i,b_i]\) is not up-traversable by \(B_i\), which is a contradiction.

(b) By Lemma 3.8, \({\mathcal {A}}= \cup _{i=1}^k {\mathcal {M}}_i\). Combined with (a) this gives (b).

(c) Suppose that \(B_i\) spans rows \(\ell , \dots , \ell +m\) and suppose that there exists a \(j < b_i\) such that neither \({\mathcal {M}}_j = B_i\) nor \({\mathcal {M}}_j \subset {\mathcal {M}}_{j'}\) for some \(j' < j\), and such that \({\mathcal {M}}_{j} \cap {\mathcal {M}}_{j+1} = \varnothing \). Then we can partition

$$\begin{aligned} B_i = \left( \bigcup _{s=\ell }^j {\mathcal {M}}_{s} \right) \sqcup \left( \bigcup _{t=j+1}^{\ell +m} {\mathcal {M}}_{t} \right) \,{=:}\, B_{i,1} \, \sqcup \, B_{i,2}. \end{aligned}$$

It then follows that

$$\begin{aligned} \{[1,x] \times [\ell , j] \text { is up-trav by }B_{i,1}\} \circ \{[1,x] \times [j+1, \ell +m] \text { is up-trav by } B_{i,2}\} \end{aligned}$$

occurs. This gives a contradiction, since by construction the sets \(B_1, \dots , B_r\) are the maximal partition of \({\mathcal {A}}\) with this property, so such a j does not exist. So we conclude that if \({\mathcal {M}}_j \subset B_i\) but \({\mathcal {M}}_j \ne B_i\) and \({\mathcal {M}}_j \nsubseteq {\mathcal {M}}_{j'}\) for every \(j'<j\), then \({\mathcal {M}}_{j} \cap {\mathcal {M}}_{j+1} \ne \varnothing \).

(d) The proof is identical to that of (c), mutatis mutandis.

\(\square \)

For all \(k,\ell ,m,x \in {\mathbb {N}}\), let \({\mathcal {E}}_{\ell +1, \ell +m}\) denote the event that a configuration of infected sites \({\mathcal {S}}\) has the following properties:

- \({\mathcal {S}}\cap ([1,x] \times [\ell +1, \ell + m]) \in {\mathcal {U}}_+([1,x] \times [\ell +1, \ell + m])\),

- the minimal subset \({\mathcal {A}}\) of \({\mathcal {S}}\) such that \([1,x] \times [\ell +1, \ell + m]\) is up-traversable by \({\mathcal {A}}\) cannot be divided into two or more disjointly occurring pieces, i.e., \({\mathcal {A}}= B_1\) in the construction described above,

- \(\max _{j=\ell +1}^{\ell +m} {\mathbf {x}}({\mathcal {M}}_j) < 6k^2\).

Lemma 3.12

For \(k \geqslant 3\), \(\ell + m \leqslant k\) and all \(p \in [0,1]\),

Proof

There is at least one infected site in row \(\ell +1\), and it can be at x positions.

By Lemma 3.11, the event \({\mathcal {E}}_{\ell +1, \ell +m}\) implies that \({\mathcal {A}}\) is the union of infectors that are not disjoint. Since, moreover, none of the infectors are wider than \(6k^2-1\), for each of the rows \(\ell +2,\dots , \ell +m\) we then need to have at least 1 infected site in the line-segment \([-\,6k^2-3,6k^2+3]\) directly above the infected site of the row below it. Finally, row \(\ell +m\) must also be spanned, and by Lemma 3.10 its spanning pair must already be present at time \(t=0\), so there must be another infected site in that row, in one of the four positions that can create a spanning pair for line \(\ell +m\). We thus bound

\(\square \)

Write

and

The following lemma states the key inequality for the induction:

Lemma 3.13

For \(k \geqslant 2\),

Proof

Since \({\mathcal {V}}^+_{1,k}\) occurs, \([1,x] \times [1,k]\) is up-traversable. Let \({\mathcal {A}}\) be the minimal subset of \({\mathcal {S}}\) such that \([1,x] \times [1,k]\) is up-traversable with respect to \({\mathcal {A}}\). Let \(B_1, \dots , B_r\) be the subdivision of \({\mathcal {A}}\) described above. Let \(u \in {\mathcal {A}}\) and \(v \in \langle {\mathcal {A}}\rangle {\setminus } {\mathcal {A}}\) be such that \(\{u,v\}\) form a spanning pair for the row i, while \({\mathcal {A}}\) does not contain a spanning pair for row i. At least one such pair must exist since \({\mathcal {V}}^+_{1,k}\) occurs. Let j be such that \({\mathcal {M}}_i \subseteq B_j\) (we can find such a \(B_j\) by Lemma 3.11(a)). Suppose that \(B_j\) spans exactly the rows \(\ell +1, \dots , \ell +m\) (i.e., \(a_j = \ell +1\) and \(b_j = \ell +m\)). Then, by the construction of \(B_1, \dots , B_r\) and \({\mathcal {E}}_{\ell +1, \ell +m}\) we know that

occurs for \({\mathcal {S}}\). Applying the BK-inequality and summing over \(\ell \) and m gives the asserted inequality. The sum over m starts at 2 because by Lemma 3.10, \(B_j\) must span at least two rows.\(\square \)

3.3 The proof of Lemma 3.1

To begin, assume that \(\frac{3 k^2}{p} \leqslant x \leqslant \frac{1}{p^2}\). We start by proving Lemma 3.1 for the cases where \(y \leqslant k\). More precisely, we will prove that

holds for \(k \ll p^{-1}\). We use induction. The inductive hypothesis is that (3.4) holds for \(k' \leqslant k-1\) and \( k^5 \ll x \leqslant p^{-2}\). To initialise the induction we observe that when \(k=1\) there exist four spanning pairs up to translations that intersect one row, so \({\mathbb {P}}_p({\mathcal {V}}_{1,1}) \leqslant 4p^2 x < \mathrm {e}(8p^2x + 8p)\). When \(k=2\) we use Lemma 3.10 to bound

which, combined with Lemma 3.4 yields that

when p is sufficiently small.

When \(3 \leqslant k \ll p^{-1}\), by (3.5), Lemmas 3.4, 3.9, 3.12, and 3.13, and the induction hypothesis (3.5), when p is sufficiently small,

where the second term on the right-hand side is due to Lemma 3.9, and the third and fourth correspond to the \(m=2\) and \(m\geqslant 3\) terms in Lemma 3.13.

It is not difficult to show that

When \(\frac{3 k^2}{p} \leqslant x\) we have \(12 p k^2 + 7p \leqslant \tfrac{1}{2} (8 p^2 x + 8p)\), so this implies that

Inspecting (3.6), it follows that the desired bound (3.4) holds if the following two inequalities hold for p sufficiently small:

The first inequality holds because \(k \ll x\). It is easy to verify that the second inequality holds when \(k^5 \ll x \leqslant p^{-2}\). Substituting the above inequalities into (3.6) proves the claim of Lemma 3.1 for \(y \leqslant k\).

Now we consider \(R = [1,x] \times [1,y]\) for y such that \(k< y < x\) (still assuming that \(\frac{3 k^2}{p} \leqslant x \leqslant \frac{1}{p^2}\)). We cover R with \(\lceil y/k \rceil \) rectangles of height k. If y is not divisible by k the covering “overshoots”: it includes at most \(k-1\) rows that are not in R. If R is up-traversable, and if the overshoot contains a connected upward path, then all these rectangles are also up-traversable. The probability that there is a connected path in the overshoot is at least \(p^k\). It thus follows by the BK-inequality that

where these bounds again hold for p sufficiently small. This completes the proof of Lemma 3.1 for the case \(\frac{3 k^2}{p} \leqslant x \leqslant \frac{1}{p^2}\).

The case \(x < \frac{3 k^2}{p}\) is now easy. Note that if \([1,x] \times [1,y]\) is up-traversable by \({\mathcal {S}}\), then also \([1,x+a] \times [1,y]\) for any \(a \geqslant 1\) is up-traversable by \({\mathcal {S}}\) (i.e., up-traversability is a monotone increasing event in the width of the rectangle). Hence, \({\mathbb {P}}_p([x] \times [y] \text { is up-trav})\) is a monotone increasing function in x. The bound thus follows by choosing \(x = \frac{3k^2}{p}\) and applying the bound for the case \(\frac{3 k^2}{p} \leqslant x \leqslant \frac{1}{p^2}\). \(\square \)

4 The probability of simultaneous horizontal and vertical growth

The lemma below states an upper bound on the probability of an infected rectangle growing both vertically and horizontally, i.e., an upper bound on \({\mathbb {P}}_p(R \Rightarrow R')\) for certain \(R \subset R'\).

Let

Recalling Lemma 3.1 and the bound on f(p, y) in Lemma 2.2 above, let

and let

For two rectangles \(R\subset R'\) with dimensions (x, y) and \((x+s,y+t)\), let

Observe that \(\psi \) and \(\phi \) are both positive, decreasing, and convex functions (where they are not zero).

Lemma 4.1

Let \(R \subset R'\), with dimensions (x, y) and \((x+s,y+t)\) respectively. Assume that \(t \leqslant \frac{1}{p} \log ^{-4} \frac{1}{p}\). Then, for p sufficiently small,

The proof uses a strategy similar to that of [23, Proof of Proposition 3.3]. Roughly speaking, this strategy entails that we “decorrelate” the horizontal and vertical growth events needed for \(\{R \Rightarrow R'\}\).

Proof

If \(y+t \leqslant \frac{4}{p} \log \log \frac{1}{p}\) and \(x+s > 1/p^2\), then we use the trivial bound \({\mathbb {P}}_p(R \Rightarrow R') \leqslant 1,\) corresponding to \(U^p(R,R')=0\), as required.

If \(y+t \leqslant \frac{4}{p} \log \log \frac{1}{p}\) and \(x+s \leqslant 1/p^2\), then we apply Lemma 3.1 (with \(k = \xi \)), again giving the required bound.

Therefore, we assume henceforth that \(y+t > \frac{4}{p} \log \log \frac{1}{p}\) and \(x+s \leqslant \frac{1}{p^2}\).

To start, suppose that \((1-\delta _\xi ) t \psi (x+s) > \delta _\xi s \phi (y+t)\), which corresponds to the vertical growth component t being disproportionately large compared to the horizontal growth component s. Then, we can simply ignore the horizontal growth and apply Lemma 3.1 to bound

and we are done. Therefore, let us henceforth also assume that

\[(1-\delta _\xi )\, t\, \psi (x+s) \leqslant \delta _\xi \, s\, \phi (y+t). \tag{4.5}\]

We identify five (intersecting) regions within the area \(R' {\setminus } R\): the North, South, West, and East regions \(R_n\), \(R_s\), \(R_w\), and \(R_e\), and the corner region H: for \(R'=[a_1,a_2]\times [b_1,b_2]\) and \(R=[c_1,c_2]\times [d_1,d_2]\), such that \(a_1 \leqslant c_1 < c_2 \leqslant a_2\) and \(b_1 \leqslant d_1 < d_2 \leqslant b_2\), we define the sets

see Fig. 5. Observe that

Let

Recall from Definition 3.7 above that we write \({\mathbb {M}}({\mathcal {S}},R_n)\) and \({\mathbb {M}}({\mathcal {S}},R_s)\) for the sets of infectors of \(R_n\) and \(R_s\) (the latter being a set of infectors suitably defined for down-traversability). By Lemma 3.8, we are able to determine whether E occurs by inspecting only sites in \({\mathbb {M}}({\mathcal {S}},R_n)\) and \({\mathbb {M}}({\mathcal {S}},R_s)\). So the event that \(R_w\) and \(R_e\) are horizontally traversable only depends on E through the information about the intersection of these sets with H, the region where the rectangles overlap. Define \({\mathbb {M}}^\flat _H({\mathcal {S}})\) as the set of all sites in \({\mathcal {S}}\cap H\) contained in either \({\mathbb {M}}({\mathcal {S}},R_n)\) or \({\mathbb {M}}({\mathcal {S}},R_s)\). Let Y denote the number of columns in H that contain at least one infected site in \({\mathbb {M}}^\flat _H({\mathcal {S}})\). We split

We start by bounding the first term in (4.6). Let \(F : = \{Y \leqslant s/(2\xi )\}\). We use Lemma 3.1 with \(k = \xi = \lceil \log ^2 \frac{1}{p} \rceil \) to bound

Let \({\mathfrak {R}}_n\) denote the set of all sets of subrectangles of \(R_e \cup R_w\) with heights \(y+t\), total width n, and such that each pair of rectangles in a set \({\mathfrak {r}}\in {\mathfrak {R}}_n\) is separated by at least one column. That is, for \({\mathfrak {r}}= \{{\mathfrak {r}}_i\}_{i=1}^{N({\mathfrak {r}})} \in {\mathfrak {R}}_n\) we have that \({\mathfrak {r}}\) is a collection of \(N({\mathfrak {r}})\) strictly disjoint subrectangles with \(\sum _{i=1}^{N({\mathfrak {r}})} {\mathbf {x}}({\mathfrak {r}}_i) = n\), and \({\mathbf {y}}({\mathfrak {r}}_i) = y+t\) for all \(1 \leqslant i \leqslant N({\mathfrak {r}})\). For any \({\mathfrak {r}}= \{{\mathfrak {r}}_i\}_{i=1}^{N({\mathfrak {r}})} \in {\mathfrak {R}}_n\) define the following two events:

and

that is, \(E_2({\mathfrak {r}})\) is the event that \({\mathfrak {r}}\) is the partition into the least number of rectangles of total width n that does not intersect \({\mathbb {M}}_H^\flat \), and that there is no partition of total width greater than n that also does not intersect \({\mathbb {M}}_H^\flat \). Observe that

Thus,

where we used that the sum may be restricted to \(m \leqslant s/(2\xi )\) by the conditioning on F. Now note that the events E and F can be verified by inspecting only \({\mathbb {M}}^\flat _H\), which, on the event \(E_2({\mathfrak {r}})\) is contained in \(H {\setminus } {\mathfrak {r}}\), while \(E_1({\mathfrak {r}})\) by definition only depends on the sites in \({\mathfrak {r}}\), so that conditionally on \(E_2({\mathfrak {r}})\) the event \(E_1({\mathfrak {r}})\) is independent of E and F. We may thus write

Observe that for any fixed \({\mathfrak {r}}\) the event \(E_1({\mathfrak {r}})\) is increasing. Indeed, adding more sites to \({\mathcal {S}}\) can either make horizontal traversal occur when it did not before, or else, have no effect. We claim that the event \(E_2({\mathfrak {r}})\), on the other hand, is the intersection of three decreasing events, and hence itself a decreasing event. To see this, observe that the first event in (4.8) is decreasing because adding more sites to \({\mathcal {S}}\) cannot decrease the total width of \({\mathbb {M}}_H^\flat \), since it is the union of all infectors intersecting H (not only those of minimal cardinality for a given row). The second event in (4.8) is likewise decreasing, because increasing \({\mathbb {M}}_H^\flat \) cannot decrease the minimal number of rectangles of a partition that does not intersect \({\mathbb {M}}_H^\flat \), unless it also decreases the total width of that partition. The third event is decreasing because increasing \({\mathbb {M}}_H^\flat \) cannot decrease its total width. Therefore, we may apply the FKG-inequality to obtain

and we may thus further bound the right-hand side of (4.9) by

Uniformly for any fixed \({\mathfrak {r}}\in {\mathfrak {R}}_{s-m}\) with \(m \leqslant s/(2\xi )\), by Lemma 2.2,

where the final inequality follows from Lemma 2.2(b) when p is sufficiently small. Inserting this bound in (4.9), we proceed by using that the events \(E_2({\mathfrak {r}})\) are mutually disjoint for all \({\mathfrak {r}}\) to bound

Combining (4.7) and (4.10) we bound the first term in (4.6) by \( p^{-\xi } \exp (-U^p(R,R'))\).

Now we bound the second term in (4.6). If \(Y > s/(2\xi )\) then at least \(s/(2 \xi )\) out of s columns are non-empty. The probability that a column is non-empty is \(1-(1-p)^t \leqslant 2pt\) (when p is sufficiently small). Therefore, \({\mathbb {P}}(Y > s/(2\xi )) \leqslant {\mathbb {P}}(\mathrm {Bin}(s, 2pt) > s/(2\xi ))\). We use Chernoff’s bound that \({\mathbb {P}}(\mathrm {Bin}(n, p) > q) \leqslant \mathrm {e}^{-q}\) when \(q>np\) to estimate

(here we used that \(t \leqslant \frac{1}{p} \log ^{-4} \frac{1}{p}\)). Observe that since \(\xi = \lceil \log ^2 \frac{1}{p} \rceil \), \(\delta _\xi = 1-\xi ^{-1}\), and \(\phi (y+t) > \log ^{-12} \frac{1}{p}\) by our assumption that \(y+t > \frac{4}{p} \log \log \frac{1}{p}\), we have

Now recall our assumption (4.5) that \((1-\delta _\xi ) t \psi (x+s) \leqslant \delta _\xi s \phi (y+t)\). Applying this inequality twice, it follows that

We thus have \({\mathbb {P}}_p(Y > s/(2\xi )) \leqslant \exp (-U^p(R,R'))\), as required.
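The two elementary estimates used in this step can be checked numerically. The Python sketch below compares \(1-(1-p)^t\) with 2pt and evaluates an exact binomial tail against \(\mathrm {e}^{-q}\). As an assumption of the sketch, the tail bound is only tested in the regime \(q \geqslant \mathrm {e}^2 np\), where it follows from the standard estimate \({\mathbb {P}}(\mathrm {Bin}(n,p) \geqslant q) \leqslant (\mathrm {e}np/q)^q\). All function names are hypothetical.

```python
import math


def column_nonempty_prob(p: float, t: int) -> float:
    """Probability that a column of t independent sites contains an infected site."""
    return 1.0 - (1.0 - p) ** t


def binom_tail(n: int, p: float, q: float) -> float:
    """Exact value of P(Bin(n, p) > q), computed by summing the probability mass."""
    start = math.floor(q) + 1
    return sum(
        math.comb(n, j) * p ** j * (1.0 - p) ** (n - j)
        for j in range(start, n + 1)
    )


def in_tested_regime(n: int, p: float, q: float) -> bool:
    """Regime assumed in this sketch: q >= e^2 * n * p."""
    return q >= math.e ** 2 * n * p


if __name__ == "__main__":
    p, t = 0.001, 50
    print(column_nonempty_prob(p, t), "<=", 2 * p * t)

    n, p2, q = 200, 0.01, 15
    print(in_tested_regime(n, p2, q), binom_tail(n, p2, q), "<=", math.exp(-q))
```

In the proof the relevant parameters are n = s, success probability 2pt and \(q = s/(2\xi )\), so the ratio q/(np) is of order \(\log ^2 \frac{1}{p}\) under the assumption on t, which is well inside the regime tested here.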

Applying the bounds for the two cases to (4.6) completes the proof (using the crude upper bound \(p^{-\xi } + 1 \leqslant 2 p^{- \xi }\) for p sufficiently small). \(\square \)

5 The upper bound of Theorem 1.3

Proposition 5.1

Let \(p > 0\) and \(\frac{1}{3p} \log \frac{1}{p} \leqslant y \leqslant \frac{1}{p} \log \frac{1}{p}\) and \(\frac{1}{p^2} \leqslant x \leqslant \frac{1}{p^5}\). Then

5.1 Notation and definitions

Before we proceed with the proof, we must introduce some more notation and a few definitions. Our proof uses hierarchies. The notion of hierarchies is due to Holroyd [33], and has been used throughout much of the bootstrap percolation literature since. Here we use a definition of a hierarchy that is similar to the one in [23]:

Definition 5.2

(Hierarchies).

(a) Hierarchy, seed, normal vertex, and splitter: A hierarchy \({\mathcal {H}}\) is a rooted tree with out-degrees at most three (see Footnote 11) and with each vertex v labeled by a non-empty rectangle \(R_v\) such that \(R_v\) contains all the rectangles that label the descendants of v. If the number of descendants of a vertex is 0, we call the vertex a seed (see Footnote 12). If the vertex has one descendant, we call it a normal vertex, and we write \(u\mapsto v\) to indicate that u is a normal vertex with (unique) descendant v. If the vertex has two or more descendants, we call it a splitter vertex. We write \(N({\mathcal {H}})\) for the number of vertices in the tree \({\mathcal {H}}\).

(b) Precision: A hierarchy of precision Z (with \(Z \geqslant 1\)) is a hierarchy that satisfies the following conditions:

(1) If w is a seed, then \({\mathbf {x}}(R_w) \geqslant 2\) and \({\mathbf {y}}(R_w)<2Z\), while if u is a normal vertex or a splitter, then \({\mathbf {y}}(R_u)\geqslant 2Z\).

(2) If u is a normal vertex with descendant v, then \({\mathbf {y}}(R_u)-{\mathbf {y}}(R_v) \leqslant 2Z\).

(3) If u is a normal vertex with descendant v and v is either a seed or a normal vertex, then \({\mathbf {y}}(R_u)-{\mathbf {y}}(R_v) >Z\).

(4) If u is a splitter with descendants \(v_1,\dots ,v_i\) and \(i \in \{2,3\}\), then there exists \(j\in \{1,\dots ,i\}\) such that \({\mathbf {y}}(R_{u})-{\mathbf {y}}(R_{v_j})> Z.\)

(c) Presence: Given a set of infected sites \({\mathcal {S}}\) we say that a hierarchy \({\mathcal {H}}\) is present in \({\mathcal {S}}\) if all of the following events occur disjointly:

(1) For each seed w, \(R_w = \langle R_w \cap {\mathcal {S}}\rangle \) (i.e., \(R_w\) is internally filled by \({\mathcal {S}}\)).

(2) For each normal u and every v such that \(u \mapsto v\), \(R_u = \langle (R_v \cup {\mathcal {S}}) \cap R_u \rangle \) (i.e., the event \(\{R_v \Rightarrow R_u\}\) occurs on \({\mathcal {S}}\)).

(d) Goodness: Similar to [30], we say that a seed w is large if \(Z/3 \leqslant {\mathbf {y}}(R_w) \leqslant Z\). We call a hierarchy good if it has at most \(\log ^{11} \frac{1}{p}\) large seeds, and we call it bad otherwise.
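To make the combinatorial conditions of Definition 5.2 concrete, the following Python sketch encodes a hierarchy as a tree of vertices and checks the precision conditions (b)(1) to (b)(4) and the goodness condition (d). It is a simplification under stated assumptions: each vertex stores only the dimensions of its rectangle, so the containment of labels in (a) and the presence condition (c) are not modelled. The names `Vertex`, `has_precision`, `count_large_seeds` and `is_good` are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Vertex:
    """A hierarchy vertex, storing only the dimensions of its rectangle label."""
    width: int    # x(R_v)
    height: int   # y(R_v)
    children: List["Vertex"] = field(default_factory=list)

    def kind(self) -> str:
        """Classify the vertex by its number of descendants."""
        n = len(self.children)
        if n == 0:
            return "seed"
        return "normal" if n == 1 else "splitter"


def has_precision(u: Vertex, Z: float) -> bool:
    """Check conditions (b)(1)-(4) on the subtree rooted at u."""
    if len(u.children) > 3:                       # out-degree at most three
        return False
    kind = u.kind()
    if kind == "seed":
        return u.width >= 2 and u.height < 2 * Z  # (1), seed part
    if u.height < 2 * Z:                          # (1), normal/splitter part
        return False
    drops = [u.height - v.height for v in u.children]
    if kind == "normal":
        v = u.children[0]
        if drops[0] > 2 * Z:                      # (2)
            return False
        if v.kind() in ("seed", "normal") and drops[0] <= Z:  # (3)
            return False
    elif max(drops) <= Z:                         # (4)
        return False
    return all(has_precision(v, Z) for v in u.children)


def count_large_seeds(u: Vertex, Z: float) -> int:
    """Number of seeds w in the subtree with Z/3 <= y(R_w) <= Z."""
    if not u.children:
        return int(Z / 3 <= u.height <= Z)
    return sum(count_large_seeds(v, Z) for v in u.children)


def is_good(root: Vertex, Z: float, p: float) -> bool:
    """Goodness: at most log^11(1/p) large seeds."""
    return count_large_seeds(root, Z) <= math.log(1 / p) ** 11


if __name__ == "__main__":
    seed = Vertex(width=2, height=3)
    root = Vertex(width=5, height=6, children=[seed])
    print(root.kind(), has_precision(root, Z=2), is_good(root, Z=2, p=0.1))
```

Storing dimensions rather than full rectangles keeps the sketch short; a fuller model would store the coordinates of each label and verify containment as well.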

5.2 Outline of the proof of Proposition 5.1

In this section we give the proof of Proposition 5.1 subject to Lemma 5.9 below. We prove Lemma 5.9 in Sect. 6.

Let \({\mathcal {H}}_{Z,R}\) denote a hierarchy with root R and precision Z. Let \({\mathbb {H}}_{Z,R}\) denote the set of all \({\mathcal {H}}_{Z,R}\). Likewise, let \({\mathbb {H}}_{Z,R}^{\scriptscriptstyle \mathrm {good}}\) and \({\mathbb {H}}_{Z,R}^{\scriptscriptstyle \mathrm {bad}}\) denote the subsets of good and bad hierarchies in \({\mathbb {H}}_{Z,R}\). Lastly, given a set of hierarchies \({\mathbb {H}}\) and a rectangle R, define the event

Lemma 5.3

Let R be a rectangle with \({\mathbf {x}}(R) \geqslant 2\) and let \(Z \geqslant 3\). If R is internally filled, then there exists a hierarchy \({\mathcal {H}}_{Z,R} \in {\mathbb {H}}_{Z,R}\) that is present, i.e., \({\mathcal {X}}(R; {\mathbb {H}}_{Z,R})\) occurs.

The proof of this lemma is the same as the proof of [23, Proposition 3.8] so we do not repeat it here. (But note that it does not matter that our definition of hierarchies uses “internally filled” rather than “k-occurs”.)

Throughout this paper, let

\[Z_p := \frac{1}{p} \log ^{-8} \frac{1}{p}.\]

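In accordance with the hypotheses of Proposition 5.1, we restrict ourselves to hierarchies with root label \(R_p\) of dimensions (x, y) such that

\[\frac{1}{3p} \log \frac{1}{p} \leqslant y \leqslant \frac{1}{p} \log \frac{1}{p} \quad \text {and} \quad \frac{1}{p^2} \leqslant x \leqslant \frac{1}{p^5}.\]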

For the sake of simplicity we often suppress subscripts \(Z_p\) and \(R_p\).

We bound the good and bad hierarchies separately:

We bound the second term with the following lemma:

Lemma 5.4

As p tends to 0 we have

Proof

We claim that if R is a large seed, i.e., \(Z_p/3 \leqslant {\mathbf {y}}(R) \leqslant Z_p\), then

To see that this is indeed the case we consider the cases \(x \geqslant 1/p\) and \(x < 1/p\) separately. For the case \(x \geqslant 1/p\), the bound follows from Lemma 2.2(c):

For the case \(x < 1/p\), the bound follows from Lemma 3.1 with \(k=2\) and p sufficiently small:

Now consider the event \({\mathcal {X}}(R_p; {\mathbb {H}}^{\scriptscriptstyle \mathrm {bad}})\). This event implies that there exists a hierarchy \({\mathcal {H}}\) that is present and bad, which by definition means that more than \(\log ^{11} \frac{1}{p}\) rectangles of height between \(Z_p/3\) and \(Z_p\) are internally filled disjointly. Since \(R_p\) contains at most \(\frac{1}{p^6} \log \frac{1}{p}\) sites, the probability of this event is smaller than

\(\square \)

We bound the first term of (5.1) as follows:

Now we apply the following lemma.

Lemma 5.5

The number of good hierarchies satisfies

Proof

We start by observing that any good hierarchy \({\mathcal {H}}\in {\mathbb {H}}^{\scriptscriptstyle \mathrm {good}}\) has root \(R_p\) such that \(x \leqslant \frac{1}{p^5}\) and \({\mathbf {y}}(R_p) \leqslant \frac{1}{p} \log \frac{1}{p}\) and precision \(Z_p = \frac{1}{p} \log ^{-8} \frac{1}{p}\), so its height \(h({\mathcal {H}})\) is bounded from above by

Moreover, since there are at most \(\log ^{11} \frac{1}{p}\) large seeds in a good hierarchy, the number of vertices \(N_{\mathcal {H}}\) in the hierarchy \({\mathcal {H}}\) obeys

Each vertex of a hierarchy has 0, 1, 2 or 3 descendants, so there are at most \(4^{\log ^{20} \frac{1}{p}}\) unlabelled trees corresponding to the good hierarchies. Finally, since each vertex of a hierarchy is labelled by a sub-rectangle of \(R_p\) with \({\mathbf {x}}(R_p) \leqslant p^{-5}\), the number of choices for each label is bounded from above by

so

\(\square \)
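The tree-counting step in the proof above can be illustrated by a small computation. The Python sketch below counts rooted ordered trees on n vertices with out-degrees at most three and compares the count with \(4^n\); the bound holds because such a tree is determined by the sequence of out-degrees read in depth-first order, and each entry takes one of four values. Counting ordered trees is an assumption of the sketch, and it can only overcount the unlabelled trees considered in the proof. The function names are hypothetical.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def ordered_trees(n: int) -> int:
    """Number of rooted ordered trees with n vertices and out-degrees in {0, 1, 2, 3}."""
    if n < 1:
        return 0
    # The root has k children, whose subtrees form an ordered forest on n - 1 vertices.
    return sum(ordered_forests(k, n - 1) for k in range(4))


@lru_cache(maxsize=None)
def ordered_forests(k: int, m: int) -> int:
    """Number of ordered k-tuples of such trees with m vertices in total."""
    if k == 0:
        return 1 if m == 0 else 0
    # Choose the size a of the first tree, then fill the remaining k - 1 trees.
    return sum(ordered_trees(a) * ordered_forests(k - 1, m - a) for a in range(1, m + 1))


if __name__ == "__main__":
    for n in range(1, 11):
        print(n, ordered_trees(n), 4 ** n)
```

For small n the count is far below \(4^n\), which suggests that the bound used in the proof is crude but sufficient for the purpose of Lemma 5.5.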

By Lemma 5.5 it suffices to give a uniform bound on the probability that a given hierarchy is present, if the hierarchy is good. Indeed, it remains to show that