Abstract

Chatbots, web-based artificial intelligence tools that simulate human conversation, are increasingly used to support many areas of genomic medicine. However, patient preferences for using chatbots across the range of clinical settings are unknown. We conducted a qualitative study with individuals who had undergone genetic testing for themselves or their child. Participants were asked about their preferences for using a chatbot within the genetic testing journey. Data were analyzed using thematic analysis informed by interpretive description. We interviewed 30 participants (67% female; 50% aged over 50 years). Participants considered chatbots inefficient for very simple tasks (e.g., answering FAQs) and for very complex tasks (e.g., explaining results). Chatbots were acceptable for moderately complex tasks for which participants perceived a favorable return on their investment of time and energy. In addition to achieving this “sweet spot,” participants anticipated that their comfort with chatbots would increase if the chatbot complemented, rather than replaced, usual care. Participants wanted a “safety net” (i.e., access to a clinician) for needs not addressed by the chatbot. This study provides timely insights into patients’ comfort with and perceived limitations of chatbots for genomic medicine and can inform their implementation in practice.


Introduction

Increased demand for genetic testing, coupled with constrained service delivery systems, has led to long wait times for genetic consultations (Cooksey et al. 2005; Hoskovec et al. 2018; Office of the Auditor General of Ontario 2017). Innovative strategies, such as online decision aids and patient portals, have been developed to increase the capacity and efficiency of genetic service delivery (Bombard et al. 2018; Green et al. 2001a, b; Green et al. 2004, 2005). The COVID-19 pandemic has further accelerated this shift towards digital solutions (Bombard and Hayeems 2020; Golinelli et al. 2020; Gunasekeran et al. 2021; Keesara et al. 2020). These strategies can facilitate many components of care delivery, including family history taking, phenotyping, patient education, counseling, and return of results (Bombard et al. 2018; Green et al. 2001a, b; Green et al. 2004, 2005).

Another innovative strategy that may facilitate genetic service delivery is the use of chatbots. Chatbots are web-based tools that use artificial intelligence to simulate human conversation (Ireland et al. 2021); they are currently used in many areas of medicine to support diagnostics, disease management, and treatment (Ahmed et al. 2021; Cooper and Ireland 2018; Dosovitsky et al. 2020; Gaffney et al. 2019; Ghosh et al. 2018; Greer et al. 2019; Kobori et al. 2018; Milne-Ives et al. 2020; Stein and Brooks 2017; Watson et al. 2012). In genomics, chatbots are being developed to help deliver various components of care (Heald et al. 2020; Ireland et al. 2021; Nazareth et al. 2021; Sato et al. 2021; Schmidlen et al. 2019; Siglen et al. 2021). For example, chatbots exist or are in development to support patients with pre-test counseling, result disclosure, communication with the health care team, notification of family members about results or the availability of cascade testing, and distress and anxiety (Heald et al. 2020; Ireland et al. 2021; Nazareth et al. 2021; Sato et al. 2021; Schmidlen et al. 2019, 2022). However, much of the research into chatbots has focused on their medical accuracy (Nazareth et al. 2021; Sato et al. 2021), feasibility (Heald et al. 2020), or potential impact on knowledge and decision-making (Ireland et al. 2021).

Patient acceptability of chatbots in healthcare has been studied in other disciplines, such as psychology (Easton et al. 2019; Goonesekera and Donkin 2022), oncology (Bibault et al. 2019; Chaix et al. 2019), and public health (Luk et al. 2022). A 2020 systematic review of the use of chatbots in health care found that patients generally reported high satisfaction with chatbots (Milne-Ives et al. 2020). However, research on patients’ attitudes towards, acceptance of, trust in, and comfort with chatbots within genetics is only just emerging (Nazareth et al. 2021; Siglen et al. 2021). For example, initial feedback on a genetics chatbot designed to answer questions about hereditary breast and ovarian cancer indicated that pilot testers found it trustworthy and user-friendly (Siglen et al. 2021). Another chatbot, designed to calculate hereditary cancer risk before a clinic appointment, was rated as satisfactory on a one-item scale completed by users (Nazareth et al. 2021). A chatbot that collects family health histories from underserved patients received high satisfaction ratings, and participants considered it easy to use and follow (Wang et al. 2015). Another chatbot, designed for patients with heritable heart disease or cancer, was assessed through focus groups. Participants were highly supportive of two functions: obtaining consent, and interacting with their health care providers to discuss care coordination after return of results (Schmidlen et al. 2019). Participants were generally supportive of a third function, contacting at-risk family members about cascade testing, but this support depended on characteristics of the intended recipient, such as age and current health status (Schmidlen et al. 2019). The same chatbot was also evaluated for its role in sharing genetic test results and cascade testing information with family members, and for those family members’ subsequent engagement with the chatbot; these findings indicated that the chatbot was a viable tool for sharing information and supporting cascade testing (Schmidlen et al. 2022). While these studies provide preliminary evidence of positive user experiences and patient satisfaction with chatbots, evaluation has been limited to information provision or triage functions, with a focus on adult cancer or screening populations (Nazareth et al. 2021; Schmidlen et al. 2019, 2022; Siglen et al. 2021; Wang et al. 2015). A more in-depth understanding of the appropriateness of these tools, including evidence on diverse users’ needs and preferences in non-cancer and non-screening settings, is required to develop patient-centered digital tools for a range of settings.

To address this gap, we aimed to better understand patients’ preferences towards and opinions about the appropriate uses of a chatbot across all encounters that comprise the genetic testing journey, from initial consultation to result disclosure.

Methods

Design

We conducted a qualitative study using semi-structured interviews to understand the preferences of adult patients and parents of pediatric patients regarding the use of a chatbot within the genetic testing journey. We chose thematic analysis as our qualitative analytic method for its flexibility and usefulness in understanding research participants’ lived experiences with the genetic counseling and testing journey (Braun and Clarke 2022).

Research ethics approval was obtained from the Unity Health Toronto Research Ethics Board (REB# 20–143). All participants provided informed verbal consent.

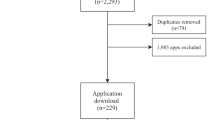

Setting, sample, and recruitment

Potential participants were either members of the Canadian Organization for Rare Disorders (CORD) listserv, who were sent an invitation email and one reminder email by CORD (Canadian Organization for Rare Disorders 2019), or previous participants in a Toronto-based cancer genetics study who had agreed to be recontacted for future research. We sampled purposefully across Canada to maximize the geographic diversity of potential participants. To be eligible, participants had to be proficient in English, and they or their child had to have previously undergone genetic testing of any type, provided the testing was intended to understand the etiology of a presenting condition. Participants were offered a $20 gift card in appreciation of their participation. Interested individuals contacted the study team and were screened to confirm eligibility. Participants were recruited between January and July 2021 as part of a larger needs assessment to inform the development of the Genetics Navigator, a digital patient-facing genetics platform that integrates chatbot technology (Bombard and Hayeems 2020).

Data collection

A semi-structured interview guide was developed by the study team and informed by relevant literature (Nadarzynski et al. 2019; Schmidlen et al. 2019; see supplementary material). During individual interviews, participants were asked to share their opinions on the acceptability of, and their comfort with, using chatbot technology in genetics. Specifically, participants were asked to share (i) their general impressions of chatbots, (ii) the spectrum of tasks they believed would be best suited to chatbots, and (iii) their thoughts on the ideal integration points for chatbots within the genetic counseling and testing journey. When the term ‘chatbot’ was unfamiliar or unclear to participants, the researchers provided a standard definition (i.e., a conversational agent that can communicate via text or audio) to ensure comprehension (Ireland et al. 2021).

Four members of the research team (SL: research project manager, AS: research assistant, WL: research genetic counselor, and MC: research program manager) conducted the interviews. All interviewers held a master’s-level degree, had previous experience conducting qualitative interviews, and had no prior relationship with their interviewees. Three of the four interviewers were female. Each interviewer’s first two interviews were conducted with another research team member in attendance to optimize consistency across interviewers. After the first few interviews, the interview guide was adjusted to improve clarity, add probing questions, and build on emerging themes. Interviews continued until the team agreed that the collected data provided rich responses to the main research questions. Interviews were conducted by telephone or video conference at a time convenient for the participant. All interviews were digitally recorded and transcribed verbatim. Interview field notes were shared verbally with the larger research team. A short demographics survey was administered. Each participant was interviewed once and was offered the opportunity to provide additional feedback and to review their transcript on request.

Data analysis

Reflexive thematic analysis was used to iteratively analyze interview transcripts (Braun and Clarke 2022). Five members of the research team (SL, MC, AS, SK, EAW) met regularly to review the data and share impressions. The initial coding scheme was informed by these preliminary analysis discussions, field notes, and the interview guide, and was developed in regular consultation with the larger study team. The five analysts applied the initial coding scheme to four transcripts. Emergent findings were discussed, which led to further refinements of the coding scheme. Once the scheme was finalized, four more interviews were coded in smaller groups of two or three using Dedoose software (version 9.0.17; SocioCultural Research Consultants, Los Angeles, 2021). Further refinements were managed through group discussion. The remaining interviews were coded by AS in close consultation with SL and MC. Throughout and after the coding process, the analysts met to share impressions, discuss patterns in the data, and create preliminary themes. Analysts often used diagrams and flowcharts to represent main ideas in these discussions. Analysts applied the preliminary themes to the coded data and modified some themes to represent the data more accurately. Through group discussion, themes were defined and named. The analysts then shared the themes with the larger study team, which led to further refinement.

Results

Demographic characteristics

In total, 30 participants who had previously received genetic testing for themselves (n = 17) or their child (n = 13) were interviewed. Of these, 20 (67%) identified as female and 10 (33%) identified as male. Twenty participants (67%) had at least a university or college degree. All participants were 30 years of age or older, and exactly half were over 50 years of age. Almost three-quarters (n = 22) lived in an urban center (Table 1). Interviews ranged from 34 min 58 s to 68 min 15 s in length, with a mean duration of 53 min 16 s.

Overview of results

Participants expressed an initial reluctance to use chatbots, drawing on past “frustrating” experiences with this technology in e-commerce or as virtual assistants. However, participants acknowledged that, if designed appropriately and if a “safety net” (i.e., a health care provider) was available as needed, chatbots could enhance the experience of genetic testing. When speaking with a chatbot, participants wanted the ability to exit and request contact with their clinician as needed. Knowing that a clinician was available to them and remained an integral part of their care increased participants’ comfort with using chatbots. In addition to the safety net requirement, participants’ acceptance of this technology depended on whether the payoff they would receive from the chatbot exceeded the effort required to use it. In most cases, if the task asked of the chatbot was too simple or too complex, participants indicated that the energy invested in interacting with a chatbot was not worth the payoff. However, engaging a chatbot was considered useful when participants perceived a favorable return on their investment of time and energy, usually for tasks of moderate complexity. We named this area of acceptability the “sweet spot.” Three overarching themes are presented in detail below, with illustrative participant quotes throughout. Additional data are presented in Table 2.

Initial reluctance to chatbots

Some participants were not familiar with the term ‘chatbot.’ However, once the interviewer provided a definition, all participants indicated familiarity with this technology. Several participants initially expressed hesitation about using chatbots. For some, this was due to “frustrating” past encounters with chatbots. Participants reflected on this reluctance, for example:

“It’d be one thing if it actually worked, but it’s, like, more frustrating and infuriating. I have not yet had one experience where it’s [chatbot] actually helpful.” (Participant 012).

Many participants perceived chatbots to be inefficient and primitive in design. For example, they described past interactions in which they had to ask a chatbot several questions before obtaining an adequate response. Participants also voiced concerns about the reliability of chatbots’ answers. In some instances, participants did not receive satisfactory responses from chatbots and resorted to other sources of assistance. For example, one participant said:

“I just find that sometimes those chatbots, they come back with something, and it’s like, yeah that’s not really what I asked, or what I want to know.” (Participant 001)

Participants also drew examples of these frustrations from their experiences communicating with chatbots on e-commerce sites, and with chatbot features of virtual assistants like Alexa (Amazon.com, Inc.) or Siri (Apple Inc.):

“I have Alexa, for example, when I ask it, you know, tell me something, and it just, it can’t go further, you know what I mean? It can’t, you know it can just do one thing at a time, so, it’s limited.” (Participant 005).

Given the functional limitations they had encountered with these chatbots, participants found it challenging to envision chatbots being useful and efficient in supporting their genetic testing experience, especially given the nuanced and personal nature of genetic testing. One parent explained:

“I feel like a lot of questions we’ve asked our geneticist have been very pointed, very specific, and unique, and I would not really imagine a chatbot being programmed to be able to pull that information and give us sufficient and correct answers.” (Participant 008).

However, most participants acknowledged that, if designed appropriately, chatbots could advance the delivery of genetic services. To feel comfortable using a chatbot, however, they wanted to be sure they could contact their clinician. They described needing a “safety net” in the form of access to a care provider while using the chatbot, in case the chatbot was unable to address their needs.

The safety net

When engaging with the chatbot, participants wanted the ability to exit the chatbot conversation and request contact with their clinician whenever needed. Participants described this mechanism in various terms, such as “a safety net”, “pulling the chute”, or “a fail-safe”. They described the chatbot’s potential roles as including administrative tasks, acting as a go-between for patients and the rest of the medical team, answering medical questions directly, and providing technical or emotional support.

Participants explained this by saying:

“I think they [chatbots] can be remarkably effective So, that could actually be a really attractive tool. I think, I think you probably need a back-up, somehow some kind of a fail-safe, but yeah, I think that could work.” (Participant 005).

“If I want to pull the chute, and I need to talk to someone to answer my questions, I think that would be really important.” (Participant 009)

Another participant outlined their desire to speak to a real person, specifically for emotional support:

“Now if I’m reading a piece of information that I didn’t anticipate, that triggers an emotion, then, then what’s my next step? I want to talk to somebody.” (Participant 018)

Participants imagined that the clinician could be contacted by telephone, email, or live chat, and that these options should be made clear to patients. One participant said:

“I think that you need to have a real body someplace, that you can pull on. Nothing is worse than being on a site, and you go around, and round in circles, and you can never talk to someone somewhere, it has to bump up to somebody who can say, either that’s the correct answer, or this is it.” (Participant 019).

Participants’ support for chatbots was contingent on the availability of the clinician safety net. Knowing that they could still meet with their clinician as needed increased participants’ comfort with using chatbots.

The sweet spot for chatbots

Generally, participants described chatbots as most acceptable and efficient when the payoff they received from the chatbot exceeded the energy they invested. We refer to this area as the sweet spot.

While most participants believed that chatbots could handle simple tasks, some thought that engaging with a chatbot for a straightforward activity was not worth the time or energy (i.e., high input). Participants indicated that simple administrative tasks, such as booking an appointment, could be accomplished more easily through other, low-tech means, such as an online booking portal. Using a chatbot would unnecessarily complicate straightforward tasks; that is, a high investment would be required for little payoff. One participant explained that more efficient alternatives to chatbots exist for particular functions:

“I would rather just, like have a really great search function.” (Participant 002)

On the other end of the spectrum, participants perceived limited utility for chatbots to address complex tasks. Participants did not think a chatbot could handle the specific, nuanced, and personal questions they might have and thought it would be impossible for a chatbot to be programmed to account for such specific variations.

A participant elaborated:

“It’s so individualized, like for my son’s, our son’s deletion, some people have it and don’t exhibit really any symptoms and then some are even more severe than him, but like if you just have like an article that pops up, about his deletion, it could be really scary for somebody who has a baby, who’s not at the point where they’re having seizures yet, to see, so I would just really caution you on some of that stuff.” (Participant 015).

Participants also reflected on the potential risks (e.g., reduced credibility) of using chatbots for tasks they deemed too complex. As one participant stated:

“When their [chatbot’s] responses are so standard and it’s clearly just not responding to what you’ve, you’ve written very closely I think that detracts from the credibility of the site.” (Participant 021).

The key feature of the sweet spot is perceived efficiency: the payoff (output received) is worth the energy invested (input required). Tasks in this range were of moderate complexity, such as content provision (e.g., general genetics education) and process-oriented assistance (e.g., providing status updates and information about next steps). Participants perceived that a chatbot could provide efficient, high-quality answers to these types of questions.

Disease information was one function in the sweet spot. Participants spoke about asking the chatbot for disease information, for example:

“It would be nice to know in general terms, obviously without identifying individuals, if there were any people out there that were in similar circumstances, in terms of their genetic condition and what that meant in terms of their day-to-day life, and what treatments, therapies, and strategies for daily living, were for those individuals.” (Participant 013).

Participants also indicated that a chatbot would be helpful for providing trustworthy resources or guiding patients towards them. As one participant said:

“Steering people to accurate sources of information. Letting people know, understand, help people understand the [genetic testing] process, that’s the sort of thing they can help with.” (Participant 004).

Another function in the sweet spot was providing status updates. Many participants drew on their own experiences of lengthy waits for results and a lack of information during their genetic testing process. Based on these experiences, participants hoped a chatbot could provide progress updates, estimate wait times, and give them tasks they could work on while waiting. One participant described how a chatbot could have helped during their genetic testing journey:

“If I had to go back to my own experience, it took a long time to wait. I would love to have had an opportunity to see something that said, your results are due on this date. Here are some of the things that you can do in the interim while you wait to see the geneticist. OK? In preparation for your meeting if you could fill out this information it’s going to make it faster for us to get to you We will get back to you within X number of days I think that’s the important part is, one, I’m not alone, I’m not isolated, I’m not being dropped.” (Participant 018).

Even if a chatbot could handle these tasks, participants thought that it might be inefficient or inappropriate to go through a chatbot rather than their clinician who is already aware of their specific personal medical history. Most participants anticipated that a chatbot might provide only general answers, leaving them with uncertainty. In contrast, they imagined their clinician could provide definitive, reliable, and context-specific answers.

For example, a participant said:

“Like when you’re asking, like, a really specific question, often, more often than not, those bots are like, not capable of processing that information, so they’re just like, oh, looks like you should be talking to a human. I know! Like, I know that.” (Participant 002).

Participants perceived chatbots to be of limited use or inappropriate for situations in which they would prefer human contact, for example, individualized counseling for a rare disease or emotional support related to test results. Participants indicated that a chatbot would be beneficial as a complement to, but not a replacement for, direct consultation with their genetics health care provider.

A participant outlined the importance of a clinician remaining part of usual care:

“It’s nice to know that you have one person who has your personal journey at heart, and in mind, because when it comes to rare disease, it’s, it’s hard to find a professional who knows about it. So, a genetic counselor can be the one that says, yeah, I’ve seen that before. I’ve heard that. Or I know what this is. And it’s, reassuring to, to know.” (Participant 025).

Overall, participants’ acceptance of chatbots was contingent on the type of task and the ability to contact their clinician, as needed.

Discussion

There is growing interest in integrating chatbots into healthcare (Xu et al. 2021), and specifically into genetic service delivery (Nazareth et al. 2021; Siglen et al. 2021). Our qualitative study describes patients’ preferences for the use of chatbots in genetics and can provide a foundation for the co-design of these tools.

Participants expressed an initial reluctance to use chatbots and did not want them to replace usual care. Participants indicated that having a safety net, or access to a clinician when required, increased their comfort with using a chatbot as part of their care. While this work has identified the importance of a safety net, its structure, the associated workflow, and interoperability merit further study. Participants were able to articulate the types of tasks that were not well suited to a chatbot, which included both very simple and very complex tasks. However, participants described a spectrum of tasks as potentially acceptable for a chatbot, which we labeled the ‘sweet spot’. These activities were characterized by a high return on investment: the time and energy invested were worth the output received. Typically, these were tasks of moderate complexity, such as providing disease information and maintaining engagement through testing status updates. As this work is a preliminary characterization of the range of acceptable and unacceptable features and functions for chatbots in genomic medicine, we consider the tasks within the sweet spot to be guideposts for acceptability. Ongoing chatbot development should prioritize user-centered co-design processes to integrate patient preferences and, in turn, to optimize the appropriate implementation of such tools in practice.

Our work adds to the literature on the development and use of chatbots in genetics (Heald et al. 2020; Sato et al. 2021; Siglen et al. 2021). Much of the previous research has focused on tool mechanics and clinical impacts (Ireland et al. 2021; Nazareth et al. 2021; Sato et al. 2021), whereas our work examined patient preferences through qualitative interviews. Previous work on patient acceptability of chatbots within genetics has been limited to a narrow set of functions or to adult cancer and screening populations (Nazareth et al. 2021; Schmidlen et al. 2019, 2022; Siglen et al. 2021; Wang et al. 2015), whereas our work examined acceptability across a range of diagnostic genetic testing scenarios.

One function that participants in our study requested was a built-in safety net that allows them to leave the chatbot and be contacted by a member of their health care team. This finding aligns with the design of existing genetics chatbots that allow users to contact a genetics professional, usually a genetic counselor (Heald et al. 2020; Nazareth et al. 2021; Schmidlen et al. 2019). As further evidence of the importance of this feature, participants in usability testing of a chatbot designed to answer questions about BRCA testing requested that it be added to a future iteration (Siglen et al. 2021). Similar to our findings, topics deemed inappropriate for, or excluded from, other chatbots include discussion of the impact of specific genetic conditions and the provision of expert support (Ireland et al. 2021). Participants in our study were also enthusiastic about status updates. In other work that examined status updates via chatbots, patients were notified of their bloodwork requisition in the electronic medical record and were sent reminders to complete their blood draw (Heald et al. 2020). Linking the chatbot to the medical record could conceivably automate additional status updates, especially for steps the patient cannot otherwise see (e.g., notification that the blood sample has been shipped or is being analyzed).

In addition to aligning with others’ findings that some are hesitant to use chatbots in healthcare due to accuracy concerns and a lack of empathy (Nadarzynski et al. 2019), our work expands the evidence base (Lee et al. 2022) to include details about patients’ and parents’ preferences for key functions and use cases. Since the acceptability of chatbot use may depend on the clinical indication for genetic testing or the acuity of the situation (e.g., during the newborn period), ongoing preference research in real-world clinical settings is warranted. Moreover, as mainstreaming genetics into other specialty areas gains traction (White et al. 2020), the role of chatbots in these settings merits consideration. Aligned with the principles of user-centered co-design, we will build on these findings to inform the development of the patient-facing Genetics Navigator platform.

One limitation of this study was its hypothetical nature. Some participants initially found it difficult to imagine specific functionalities of a genetics chatbot. However, many participants were able to draw on their past experiences with chatbots in a variety of healthcare and non-healthcare settings to imagine using a genetics chatbot in an actual clinical interaction. In addition, we did not collect participants’ indication for, or type of, genetic testing; perspectives may differ across disease populations, settings, and disease presentations. Despite our efforts to recruit participants from a range of geographic locations in Canada, most participants reported living in urban settings in central regions of the country and were highly educated. While we did not collect data on ethnicity, we did collect the primary language spoken at home, which can be considered a component of ethnicity (Smedley and Smedley 2005).

While new digital technologies, such as chatbots, can improve access to services (Schmidlen et al. 2019), they can also deepen existing inequities (Thomas-Jacques et al. 2021). The ‘digital divide’ is exacerbated when usual care shifts to a technology that already-marginalized communities cannot access (Goldman and Lakdawalla 2005). To minimize this divide, equity concerns should be front of mind during the development of new technologies. This can be advanced by considering low-tech alternatives or adjuncts to digital solutions that are co-designed and evaluated in partnership with under-represented communities (Thomas-Jacques et al. 2021). In future work, we plan to seek end-user input on acceptable low-tech options to supplement the Genetics Navigator.

Results from this study provide timely insights into patients’ comfort with, and perceived limitations of, integrating chatbots into genomic medicine. Our data show that patients found chatbots acceptable for delivering the components of genetic services and the testing journey that fall within the sweet spot, so long as a “safety net” (i.e., a care provider) is available as needed and the more complex and sensitive components of genetic services are still offered in person by a genetics professional. The information provided by the chatbot must be accurate, and the source feeding the chatbot must be considered trustworthy. End users’ preferences must be considered in the development and integration of novel technologies in clinical care pathways. It is important to engage end users, especially those who are hesitant, in co-design to optimize innovative approaches to delivering patient-centered care.

Data availability

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

References

Ahmed A, Ali N, Aziz S, Abd-alrazaq AA, Hassan A, Khalifa M, Househ M (2021) A review of mobile chatbot apps for anxiety and depression and their self-care features. Comput Methods Programs Biomed Update 1:100012. https://doi.org/10.1016/j.cmpbup.2021.100012

Bibault JE, Chaix B, Guillemassé A, Cousin S, Escande A, Perrin M, Brouard B (2019) A Chatbot Versus Physicians to Provide Information for Patients With Breast Cancer: Blind, Randomized Controlled Noninferiority Trial. J Med Internet Res 21(11):e15787. https://doi.org/10.2196/15787

Bombard Y, Hayeems RZ (2020) How digital tools can advance quality and equity in genomic medicine. Nat Rev Genet 21(9):505–506. https://doi.org/10.1038/s41576-020-0260-x

Bombard Y, Clausen M, Mighton C, Carlsson L, Casalino S, Glogowski E, Laupacis A (2018) The Genomics ADvISER: development and usability testing of a decision aid for the selection of incidental sequencing results. Eur J Hum Genet 26(7):984–995. https://doi.org/10.1038/s41431-018-0144-0

Braun V, Clarke V (2022) Conceptual and design thinking for thematic analysis. Qual Psychol 9(1):3–26. https://doi.org/10.1037/qup0000196

Canadian Organization for Rare Disorders (2019) About CORD. https://www.raredisorders.ca/about-cord/. Accessed 11 Jan 2021

Chaix B, Bibault JE, Pienkowski A, Delamon G, Guillemassé A, Nectoux P, Brouard B (2019) When Chatbots Meet Patients: One-Year Prospective Study of Conversations Between Patients With Breast Cancer and a Chatbot. JMIR Cancer 5(1):e12856. https://doi.org/10.2196/12856

Cooksey JA, Forte G, Benkendorf J, Blitzer MG (2005) The state of the medical geneticist workforce: findings of the 2003 survey of American Board of Medical Genetics certified geneticists. Genet Med 7(6):439–443. https://doi.org/10.1097/01.gim.0000172416.35285.9f

Cooper A, Ireland D (2018) Designing a Chat-Bot for Non-Verbal Children on the Autism Spectrum. Stud Health Technol Inform 252:63–68

Dosovitsky G, Pineda BS, Jacobson NC, Chang C, Escoredo M, Bunge EL (2020) Artificial Intelligence Chatbot for Depression: Descriptive Study of Usage. JMIR Form Res 4(11):e17065. https://doi.org/10.2196/17065

Easton K, Potter S, Bec R, Bennion M, Christensen H, Grindell C, Hawley MS (2019) A Virtual Agent to Support Individuals Living With Physical and Mental Comorbidities: Co-Design and Acceptability Testing. J Med Internet Res 21(5):e12996. https://doi.org/10.2196/12996

Gaffney H, Mansell W, Tai S (2019) Conversational Agents in the Treatment of Mental Health Problems: Mixed-Method Systematic Review. JMIR Ment Health 6(10):e14166. https://doi.org/10.2196/14166

Ghosh S, Bhatia S, Bhatia A (2018) Quro: Facilitating User Symptom Check Using a Personalised Chatbot-Oriented Dialogue System. Stud Health Technol Inform 252:51–56

Goldman D, Lakdawalla D (2005) A Theory of Health Disparities and Medical Technology. Contrib Econ Anal Policy 4:1395. https://doi.org/10.2202/1538-0645.1395

Golinelli D, Boetto E, Carullo G, Nuzzolese AG, Landini MP, Fantini MP (2020) Adoption of Digital Technologies in Health Care During the COVID-19 Pandemic: Systematic Review of Early Scientific Literature. J Med Internet Res 22(11):e22280. https://doi.org/10.2196/22280

Goonesekera Y, Donkin L (2022) A Cognitive Behavioral Therapy Chatbot (Otis) for Health Anxiety Management: Mixed Methods Pilot Study. JMIR Form Res 6(10):e37877. https://doi.org/10.2196/37877

Green MJ, Biesecker BB, McInerney AM, Mauger D, Fost N (2001a) An interactive computer program can effectively educate patients about genetic testing for breast cancer susceptibility. Am J Med Genet 103(1):16–23. https://doi.org/10.1002/ajmg.1500

Green MJ, McInerney AM, Biesecker BB, Fost N (2001b) Education about genetic testing for breast cancer susceptibility: patient preferences for a computer program or genetic counselor. Am J Med Genet 103(1):24–31. https://doi.org/10.1002/ajmg.1501

Green MJ, Peterson SK, Baker MW, Harper GR, Friedman LC, Rubinstein WS, Mauger DT (2004) Effect of a computer-based decision aid on knowledge, perceptions, and intentions about genetic testing for breast cancer susceptibility: a randomized controlled trial. JAMA 292(4):442–452. https://doi.org/10.1001/jama.292.4.442

Green MJ, Peterson SK, Baker MW, Friedman LC, Harper GR, Rubinstein WS, Mauger DT (2005) Use of an educational computer program before genetic counseling for breast cancer susceptibility: effects on duration and content of counseling sessions. Genet Med 7(4):221–229. https://doi.org/10.1097/01.gim.0000159905.13125.86

Greer S, Ramo D, Chang YJ, Fu M, Moskowitz J, Haritatos J (2019) Use of the Chatbot “Vivibot” to Deliver Positive Psychology Skills and Promote Well-Being Among Young People After Cancer Treatment: Randomized Controlled Feasibility Trial. JMIR Mhealth Uhealth 7(10):e15018. https://doi.org/10.2196/15018

Gunasekeran DV, Tham YC, Ting DSW, Tan GSW, Wong TY (2021) Digital health during COVID-19: lessons from operationalising new models of care in ophthalmology. Lancet Digit Health 3(2):e124–e134. https://doi.org/10.1016/s2589-7500(20)30287-9

Heald B, Keel E, Marquard J, Burke CA, Kalady MF, Church JM, Eng C (2020) Using chatbots to screen for heritable cancer syndromes in patients undergoing routine colonoscopy. J Med Genet. https://doi.org/10.1136/jmedgenet-2020-107294

Hoskovec JM, Bennett RL, Carey ME, DaVanzo JE, Dougherty M, Hahn SE, Wicklund CA (2018) Projecting the Supply and Demand for Certified Genetic Counselors: a Workforce Study. J Genet Couns 27(1):16–20. https://doi.org/10.1007/s10897-017-0158-8

Ireland D, Bradford D, Szepe E, Lynch E, Martyn M, Hansen D, Gaff C (2021) Introducing Edna: A trainee chatbot designed to support communication about additional (secondary) genomic findings. Patient Educ Couns 104(4):739–749. https://doi.org/10.1016/j.pec.2020.11.007

Keesara S, Jonas A, Schulman K (2020) Covid-19 and Health Care’s Digital Revolution. N Engl J Med 382(23):e82. https://doi.org/10.1056/NEJMp2005835

Kobori Y, Osaka A, Soh S, Okada H (2018) MP15-03 Novel application for sexual transmitted infection screening with an AI chatbot. J Urol 199(4S):e189–e190. https://doi.org/10.1016/j.juro.2018.02.516

Lee W, Shickh S, Assamad D, Luca S, Clausen M, Somerville C, on behalf of the Genetics Navigator Study Team (2022) Patient-facing digital tools for delivering genetic services: a systematic review. J Med Genet. https://doi.org/10.1136/jmg-2022-108653

Luk TT, Lui JHT, Wang MP (2022) Efficacy, Usability, and Acceptability of a Chatbot for Promoting COVID-19 Vaccination in Unvaccinated or Booster-Hesitant Young Adults: Pre-Post Pilot Study. J Med Internet Res 24(10):e39063. https://doi.org/10.2196/39063

Milne-Ives M, de Cock C, Lim E, Shehadeh MH, de Pennington N, Mole G, Meinert E (2020) The Effectiveness of Artificial Intelligence Conversational Agents in Health Care: Systematic Review. J Med Internet Res 22(10):e20346. https://doi.org/10.2196/20346

Nadarzynski T, Miles O, Cowie A, Ridge D (2019) Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digit Health 5:2055207619871808. https://doi.org/10.1177/2055207619871808

Nazareth S, Hayward L, Simmons E, Snir M, Hatchell KE, Rojahn S, Nussbaum RL (2021) Hereditary Cancer Risk Using a Genetic Chatbot Before Routine Care Visits. Obstet Gynecol 138(6):860–870. https://doi.org/10.1097/aog.0000000000004596

Office of the Auditor General of Ontario (2017) 2017 Annual Report: Laboratory Services in the Health Sector. https://www.auditor.on.ca/en/content/annualreports/arreports/en17/v1_307en17.pdf. Accessed 17 Jan 2021

Sato A, Haneda E, Suganuma N, Narimatsu H (2021) Preliminary Screening for Hereditary Breast and Ovarian Cancer Using a Chatbot Augmented Intelligence Genetic Counselor: Development and Feasibility Study. JMIR Form Res 5(2):e25184. https://doi.org/10.2196/25184

Schmidlen T, Schwartz M, DiLoreto K, Kirchner HL, Sturm AC (2019) Patient assessment of chatbots for the scalable delivery of genetic counseling. J Genet Couns 28(6):1166–1177. https://doi.org/10.1002/jgc4.1169

Schmidlen T, Jones CL, Campbell-Salome G, McCormick CZ, Vanenkevort E, Sturm AC (2022) Use of a chatbot to increase uptake of cascade genetic testing. J Genet Couns. https://doi.org/10.1002/jgc4.1592

Siglen E, Vetti HH, Lunde ABF, Hatlebrekke TA, Strømsvik N, Hamang A, Bjorvatn C (2021) Ask Rosa - The making of a digital genetic conversation tool, a chatbot, about hereditary breast and ovarian cancer. Patient Educ Couns. https://doi.org/10.1016/j.pec.2021.09.027

Smedley A, Smedley BD (2005) Race as biology is fiction, racism as a social problem is real: Anthropological and historical perspectives on the social construction of race. Am Psychol 60(1):16–26. https://doi.org/10.1037/0003-066x.60.1.16

SocioCultural Research Consultants LLC (2021) Dedoose Version 9.0.17, web application for managing, analyzing, and presenting qualitative and mixed method research data. Los Angeles, CA. www.dedoose.com

Stein N, Brooks K (2017) A Fully Automated Conversational Artificial Intelligence for Weight Loss: Longitudinal Observational Study Among Overweight and Obese Adults. JMIR Diabetes 2(2):e28. https://doi.org/10.2196/diabetes.8590

Thomas-Jacques T, Jamieson T, Shaw J (2021) Telephone, video, equity and access in virtual care. npj Digit Med 4(1):159. https://doi.org/10.1038/s41746-021-00528-y

Wang C, Bickmore T, Bowen DJ, Norkunas T, Campion M, Cabral H, Paasche-Orlow M (2015) Acceptability and feasibility of a virtual counselor (VICKY) to collect family health histories. Genet Med 17(10):822–830. https://doi.org/10.1038/gim.2014.198

Watson A, Bickmore T, Cange A, Kulshreshtha A, Kvedar J (2012) An internet-based virtual coach to promote physical activity adherence in overweight adults: randomized controlled trial. J Med Internet Res 14(1):e1. https://doi.org/10.2196/jmir.1629

White S, Jacobs C, Phillips J (2020) Mainstreaming genetics and genomics: a systematic review of the barriers and facilitators for nurses and physicians in secondary and tertiary care. Genet Med 22(7):1149–1155. https://doi.org/10.1038/s41436-020-0785-6

Xu L, Sanders L, Li K, Chow JCL (2021) Chatbot for Health Care and Oncology Applications Using Artificial Intelligence and Machine Learning: Systematic Review. JMIR Cancer 7(4):e27850. https://doi.org/10.2196/27850

Acknowledgements

Genetics Navigator Study Team: Yvonne Bombard (Co-PI): Genomics Health Services Research Program, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada; Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, ON, Canada. Robin Z. Hayeems (Co-PI): Program in Child Health Evaluative Sciences, The Hospital for Sick Children, Toronto, ON, Canada; Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, ON, Canada. Melyssa Aronson: Department of Molecular Genetics, University of Toronto, Toronto, ON, Canada; Zane Cohen Centre for Digestive Diseases, Sinai Health System, Toronto, ON, Canada. Francois Bernier: Department of Medical Genetics, Alberta Children’s Hospital, Calgary, AB, Canada. Michael Brudno: HPC4Health Consortium, Toronto, ON, Canada. June C. Carroll: Department of Family & Community Medicine, Sinai Health, University of Toronto. Lauren Chad: Division of Clinical and Metabolic Genetics, The Hospital for Sick Children, Toronto, ON, Canada; Department of Bioethics, The Hospital for Sick Children, Toronto, ON, Canada; Department of Pediatrics, The Hospital for Sick Children, Toronto, ON, Canada. Marc Clausen: Genomics Health Services Research Program, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada. Ronald Cohn: Division of Clinical and Metabolic Genetics, The Hospital for Sick Children, Toronto, ON, Canada. Gregory Costain: Division of Clinical and Metabolic Genetics, The Hospital for Sick Children, Toronto, ON, Canada; Department of Molecular Genetics, University of Toronto, Toronto, ON, Canada. Irfan Dhalla: Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, ON, Canada; Care Experience Institute, Unity Health Toronto, Toronto, ON, Canada. Hanna Faghfoury: Fred A. Litwin Family Centre in Genetic Medicine, University Health Network and Sinai Health System, Toronto, ON, Canada. Jan Friedman: Department of Medical Genetics, University of British Columbia, Vancouver, BC, Canada. Stacy Hewson: Division of Clinical and Metabolic Genetics, The Hospital for Sick Children, Toronto, ON, Canada. Trevor Jamieson: Department of Medicine, University of Toronto, Toronto, ON, Canada; Division of General Internal Medicine, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada. Rebekah Jobling: Division of Clinical and Metabolic Genetics, The Hospital for Sick Children, Toronto, ON, Canada. Rita Kodida: Genomics Health Services Research Program, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada. Anne-Marie Laberge: Department of Medical Genetics, Le Centre Hospitalier Universitaire Sainte-Justine, Montreal, QC, Canada. Jordan Lerner-Ellis: Lunenfeld-Tanenbaum Research Institute, Sinai Health System, Toronto, ON, Canada. Eriskay Liston: Division of Clinical and Metabolic Genetics, The Hospital for Sick Children, Toronto, ON, Canada; Department of Molecular Genetics, University of Toronto, Toronto, ON, Canada. Stephanie Luca: Program in Child Health Evaluative Sciences, The Hospital for Sick Children, Toronto, ON, Canada. Muhammad Mamdani: Department of Data Science and Advanced Analytics, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada. Christian R. Marshall: Department of Laboratory Medicine and Pathobiology, University of Toronto, Toronto, ON, Canada; Genome Diagnostics, Department of Pediatric Laboratory Medicine, The Hospital for Sick Children, Toronto, ON, Canada. Matthew Osmond: Children’s Hospital of Eastern Ontario Research Institute, University of Ottawa, Ottawa, ON, Canada. Quynh Pham: Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, ON, Canada; Centre for Global eHealth Innovation, University Health Network, Toronto, ON, Canada. Emma Reble: Genomics Health Services Research Program, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada. Frank Rudzicz: International Centre for Surgical Safety, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Toronto, ON, Canada; Vector Institute for Artificial Intelligence, Toronto, ON, Canada. Emily Seto: Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, ON, Canada; Centre for Global eHealth Innovation, University Health Network, Toronto, ON, Canada. Serena Shastri-Estrada: Genetics Navigator Advisory Board, Toronto, ON, Canada; Adjunct Lecturer, Department of Occupational Science and Occupational Therapy, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada. Cheryl Shuman: Department of Molecular Genetics, University of Toronto, Toronto, ON, Canada. Josh Silver: Fred A. Litwin Family Centre in Genetic Medicine, University Health Network and Sinai Health System, Toronto, ON, Canada; Department of Molecular Genetics, University of Toronto, Toronto, ON, Canada. Maureen Smith: Patient Partner, Canadian Organization for Rare Disorders, Toronto, ON, Canada. Kevin Thorpe: Dalla Lana School of Public Health, University of Toronto, Toronto, ON, Canada; Applied Health Research Centre, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Toronto, ON, Canada. Wendy J. Ungar: Program in Child Health Evaluative Sciences, The Hospital for Sick Children, Toronto, ON, Canada; Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, ON, Canada.

Yvonne Bombard, Robin Z. Hayeems, Melyssa Aronson, Francois Bernier, Michael Brudno, June C Carroll, Lauren Chad, Marc Clausen, Ronald Cohn, Gregory Costain, Irfan Dhalla, Hanna Faghfoury, Jan Friedman, Stacy Hewson, Trevor Jamieson, Rebekah Jobling, Rita Kodida, Anne-Marie Laberge, Jordan Lerner-Ellis, Eriskay Liston, Stephanie Luca, Muhammad Mamdani, Christian R. Marshall, Matthew Osmond, Quynh Pham, Emma Reble, Frank Rudzicz, Emily Seto, Serena Shastri-Estrada, Cheryl Shuman, Josh Silver, Maureen Smith, Kevin Thorpe, Wendy J. Ungar

Funding

This research was funded by the McLaughlin Centre, University of Toronto and the Canadian Institutes of Health Research Project Grant—Bridge Funding (Funding Reference Numbers: PMJ 175409 and PNN 177934).

Author information


Contributions

Conceptualization: SL, MC, RH, and YB. Data curation: SL, MC, AS, WL, SK, and EAW. Formal analysis: SL, MC, AS, SK, EAW, RH, and YB. Funding acquisition: RH and YB. Investigation: SL, MC, AS, and WL. Methodology: SL, MC, AS, RH, and YB. Project administration: SL, MC, AS, WL, SK, and EAW. Resources: RH and YB. Software: SL, MC, AS, SK, and EAW. Supervision: RH and YB. Validation: SL, MC, AS, WL, SK, EAW, HF, GC, RJ, MA, EL, JS, CS, LC, RH, and YB. Visualization: SL, MC, and AS. Writing – original draft: SL, MC, AS, SK, and EAW. Writing – review & editing: SL, MC, AS, WL, SK, EAW, HF, GC, RJ, MA, EL, JS, CS, LC, RH, YB, FB, MB, JC, RC, ID, JF, SH, TJ, RK, AML, JLE, MM, CM, MO, QP, ER, FR, ES, SSE, CS, MS, KT, and WU.

Ethics declarations

Conflicts of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval

Research ethics approval was obtained through the Unity Health Toronto Research Ethics Board (REB# 20–143).

Consent to participate

Informed verbal consent was provided by all participants.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The members of the Genetics Navigator Study Team are listed in the Acknowledgements section.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Luca, S., Clausen, M., Shaw, A. et al. Finding the sweet spot: a qualitative study exploring patients’ acceptability of chatbots in genetic service delivery. Hum Genet 142, 321–330 (2023). https://doi.org/10.1007/s00439-022-02512-2

DOI: https://doi.org/10.1007/s00439-022-02512-2