Abstract

The objective of this paper is to review statistical methods, dynamics, modeling efforts, and trends related to temperature extremes, with a focus upon extreme events of short duration that affect parts of North America. These events are associated with large scale meteorological patterns (LSMPs). The statistics, dynamics, and modeling sections of this paper are written to be autonomous and so can be read separately. Methods to define extreme event statistics and to identify and connect LSMPs to extreme temperature events are presented. Recent advances in statistical techniques connect LSMPs to extreme temperatures through appropriately defined covariates that supplement more straightforward analyses. Various LSMPs, ranging from synoptic to planetary scale structures, are associated with extreme temperature events. Current knowledge about the synoptics and the dynamical mechanisms leading to the associated LSMPs is incomplete. Systematic study is needed of the physics of LSMP life cycles, of LSMP-extreme temperature event linkages (through comprehensive model assessment), and of LSMP properties. Generally, climate models capture observed properties of heat waves and cold air outbreaks with some fidelity. However, they overestimate warm wave frequency, underestimate cold air outbreak frequency, and underestimate the collective influence of low-frequency modes on temperature extremes. Modeling studies have identified the impact of large-scale circulation anomalies and land–atmosphere interactions on changes in extreme temperatures. However, few studies have examined changes in LSMPs to more specifically understand the role of LSMPs on past and future extreme temperature changes. Even though LSMPs are resolvable by global and regional climate models, they are not necessarily well simulated. The paper concludes with unresolved issues and research questions.

1 Introduction to temperature extremes

Temperature extremes have large societal and economic consequences. While many heat waves are short-lived, longer events can have a large economic cost. Cold air outbreaks (CAOs) tend to be short-lived but carry large economic losses. Timing of the CAOs can be more important than the minimum temperatures of the freeze; during 4–10 April 2007 low temperatures across the South caused $2B in agricultural losses since many crops were in bloom or had frost sensitive buds or nascent fruit (Gu et al. 2008). The event also exemplifies how monthly means can be misleading: April 2007 average temperatures were near normal. In short, both hot spells (HSs) and CAOs have great societal importance and they are short-term events that do not necessarily appear in monthly mean data.

This report focuses on short-term (5-day or less) extreme temperature events occurring in some part of North America. Temperature extremes considered in this paper include both short-term hottest days (warm season) and CAOs (winter and spring) as these have the greatest impacts. Such events, both observed and simulated, have received considerable attention (including research papers, e.g. Meehl and Tebaldi 2004; and active websites: http://www.esrl.noaa.gov/psd/ipcc/extremes/, http://www.ncdc.noaa.gov/extremes/cei/, and http://gmao.gsfc.nasa.gov/research/subseasonal/atlas/Extremes.html). However, the emphasis here is on the less well understood context for the extreme events. Our primary context is the large-scale meteorological patterns (LSMPs) that accompany these extreme events.

Temperature extreme events are usually linked to large displacements of air masses that create a large amplitude wave pattern (here called an LSMP). LSMPs have a spatial scale bigger than mesoscale systems but smaller than the near-global scale of some modes of climate variability. The LSMP often has some portion that is superficially similar to a blocking ridge, so a blocking index can be an LSMP indicator (Sillmann et al. 2011). Extreme events have also been linked to circulation indices like the North Atlantic Oscillation (NAO) (Downton and Miller 1993; Cellitti et al. 2006; Brown et al. 2008; Kenyon and Hegerl 2008; Guirguis et al. 2011) the Madden–Julian Oscillation (MJO) (Moon et al. 2011) and El Niño/Southern Oscillation (ENSO) (Downton and Miller 1993; Higgins et al. 2002; Carrera et al. 2004; Meehl et al. 2007; Goto-Maeda et al. 2008; Kenyon and Hegerl 2008; Alexander et al. 2009; Lim and Schubert 2011). However, LSMPs are likely distinct from climate modes for several reasons. First, named climate modes such as the NAO are common modes of variability, whereas the LSMP is presumably as rare as the associated extreme event. Second, climate modes occur on a longer time scale than the LSMPs for the short-term events focused upon in this article. However, it is possible that an extreme event might occur when a climate mode has transient extreme magnitude or is amplified in association with another low frequency phenomenon. Third, tested LSMP patterns are not that similar to climate modes. In correlating eight NOAA teleconnection patterns (http://www.cpc.ncep.noaa.gov/data/teledoc/telecontents.shtml) and California LSMPs and in assessing the PNA contribution to last winter’s extreme cold in eastern North America (neither shown here), we do not find notable contribution from such modes. Several studies identified the LSMPs associated with specific extreme hot events (Grotjahn and Faure 2008; Loikith and Broccoli 2012) and CAO events (Konrad 1996; Carrera et al. 2004; Grotjahn and Faure 2008; Loikith and Broccoli 2012). Parts of these LSMPs tend to be uniquely associated with the corresponding extreme weather and those parts have some predictability (Grotjahn 2011).

The LSMPs for extreme events are not fully understood for different parts of North America. Also, local processes (topography, soil moisture, etc.) play key roles, but there is a knowledge gap in how well climate models simulate the LSMPs and these local processes, and in how the local and global modes interact with LSMPs. Bridging these knowledge gaps will reduce the uncertainty of future projections and drive model improvements.

Now is an opportune time to summarize critical issues and key gaps in understanding temperature extremes variability and trends because: (1) it is not known if current climate models used for future projections are producing extremes via the correct dynamical mechanisms, which directly impacts confidence in projections, and (2) knowledge of the LSMPs can improve downscaling (statistical or dynamical) by focusing attention on large scale patterns that are fundamental to the occurrence of the extreme event. Conversely, global models that do not reproduce the magnitude or duration of extreme temperature events accurately may still capture the correct LSMPs and facilitate downscaling. Finally, there is now sufficient preliminary work and growing interest to make a summary valuable.

From the LSMP context, the objectives of this review are surveys of: relevant statistical tools for extreme value analysis (Sect. 2), synoptic and dynamical interactions between LSMPs and other scales from local to global (Sect. 3), model simulation issues (Sect. 4), trends in these temperature extremes (Sect. 5), and various open questions (summary section). Different readers may be interested in different surveys. Accordingly, the sections are autonomous allowing a reader to skip to a particular section(s) of interest.

2 Extreme statistics and associated large scale meteorological patterns (LSMPs)

2.1 Definitions of extreme events

The Expert Team on Climate Change Detection and Indices (ETCCDI) under the auspices of the World Meteorological Organization’s CLIVAR program provides a useful, but somewhat incomplete, starting point to explore the relationship between extreme temperatures and LSMPs. The ETCCDI indices as well as software to calculate them are available at http://etccdi.pacificclimate.org and are described in Alexander et al. (2006). The ETCCDI temperature indices are summarized in Table 1 and are designed for detecting and attributing human effects on extreme weather and do not necessarily represent particularly rare events. However, extreme value statistical methodologies can be applied to quantify the behavior of the tails of the distribution of certain ETCCDI indices and reveal insight into truly rare events (Brown et al. 2008; Peterson et al. 2013). Another common statistical measure is the return value (or level). Under a changing climate, the return value can be interpreted as an extreme quantile of the temperature distribution that varies over time (e.g., the 20-year return value may be interpreted as that value that has a 5 % chance of being exceeded in a particular year). Trends in these measures of extreme temperature are detectable at the global scale (Brown et al. 2008) and have been attributed to human emissions of greenhouse gases (Christidis et al. 2005). At local scales, increases and decreases are observed reflecting the significant amount of natural variability in extreme temperatures.
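
The link between a return period and an annual exceedance probability can be illustrated with a short sketch. The function names are illustrative assumptions, and the second function assumes independent years with a fixed exceedance probability, which is only an approximation under a changing climate.

```python
def annual_exceedance_probability(return_period_years):
    """Probability that the return value is exceeded in any one year."""
    return 1.0 / return_period_years


def prob_at_least_one_exceedance(return_period_years, n_years):
    """Chance of one or more exceedances in n_years (independent years assumed)."""
    p = annual_exceedance_probability(return_period_years)
    return 1.0 - (1.0 - p) ** n_years


# 20-year return value: 5 % chance of being exceeded in a particular year
p20 = annual_exceedance_probability(20)
# chance of at least one exceedance somewhere within a 20-year span
p20_in_20 = prob_at_least_one_exceedance(20, 20)
```

Under these assumptions a 20-year return value is more likely than not to be exceeded at least once in any 20-year span, which is why the return period should not be read as a guaranteed waiting time.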

Figure 1 shows observed trends over North America from 1950 to 2007 in 20-year return values of the hottest/coldest days and hottest/coldest nights. The temperature of very cold nights (Fig. 1d) exhibits pronounced warming over the entire continent as does the temperature of very cold days (Fig. 1b). The pattern of changes in the temperature of very hot days (Fig. 1a) and very hot nights (Fig. 1c) follows that of average temperature change with strong cooling in the southeastern United States. This “warming hole” has been linked to sea surface temperature patterns in the equatorial Pacific (Meehl et al. 2007) and changes in anthropogenic aerosols in the eastern US (Leibensperger et al. 2012). Changes are more pronounced at the higher latitudes, except in Quebec and Newfoundland. This analysis extends the work of Peterson et al. (2013) and uses annual anomalies to define the extreme indices. Although illustrative of the statistical techniques, analyses of annualized measures of extreme temperature cannot identify associated LSMPs that develop and dissipate on a much shorter time scale. Furthermore, events of high and low impact are better separated in seasonal analyses.

Change over 1950–2007 in estimated 20-year annual return values (°C) for a hot tail of daily maximum temperature (TXx), b cold tail of daily maximum temperature (TXn), c hot tail of daily minimum temperature (TNx), and d cold tail of daily minimum temperature (TNn). Results are based on fitting extreme value statistical models with a linear trend in the location parameter to exceedances of a location-specific threshold (greater than the 99th percentile for upper tail and less than the 1st percentile for lower tail). As this analysis was based on anomalies with respect to average values for that time of year, hot minimum temperature values, for example, are just as likely to occur in winter as in summer. The circles indicate the z-score for the estimated change (estimate divided by its standard error), with absolute z-scores exceeding 1, 2, and 3 indicated by open circles of increasing size. Higher z-score indicates greater statistical significance

2.2 Application of extreme value statistical techniques

The observed changes in Fig. 1 are calculated using a time-dependent point process approach to fit “peaks over threshold” statistical models (Coles 2001). In this case, the extension of stationary extreme value methods to a time-dependent formalism used time as a “covariate” quantity to the ETCCDI indices (Kharin et al. 2013). A principal advantage of a fully time dependent formalism over a quasi-stationary approximation (Wehner 2004) is that the amount of data used to calculate extreme value parameters is substantially increased, resulting in higher quality fitted distributions and hence more accurate estimates of long period return values. Calculations involving climate model output gain additional statistical accuracy by using multiple realizations from ensembles of simulations, provided they are independent and identically distributed.

The “block maxima” and “peaks over threshold” methods to fit the tails of the distributions of random variables are asymptotic formalisms (Coles 2001). In this terminology, “block maxima” refers to use of only the maximum value during each “block” of time, usually a single season or year. The resulting generalized extreme value (GEV) or Poisson and generalized Pareto distributions (GPDs) are both three parameter functions and can be transformed between each other. Hence provided that the data used to fit a distribution are in the “asymptotic regime”, i.e. far out in the tail of the distribution, the two methods are equivalent. Uncertainty in the estimate of long period temperature return values resulting from limited sample size can be appreciable and may be as large as that from unforced internal variability (Wehner 2010). However, variations in these estimates from multi-model datasets such as CMIP3/5 are generally significantly larger.
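
As a minimal sketch of the block maxima idea, the code below extracts one maximum per block and evaluates the standard GEV return-level formula (as given in Coles 2001) for parameters that are assumed to have been fitted elsewhere. The last function shows the usual relation between the GEV parameters and the GPD scale for excesses over a threshold. All function names are illustrative, and no fitting is performed here.

```python
import math


def block_maxima(values, block_length):
    """Maximum of each complete block of consecutive values."""
    n_blocks = len(values) // block_length
    return [max(values[i * block_length:(i + 1) * block_length])
            for i in range(n_blocks)]


def gev_return_level(mu, sigma, xi, return_period):
    """Level exceeded with probability 1/return_period per block.

    mu, sigma, xi are GEV location, scale and shape parameters
    (assumed known); return_period must be greater than 1.
    """
    y = -math.log(1.0 - 1.0 / return_period)
    if abs(xi) < 1e-12:                      # Gumbel limit as xi -> 0
        return mu - sigma * math.log(y)
    return mu - (sigma / xi) * (1.0 - y ** (-xi))


def gpd_scale_from_gev(mu, sigma, xi, threshold):
    """GPD scale for excesses over `threshold` implied by GEV parameters."""
    return sigma + xi * (threshold - mu)
```

For example, `gev_return_level(mu, sigma, xi, 20)` gives the 20-year return value when each block is one year. The shape parameter is shared between the two descriptions, which is one way to see why the methods agree in the asymptotic regime.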

Confidence in the estimates of the statistical properties of the tail of the parent distribution of a random variable can be ascertained by exploring the sensitivity to the sample size used to fit the extreme value distribution. For block maxima methods, the length of the block is a season (or effectively so for temperature if the block length is a year). Lengthening the block makes the sample size smaller; shortening it makes the sample size larger since only one extreme value is drawn from each block. Such sensitivities are somewhat more straightforward to explore with peaks over threshold (POT) methods. Typical thresholds may be chosen between 80 and 99 % depending on the size of the parent distribution that the extreme values are drawn from. However, standard POT methods may not discriminate between extreme values that occur at successive dates when the individual extreme values may not be truly independent. In these cases, declustering techniques (Coles 2001) are applied to avoid biased (low) estimates of the uncertainty. The trends in extreme temperature shown in Fig. 1 are calculated using such a declustering technique and a POT formalism with time as a linear covariate. An alternative approach retains possibly dependent consecutive extremes, adjusting the estimates of uncertainty through either resampling or more advanced techniques for quantifying extremal dependence (e.g., Fawcett and Walshaw 2012). Furthermore, LSMPs responsible for extreme events can be formed using only the dates of the onset of the event (e.g. Grotjahn and Faure 2008; Bumbaco et al. 2013) reducing the risk of autocorrelated extremes. In the cited studies, a 5 day gap was typically required between events. Low frequency factors (or climate modes), such as ENSO, are best treated using the previously mentioned covariate techniques.
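
One simple form of declustering can be sketched as follows. This is an illustrative version of "runs" declustering, not necessarily the procedure used for Fig. 1: exceedances separated by fewer than a chosen number of days are treated as one cluster and only the cluster maximum is retained. The function name and the exact gap convention (difference in day index between successive exceedances) are assumptions.

```python
def decluster_peaks(series, threshold, min_gap_days=5):
    """Return (day_index, value) of one peak per cluster of exceedances.

    Exceedances separated by fewer than `min_gap_days` days are treated
    as one cluster; only the largest value in each cluster is kept.
    """
    peaks = []
    cluster = []          # exceedances in the cluster being built
    last_day = None       # day index of the most recent exceedance
    for day, value in enumerate(series):
        if value <= threshold:
            continue
        if last_day is not None and day - last_day >= min_gap_days:
            # gap is long enough: close the current cluster
            peaks.append(max(cluster, key=lambda item: item[1]))
            cluster = []
        cluster.append((day, value))
        last_day = day
    if cluster:
        peaks.append(max(cluster, key=lambda item: item[1]))
    return peaks
```

The retained peaks can then be passed to a POT fit, while the first day of each cluster could serve as an onset date for identifying LSMPs.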

Some of the advantages and challenges of applying statistical methods based on extreme value theory to analyze non-stationary climate extremes have been pointed out previously (e.g., Katz 2010), but are still not necessarily well appreciated by the climate science community. Conventional approaches tend to be either: (1) less informative (e.g., analyzing only the frequency of exceeding a high threshold, not the excess over the threshold and not measuring the intensity of the event); or (2) less realistic (e.g., based on assumed distributions such as the normal that may fit the overall data well, but not necessarily the tails). When relating extremes to LSMPs, standard regression approaches would not quantify the uncertainty in the relationship as realistically as using extremal distributions with covariates. Challenges in extreme value methods include specifying the dependence on LSMPs of the parameters of the extremal distributions in a manner consistent with our dynamical understanding. Moreover, heat waves and CAOs are relatively complex forms of extreme events, some of whose characteristics can be challenging to incorporate into the framework of extreme value statistics (Furrer et al. 2010). Finally, another advantage of the POT approach over the block maxima approach is being able to incorporate daily indices of LSMPs as covariates (not just monthly or seasonally aggregated indices).

2.3 Identification of LSMPs related to extreme temperatures

Several methods have been used to identify LSMPs that occur in association with extreme temperature events. These methods and their properties are summarized in Table 2. The text summarizes each method, its advantages and its disadvantages.

2.3.1 Composites

Composite methods define the LSMP using a target ensemble average. The values at a grid point for a field on specified ‘target’ dates are averaged together. These dates might be when a temperature event begins (onset dates) defined as when some parameter(s) first meet some threshold and duration criteria. Table 3 illustrates various threshold and duration criteria that have been used to identify short-term extremely hot events. The different definitions yield somewhat different dates and results. A definition using a physiological hazard (Robinson 2001) might not be satisfied at night in the coastal and some inland regions of the West, even though the daytime temperature threshold is well exceeded. Lower thresholds (90th percentile) generate more events thus increasing the sample size that can improve the statistical fit, though the behavior of the highest 1 % may not be well fit. Similarly, the number of stations (or the size of the area over which those stations occur) impacts which dates are identified, even for regions that would seem to be meteorologically consistent; for example, which stations and how many are included from the Central Valley of California changes which dates exceed a threshold. Also, different definitions target different purposes: Grotjahn and Faure were interested in the LSMPs at the onset of the hottest events while Meehl and Tebaldi were interested in finding the longest duration events of some importance. The number of dates averaged equals the number of ensemble members.

Compositing has several advantages. One can track LSMP formation by compositing fields with respect to the onset time of each event. Meteorologically relevant full fields (or anomalies) are obtained and composite analyses are constructed to obtain information on the synoptic and dynamical time evolution. The method is non-parametric in that it does not make any assumption about the pattern or the event statistics. Unlike some other methods, criteria can be applied (typically a minimum waiting period between events) to ensure events are independent. Significance can be assessed using a bootstrap resampling procedure where the target ensemble value at a grid point is compared to the distribution of values at that grid point found from a large number of ‘random ensembles’ (each of which uses the same number of dates, but randomly chosen). Values above (or below) a threshold of the random ensemble distribution imply significantly high (low) values at that grid point. For example, a target ensemble value equal to or higher than the 10th highest of 1000 random ensemble values at that point is significant at approximately the 99 % level. Figure 2 shows the LSMPs for California Central Valley cold air outbreaks and heat waves obtained by this method, including bootstrap resampling significance.
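
The composite and its bootstrap significance test can be sketched as below. This is a simplified illustration rather than the code of the cited studies: maps are plain lists of grid-point values, random dates are drawn from the whole record without any seasonal restriction, and the function names are hypothetical.

```python
import random


def composite(fields, dates):
    """Average the maps in `fields` (one map per day) over the given dates."""
    n_points = len(fields[0])
    return [sum(fields[d][k] for d in dates) / len(dates)
            for k in range(n_points)]


def bootstrap_significance(fields, target_dates, n_random=1000, seed=0):
    """Target composite and, per grid point, the fraction of random
    composites that fall below it."""
    rng = random.Random(seed)
    target = composite(fields, target_dates)
    all_dates = range(len(fields))
    below = [0] * len(target)
    for _ in range(n_random):
        # random ensemble with the same number of dates as the target
        sample = rng.sample(all_dates, len(target_dates))
        rand_comp = composite(fields, sample)
        for k, value in enumerate(rand_comp):
            if value < target[k]:
                below[k] += 1
    return target, [count / n_random for count in below]
```

A returned fraction of 0.99 or more at a grid point corresponds to a significantly high value at roughly the 99 % level, and a fraction of 0.01 or less to a significantly low value.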

Example large scale meteorological patterns (LSMPs) obtained as target ensemble mean composites for two types of California Central Valley extreme events. Cold air outbreaks in winter (DJF) at a 72 h prior and b at onset of the events are shown in the 500 hPa geopotential height field. Heat waves during summer (JJAS), c 36 h prior and d at the onset are shown in the 700 hPa geopotential height field. Shading indicates significance at the highest or lowest 5 % level, with the innermost shading significant at the 1.5 % level. Further discussion is in Grotjahn and Faure (2008)

Compositing has some disadvantages that can be addressed. The identification of the extreme event dates must be done separately and before the compositing. The composite produces one target ensemble average (for a specific field and level) for each set of target dates. If more than one LSMP can produce the extreme event, then that must be identified either with one or more additional criteria when choosing dates or identified by examining (perhaps qualitatively) the maps for each individual member of the target ensemble. A procedure like adding the number of positive and subtracting the number of negative anomaly values at a grid point in the target ensemble members (called the ‘sign count’ in Grotjahn 2011) can assist with identifying multiple patterns. (If the sign count equals the number of ensemble members, then all members have the same sign of the anomaly field at that location.) Lee and Grotjahn (2015) apply a cluster analysis to distinctly different parts of LSMPs prior to California heat waves and identify two ways the onset LSMPs form.
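
The sign count described above is simple to compute. A minimal sketch, with an illustrative function name and with each ensemble member stored as a list of anomaly values:

```python
def sign_count(anomaly_maps):
    """Number of positive minus number of negative anomalies at each grid point."""
    n_points = len(anomaly_maps[0])
    counts = []
    for k in range(n_points):
        positives = sum(1 for m in anomaly_maps if m[k] > 0)
        negatives = sum(1 for m in anomaly_maps if m[k] < 0)
        counts.append(positives - negatives)
    return counts
```

Grid points where the absolute sign count is well below the number of members are places where the ensemble members disagree, which may hint at more than one pattern.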

2.3.2 Regression

Regression estimates one quantity (the predictand) using a function of one or more other quantities (the predictors). The method is often parametric in assuming a specific function relates a predictor to the predictand (but nonparametric methods exist, too). An example predictor might be daily minimum surface temperature and the predictand might be 700 hPa level meridional wind. At each grid point the value of the predictand can be estimated using a polynomial function of the predictor, where the coefficients of that polynomial are calculated to minimize a squared difference between actual predictand values and polynomial values at that grid point. In general, the coefficients differ from grid point to grid point. To find the LSMP in this example of extreme cold events, the polynomial can be used to construct the predictand at each grid point using a predictor value such as two standard deviations below normal.
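
A minimal sketch of the grid-point regression follows, assuming a first-order polynomial (a straight line) for simplicity; the text allows higher orders. The function name and data layout are illustrative.

```python
def linear_regression_pattern(predictor, predictand_maps, predictor_value):
    """Least-squares line at each grid point, evaluated at `predictor_value`.

    predictor: one value per day (e.g. a standardized temperature anomaly).
    predictand_maps: one map (list of grid-point values) per day.
    """
    n = len(predictor)
    x_mean = sum(predictor) / n
    sxx = sum((x - x_mean) ** 2 for x in predictor)
    pattern = []
    for k in range(len(predictand_maps[0])):
        y = [m[k] for m in predictand_maps]
        y_mean = sum(y) / n
        sxy = sum((x - x_mean) * (yi - y_mean) for x, yi in zip(predictor, y))
        slope = sxy / sxx                    # coefficients differ per grid point
        intercept = y_mean - slope * x_mean
        pattern.append(intercept + slope * predictor_value)
    return pattern
```

With a standardized predictor, calling the function with `predictor_value=-2.0` gives the pattern associated with a predictor two standard deviations below normal.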

The pattern obtained by regression can provide physical insights by directly linking the patterns in the predictand to extremes in the predictor. For example, lower tropospheric (700 hPa) winds prior to a California CAO flow from northern Alaska and northern western Canada to reach California without crossing over the Pacific Ocean. Regression can be used to examine patterns leading up to (or after) event onset dates by offsetting in time the predictor values from the predictand values when calculating the regression coefficients. The LSMP is again the resultant predictand when the predictor is at some specified value (e.g. predictor equals two standard deviations below normal). Significance can be estimated by rejecting a null hypothesis (e.g. that the regression coefficient is zero at the 1 % level using a student’s t test).

One disadvantage of regression is the assumption of a specific polynomial to represent how the predictand varies relative to the predictor. The fit of the regression line (polynomial) to extreme values may be notably altered by the order of the polynomial assumed. Regression, like composites, only finds one pattern. Regression does not incorporate event duration criteria (such as: the event must last at least 3 days). Regression treats all dates as independent, which they are not likely to be; however, this can be somewhat mitigated by sub-sampling the data (e.g. only use every fifth day). Subsampling might be combined with low pass filtering to aggregate the mixture of onset and during-event dates. Another disadvantage is that a portion of the pattern may be highly significant but only have small correlation to the predictor.

2.3.3 Empirical orthogonal functions

Empirical orthogonal functions (EOFs) or principal components (PCs) can be used to identify LSMPs for extreme events. EOFs are the eigenfunctions of a matrix formed from the covariance between grid points on maps. EOFs from all maps in a time record will be ordered based on the eigenfunctions responsible for the largest amount of variance between time samples. Such eigenfunctions are the most common modes of variability and so not likely to be LSMPs of extreme events that are rare (except as mentioned in the introduction). However, EOFs can be formed only from maps selected in reference to an extreme event, such as maps only on the target dates of events onset. (EOFs of common low frequency modes may influence short-term extreme events focused on in this paper, as discussed in Sect. 3.1.) Weighting can be used for a variable grid spacing (such as occurs when using equal intervals of longitude across a range of latitudes).
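
The leading EOF can be sketched without a linear algebra library by using power iteration on the weighted covariance matrix. This is an illustration only: it assumes a square-root-of-cosine-latitude weighting for an equal-angle grid, finds just the first EOF, and is practical only for small grids. Passing only the maps on event onset dates gives an event-based EOF of the kind described above.

```python
import math


def leading_eof(maps, latitudes_deg, n_iter=500):
    """Leading EOF of `maps` (list of maps) by power iteration.

    Each grid point is weighted by sqrt(cos(latitude)).
    Returns (eof, fraction_of_variance); the EOF is in weighted space.
    Assumes the data have nonzero variance.
    """
    n_time, n_points = len(maps), len(maps[0])
    w = [math.sqrt(math.cos(math.radians(lat))) for lat in latitudes_deg]
    means = [sum(m[k] for m in maps) / n_time for k in range(n_points)]
    anom = [[(m[k] - means[k]) * w[k] for k in range(n_points)] for m in maps]
    cov = [[sum(a[i] * a[j] for a in anom) / (n_time - 1)
            for j in range(n_points)] for i in range(n_points)]
    vec = [1.0 + 0.1 * k for k in range(n_points)]   # arbitrary start vector
    for _ in range(n_iter):
        new = [sum(cov[i][j] * vec[j] for j in range(n_points))
               for i in range(n_points)]
        norm = math.sqrt(sum(v * v for v in new))
        vec = [v / norm for v in new]
    eigenvalue = sum(vec[i] * sum(cov[i][j] * vec[j] for j in range(n_points))
                     for i in range(n_points))
    total_variance = sum(cov[i][i] for i in range(n_points))
    return vec, eigenvalue / total_variance
```

The second returned value is the fraction of total (weighted) variance explained by the leading EOF.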

An advantage of EOFs/PCs is that the method finds multiple patterns, each of which is orthogonal to (not a subset of) the others. The method can calculate the fraction of variability that is due to each EOF/PC. This approach is most often used with filtered data to find low frequency structures. This method is suitable for finding patterns leading up to and after the event by shifting the dates chosen by the event criteria (and only using those dates). In a study of California Central Valley hot spells, Grotjahn (2011) found the leading EOF based on dates satisfying the criteria in Table 3 to be very similar to the corresponding ensemble mean composite.

A disadvantage is that the patterns found may depend on the domain chosen, though EOF ‘rotation’ may help. Also, each EOF may explain only a small fraction of the variance and no single EOF might be an LSMP thereby limiting physical insight. Different leading EOFs/PCs might have structures influenced by the required orthogonality and possibly not a pattern that occurs during an event. While the amount of variance associated with a given EOF is used to indicate the importance of the EOF, there is no inherent statistical significance test. Hence it can be unclear what portions of the EOF are significantly associated with the extreme event and what parts are not (and happen to reflect limited variation in the finite sample).

2.3.4 Clustering analysis

Clustering analysis is a widely used partitioning procedure that identifies separate groups of objects having common structural elements. Clustering analysis has been used to classify distinct sets of LSMPs associated with extreme events (Park et al. 2011; Stephanon et al. 2012). Given, say, 100 historical hot spell events over a region, not all of the events may have the same LSMP on or prior to their onset. Some events may have a wave-like height field, others may have a dipole pattern, and still others may have a third pattern. Although detailed grouping procedures vary for every clustering technique, the basic concept is to minimize the overall distance between patterns among events in resultant groups. For example, the k-means clustering technique applies an iterative algorithm in which events are moved from one group to another until there is no additional improvement in minimizing the squared Euclidean point-to-centroid distance in a group (Spath 1985; Seber 2008), where each centroid is the mean of the patterns in its cluster.
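
The k-means iteration can be sketched as follows. This is a basic textbook version (assign each event to the nearest centroid, recompute centroids, repeat until assignments stop changing), not the specific implementation of the cited studies. The random initialization from k of the input patterns is an assumption, so results can depend on the seed.

```python
import random


def kmeans(patterns, k, n_iter=100, seed=0):
    """Basic k-means on a list of patterns (each a list of numbers).

    Returns (labels, centroids).
    """
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(patterns, k)]
    labels = [None] * len(patterns)
    for _ in range(n_iter):
        # assign each pattern to the centroid with the smallest squared distance
        new_labels = [
            min(range(k), key=lambda c: sum((x - y) ** 2
                                            for x, y in zip(p, centroids[c])))
            for p in patterns
        ]
        if new_labels == labels:      # no event changed group: converged
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(patterns, labels) if lab == c]
            if members:               # keep the old centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids
```

Each returned centroid is the mean pattern of its cluster, which plays the same role as a composite computed over the events assigned to that cluster.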

Output of clustering analysis is just the average field of events in each cluster, similar to the output from composite analysis. Unlike composite analysis, in which members of clusters are pre-identified, the essential point of clustering analysis is to objectively classify events based on spatial pattern similarity. By applying cluster analysis to group similar onset patterns, one can isolate distinct dynamical origins of different extreme temperature events. Another advantage is that resultant clusters are based on physical maps without assumptions of orthogonality and symmetry such as in the mode separation by EOF/REOF. The robustness of a hot spell classification can be tested by a Monte Carlo test as follows. First one calculates a stability score that is the ratio of the number of verification period heat waves that are correctly attributed to the total number of heat waves in the cluster. Second one estimates the probability density function (PDF) of the stability score under the null hypothesis that cluster assignment is purely random. Significance of each cluster can be estimated by rejecting the null hypothesis (e.g. when the stability score lies above the 99th percentile of that PDF).

A disadvantage of clustering analysis is that one pre-specifies the number of clusters (e.g. k in k-means clustering). Determining the number k is subjective if one does not have sufficient prior knowledge of related physical patterns. There are several statistics to check the optimal number k, such as ‘distance of dissimilarity’ (Stephanon et al. 2012). Another disadvantage is the ambiguity of cluster assignment for certain events: the patterns of such events cannot be assigned clearly to one group over another. Part of a pattern may resemble Cluster #1, while another part may be more similar to Cluster #2. To avoid ascribing such marginal events to specific clusters, probabilistic clustering methods (e.g. Smyth et al. 1999) have been developed; these give the probability (e.g. as a percentage) that an event belongs to each cluster rather than assigning it to only one cluster. If one increases the number of clusters, one can expect to decrease such ambiguity in classification. However, the point of clustering analysis is to give physical insight with a minimal number of groups and not to interpret every single episode.

2.3.5 Self-organizing maps

Self-organizing maps (SOMs; Kohonen 1995) are two-dimensional arrays of maps that display characteristic behavior patterns of a field (e.g., Cavazos 2000; Hewitson and Crane 2006; Gutowski et al. 2004; Cassano et al. 2007). The SOM array is a discretization of the continuous pattern space occupied by the field examined. Thus, in contrast to clustering analysis, SOMs do not assume a clumping together of patterns, though such behavior can emerge if present in the input data. Figure 3 gives an example of a SOM array of synoptic weather patterns in sea level pressure over a region centered on Alaska. Individual maps in the array represent nodes in a projection of this continuous space onto a two-dimensional surface, with the size of the array determined by the degree of spatial discretization of the SOM space one “feels” is needed for the analysis at hand. The two dimensions show the two primary pattern transitions for the field examined. Although one could, in principle, use more than two dimensions, typical practice in climatological work has used only two.

Self-organizing map of synoptic weather patterns in a region focused on Alaska. The SOM array maps give the departure (in hPa) of sea level pressure (SLP) from the domain averaged sea level pressure. The SOM used daily December–January–February (DJF) SLP for 1997–2007 from ERA-interim reanalyses and output from a regional climate model. Locations with elevation exceeding 500 m are not included in the maps to avoid using SLP in regions strongly influenced by methods used to extrapolate SLP from surface pressure

The input maps themselves determine the degree and types of pattern transitions, hence the “self-organizing” nature of the resulting array. The SOM node array is trained on a sequence of input maps through an artificial neural net technique. The SOM array does not necessarily favor the largest scales in the input data, but rather the scales most relevant to the field for the domain and resolution examined. Consequently, SOMs can extract nonlinear pattern changes in fields, such as shifts in strong gradients. In addition, the pattern at each node is essentially a composite of input maps with similar spatial distribution for the field examined, so that patterns in the SOM array show archetypal patterns of the field examined and directly lend themselves to physical interpretation. Typically, the SOM array displays features having the highest temporal variance in the input data. From this perspective, the SOM array is roughly akin to a transformation of a rotated EOF from spectral space back to physical space.
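
A very small SOM training loop can be sketched as below. It is illustrative only: the learning-rate and neighbourhood-radius schedules, the Gaussian neighbourhood function, and the initialization from randomly chosen input maps are all assumptions, and these are examples of the training parameters a user must specify. Function names are hypothetical.

```python
import math
import random


def train_som(maps, n_rows, n_cols, n_epochs=50, seed=0):
    """Train a small SOM; returns a dict {(row, col): node pattern}."""
    rng = random.Random(seed)
    nodes = {(r, c): list(rng.choice(maps))
             for r in range(n_rows) for c in range(n_cols)}
    n_steps = n_epochs * len(maps)
    radius0 = max(n_rows, n_cols) / 2.0
    step = 0
    for _ in range(n_epochs):
        for m in rng.sample(maps, len(maps)):       # shuffled pass over the data
            frac = step / n_steps
            rate = 0.5 * (1.0 - frac)               # learning rate decays toward 0
            radius = max(radius0 * (1.0 - frac), 0.5)
            best = best_matching_node(nodes, m)
            for (r, c), node in nodes.items():
                d2 = (r - best[0]) ** 2 + (c - best[1]) ** 2
                h = math.exp(-d2 / (2.0 * radius ** 2))   # neighbourhood weight
                for k in range(len(node)):
                    node[k] += rate * h * (m[k] - node[k])
            step += 1
    return nodes


def best_matching_node(nodes, m):
    """Array position of the node closest (Euclidean) to map `m`."""
    return min(nodes, key=lambda pos: sum((a - b) ** 2
                                          for a, b in zip(nodes[pos], m)))
```

After training, calling `best_matching_node` for each extreme event date and counting the results per node gives the frequency distribution of extreme events in SOM space.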

An advantage of SOMs is that one can identify the nodes where extreme events occur frequently and thus the physical behavior yielding extremes. For example, extreme events may tend to cluster in a small portion of SOM space, thereby allowing identification of LSMPs yielding extreme events. A further advantage is that if more than one group emerges in the SOM-space frequency distribution, then the grouping provides a SOM-determined segregation of different types of extreme events. One can then focus analysis and composites of additional fields (e.g., precipitation, winds, temperature) on only events of the same type. For example, Cassano et al. (2006) used SOMs of sea-level pressure patterns over Alaska to determine which synoptic weather patterns were responsible for extreme wind and temperature events at Barrow, Alaska. They then found robust links between these large-scale synoptic weather patterns and local weather features (precipitation, winds, and temperature). One can construct estimates of the significance of differences in frequency distributions in SOM space through bootstrapping procedures to estimate the likelihood that frequency distributions are not simply the result of random, finite sampling of the pattern space. Thus one can compare frequency distributions between a present and projected climate to assess potential climate changes in LSMPs, or between observational and model climates to assess similarity of observed and simulated LSMPs yielding extremes.
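One way such a bootstrap comparison of SOM-space frequency distributions might be set up is sketched below. It is an assumption-laden illustration: the test statistic (summed absolute difference in node frequencies), the pooled resampling scheme, and the function names are hypothetical choices, and the sketch ignores serial correlation between consecutive daily maps, which a real analysis would need to address.

```python
import numpy as np

def node_frequencies(assignments, n_nodes):
    """Fraction of maps assigned to each SOM node."""
    counts = np.bincount(assignments, minlength=n_nodes)
    return counts / counts.sum()

def bootstrap_frequency_test(assign_a, assign_b, n_nodes, n_boot=2000, seed=0):
    """Compare two sets of node assignments (e.g., observed vs. simulated).

    Returns the observed statistic and the fraction of pooled resamples whose
    statistic is at least as large (an approximate p-value).
    """
    rng = np.random.default_rng(seed)
    a = np.asarray(assign_a)
    b = np.asarray(assign_b)
    observed = np.abs(node_frequencies(a, n_nodes)
                      - node_frequencies(b, n_nodes)).sum()
    # Null hypothesis: both data sets sample the same occupancy of SOM space,
    # so resample both from the pooled assignments with replacement.
    pooled = np.concatenate([a, b])
    exceed = 0
    for _ in range(n_boot):
        ra = rng.choice(pooled, size=a.size, replace=True)
        rb = rng.choice(pooled, size=b.size, replace=True)
        stat = np.abs(node_frequencies(ra, n_nodes)
                      - node_frequencies(rb, n_nodes)).sum()
        if stat >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_boot + 1)
```

A small returned p-value would suggest that the two frequency distributions differ by more than random, finite sampling of the pattern space would explain.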

A disadvantage of SOMs is that the array size is pre-determined by the user, and there is no clear, objective guideline for selecting array size. There are, however, some factors that can affect the array-size choice. The issue of significance limits the degree of discretization (number of nodes) one applies to the SOM space. Fine discretization will allow apparent detection of small differences in how different data sets occupy pattern space, but fine discretization will also render very noisy frequency distribution functions of the fields in SOM space, thus undermining detection of any significant differences. Coarse discretization limits the ability of the SOM procedure to resolve features producing the extreme events, so a further disadvantage is that an insufficient array size may obscure grouping that may be present in the data. The training method also requires specification of parameters that govern the training process. A well-trained SOM is insensitive to these choices, but care is needed to ensure such a result. In addition, like some of the other methods described here, the extreme events are defined separately from the SOM analysis.

2.3.6 Machine learning and other advanced techniques

Looking to the future, we note that substantial progress has been made in the field of machine learning for extracting patterns from Big Data. Commercial organizations such as Google and Facebook rely on sophisticated, scalable analytics techniques for mining web-scale datasets. Both supervised and unsupervised machine learning tools could play an important role in extracting spatio-temporal patterns from climate datasets. The Deep Belief Network technique (Salakhutdinov and Hinton 2012) has been applied with tremendous success to classifying objects in digital images (Krizhevsky et al. 2012) and to speech recognition (Hinton et al. 2012). In both fields, these methods have substantially outperformed existing techniques while using the same underlying learning algorithm. While these techniques have not yet been adapted for multivariate spatio-temporal datasets (such as those in climate science), research efforts are currently underway to evaluate the performance of such methods in extracting patterns as well as anomalies from such datasets. It is too early to fully discern the pros and cons of such methods.

2.4 Including large scale patterns in extreme statistics

Application of covariates in extreme value methods (termed “conditional extreme value analysis”) is relatively new to the climate science community, although it has been available to the larger statistics community for some time (Coles 2001). The basic idea of conditional extreme value analysis is to allow the extremal distribution to be dynamic; that is, shifting depending on the observed value of an index of a climate mode or LSMP (the index would be an example of a “covariate”). The book by Coles includes an example in which annual maximum sea level is related to a climate mode, the Southern Oscillation. In their study of changes in extreme daily temperatures, Brown et al. (2008) used the NAO as a covariate in addition to a trend component.
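As an illustration of the idea, one common way to write a conditional extreme value model (in the spirit of Coles 2001) lets the location parameter of the generalized extreme value (GEV) distribution depend linearly on a covariate. The linear form and the symbols below are assumptions chosen for illustration; the studies cited here may use other parameterizations, for example covariates in the scale parameter as well.

```latex
G(z \mid x) = \exp\left\{ -\left[ 1 + \xi \, \frac{z - \mu(x)}{\sigma} \right]^{-1/\xi} \right\},
\qquad 1 + \xi \, \frac{z - \mu(x)}{\sigma} > 0,
\qquad \mu(x) = \mu_0 + \mu_1 x
```

Here $z$ is the block maximum, $x$ is the covariate (for example, a seasonal index of a climate mode or LSMP), $\sigma > 0$ is the scale parameter, and $\xi$ is the shape parameter. A value of $\mu_1$ that differs significantly from zero indicates that the extremal distribution shifts with the covariate.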

Such techniques have proven useful in connecting extreme temperatures to LSMPs. Sillmann and Croci-Maspoli (2009) and Sillmann et al. (2011) used a blocking index as a covariate for extremely cold European winter temperatures and found that extreme value distributions (based on block minima) were better fitted and long-period return values were somewhat colder. Furthermore, they concluded that projected future extremely cold events in Europe were less influenced by atmospheric blocking because of projected shifts in North Atlantic blocking patterns. Photiadou et al. (2014) used a similar technique (but based on the POT approach rather than block maxima) to connect blocking and other indices to European high temperature events, finding that while the El Niño/Southern Oscillation (ENSO) does not exert much influence on extremely high temperature magnitudes or duration, the North Atlantic Oscillation (NAO) and atmospheric blocking do. However, to date, such covariate techniques relating atmospheric blocking to extreme temperatures have not been applied to North America. Many physically based covariate quantities potentially offer insight into the mechanisms behind extreme temperature events and the response to changes in the average climate. Good North American candidates for covariates include indices measuring modes of natural variability such as those describing ENSO, the Pacific Decadal Oscillation (PDO), the NAO, the North Atlantic Subtropical High (NASH), and various blocking indices.

The ETCCDI indices were designed for climate change detection and attribution purposes rather than for exploring the mechanisms causing extreme events. They are not ideal for connecting extreme temperature events to LSMPs and they are not descriptive of particularly rare events. However, ETCCDI indices are designed to be robust over the observational record and have been calculated and described for CMIP5 models by Sillmann et al. (2013a) (see Table 1). ETCCDI indices are intended to be applied globally and be meaningful in areas of sparse observations. The relatively dense network of North American observations since the beginning of the twentieth century permits the construction of more specialized LSMP extreme indices linked to specific extreme events. Grotjahn (2011) defines an index that is an unnormalized projection of key parts of a target ensemble LSMP onto a daily map of the corresponding variable. (He combined such projections onto 850 hPa temperature and 700 hPa meridional wind to form his ‘circulation index’.) His target ensemble members are from dates satisfying criteria listed in Table 3. The key parts of the fields used are those where all the extreme events in the training period were consistent, at least in having the same anomaly sign. Grotjahn (2011) found that extreme values of such an index (based on upper air data) occurred on many of the same dates as extreme values of surface stations in the California Central Valley (CCV). Statistically significant relationships exist between extreme values of this circulation index and both the rate of CCV daily maximum temperature exceeding a high threshold and the distribution of the excess over the threshold (Katz and Grotjahn 2014). Grotjahn (2013) used such an index to show that a particular climate model was notably under-predicting the occurrence frequency (by half) of CCV hot spells. 
Grotjahn (2015) used such an index to show how that same model compared with a 55-year historical record and what the model implied for CCV hot spells during the last half of the twenty-first century under two representative concentration pathways (RCPs) of greenhouse gases.
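The kind of projection index described in this paragraph can be sketched as follows. This is a simplified reading of the description in the text, not Grotjahn's implementation: it assumes the target pattern is the mean of the ensemble members' anomaly maps, keeps only grid points where every member has the same anomaly sign, and omits area weighting and the step that combines projections from two variables. The function names are hypothetical.

```python
import numpy as np

def sign_consistent_mask(member_anoms):
    """True at grid points where all target-ensemble members share a sign.

    member_anoms has shape (n_events, n_gridpoints).
    """
    all_pos = (member_anoms > 0).all(axis=0)
    all_neg = (member_anoms < 0).all(axis=0)
    return all_pos | all_neg

def projection_index(member_anoms, daily_anoms):
    """Unnormalized projection of the masked target pattern onto daily maps.

    daily_anoms has shape (n_days, n_gridpoints); returns one value per day.
    """
    target = member_anoms.mean(axis=0)          # ensemble-mean target pattern
    mask = sign_consistent_mask(member_anoms)   # the "key parts" of the field
    return daily_anoms[:, mask] @ target[mask]
```

Large positive values of the returned index mark days whose anomaly map resembles the target pattern in the sign-consistent regions.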

3 Large scale meteorological patterns related to extreme temperature events

Intraseasonal extreme temperature events (ETEs) are almost always associated with regional air mass excursions induced by circulation anomalies that are part of large-scale meteorological patterns (LSMPs). LSMPs can include synoptic features (e.g., midlatitude cyclones; Konrad 1996) that enhance the ETE, and they often have scales similar to a teleconnection pattern (though the nodes may not align and ETE onset, itself, may impact the teleconnection pattern; Cellitti et al. 2006). In some cases, the LSMP is interpreted as a juxtaposition of teleconnection patterns that leads to ETEs (Lim and Kim 2013). Heuristically, the role of LSMPs in producing ETEs could be considered the result of either (1) a direct contribution to the large-scale circulation that facilitates the air mass excursion or (2) the indirect modulation of sub-scale variability, such as regional modulation of storm track behavior by blocking patterns. Besides such dynamically driven impacts, there exist possible local impacts related to the interaction of the LSMP with local topography or coastline features, leading to possible local symmetries in the response pattern (e.g., Loikith and Broccoli 2012). Current knowledge of the remote forcing, dynamics and local forcing of LSMPs associated with ETEs is summarized next.

3.1 Remote forcing of LSMPs and ETEs

3.1.1 Connection to low frequency modes of climate variability

Numerous observational studies have ascertained that ETE behavior is modulated by recurring large scale teleconnection patterns, particularly during winter. On intraseasonal time scales there is a substantial modulation of North American ETEs during winter by the Pacific-North American (PNA) pattern, North Atlantic (or Arctic) Oscillation (NAO or AO) and blocking patterns (Walsh et al. 2001; Cellitti et al. 2006; Guirguis et al. 2011). On interannual and longer time scales additional climate modes such as El Niño-Southern Oscillation (ENSO) and the Pacific Decadal Oscillation (PDO) are also implicated (Westby et al. 2013). General relationships that have emerged from these statistical analyses are illustrated in Fig. 4: The positive (negative) phase of the NAO favors the occurrence of warm (cold) events over the eastern (southeastern) United States. The positive (negative) phase of the PNA tends to favor cold events over the southeastern (northwestern) US. These connections to climate modes are neither unique nor independent. For example, the regional influence of the PNA pattern on ETEs largely mirrors that of both the PDO and ENSO (Fig. 4) since the midlatitude atmospheric signatures of both ENSO and the PDO project on the PNA pattern. Also, the prevalence of atmospheric blocking patterns is intrinsically linked to particular climate mode phases (Renwick and Wallace 1996).

Correlation between the local seasonal impact of cold days (left column) and warm days (right column) and the seasonal mean NAO (first row), PNA (second row), PDO (third row) and Niño 3.4 indices (fourth row) during winter, 1950–2011. The black contours encompass regions having correlations statistically significant at the 95 % confidence level (figure from Westby et al. 2013)

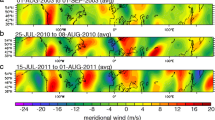

There have been pronounced episodes of climate modes influencing ETEs during recent winters. Cold extremes over Europe and the southeastern United States during recent winters (2009–2010 and 2010–2011) were primarily accounted for by the anomalous blocking associated with persistent episodes of large amplitude negative phase of the NAO (Guirguis et al. 2011). There is also evidence of an important role for stationary Rossby wave patterns in contributing to North American temperature extremes during summer (Schubert et al. 2011; Wu et al. 2012). These wave patterns appear to arise from internal forcing associated with intraseasonal transient eddies (Schubert et al. 2011).

As discussed above, two more commonly recognized remote influences upon North American ETEs are associated with ENSO and the PDO (e.g., Westby et al. 2013), both involving local sea surface temperature anomalies and atmosphere–ocean coupling. These generally operate in conjunction with PNA-like teleconnection patterns that extend from the coupling region downstream into North America. Similar to the effect of climate modes, the impact of remote forcing upon warm season ETEs is partly limited by the relative inactivity and spatial extent of climate modes, which serve as horizontal pathways for Rossby wave energy between the remote forcing region and the local surface response (Schubert et al. 2011).

3.1.2 Connection to sea ice and snow cover

The atmospheric response to the Arctic sea ice reduction is thought to be Arctic warming and destabilization of the lower troposphere, increased cloudiness, and weakening of the poleward thickness gradient and polar jet stream (Francis et al. 2009; Outten and Esau 2012). As the Arctic warms faster than lower latitudes (so-called Arctic Amplification), the meridional temperature gradient at higher latitudes is likely to weaken altering the polar jet stream according to thermal wind balance. Changes in the high latitude jet stream in turn have the potential to impact weather conditions at middle and high latitudes. For example, during winter an enhanced westerly jet over the North Atlantic can help maintain relatively mild conditions over northwest Europe via heat transport from the Atlantic. Cohen et al. (2014) review three “pathways” by which Arctic amplification may impact extreme weather events in mid-latitudes. In principle, Arctic amplification may lead to regional alterations in the structure of storm tracks, jet streams and planetary waves. In recent years, considerable attention has been focused on the role of Arctic amplification-induced changes to the jet stream (Francis and Vavrus 2012; Liu et al. 2012; Barnes 2013; Screen and Simmonds 2013). Francis and Vavrus (2012) found that a weaker zonal flow (i.e., polar jet) from weakened meridional temperature gradient slows the eastward Rossby wave progression and tends to create larger meridional excursions of height contours and associated temperature displacements resulting in a higher probability of extreme weather. In a similar vein, Liu et al. (2012) argue that the circulation change due to the decline of Arctic sea ice leads to more frequent events of atmospheric blocking that cause severe cold surges over large parts of northern continents. Francis et al. (2009), Overland and Wang (2010), Jaiser et al. (2012) and Lim et al. (2012) found that there is a delayed atmospheric response to the Arctic sea ice. 
Specifically, the Arctic sea ice extent in summer to fall influences the atmospheric circulation in the following winter over the northern mid- to high-latitudes, affecting the seasonal winter temperature and subseasonal warm/cold spells. Evidence presented in more recent studies (Barnes 2013; Screen and Simmonds 2013), however, suggests that the role of the mechanism put forth by Francis and Vavrus (2012) is uncertain at best. More generally, Cohen et al. (2014) conclude that our understanding of the mechanistic link between ongoing Arctic amplification and mid-latitude extreme weather is currently limited by shortcomings in relevant data records, physical models and dynamical understanding, itself. As such, the likely future impact of Arctic amplification upon extreme weather is highly uncertain.
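For reference, the thermal wind balance invoked above can be written for the zonal geostrophic wind in pressure coordinates as shown below. This is the standard textbook form, added here as a reminder and not taken from the studies cited.

```latex
\frac{\partial u_g}{\partial \ln p} = \frac{R}{f} \left( \frac{\partial T}{\partial y} \right)_p
```

Here $u_g$ is the zonal geostrophic wind, $R$ is the gas constant for dry air, $f$ is the Coriolis parameter, and the temperature gradient is taken at constant pressure. In the Northern Hemisphere, where temperature generally decreases poleward ($\partial T / \partial y < 0$), the westerly wind increases with height; a weaker meridional temperature gradient therefore implies weaker vertical shear and, other things being equal, a weaker jet aloft.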

Arctic amplification is also linked to long-term variability in high latitude snow cover. An analogous linkage between autumnal variability in Eurasian snow cover and wintertime ETE events over North America has been noted (Cohen and Jones 2011). In this case, autumnal snow cover anomalies induce a subsequent weakening of the stratospheric polar vortex during winter which, in turn, leads to a persistent negative phase episode of the tropospheric AO favoring North American cold events (Cohen et al. 2007). In addition, there is evidence that the changing Arctic sea ice extent may be linked to changes in the autumnal advance in Eurasian snow cover (Cohen et al. 2013). As for Arctic amplification, however, there is considerable uncertainty regarding the statistical robustness and physical nature of the Eurasian snow cover influence upon mid-latitude extreme weather (Peings et al. 2013; Cohen et al. 2014).

3.1.3 Large-scale climate “markers” for climate model assessment

Representation of fundamental climate modes

An essential minimum requirement for climate models to properly represent the modulation of ETEs by climate modes is that the models represent the primary climate modes themselves. Thus, fundamental markers for model assessment are metrics that measure the representation of key extratropical climate modes including those internally forced on intraseasonal time scales (PNA, AO/NAO and atmospheric blocking) and those externally forced on longer time scales (the extratropical response to ENSO and the atmospheric part of the PDO). Atmospheric models have had historical difficulty in representing some types of intraseasonal low frequency variability (Black and Evans 1998). A particular problem is an under-representation of atmospheric blocking activity (Scaife et al. 2010). In a similar vein, the representation of externally forced extratropical modes connected to ENSO and PDO depends on how well the coupled climate models simulate the associated oceanic phenomenological behavior.

The Coupled Model Intercomparison Project (CMIP) provides an ideal resource for assessing the ability of modern coupled climate models to represent the behavior of climate modes. A recent analysis of CMIP5 models indicates that while most models studied perform well in representing the basic aspects of the PNA pattern, a small subset of models have difficulty qualitatively replicating the NAO pattern (Lee and Black 2013; Table 4). Otherwise differences among model patterns consist of horizontal shifts or amplitude variations in the circulation anomaly pattern features. CMIP5 models generally underestimate the regional frequency of winter blocking events while summertime blocking events occurring over the high latitude oceanic basins are typically overestimated (Masato et al. 2013). Conversely, Westby et al. (2013) found serious deficiencies in the representation of the PDO by CMIP5 models with direct impacts upon the modulation of anomalous temperature regimes.

Regional flow parameters impacting remote dynamical communication

The pathway between anomalous remote forcing and the regional circulation response involves several distinct factors. For example, in the case of the extratropical response to tropical heating anomalies, tropical divergent outflow interacts with subtropical vorticity gradients to produce a Rossby wave train that extends into the extratropics (Sardeshmukh and Hoskins 1988). The forced Rossby wave train can then dynamically interact with the background extratropical mean flow (such as barotropic deformation in the jet exit region) and the midlatitude storm tracks leading to a “net” large scale circulation pattern extending from the tropics into the midlatitude region of interest (e.g., Franzke et al. 2011). The ability of coupled climate models to accurately represent such pathways is dependent upon a concomitant representation of several regional atmospheric phenomena and circulation structures including:

1. The tropical large-scale circulation response to tropical diabatic heating
2. Upper tropospheric meridional vorticity gradients in the subtropics
3. Barotropic deformation structures in the jet exit regions
4. The structure and intraseasonal variability of the Atlantic and Pacific storm tracks

The authors recommend that model validation activities concentrate upon the above features in order to uncover likely sources for existing model deficiencies in leading teleconnection patterns.

3.2 Dynamics of LSMPs

3.2.1 Diagnostic tools to study dynamics of LSMP onset/decay

The onset and decay of LSMP structures linked to ETEs generally occur on relatively short time scales. As such, the range of dynamical forcing mechanisms that can directly account for LSMP time evolution is limited to internal atmospheric processes (given the relatively long time scales associated with boundary forcing). The atmospheric processes affecting LSMP evolution can be local or remote. For example, local synoptic-scale cyclogenesis can usher in a regional Arctic air outbreak while a transient episode of the PNA teleconnection pattern can provide a remote downstream influence on North American extremes. In some cases it is an optimal juxtaposition of local and remote influences operating on multiple time scales that is required to produce an extreme event (e.g., Dole et al. 2014).

Most ETE events are associated with lateral air mass excursions, which are induced by large-scale circulation anomalies in the lower troposphere forming the LSMP (e.g., Loikith and Broccoli 2012). The anomalous circulation serves as a “dynamical trigger” for ETE events. The delineation of LSMP dynamics is treated as a two-stage process: First it is of interest to assess whether the main energy source(s) are local or remote. Once the energy source location is determined, the second stage is to assess the specific physical mechanism providing the proximate energy source in this location. An effective means for assessing the source location regions for large-scale atmospheric waves is the application of wave activity flux analyses (Plumb 1985; Takaya and Nakamura 2001). The wave activity flux is parallel to Rossby wave group velocity and traces out a three-dimensional pathway between a wave source region (of flux divergence) and a wave sink region (of flux convergence).

Possible primary physical mechanisms providing wave activity sources in this context include large-scale barotropic growth, baroclinic growth/instability and nonlinear forcing by synoptic-scale eddies (Evans and Black 2003). These mechanisms may be augmented via secondary feedbacks related to internal diabatic processes or interactions of the LSMP with the local topography or land surface. Past studies have introduced comprehensive dynamical frameworks for studying the dynamical mechanisms leading to the growth and decay of large-scale circulation anomalies (e.g., Feldstein 2002, 2003). These are based upon a local analysis of tendencies in either the geopotential (Evans and Black 2003) or streamfunction (Feldstein 2002) fields. In these studies local tendencies are decomposed into separate forcing terms that can be related to distinct physical processes. The two-stage process outlined above is a generally useful means for the dynamical diagnosis of LSMP life cycles in both observations and climate model simulations.

3.2.2 North American Arctic air mass formation

Both dynamics and thermodynamics have roles in the formation of extreme cold-air masses. Wexler (1936, 1937) postulated that the cold air was formed at the surface by radiative cooling from a snow-covered ground under clear, windless conditions, creating an intense temperature inversion restricted to a very shallow layer at the surface. Finding that the Wexler model could not adequately explain the depth of the cold layer observed in soundings, Gotaas and Benson (1965) showed that the existence of suspended ice crystals was crucial to upper-level cooling. Curry (1983) later modeled the effect with the introduction of condensate, particularly ice crystals, in the layer. In an experiment without moisture, the inversion that formed after 2 weeks of radiative cooling was less than 1000 meters deep. Only with the addition of ice crystals did the inversion rise above 900 hPa. More recently, Emanuel (2009) found that the rates and depth of cooling in his model were sensitive to the amount of water vapor and clouds present.

Turner and Gyakum (2011), in their composite study of 93 Arctic air mass formations in Northwest Canada, found that the cold air mass lifecycle is a multi-stage set of processes. During the first stage, snow falls into a layer of unsaturated air in the lee of the Rockies, causing moisture increases in the sub-cloud layer. Simultaneously, the mid-troposphere is cooled by cloud-top radiation. On the second day, snowfall abates, the air column dries and clear-sky surface radiational cooling prevails, augmented by the high emissivity of fresh snow cover. The surface temperature falls very rapidly, as quickly as 18 °C day⁻¹. On the third day, after near-surface temperatures fall below their frost point, ice crystals and, nearer the surface, ice fog form. At the end of formation, there is cold-air damming (Forbes et al. 1987; Fritsch et al. 1992), with a cold pool and anticyclone in the lee of the Rockies, lower pressure in the Gulf of Alaska and an intense baroclinic zone oriented northwest to southeast along the mountains.

Figure 5 shows an event having extremely cold surface temperatures and unusual duration (17 days, compared with Turner et al., 2013, 93-case mean of 5 days). SLP anomalies tend to be higher near the coldest air, but are not extreme during this 5-day formation period. More to the point, the rapid 1000–500 hPa cooling of ≥10 °C (≥20 dam) over northwestern Canada is noteworthy. Surface ridging that builds southeastward along the eastern slopes of the Rockies, particularly during 31 January and 1 February, facilitates cold-air damming and areal expansion of anomalously cold air southward to the US-Canada border. Turner et al. (2013) invoke diabatic cooling to explain the observed cooling during this formation period. This cooling may consist of sublimational cooling from precipitation falling into a dry layer and radiational cooling from suspended ice crystals. Turner et al. cite empirical evidence that much of the surface weather in this region and period includes snowfall, ice crystals, and ice fog.
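The parenthetical equivalence between a layer-mean cooling of 10 °C and a 1000–500 hPa thickness decrease of about 20 dam follows from the hypsometric relation, Δz = (R_d/g) ΔT ln(p_bottom/p_top). The short check below is a sketch under stated assumptions: it uses dry-air constants and treats the 10 °C as a change in layer-mean temperature; the function name is hypothetical.

```python
import math

R_D = 287.05   # gas constant for dry air, J kg^-1 K^-1
G0 = 9.80665   # standard gravitational acceleration, m s^-2

def thickness_change_dam(delta_t_kelvin, p_bottom_hpa=1000.0, p_top_hpa=500.0):
    """Thickness change (dam) for a given change in layer-mean temperature."""
    dz_m = (R_D / G0) * delta_t_kelvin * math.log(p_bottom_hpa / p_top_hpa)
    return dz_m / 10.0  # convert metres to decametres

print(round(thickness_change_dam(10.0), 1))
```

The result is close to 20 dam, consistent with the values quoted in the text.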

Sea-level pressure anomalies (solid/dashed contour interval of 4 hPa indicating positive/negative values) and 1000–500 hPa thickness anomalies (shaded, dam) at 1200 UTC during the arctic air mass formation period of 27 January 1979 through 1 February 1979. The Norman Wells, NWT rawinsonde station is indicated by the star; by 8 February the temperature there reaches −48.1 °C. The climatological reference period is 1970–2000

3.2.3 CONUS wintertime cold air outbreaks

Cold air outbreaks over North America typically consist of a two-stage process: the first stage is the formation of an Arctic air mass surface anticyclone at higher latitudes over Canada. This is followed by the rapid horizontal transport of the air mass to lower latitudes. The latter stage is enabled by the lower-tropospheric circulation anomaly embedded within LSMPs. North American cold air outbreaks are typically associated with a 500 hPa geopotential height anomaly pattern consisting of a broad region of negative anomalies over the CAO region, itself, along with a positive anomaly feature located to the northwest. This is illustrated in Fig. 6 (panels Jan Tx5 and Jan Tn5), which displays horizontally phase-shifted composites of the tropospheric circulation anomalies associated with local temperature extremes over North America (noting that the so-called “grand composite” patterns encompass a circular domain with a radius of 4500 km). This 500 hPa dipole structure is linked to a positive sea level pressure anomaly feature that extends between the upper level features along with a considerably weaker negative SLP anomaly located to the southeast. Thus, the CAO events are linked to a near-surface northerly flow embedded within a northwestward tilting anticyclonic circulation anomaly. Loikith and Broccoli observe that although these circulation anomaly patterns resemble large-scale teleconnection patterns, the inherent spatial length scale is closer to synoptic scale. This illustrates the importance of local synoptic features in the life cycles of ETE events. On the other hand, recent papers by Westby et al. (2013) and Loikith and Broccoli (2014) demonstrate an additional statistically significant modulation of ETEs by larger-scale teleconnection patterns.

Grand composites of anomalies associated with temperature regimes over North America: SLP anomalies (hPa) are shaded and Z 500 height anomalies are contoured every 20 m; red (blue) contours are positive (negative) Z 500 anomalies. Grand composites are shown for (top) January and (bottom) July extreme (from left to right) cold maximum (Tx5), warm maximum (Tx95), cold minimum (Tn5), and warm minimum (Tn95) temperatures. The Grand composite combines information from extreme temperature events occurring at multiple disparate geographical locations over North America. The anomaly fields are displayed for a circular domain with a radius of 4500 km. From Loikith and Broccoli (2012; see text for further details)

West coast events (e.g., CA Central Valley events)

Compared with cold air outbreaks affecting the eastern and central parts of the US, less has been written about outbreaks affecting the western US. Extreme cold over California is related to a large scale pattern that brings cold air from the Arctic and northern Canada without crossing the Pacific, and hence over the Rocky Mountains (Grotjahn and Faure 2008). As discussed in Grotjahn and Faure (2008), the large scale pattern is similar to that of outbreaks affecting the areas east of the Rockies, the primary difference being a small but statistically significant ridge over the southeastern US (see Fig. 2a, b). This ridge precedes the CAO by several days and also precedes the large ridge that subsequently develops over Alaska. A cold air mass between these ridges is directed further westward (a more northerly or northeasterly flow of the cold air) than for eastern CAOs. The distortion of the flow by that southeastern ridge enables the cold air mass to cross the Rockies. Loikith and Broccoli (2012) capture a portion of this pattern in geopotential heights at 500 hPa using their ‘grand composite’ technique. More local to the area of the extreme, colder temperatures are associated with an adjacent surface high (e.g., the conceptual model of Colle and Mass 1995). Favre and Gershunov (2006) pursue this link to anticyclones affecting western North America and develop indices based on the frequency and central pressure of transient cyclones and anticyclones in the eastern North Pacific. Their ‘CA’ index is the difference, cyclone minus anticyclone, in strength and frequency. Negative CA values are correlated with the coldest 10 % of winter minimum temperatures.

Eastern US events (i.e., east of Rockies)

Several statistical and synoptic studies either directly or indirectly relate to the topic of US CAOs occurring east of the Rockies (e.g., Konrad and Colucci 1989; Colle and Mass 1995; Konrad 1996; Walsh et al. 2001; Cellitti et al. 2006; Portis et al. 2006). Common precursors to eastern CAO events include anomalously high surface air pressure over western Canada (linked to a polar air mass), occurrences of the negative (positive) phase of the NAO (PNA) teleconnection pattern and/or an anomalously weak stratospheric polar vortex. CAO onset is characterized by the south/southeastward advection of the polar air mass in association with one or more of the following synoptic LSMP features: southward extension or propagation of the surface high pressure from Canada, surface cyclogenesis over the eastern US, anomalously low 500 hPa geopotential heights over the Great Lakes region and the southeastward movement of an upper level shortwave from Canada.

Distinguishing between east coast (EC) and Midwest (MW; just east of the Rockies) CAO events, EC events result from geostrophic cold advection enhanced by southeastward propagation of the surface high pressure system (with winds often amplified by surface cyclogenesis further east), leading to detachment of the polar air mass from its source (Konrad and Colucci 1989; Walsh et al. 2001). MW events are linked to the southeastward meridional extension of surface high pressure from Canada, leading to unusually lengthy cold air transport (Walsh et al. 2001). For MW events occurring just east of the Rockies, the cold advection is related to low-level northerly ageostrophic flow within a region of cold-air damming against the topography (Colle and Mass 1995). In all cases, the salient LSMP features are synoptic-scale in nature and, thus, governed by generally well-understood dynamical physical principles considered to be well-represented by weather and climate models. The CAO process itself is largely due to radiative cooling (during polar air mass formation and early stages of CAO onset) and southward air mass transport (by the horizontal wind), partly offset by adiabatic warming induced by large-scale subsidence (Konrad and Colucci 1989; Walsh et al. 2001; Portis et al. 2006). Although the proximate physics associated with the CAO process is reasonably well characterized, our knowledge of the physics of the LSMPs is implicit and depends on subjective associations (between advective circulation features and synoptic LSMPs) made in earlier studies. As such, there is a need to (a) more objectively link critical advective CAO circulation features to dynamical entities (e.g., potential vorticity) and (b) subsequently assess the physical origin of these dynamical entities.

3.2.4 Summer heat wave events

Hot spells over North America have an intense upper level ridge as one expects hydrostatically. This ridge is seen in Figs. 2 and 6. There can also be a shallow layer of relatively lower pressure, sometimes called a ‘thermal low’. Hot spells occur from a combination of factors: a hot air mass displaced from its normal location (displaced from the southwestern desert), strong subsidence (causing adiabatic warming), and solar heating. Solar heating is more effective when surface latent heat fluxes are constrained (by drought, for example). The first two factors are enhanced and help define the LSMP for a hot spell; there may be an upstream trough (e.g. Figs. 2, 6) that enhances southwesterly advection of warmer air. Other factors influence how the LSMP develops. What follows are details for different regions of North America.

West coast events Heat waves affecting the west coast are linked to an upper air LSMP that has a local ridge. For California Central Valley heat waves, that ridge is typically aligned with the west coast and the LSMP also has upstream features: a trough south of the Gulf of Alaska and a ridge further west, south of the Aleutians (Grotjahn and Faure 2008) (see Fig. 2d). The local ridge is easily understood as resulting from high thickness due to the anomalous high temperatures through the depth of the troposphere generally centered along the west coast of the US. (Plots can be found here: http://grotjahn.ucdavis.edu/EWEs/heat_wave/heat_wave.htm) Grotjahn and Faure also show that a statistically significant ridge in the northwest Pacific develops prior to the significant intensification of that west coast ridge. These features are essentially equivalent-barotropic through the depth of the troposphere. While Grotjahn and Faure found variation amongst the events, the ensemble average consists of a significant temperature anomaly that in the lower troposphere (850 and 700 hPa) is located just off the Oregon and northern California coast and elongated meridionally. The narrow zonal scale combined with a longer meridional scale is consistent with station data analyzed by Bachmann (2008). Bachmann found that extreme surface temperature dates in Sacramento were more frequently matched by corresponding extreme dates at many stations located west of the Sierra Nevada and Cascades mountain ranges than at closer stations east of the mountains. Grotjahn (2011) shows that the temperature anomaly leads to a thermal low at the coast that sets up a low level pressure gradient that opposes penetration by a cooling sea breeze. The lower and mid-tropospheric flow has significant anomalous easterlies that are also downslope over some regions, most notably the Sierra Nevada mountains. Finally, there is notable sinking that lowers the climatological subsidence inversion and hence sunlight rapidly heats up the shallow layer beneath. (These factors are seen in Fig. 7.)

Composite synoptic weather patterns at the onset of the 14 Sacramento California summer (JJAS) heat waves studied by Grotjahn and Faure (2008). a Temperature at 850 hPa with a 2 K interval. b 700 hPa level pressure velocity with 2 Pa/s interval and where positive values mean sinking motion. c Sea level pressure with 2 hPa interval, d Surface wind vectors with shading applicable to the zonal component. Areas with yellow (lighter inside dark) shading are positive (above normal) anomalies that are large enough to occur only 1.5 % of the time by chance in a same-sized composite; areas that are blue (darker inside light) shading are negative anomalies occurring only 1.5 % of the time
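The significance shading described in this caption compares the event composite against what a same-sized composite of arbitrary dates would give. A minimal sketch of one way to obtain such thresholds follows, assuming a simple random-resampling test; this is not necessarily the procedure used in the cited study. The function name `composite_significance`, the array layout, the number of resamples, and the synthetic data are all hypothetical and chosen only for illustration.

```python
import numpy as np

def composite_significance(anom, event_idx, n_boot=1000, tail=0.015, seed=0):
    """Compare an event composite with random composites of the same size.

    anom      : array (n_days, n_points) of daily anomalies (hypothetical layout)
    event_idx : indices of the event onset days
    tail      : one-sided tail probability (0.015 corresponds to 1.5 %)
    Returns the composite and boolean masks for significant high/low anomalies.
    """
    rng = np.random.default_rng(seed)
    anom = np.asarray(anom, dtype=float)
    n_days, n_points = anom.shape
    k = len(event_idx)

    # Composite (mean) anomaly over the event dates.
    target = anom[event_idx].mean(axis=0)

    # Composites of k randomly chosen dates, repeated n_boot times.
    boot = np.empty((n_boot, n_points))
    for b in range(n_boot):
        pick = rng.choice(n_days, size=k, replace=False)
        boot[b] = anom[pick].mean(axis=0)

    # Thresholds exceeded by chance only `tail` of the time at each point.
    lo = np.quantile(boot, tail, axis=0)
    hi = np.quantile(boot, 1.0 - tail, axis=0)
    return target, target > hi, target < lo

# Synthetic illustration: 500 days, 3 grid points, 14 "events" that are
# made anomalously warm at grid point 0 only (made-up numbers).
rng = np.random.default_rng(42)
anom = rng.standard_normal((500, 3))
events = np.arange(0, 500, 36)[:14]
anom[events, 0] += 3.0
target, sig_hi, sig_lo = composite_significance(anom, events)
```

Drawing dates without replacement keeps each random composite the same size as the event composite (14 dates here), which is the property the caption emphasizes. A real application would also need to respect seasonality and serial correlation when choosing the random dates.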

Gershunov et al. (2009, hereafter GCI) make the novel distinction of considering separately heat waves that have high daytime maxima and heat waves that have high nighttime minima. In so doing they uncover an LSMP with unusually high values of precipitable water (PW) over their region of interest occurring during their ‘nighttime’ heat waves (but not their ‘daytime’ events). GCI emphasize a trend of increasing occurrence of ‘nighttime’ events. Gershunov and Guirguis (2012) also find that trend in all their sub-regions of California and show another trend: increasing longitudinal extent of Central Valley heat waves. GCI show maps of anomalous geopotential heights that include the west coast ridge (height anomaly centered over Washington State) with upstream (and downstream) troughs, consistent with Grotjahn and Faure. GCI show the LSMP for SLP (high over the Great Plains and low off the west coast) and remark that both daytime and nighttime events have a corresponding general southeasterly flow of air out of the desert southwest. This flow occurs throughout the lower troposphere with associated high frequency (<7 day period) heat fluxes that are prominent just off the coast (Grotjahn 2015), and it is consistent with setting up the offshore pressure gradient (to oppose a cooling sea breeze).

For heat waves affecting the western areas of Washington and Oregon, Bumbaco et al. (2013, hereafter BDB) show an upper level (500 hPa) ridge over the west coast and trough upstream over the Gulf of Alaska. That trough and ridge pattern is generally similar to the pattern for California heat waves, including the tropospheric height anomaly centered at the coast (Grotjahn 2011). Using a regression approach Lau and Nath (2012) find a ridge and trough further upstream (over the western North Pacific) that are stronger several days prior to the event onset; both the location and timing of the ridge and trough are similar to those shown in Grotjahn and Faure. Similar to Grotjahn (2011, 2013, 2015), BDB find the lower tropospheric temperature anomaly (850 hPa) to be centered at the west coast of North America. Hence, BDB find negative values of sea level pressure anomaly centered offshore that sets up an offshore low level pressure gradient and offshore (northeasterly) flow (again similar to California heat waves). Consistent with the heat fluxes shown in Grotjahn (2015), the near-coast thermal trough appears to migrate from the southwestern deserts across the length of California to finally reach western Oregon and Washington (Brewer et al. 2012).

Midwest events Heat waves affecting the Midwest have anticyclonic flow at mid-levels, either as a closed anticyclone or as a strong ridge (Klein 1952; Karl and Quayle 1981; Namias 1982; Chang and Wallace 1987; Lyon and Dole 1995; Kunkel et al. 1996; Livezey and Tinker 1996; Palecki et al. 2001; Meehl and Tebaldi 2004; Lau and Nath 2012; Loikith and Broccoli 2012; Teng et al. 2013), with associated clear skies allowing maximum solar heating of the surface as well as adiabatic warming from subsidence.

For at least some events, the continental anticyclonic flow at mid-levels is part of a larger pattern of anomalies with remote centers over the Pacific and Atlantic (Namias 1982; Chang and Wallace 1987; Livezey and Tinker 1996; Lau and Nath 2012; Loikith and Broccoli 2012; Teng et al. 2013), with some suggestion that the anomalies are generated in the Pacific (Namias 1982; Lyon and Dole 1995; Livezey and Tinker 1996; Teng et al. 2013), with possible predictability (Teng et al. 2013). Teng et al. (2013) have noted the similarity of the circulation pattern to a Rossby wave number 5 wave train in the jet stream waveguide. The commonality of the wave train pattern structure and evolution among these studies is difficult to assess, though.

Studies of the surface flow have been less common, but negative SLP anomalies have been noted (Chang and Wallace 1987; Lau and Nath 2012; Loikith and Broccoli 2012), and Lau and Nath (2012) have shown anomalous southerly (or southwesterly) flow at the surface, consistent with strong horizontal warm advection. The regional-scale flow also interacts with local mechanisms, particularly the Urban Heat Island (UHI) effect (Kunkel et al. 1996; Palecki et al. 2001). Another common element identified in several studies is the presence of drought (Chang and Wallace 1987; Karl and Quayle 1981; Namias 1982; Lyon and Dole 1995) as well as simultaneous precipitation deficits (Lau and Nath 2012).

Eastern events While the large-scale meteorological patterns associated with heat waves in the eastern US have not yet seen much study, they have been examined for the Northeast and Central US Gulf Coast in Lau and Nath (2012) and for piedmont North Carolina by Chen and Konrad (2006). For both the Northeast and Gulf Coast, Lau and Nath found a mid-level ridge centered over the region as part of a wave train and precipitation deficits, broadly similar to the results for the Midwestern regions. For piedmont North Carolina, Chen and Konrad showed that, at upper levels, a strong ridge over or just upstream of the region was a common feature along with, at lower levels, adiabatic warming associated with descent from the Blue Ridge Mountains into the piedmont region.

4 Modeling of temperature extremes and associated circulations

Temperature extremes have some predictability at weather and climate time scales that can be exploited by models for short term predictions and long term projections. At weather time scales the predictability of North American extreme temperature events (ETEs) is largely dependent upon the nature of the LSMPs that help organize their occurrence. The greatest predictability is expected to occur during the boreal cool season when ETEs are, at least in part, influenced by low frequency modes (PNA, NAO and blocking events) (Westby et al. 2013) with intrinsic time scales of several days to weeks (Feldstein 2000). Loikith and Broccoli (2012) illustrated that the local LSMPs linked to ETEs generally exhibit synoptic spatial scales rather than planetary scales, though a wave train can be long (e.g. Grotjahn and Faure, 2008; Fig. 2). Given the essential role of synoptic-scale disturbances such as east coast cyclones and southward moving polar anticyclones over the Midwest (Konrad and Colucci 1989; Walsh et al. 2001) in ETEs, pointwise predictability of ETEs is ultimately limited by our ability to forecast the details of synoptic-scale phenomena several days in advance (Hohenegger and Schär 2007).

Hence a significant challenge for models to predict temperature extremes is their ability to predict or simulate synoptic scale phenomena, low frequency modes that provide a large-scale meteorological context, and small scale atmospheric processes and land surface processes that influence the surface heat fluxes. Both dynamical and statistical models have been developed and used to simulate and project changes in temperature extremes. This section briefly summarizes the methods and skills of the models, while analysis of observed trends and projections of future trends are summarized in Sect. 5.

4.1 Global and regional climate model skill in simulating temperature extremes

Both global and regional climate models have been used to elucidate processes contributing to ETEs and evaluate model skills. The ability of global models to reproduce the observed temperature extreme statistics has been assessed by Sillmann et al. (2013a, b), Westby et al. (2013) and Wuebbles et al. (2014) using CMIP5 multi-model ensembles. Figure 8 shows a performance portrait of the normalized root mean square errors over the North American land area from the 28 available CMIP5 “historical” models for eight temperature based ETCCDI indices over 1979–2005 compared to the ERA Interim reanalysis (Dee et al. 2011). As in Gleckler et al. (2008), to plot errors from multiple variables on the same scale, they are normalized by the median error of the CMIP5 models using the formula

\[E_{j}^{R} = \frac{E_{j} - E_{\text{median}}}{E_{\text{median}}} \qquad (1)\]

Here, \(E_{\text{median}}\) is the median root mean square error (RMSE) of the CMIP5 models, \(E_{j}\) is the RMSE of the jth model and \(E_{j}^{R}\) is that model’s “relative RMSE” and is plotted for seasonal means of the indices. In this analysis, the model median RMSE in Eq. 1 is calculated for each variable over all seasons and then applied to normalize each season in order to assess the relative seasonal performance. Blue colors represent errors lower than the median error, while red colors represent errors larger than the median error. Seasons are denoted by triangles within each square. The different models are arranged in order of increasing average relative error with the models with the lowest average relative error on the left. The average relative error is positive for all 8 indices as Fig. 8 has more deep red than deep blue colors even though the number of positive and negative relative errors is equal. Spring has significantly lower relative error (3.8 %) averaged across all models and variables, compared to winter (5.0 %), summer (5.2 %) and fall (5.8 %).
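The normalization described above can be illustrated with a short sketch. It assumes that the relative RMSE is the deviation of each model's RMSE from the median, divided by the median, as in Gleckler et al. (2008), and that "over all seasons" means the median is taken over the pooled model-by-season RMSE values for one index. The function name `relative_rmse` and the numbers are hypothetical.

```python
import numpy as np

def relative_rmse(rmse):
    """Normalize RMSE values for one index by their median.

    rmse : array (n_models, n_seasons) of RMSE values (hypothetical layout).
    The median is pooled over all models and seasons (an assumed reading of
    the text), so seasons remain comparable with one another.
    """
    rmse = np.asarray(rmse, dtype=float)
    e_median = np.median(rmse)
    return (rmse - e_median) / e_median

# Made-up RMSE values for 3 models (rows) and 2 seasons (columns).
rmse = np.array([[1.0, 2.0],
                 [2.0, 3.0],
                 [3.0, 4.0]])
rel = relative_rmse(rmse)

# Negative entries are better than the median, positive entries worse.
print(rel)
```

Because the normalization is bounded below by -1 but unbounded above, the mean of the relative errors can be positive even when half of them are negative, which matches the remark about deep red outweighing deep blue in Fig. 8.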

Performance portrait of the CMIP5 models’ ability to represent the temperature based ETCCDI indices over North American land. The colors represent normalized root mean square errors of seasonal indices compared to the ERA Interim reanalysis. Blue colors represent errors lower than the median error, while red colors represent errors larger than the median error. Seasons are denoted by triangles within each square. Models marked with “*” are not included in the RCP8.5 projections. Root mean square errors normalized by the model median RMSE for 3 other reanalyses are shown in the rightmost columns for comparison