Abstract

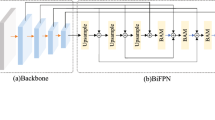

Unmanned aerial vehicles (UAVs) are versatile machines that capture aerial images with a bird’s-eye view of objects from various perspectives and heights. They are widely used in both military and civilian applications. As computer vision advances, object detection has become a mainstream task in UAV applications. However, owing to the flight altitude of the drone and the variation in shooting angle, drone images often contain small, dense, and easily confused targets, which lowers detection accuracy. In this article, we propose an enhanced feature pyramid network (E-FPN) for detecting objects in UAV scenarios. The E-FPN architecture incorporates the Simplified Spatial Pyramid Pooling-Fast (SimSPPF) structure into the backbone, enabling the extraction of features at four different scales. These features are then passed through different layers of the E-FPN neck, which lets shallow and deep features interact and yields four distinct feature representations. First, the input images are pre-processed by data augmentation, and CSPDarknet53 with SimSPPF is used as the backbone to extract multi-scale features from the images. Second, Cross-Stage Partial Stage modules are integrated into the E-FPN framework to enhance the network’s ability to capture target details. The top–down pathway of the E-FPN neck integrates features from different layers and scales of the backbone, generating three richer, multi-scale intermediate feature representations. Meanwhile, its bottom–up pathway fuses semantic information from various layers and scales into four feature maps with equal channel counts but different scales. Finally, a detector head is added to improve detection accuracy and produce the final results. Experimental results show that E-FPN-N achieves an mAP50-95 of 37.7% on the MS COCO2017 dataset. Moreover, the precision and mAP50 of our model on the VisDrone validation dataset reach 67.5% and 62.0%, respectively.
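The scale bookkeeping described in the abstract (four backbone scales, three top–down intermediates, four equal-channel outputs) can be traced with a small shape-only sketch. It is not the authors' implementation. The 640-pixel input, the strides (4, 8, 16, 32), the channel widths, the shared output width of 128, and the helper names `backbone_shapes`, `top_down`, and `bottom_up` are all illustrative assumptions, and each fusion step is reduced to its effect on tensor shape.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def backbone_shapes(size: int,
                    strides=(4, 8, 16, 32),
                    channels=(64, 128, 256, 512)) -> List[Shape]:
    """Shapes of four backbone outputs, shallow to deep (assumed strides and widths)."""
    return [(c, size // s, size // s) for c, s in zip(channels, strides)]


def top_down(feats: List[Shape], out_ch: int) -> List[Shape]:
    """Upsample the deeper map 2x and fuse it with the next shallower backbone map."""
    fused: List[Shape] = []
    current = feats[-1]  # start from the deepest backbone feature
    for lateral in reversed(feats[:-1]):
        upsampled = (current[0], current[1] * 2, current[2] * 2)
        assert upsampled[1:] == lateral[1:], "spatial sizes must match before fusion"
        # Assumption: the fusion block projects the merged features to out_ch channels.
        current = (out_ch, lateral[1], lateral[2])
        fused.append(current)
    return fused[::-1]  # reorder shallow to deep


def bottom_up(fused: List[Shape], deepest: Shape, out_ch: int) -> List[Shape]:
    """Downsample the shallower map 2x and fuse it with the next deeper map."""
    outputs: List[Shape] = [(out_ch, fused[0][1], fused[0][2])]
    for deeper in fused[1:] + [deepest]:
        prev = outputs[-1]
        down = (prev[0], prev[1] // 2, prev[2] // 2)
        assert down[1:] == deeper[1:], "spatial sizes must match before fusion"
        outputs.append((out_ch, deeper[1], deeper[2]))
    return outputs


feats = backbone_shapes(640)                     # four backbone scales
inter = top_down(feats, out_ch=128)              # three intermediate maps
outs = bottom_up(inter, feats[-1], out_ch=128)   # four output maps
print("intermediate:", inter)
print("outputs:", outs)
```

Under these assumptions the top–down pass produces one fewer map than the backbone because the deepest feature has nothing above it to merge with. The bottom–up pass restores the fourth scale by fusing the last downsampled map with that deepest backbone feature.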

Availability of data and materials

All data, models, and code generated and used in this study are available upon reasonable request from the corresponding author. The code will be uploaded to https://github.com/yangwygithub/PaperCode.git, Branch: E-FPN-UAV_Zhongxu-Li2023.

Acknowledgements

This research is supported by the National Natural Science Foundation of China under Grant No. 12101289 and the Natural Science Foundation of Fujian Province under Grant Nos. 2020J01821 and 2022J01891. It is also supported by the Institute of Meteorological Big Data-Digital Fujian and the Fujian Key Laboratory of Data Science and Statistics (Minnan Normal University), China.

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, Z., He, Q. & Yang, W. E-FPN: an enhanced feature pyramid network for UAV scenarios detection. Vis Comput (2024). https://doi.org/10.1007/s00371-024-03355-w

DOI: https://doi.org/10.1007/s00371-024-03355-w