Abstract

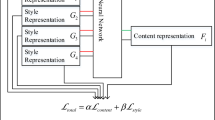

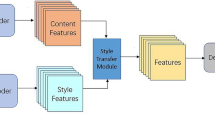

To overcome the shortcomings of relying on a single optimization method, improve the quality of style transfer, and suppress artifacts, this paper proposes a neural style transfer method that combines global and local optimization. In the local loss, a content mask and a style mask are applied to the image patch matching process to preserve style details and reduce patch mismatches. The global loss is computed from the Gram matrix, with the mask of the content feature map added to the feature map of the synthesized image. Hyperparameters control the influence of the mask data on the image, and a Laplacian operator is introduced for structural refinement to better preserve the structural integrity of the stylized image. Experimental results show that the method extends the range of applications of style transfer, works effectively on different images, and effectively controls artifacts. The data for our approach are publicly available at https://github.com/xlyusegithub/styletransfer.git.
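As a rough illustration of the global terms described above, the following NumPy sketch computes a Gram-matrix loss with a mask added to the synthesized feature map, plus a Laplacian structure loss. It is not the authors' implementation: the function names, the hyperparameter `beta`, the literal "add the mask, scaled by `beta`" reading, and the 4-neighbour Laplacian stencil are all assumptions made for this example.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a (C, H, W) feature map, normalised by H * W."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def masked_gram_loss(synth_feat, style_feat, content_mask, beta=0.5):
    """Squared Gram-matrix difference between synthesized and style features.

    Assumption: the (H, W) content mask is added to every channel of the
    synthesized feature map, scaled by the hypothetical hyperparameter beta.
    """
    weighted = synth_feat + beta * content_mask[None, :, :]
    diff = gram_matrix(weighted) - gram_matrix(style_feat)
    return float(np.sum(diff ** 2))


def laplacian(img):
    """4-neighbour discrete Laplacian of an (H, W) image, edge-replicated."""
    p = np.pad(img, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1]      # up + down neighbours
            + p[1:-1, :-2] + p[1:-1, 2:]    # left + right neighbours
            - 4.0 * img)


def laplacian_loss(synth_img, content_img):
    """Squared difference between the Laplacians of two (H, W) images."""
    diff = laplacian(synth_img) - laplacian(content_img)
    return float(np.sum(diff ** 2))
```

In a full pipeline these terms would be evaluated on CNN feature maps and combined with the local patch-matching loss using weighting hyperparameters; how the terms are weighted and which layers are used are not stated in the abstract.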

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Project Nos. 52065010 and 52165063), the Department of Science and Technology of Guizhou Province (Project Nos. [2022] G140, [2022] K024, [2023] G094, and [2023] G125), the Laboratory Open Project of Guizhou University (SYSKF2023-089), and the Bureau of Science and Technology of Guiyang (Project No. [2022] 2-3).

Author information

Contributions

LX contributed to conceptualization, methodology, software, formal analysis, writing—original draft, and visualization. QY contributed to conceptualization, methodology, supervision, writing—review and editing. YS contributed to supervision, writing—review and editing. QG contributed to supervision, writing—review and editing.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, L., Yuan, Q., Sun, Y. et al. Image neural style transfer combining global and local optimization. Vis Comput (2024). https://doi.org/10.1007/s00371-023-03244-8

DOI: https://doi.org/10.1007/s00371-023-03244-8