Abstract

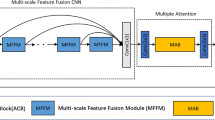

Although convolutional neural network (CNN) and transformer methods have greatly advanced image super-resolution, each has its own disadvantages. Striking a trade-off between the two and effectively integrating their advantages can restore high-frequency image information at higher quality with fewer parameters. Hence, this study proposes a novel dual parallel fusion structure of a distilled feature pyramid and a serial CNN and transformer (PFDFCT). In one branch, a lightweight serial structure of CNN and transformer is implemented to guarantee the richness of the global features extracted by the transformer. In the other branch, an efficient distillation feature pyramid hybrid attention module is designed to purify the local features extracted by the CNN and maintain their integrity through cross-fusion. This multi-path parallel fusion strategy ensures the richness and accuracy of features while avoiding complex, long-range structures. The results show that, compared with other advanced methods, PFDFCT reduces mis-generated stripes and produces clearer reconstructed images for both easy-to-reconstruct and difficult-to-reconstruct targets. PFDFCT also substantially reduces model size and computational cost. Compared with the 2022 state-of-the-art (SOTA) model, the efficient long-range attention network, PFDFCT reduces parameters by more than 20% and floating-point operations (FLOPs) by more than 26% at all three scales while maintaining similar reconstruction ability. The FLOPs of PFDFCT are as low as 31.8G, 55.3G, and 122.5G at scales of 2, 3, and 4, respectively, which are much lower than those of most current SOTA methods.
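The efficiency figures above can be sanity-checked with simple arithmetic. The sketch below is illustrative only: the helper names (`relative_reduction`, `min_baseline_for`) are hypothetical, and the "implied baseline" values are merely the lower bounds that follow from the abstract's own numbers (a reduction of more than 26% at each scale), not FLOPs reported for the comparison model.

```python
def relative_reduction(baseline: float, reduced: float) -> float:
    """Fraction by which `reduced` is smaller than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - reduced) / baseline


def min_baseline_for(reduced: float, reduction: float) -> float:
    """Baseline at which `reduced` is exactly `reduction` below it.

    Any baseline larger than this value gives a reduction greater
    than `reduction`.
    """
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return reduced / (1.0 - reduction)


# FLOPs (in G) stated in the abstract for scales x2, x3, and x4.
PFDFCT_FLOPS_G = {2: 31.8, 3: 55.3, 4: 122.5}

# "More than 26%" per the abstract; treated here as a threshold.
CLAIMED_FLOPS_REDUCTION = 0.26

# Assumption: the 26% claim holds separately at every scale, so each
# baseline must exceed the threshold computed below.
implied_baseline_g = {
    scale: min_baseline_for(flops, CLAIMED_FLOPS_REDUCTION)
    for scale, flops in PFDFCT_FLOPS_G.items()
}

if __name__ == "__main__":
    for scale, bound in implied_baseline_g.items():
        print(f"x{scale}: baseline FLOPs would need to exceed {bound:.1f}G")
```

Given a baseline figure taken from the comparison paper, `relative_reduction(baseline, PFDFCT_FLOPS_G[scale])` returns the actual fraction saved, which can then be compared against the stated threshold.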

Acknowledgements

This work was financially supported by the National Key Research and Development Program of China under Grant 2022YFB3706902 and by the Hunan Provincial Natural Science Foundation of China under Grant 2022JJ40614.

Author information

Contributions

GQW designed and performed the experiments, derived the models and analyzed the data. MSC verified the analytical methods and oversaw overall direction and planning. YCL supervised the project and provided financial support. XHT and CZZ verified the experimental results and supervised the findings of this work. Both WXY and BHG contributed to the final version of the manuscript. WDZ modified the grammar. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, G., Chen, M., Lin, Y. et al. An efficient parallel fusion structure of distilled and transformer-enhanced modules for lightweight image super-resolution. Vis Comput (2024). https://doi.org/10.1007/s00371-023-03243-9

DOI: https://doi.org/10.1007/s00371-023-03243-9