Abstract

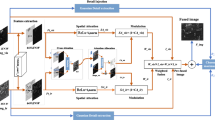

Visible-infrared image fusion can not only reveal the respective features of multiband imaging but also combine complementary information. It thus highlights salient information that cannot be obtained directly from a single waveband and enhances scene detection and perception. However, low-light conditions in special scenarios, e.g., underground coal mines, degrade the performance of visible-infrared image fusion because they lower the contrast of visible-light images and cause loss of local details. To address this, we propose an infrared and visible image fusion architecture for low-light conditions based on self- and cross-attention (SACA-Fusion). This architecture replaces traditional fusion approaches with a transformer-based fusion network, which better extracts long-range dependencies in images and improves spatial recovery of fused images. The attention mechanism is composed of two modules. The self-attention module achieves global interaction and fusion of features and reduces the loss of local details; the cross-attention module in the nest connection enhances features in low-light conditions and achieves low-contrast spatial recovery. In the experiments, an ablation study confirms that the transformer module is a more effective fusion strategy than RFN or direct connection. Comparison experiments on the TNO and LLVIP datasets then show that the proposed method achieves better fusion performance on some evaluation indicators. The improvement is most notable in actual low-light conditions.
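To make the distinction between the two attention modules concrete, the following is a minimal sketch of generic scaled dot-product attention, not the authors' implementation. It assumes identity query/key/value projections (a real transformer block would learn these), uses plain Python lists to stay dependency-free, and the names `ir_tokens`, `vis_tokens`, and the final averaging step are hypothetical placeholders chosen only for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention; each argument is a list of token vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, weight_rows = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        weight_rows.append(weights)
        # Output token is the attention-weighted sum of the value vectors.
        dim = len(values[0])
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
        )
    return outputs, weight_rows


def self_attention(tokens):
    """Queries, keys and values all come from the same feature map."""
    return attention(tokens, tokens, tokens)[0]


def cross_attention(query_tokens, context_tokens):
    """Queries come from one modality; keys and values from the other."""
    return attention(query_tokens, context_tokens, context_tokens)[0]


# Hypothetical inputs: 4 patch tokens with 3 channels per modality.
random.seed(0)
ir_tokens = [[random.gauss(0, 1) for _ in range(3)] for _ in range(4)]
vis_tokens = [[random.gauss(0, 1) for _ in range(3)] for _ in range(4)]

ir_global = self_attention(ir_tokens)                   # global interaction within infrared
vis_enhanced = cross_attention(vis_tokens, ir_tokens)   # visible tokens attend to infrared

# Placeholder combination (assumption): a plain average, not the paper's fusion rule.
fused = [
    [(a + b) / 2 for a, b in zip(row_ir, row_vis)]
    for row_ir, row_vis in zip(ir_global, vis_enhanced)
]
```

In this sketch the only difference between the two modules is where the keys and values come from: self-attention mixes information across positions of one feature map, whereas cross-attention lets one modality query the other, which is the general idea behind using it to reinforce weak visible-light features with infrared context.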

Availability of data and materials

The datasets used or analyzed during the current study are available from the corresponding author on reasonable request.

Funding

Beijing Natural Science Foundation (4202015), Chinese University Industry-University-Research Innovation Fund—Blue Dot Distributed Intelligent Computing Project (2021LDA06002).

Author information

Contributions

CY contributed to investigation, visualization, and writing (original draft). SL contributed to methodology, validation, and writing (review and editing). WF contributed to methodology and validation. TZ contributed to investigation and visualization. SL contributed to methodology and writing (review and editing).

Ethics declarations

Conflict of interest

The authors declare that they have no competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical approval

There are no ethical issues involved in our research.

About this article

Cite this article

Yu, C., Li, S., Feng, W. et al. SACA-fusion: a low-light fusion architecture of infrared and visible images based on self- and cross-attention. Vis Comput 40, 3347–3356 (2024). https://doi.org/10.1007/s00371-023-03037-z

DOI: https://doi.org/10.1007/s00371-023-03037-z