Abstract

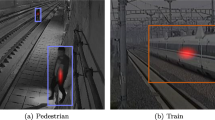

Suspended foreign objects on transmission lines shorten the discharge distance and can easily cause phase-to-ground or phase-to-phase short circuits, leading to outages. Foreign objects are small and difficult to identify, which results in low detection accuracy. An improved foreign object detection method based on Swin Transformer V2 and YOLOX (ST2Rep–YOLOX) is proposed. First, a feature extraction layer, ST2CSP, built from Swin Transformer V2 is introduced into the original backbone network to extract both global and local features. Second, hybrid spatial pyramid pooling (HSPP) is designed to enlarge the receptive field and retain more feature information. Third, the Re-param VGG block (RepVGGBlock) replaces all 3 × 3 convolutions in the network to deepen it and improve its feature extraction capability. Finally, experiments are carried out on a transmission line foreign object image dataset collected by unmanned aerial vehicles (UAVs). The experimental results show that the average accuracy of ST2Rep–YOLOX reaches 96.7%, which is 4.4% higher than that of YOLOX. For the nest, kite, and balloon classes, accuracy increased by 9.3%, 15.4%, and 9.6%, and recall increased by 6.5%, 9.4%, and 2.5%, respectively. The method achieves high detection accuracy and provides an important reference for foreign object detection on transmission lines.
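
The RepVGGBlock step relies on structural re-parameterization as described in the RepVGG paper: during training the block sums a 3 × 3 conv + BatchNorm branch, a 1 × 1 conv + BatchNorm branch and, when input and output channels match at stride 1, an identity BatchNorm branch; for inference the three branches are folded into one 3 × 3 convolution. The NumPy sketch below is a minimal illustration under stated assumptions (stride 1, equal input and output channels, BatchNorm using fixed running statistics). It is not the authors' implementation, and the helper names `conv2d`, `bn`, `fuse_conv_bn`, `reparameterize` and `multi_branch_forward` are hypothetical.

```python
import numpy as np

def conv2d(x, w, b, pad):
    """Naive stride-1 convolution. x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,)."""
    c_out, _, k, _ = w.shape
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1], x.shape[2]
    out = np.zeros((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k) window
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out + b[:, None, None]

def bn(x, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BatchNorm over the channel axis of a (C, H, W) tensor."""
    s = lambda v: v[:, None, None]
    return s(gamma) * (x - s(mean)) / np.sqrt(s(var) + eps) + s(beta)

def fuse_conv_bn(w, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the preceding bias-free conv: returns an equivalent (kernel, bias)."""
    std = np.sqrt(var + eps)
    return w * (gamma / std)[:, None, None, None], beta - gamma * mean / std

def random_bn(rng, c):
    """Random (gamma, beta, running_mean, running_var) for illustration only."""
    return (rng.uniform(0.5, 1.5, c), rng.normal(size=c),
            rng.normal(size=c), rng.uniform(0.5, 1.5, c))

def multi_branch_forward(x, p):
    """Training-time structure: 3x3 conv+BN + 1x1 conv+BN + identity BN, then ReLU."""
    zero = np.zeros(p["w3"].shape[0])
    y = (bn(conv2d(x, p["w3"], zero, pad=1), *p["bn3"])
         + bn(conv2d(x, p["w1"], zero, pad=0), *p["bn1"])
         + bn(x, *p["bn_id"]))
    return np.maximum(y, 0.0)

def reparameterize(p, c):
    """Merge the three branches into a single 3x3 kernel and bias."""
    w3, b3 = fuse_conv_bn(p["w3"], *p["bn3"])
    w1, b1 = fuse_conv_bn(p["w1"], *p["bn1"])
    w1 = np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1)))  # embed 1x1 at the 3x3 centre
    w_id = np.zeros((c, c, 3, 3))
    w_id[np.arange(c), np.arange(c), 1, 1] = 1.0  # identity written as a 3x3 kernel
    wi, bi = fuse_conv_bn(w_id, *p["bn_id"])
    return w3 + w1 + wi, b3 + b1 + bi

rng = np.random.default_rng(0)
C, H, W = 4, 6, 6
params = {
    "w3": rng.normal(size=(C, C, 3, 3)),
    "w1": rng.normal(size=(C, C, 1, 1)),
    "bn3": random_bn(rng, C),
    "bn1": random_bn(rng, C),
    "bn_id": random_bn(rng, C),
}
x = rng.normal(size=(C, H, W))

W_fused, B_fused = reparameterize(params, C)
y_train = multi_branch_forward(x, params)
y_deploy = np.maximum(conv2d(x, W_fused, B_fused, pad=1), 0.0)  # single 3x3 conv
print(np.allclose(y_train, y_deploy))  # True
```

Because the fused kernel reproduces the multi-branch output, the extra branches add training-time capacity while inference costs only one plain 3 × 3 convolution per block.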

Data availability statement

The datasets analyzed during the current study are not publicly available but are available from the corresponding author on reasonable request.

Funding

This research was funded by the National Natural Science Foundation of China, Grant Number 61772033, and the Anhui University Collaborative Innovation Project, Grant Numbers GXXT-2019-048 and GXXT-2020-54.

Author information

Contributions

CT, HD, YH, and TH contributed to methodology and conceptualization; HD and CT conceived and designed the experiments; HD, MF, and JF contributed to data curation and performed the experiments; HD analyzed the data and contributed to writing—original draft preparation; HD, CT, and YH contributed to writing—review and editing; and YH contributed to funding acquisition. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest or competing interests relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tang, C., Dong, H., Huang, Y. et al. Foreign object detection for transmission lines based on Swin Transformer V2 and YOLOX. Vis Comput 40, 3003–3021 (2024). https://doi.org/10.1007/s00371-023-03004-8

DOI: https://doi.org/10.1007/s00371-023-03004-8